keynote session

How to Scalably Test LLMs

About the Talk

Companies are excited by the prospect of generative AI, but they are equally concerned about potential LLM failure modes like hallucinations, unexpected behavior, and unsafe outputs. Testing LLMs is very different from testing traditional software: because the space of possible LLM behavior is so wide, there is an inherent element of unpredictability. In this talk, I focus on the most reliable automated methods for testing LLMs at scale.

Key Takeaways:

- Intrinsic evaluation metrics like perplexity tend to be weakly correlated with human judgments, so they shouldn't be used to evaluate LLMs post-training

- Creating test cases to measure LLM performance is as much an art as it is a science. Test sets should be diverse in distribution and cover as much of the use case scope as possible

- Open source LLM benchmarks are no longer a trustworthy way to measure progress in AI, since most LLM developers have already trained on them
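
To make the first takeaway concrete, here is a minimal sketch of how perplexity is computed. It depends only on the probabilities a model assigns to each token, so it cannot tell you directly whether an output is correct or safe. The function name and the sample values are hypothetical and are not taken from the talk; natural-log token probabilities are assumed.

```python
import math


def perplexity(token_logprobs):
    """Return exp of the negative mean log-probability per token.

    Assumes `token_logprobs` holds natural-log probabilities, one per token.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_logprob = sum(token_logprobs) / len(token_logprobs)
    return math.exp(-mean_logprob)


# Hypothetical example: a model that gives every token probability 0.25
# is, on average, as uncertain as a uniform choice among 4 options.
uniform_logprobs = [math.log(0.25)] * 4
print(round(perplexity(uniform_logprobs), 6))
```

A lower value only means the model found the text less surprising, which is why a task-specific test set is still needed to judge output quality.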

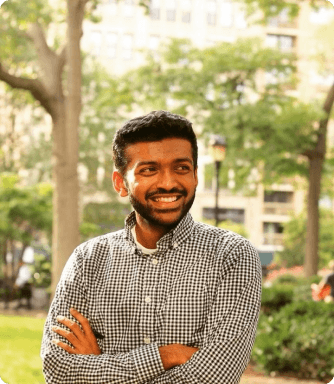

About the Speaker

Anand is co-founder and CEO of Patronus AI, the industry-first automated AI evaluation and security platform to help enterprises catch LLM mistakes at scale. Patronus AI is backed by Lightspeed Venture Partners, Gokul Rajaram, Replit CEO Amjad Masad, and Fortune 500 executives. Previously, Anand led ML explainability and advanced experimentation efforts at Meta Reality Labs, and exited a quant hedge fund backed by Mark Cuban.

About Testµ Conference

Testµ Conference is a virtual, online-only conference to define the future of testing. Join 30,000+ software testers, developers, quality assurance experts, industry experts, and thought leaders for 3 days of learning, testing, and networking at Testµ Conference 2024 by LambdaTest.