- August 05 2024

Top 40+ Quality Assurance (QA) Interview Questions

Learn QA interview questions on fundamentals, intermediate challenges, and practical scenarios to boost your interview preparation and success.

OVERVIEW

Quality Assurance (QA) is an essential aspect of the software development life cycle, playing a critical role in ensuring that applications meet high quality and performance standards before reaching end-users. Implementing effective QA practices helps identify defects, verify functionality, and ensure that software is reliable and user-friendly. It's important to be well-versed in understanding the fundamentals of QA.

Having a solid foundation in QA not only improves software quality but is also crucial for excelling in QA interviews. To succeed in QA interviews, you need a strong grasp of both the fundamentals and the practical aspects of QA. Preparing with a comprehensive set of QA interview questions can help you deepen your understanding and enhance your interview readiness.

QA Interview Questions for Freshers

Here are some essential QA interview questions for freshers. These questions cover the basic concepts of QA to help you crack the interview.

1. What Is Quality Assurance?

Quality Assurance (QA) focuses on ensuring that proper processes are followed, providing confidence that the desired levels of quality will be achieved. It ensures that everything reaching end users meets requirements and functions correctly. It involves establishing standards, guidelines, and procedures to prevent quality issues and maintain product integrity throughout development. It aims to get things right the first time, fixing any mistakes as soon as they appear during the Software Development Life Cycle (SDLC).

2. What Is the Difference Between Quality Assurance and Quality Control?

Quality Assurance (QA) is defined as the organized and systematic procedures needed to provide confidence that a product, service, or result will satisfy given quality requirements and be fit for use. QA is process-related, focusing on enforcing standards and techniques to improve the development process and prevent faults. It concentrates on good software engineering practices and defect prevention, and is closely aligned with the development process. Examples include reviews and audits.

Quality Control (QC) is the system used to maintain an intended level of quality in any product or service, focusing on the precise approach to handling factors that impact quality. QC is product-related, focusing on building and thoroughly testing a software system to detect and correct defects. It concentrates on specific products and defect detection and correction, usually performed after software development. Examples include software testing at various levels.

3. What Is Black Box Testing?

In black-box testing, you test the software’s front-end and back-end without needing to see or interact with the code. The goal is to ensure the software meets specified requirements and behaves as expected based on its functionality. Common types of black-box testing include acceptance, system, functional, and regression testing.

4. What Is White Box Testing and Its Techniques?

White box testing involves examining a program's internal structure. It is primarily used in unit testing but can also be applied to integration tests. It requires extensive knowledge of the technology used to develop the program and verifies the correct implementation of internal units, structures, and relationships.

Techniques for white box testing are:

- Static Testing: Involves testing activities without executing the source code, including inspections, walkthroughs, and reviews. It provides good results when started early in the software development process.

- Dynamic Testing: Involves executing the source code and observing its performance with specific inputs. It includes validation activities and techniques such as:

  - Data Flow/Control Testing

  - Performance Testing

  - Usability Testing

  - Compatibility Testing

  - Recovery Testing

  - Security Testing

5. What Is Grey Box Testing?

Grey box testing combines white box and black box testing. In this type of testing, testers have partial knowledge of the internal workings of the system, application, or software being tested. This knowledge can include access to the design documents, architecture diagrams, or limited knowledge of the code.

6. Describe Boundary Value Analysis

Boundary value analysis is a black box technique that focuses on designing test cases with input values close to boundary values. Test cases are created with values at or near the boundaries of input ranges, such as minimum, just above minimum, maximum, just below maximum, and nominal values. This technique helps detect faults more likely to occur at these boundaries.
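
As a minimal sketch of this technique, assume a hypothetical age field that accepts integers from 18 to 60. The range, the `boundary_values` helper, and the `is_valid_age` function are illustrative assumptions, not part of any real system:

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return min, just above min, nominal, just below max, and max."""
    nominal = (minimum + maximum) // 2
    return [minimum, minimum + 1, nominal, maximum - 1, maximum]


def is_valid_age(age: int) -> bool:
    # Hypothetical system under test: accepts ages 18 to 60 inclusive.
    return 18 <= age <= 60


# Five test inputs derived from the boundaries of the valid range.
bva_inputs = boundary_values(18, 60)
bva_results = {age: is_valid_age(age) for age in bva_inputs}
```

Here the inputs are 18, 19, 39, 59, and 60. Many teams also add the values just outside the range (17 and 61 in this sketch) to check that invalid input is rejected.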

7. What Is Equivalence Partitioning?

Equivalence partitioning is a black box testing technique. If many test cases are created for any program, then executing all such test cases is neither feasible nor desirable.

You will have to select a few test cases to achieve a reasonable level of coverage. Many test cases do not test anything new and execute the same lines of source code repeatedly. We may divide the input domain into various categories with some relationship and expect every test case from a category to exhibit the same behavior.

If categories are well selected, you may assume that if one representative test case works correctly, others may also give the same results. This assumption allows us to select exactly one test case from each category, and if there are four categories, four test cases may be selected. Each category is called an equivalence class, and this type of testing is known as equivalence partitioning or equivalence class testing.
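
The idea can be sketched as follows, assuming a hypothetical rule that accepts ages from 18 to 60. The three classes and their representative values are illustrative choices, and one value is picked from each class:

```python
def is_valid_age(age: int) -> bool:
    # Hypothetical rule under test: ages 18 to 60 inclusive are accepted.
    return 18 <= age <= 60


# Each equivalence class: (description, one representative value, expected result).
equivalence_classes = [
    ("below valid range", 10, False),
    ("within valid range", 35, True),
    ("above valid range", 75, False),
]

# Run one test per class and collect the classes whose result is unexpected.
ep_failures = [
    name
    for name, value, expected in equivalence_classes
    if is_valid_age(value) != expected
]
```

Three test cases cover the whole input domain here, on the assumption that any other value in a class would behave like its representative.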

8. Explain Decision Table Testing

Decision tables are used in many engineering disciplines to represent complex logical relationships. An output may depend on many input conditions, and decision tables give a pictorial view of the various combinations of input conditions.

There are four portions of the decision table:

- Condition Stubs: All conditions that determine specific actions or sets of actions are listed on the upper left of the decision table.

- Condition Entries: Located in the upper right, this section includes several columns where each column represents a rule. The values entered here are the inputs for those rules.

- Action Stubs: This section at the lower left of the decision table lists all possible actions.

- Action Entries: Found in the lower right, this section shows the actions associated with each entry. These are the outputs and depend on the functionality of the program.
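
The four portions above can be sketched in code. This example assumes a hypothetical login feature with two conditions and two actions; all names are illustrative:

```python
# Condition stubs (upper left) and action stubs (lower left).
CONDITIONS = ("valid_username", "valid_password")
ACTIONS = ("show_home_page", "show_error")

# Each key is one rule (a column of condition entries);
# each value is the action entry for that rule.
DECISION_TABLE = {
    (True, True): "show_home_page",
    (True, False): "show_error",
    (False, True): "show_error",
    (False, False): "show_error",
}


def login(valid_username: bool, valid_password: bool) -> str:
    # Hypothetical system under test.
    return "show_home_page" if valid_username and valid_password else "show_error"


# One test case per rule: compare the actual action with the table's action entry.
mismatches = [
    entries
    for entries, expected_action in DECISION_TABLE.items()
    if login(*entries) != expected_action
]
```

With two boolean conditions the table has four rules, so four test cases cover every combination.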

9. What Is State Transition Testing?

State transition testing is a black box technique that uses state-based models to define and test software behavior. It examines how a software's behavior changes in response to different sequences of input conditions, focusing on the transitions between states based on various events. This approach helps achieve thorough test coverage by analyzing how the software responds to different scenarios.
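
A minimal sketch of a state-based model, assuming a hypothetical login flow that locks the account after two wrong passwords. The states, events, and `run_events` helper are illustrative; in practice the event sequences would be driven against the real application rather than the model itself:

```python
# Hypothetical state model: (current state, event) -> next state.
TRANSITIONS = {
    ("logged_out", "correct_password"): "logged_in",
    ("logged_out", "wrong_password"): "one_failure",
    ("one_failure", "correct_password"): "logged_in",
    ("one_failure", "wrong_password"): "locked",
    ("logged_in", "logout"): "logged_out",
}


def run_events(start: str, events: list[str]) -> str:
    """Apply events in order; an undefined transition leaves the state unchanged."""
    state = start
    for event in events:
        state = TRANSITIONS.get((state, event), state)
    return state


# Each test case is a sequence of events plus the state expected at the end.
test_sequences = [
    (["correct_password"], "logged_in"),
    (["wrong_password", "correct_password"], "logged_in"),
    (["wrong_password", "wrong_password"], "locked"),
    (["wrong_password", "wrong_password", "correct_password"], "locked"),
    (["correct_password", "logout"], "logged_out"),
]

state_failures = [
    (events, expected)
    for events, expected in test_sequences
    if run_events("logged_out", events) != expected
]
```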

10. What Is Exploratory Testing?

Exploratory testing is where testers explore the product with specific objectives and plans, often based on past experiences and knowledge. It can be performed during any testing phase and involves using methods like guesses, architecture diagrams, use cases, past defects, and checklists to identify issues.

11. How Do You Estimate Test Coverage?

Test coverage is a metric that measures how much of the software's code or functionality has been tested. To estimate test coverage, start by identifying the software's testable units, such as components, functionalities, and user stories. Then, determine the types of coverage needed, such as code, statement, branch, path, functional, or test case coverage.

Develop and execute test cases to cover these units, then measure the coverage using tools or manual methods. Calculate the coverage percentage by dividing the number of executed units by the total testable units and multiplying by 100. Analyze the results to identify gaps and write additional test cases if necessary. This process helps ensure comprehensive testing of the software.
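
The calculation described above can be written as a small helper. The function name and the sample numbers are illustrative assumptions:

```python
def coverage_percentage(executed_units: int, total_units: int) -> float:
    """Coverage % = executed units / total testable units * 100."""
    if total_units <= 0:
        raise ValueError("total_units must be positive")
    return executed_units / total_units * 100


# Illustrative numbers only: 45 of 60 testable units were exercised.
example_coverage = coverage_percentage(45, 60)
```

In this sketch the result is 75 percent, which leaves 15 units as the gap to analyze.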

12. What Is Risk-Based Testing?

Risk-based testing is a testing approach where you prioritize and focus on the areas of the software that pose the highest risk of failure. It involves analyzing potential bugs based on their impact and likelihood and allocating testing resources to address the most critical and high-risk issues. This approach helps manage quality risks and ensure that testing is aligned with the most significant areas of concern.
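
One common way to turn impact and likelihood into a test order is to multiply them into a risk score. The sketch below assumes hypothetical features rated on a 1 to 5 scale; the feature names, ratings, and scoring rule are illustrative:

```python
# Hypothetical features scored 1 (low) to 5 (high) for likelihood and impact.
features = [
    {"name": "payment", "likelihood": 4, "impact": 5},
    {"name": "profile page", "likelihood": 2, "impact": 2},
    {"name": "login", "likelihood": 3, "impact": 5},
    {"name": "help text", "likelihood": 1, "impact": 1},
]


def risk_score(feature: dict) -> int:
    # A common convention (an assumption here): risk = likelihood x impact.
    return feature["likelihood"] * feature["impact"]


# Test the highest-risk features first.
test_order = [f["name"] for f in sorted(features, key=risk_score, reverse=True)]
```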

13. What Is Automation Testing and When Should It Not Be Used?

Automation testing is the process of converting manual test cases into test scripts using automation tools or programming languages to speed up test execution and save human effort. It delivers key benefits such as increased coverage to detect errors, repeatability to save time, and leverage to improve resource productivity.

However, automation testing should not be used in the following scenarios:

- Short-term Projects: If the project is short-term or has a limited lifespan, the effort and cost of developing automated tests may not be justified.

- Frequent UI Changes: Applications with frequently changing UIs can make maintaining automated tests difficult and costly, outweighing the benefits.

- Early Development Stages: Writing automated tests during the early stages of development, when the application is subject to frequent changes, can lead to excessive maintenance.

- Complex Logic with Unpredictable Outputs: Automated tests may not accurately assess applications with highly complex logic requiring subjective evaluation.

- Tight Budget: If the project budget is tight, manual testing might be more viable due to the initial investment required for automation tools, infrastructure, and skilled personnel.

- Non-repeatable Actions: Test cases involving non-repeatable actions, such as interactions with unique hardware states, make automation impractical.

14. What Is Data-Driven Testing?

Data-driven testing is a methodology where the same test scripts run using multiple data sets. The test scripts utilize a set of inputs stored in external sources such as databases, spreadsheets, or flat files (e.g., CSV, JSON) rather than having the values hard-coded directly into the scripts.

For example, in a web testing scenario, data-driven testing can test search functionality by automatically running the test script with various search queries stored in an external file instead of manually entering different queries each time.

15. What Are the Main Concepts of Data-Driven Testing?

Some of the main concepts of data-driven testing are mentioned below.

- Separation of Data and Logic: Test data is stored in external files such as Excel (.xlsx or .xls), CSV files, XML files, JSON files, databases, and config files. Test scripts are written to read the test data from these external sources and use it during test execution.

- Parameterization: Test cases are parameterized to accept different input values. This allows the same test case to run multiple times with different data sets.

- Reusability: Test scripts are reused across different data sets, reducing the need to write multiple test scripts for similar test scenarios.
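
These concepts can be sketched with the search example mentioned earlier. The CSV text stands in for an external data file, and the `search` function and catalog are hypothetical:

```python
import csv
import io

# Stand-in for an external CSV file; in practice this would be read from disk.
TEST_DATA_CSV = """query,expected_hits
laptop,2
phone,1
tablet,0
"""

CATALOG = ["gaming laptop", "laptop sleeve", "phone case"]


def search(query: str) -> list[str]:
    # Hypothetical function under test: case-insensitive substring search.
    return [item for item in CATALOG if query.lower() in item.lower()]


def run_data_driven_tests(csv_text: str) -> list[str]:
    """Run the same check once per data row and return the failing queries."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if len(search(row["query"])) != int(row["expected_hits"]):
            failures.append(row["query"])
    return failures


data_driven_failures = run_data_driven_tests(TEST_DATA_CSV)
```

The test logic lives in one function, while adding a new scenario only requires adding a row to the data.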

16. What Is Performance Testing and Why Is It Important?

Performance testing ensures that applications run quickly and efficiently, meeting business requirements and handling future needs. It evaluates a system's speed, responsiveness, and stability under a particular workload. This type of testing is crucial because slow performance can result in lost business opportunities and dissatisfied customers.

For example, if an educational website takes too long to display exam results, users may turn to other sources, resulting in a loss for the organization. Performance testing helps identify and rectify such issues, ensuring the system can handle high loads and provide a good user experience.

17. Explain the Difference Between Load Testing and Stress Testing

- Load Testing: Load testing assesses system behavior at the specified, expected load level.

- Stress Testing: Stress testing assesses system robustness under extreme, beyond-normal conditions.

| Load Testing | Stress Testing |
|---|---|
| Helps determine the reliability of the application. | Helps determine the stability of the application. |
| Measures the server's response time under the expected load. | Verifies the system's behavior when the load exceeds the expected limit. |
| It is the first step of performance testing. | It is performed after load testing. |
| Checks the behavior of the application at the specified load level. | Checks how the application responds to load spikes, testing it beyond normal limits. |
| A large number of user inputs is provided. | Many users and a high volume of data input are provided. |
| Verifies issues such as load balancing, bandwidth, system capacity, throughput, and poor response time. | Verifies issues such as security loopholes, memory leakage, and data corruption in overload situations. |
| Tools: LoadRunner, K6, JMeter, WebLoad, Gatling, Locust, etc. | Tools: LoadRunner, K6, JMeter, WebLoad, Gatling, Locust, NeoLoad, etc. |
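
To make the distinction concrete, here is a rough sketch using only the Python standard library rather than any of the tools listed. The `fake_endpoint` function is a hypothetical stand-in for a real request, and the user counts are illustrative:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_endpoint() -> None:
    # Hypothetical stand-in for a real request; sleeps to imitate server work.
    time.sleep(0.01)


def timed_call(_: int) -> float:
    """Call the endpoint once and return the elapsed time in seconds."""
    start = time.perf_counter()
    fake_endpoint()
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> list[float]:
    """Issue requests from a pool of worker threads and return each latency."""
    total_requests = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(timed_call, range(total_requests)))


# Load test: run at the expected load. A stress test would raise these
# numbers beyond the expected limit and watch for errors or degradation.
latencies = run_load(concurrent_users=5, requests_per_user=4)
average_latency = sum(latencies) / len(latencies)
```

Dedicated tools add what this sketch lacks, such as ramp-up profiles, distributed load generation, and reporting.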

18. What Is Security Testing, and Why Is It Necessary?

Security testing identifies vulnerabilities and ensures security functionality by verifying and validating software system requirements related to confidentiality, integrity, availability, authentication, authorization, and non-repudiation. It aims to maintain data confidentiality, check against information leakage, and ensure the intended functionality. Security testing helps expose vulnerabilities such as cross-site scripting, SQL injection, buffer overflow, file inclusion, URL injection, and cookie modification.

19. What Are the Key Differences Between Mobile Testing and Desktop Testing?

Desktop Testing: Focuses on evaluating a program's functionality, performance, and compatibility with various hardware configurations, operating systems, and screen resolutions.

Mobile Testing: Includes native mobile app testing, mobile device testing, and mobile web app testing, ensuring quality in features such as flexibility, usability, interoperability, connectivity, security, privacy, functions, behaviors, performance, and quality of service.

| Aspect | Desktop Testing | Mobile Testing |
|---|---|---|
| Screen size | With a larger screen size, the user can see most of the content on a single page. | With a smaller screen size, the user has to scroll up and down to see the content. |
| Font visibility | Fonts are easily visible due to the large screen size. | Fonts are harder to read due to the small screen size. |
| Operating systems | Windows, macOS, Linux | Android, iOS |
| Connectivity | Generally stable and high-speed internet connections, including Ethernet and Wi-Fi. | Varies widely, including 3G, 4G, 5G, Wi-Fi, and offline. |
| Tools | Tools like QTP/UFT and TestComplete. | Tools like Appium, Espresso, and XCUITest. |
| Security focus | Focus on data security, network security, and system vulnerabilities. | Focus on data security, app permissions, and secure storage. |
| OS updates | Less frequent updates and longer support cycles for OS versions. | Frequent updates need to be tested on multiple OS versions. |

20. What Is API Testing, and Why Is It Important?

API testing verifies that an API (Application Programming Interface) functions as expected. It involves sending requests to the API, receiving responses, and checking if they match the expected outcomes. API testing ensures the API correctly processes inputs, returns accurate data, and handles errors appropriately.

Importance of API testing:

- Functionality: Ensures the API performs its intended functions correctly.

- Reliability: Confirms that the API produces accurate results based on inputs.

- Performance: Evaluates responsiveness, throughput, and scalability to meet performance criteria.

- Security: Identifies vulnerabilities and ensures data is handled securely.

- Interoperability: Checks if the API integrates well with other software across platforms and protocols.

- Error Handling: Ensures proper responses to unexpected inputs and errors.
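
The request, response, and check cycle can be sketched without a network. The `handle_request` function below is a hypothetical in-process stand-in for a real HTTP API, and the paths and payloads are illustrative:

```python
import json


def handle_request(method: str, path: str) -> tuple[int, str]:
    """Hypothetical API under test, standing in for a real HTTP call."""
    users = {"1": {"id": 1, "name": "Ada"}}
    if method != "GET":
        return 405, json.dumps({"error": "method not allowed"})
    prefix = "/users/"
    if path.startswith(prefix) and path[len(prefix):] in users:
        return 200, json.dumps(users[path[len(prefix):]])
    return 404, json.dumps({"error": "not found"})


# Functionality: a valid request returns the expected status and data.
status, body = handle_request("GET", "/users/1")
ok_case = status == 200 and json.loads(body) == {"id": 1, "name": "Ada"}

# Error handling: unknown resources and unsupported methods are rejected cleanly.
missing_status, _ = handle_request("GET", "/users/999")
method_status, _ = handle_request("DELETE", "/users/1")
```

Against a real service, the same checks would typically be made on the HTTP status code, headers, and parsed response body.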

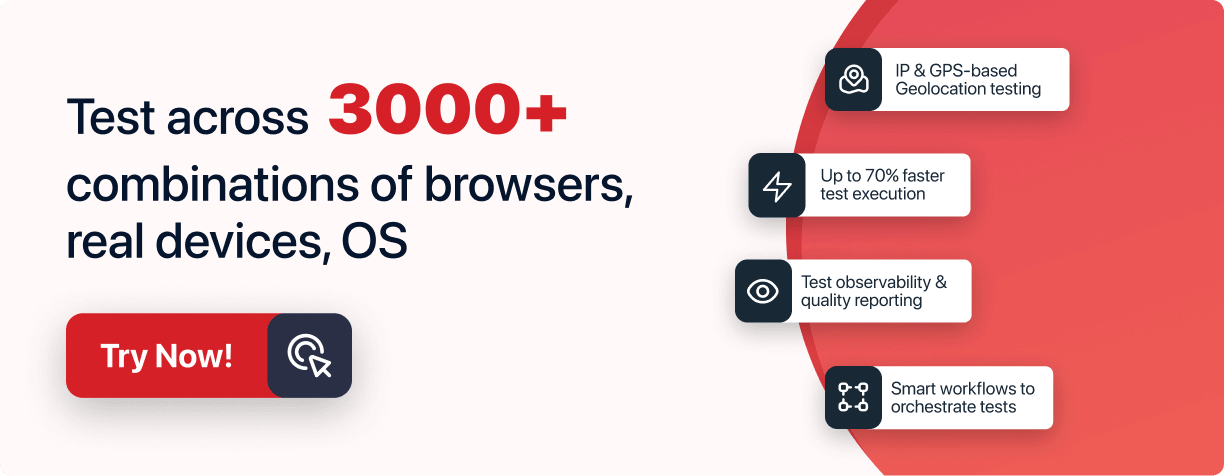

Note : Deliver products and services that meet and exceed your application's quality standards. Try LambdaTest Now!

The above QA interview questions benefit beginners preparing for a QA interview. These questions cover common topics that often come up when starting in QA. Further, you will learn intermediate-level QA interview questions.

QA Interview Questions for Intermediates

If you have some experience with QA automation testing, these intermediate-level interview questions will help you deepen your understanding and explore more advanced features and methodologies in quality assurance.

21. Can You Describe the Software Development Lifecycle?

The Software Development Lifecycle (SDLC) is the process of creating a new software system efficiently and with high quality. SDLC models include Waterfall, Iterative, Spiral, V-model, Big Bang, Agile, RAD, and Prototype. The typical phases are:

- Planning: Analyze alignment, resources, scheduling, and costs; consult teams; and create a scope of work document outlining tasks, assignments, and deliverables.

- Requirement Analysis: Gather and document project requirements from business users through meetings and workshops involving business analysts, project managers, technical architects, and lead developers, and develop an SRS document with functional, non-functional, and technical requirements.

- Design: Convert the SRS document into wireframes and prototypes, with architects designing the system architecture, database, data flow, services, and modules, and lead developers handling detailed module design while deciding on application environments like DEV, QA, stage, and prod.

- Coding: Follow the SRS specification to build a clean, scalable, and efficient system, develop a manual for project regulations and best practices, conduct peer reviews, and deploy code to the development environment before moving to QA.

- Testing: Perform various tests (functional, integration, performance, load, penetration) to ensure the system meets requirements, with the QA team documenting defects that developers fix before redeployment and obtaining sign-off for deployment.

- Deployment: Launch the application for users, managed by the DevOps or change requirement team, raise a Request for Change (RFC) detailing deployment steps, including backup and rollback plans, deploy the application, and address any reported issues promptly.

- Maintenance: Handle user-reported issues, new feature requests, and changes in functionality, share feedback with the project management team, prioritize items in the backlog, and include application, server, OS, and framework updates.

22. What Are the Key Challenges in Software Testing?

When performing software testing, testers encounter various challenges that can affect the quality and effectiveness of their testing efforts. Some key challenges include:

- Unstable Test Environment: Ensuring a stable test environment is crucial. Instability, often due to server overload issues requiring frequent restarts, wastes significant time troubleshooting.

- Tight Deadlines: QA is often asked to test at the last minute due to extended development processes, leaving insufficient time for thorough testing. QA must focus on the most critical business requirements when time is limited.

- Wrong Testing Estimation: Precise estimates are challenging, leading to unreliable outcomes and wasted time fixing issues instead of focusing on testing.

- Last-Minute Changes to Requirements: Changes during agile projects, even mid-sprint, can disrupt testers and affect the quality of work, requiring retesting after production deployment.

- Testing the Wrong Things: Shifting priorities and a lack of domain knowledge can lead to incorrect testing, requiring continuous adaptation of the testing focus.

- Lack of Communication: Ineffective communication between the development team, testing team, and stakeholders leads to misunderstandings, delays in issue resolution, and lower testing quality.

- Lack of Skilled Testers: Inexperienced testers can introduce chaos and result in insufficient, unplanned, and incomplete testing throughout the Software Testing Life Cycle (STLC).

23. What Is a Test Plan, and What Does It Include?

A test plan is a document that specifies the systematic approach for planning software testing activities. It is essential to complete system testing and ensure the delivery of quality products on schedule. It provides the framework, scope, resources, schedule, and budget for system testing and includes a requirement traceability matrix to ensure test coverage.

Components of a Test Plan:

- Introduction: Describes the structure and objectives of the test plan, including the test project name, revision history, terminology, names of approvers, date of approval, references, and a summary of the plan.

- Feature Description: Summarizes the system features that will be tested, presenting a high-level description of the system's functionalities.

- Assumptions: Outlines areas where test cases will not be designed for various reasons.

- Test Approach: Covers the overall test approach, including lessons learned from past projects, outstanding issues, test automation strategy, reusable test cases, tools and formats, and test-level categories.

- Test Suite Structure: Identifies test suites and objectives, providing information on the number of new test cases needed and estimating the time required for creation and execution.

- Test Environment: Plans and designs the test environment to ensure effective execution of system-level test cases, including environments like UAT, production, regression, stage, and development.

- Test Execution Strategy: Defines the plan for executing the tests, including organizing and performing testing activities to achieve objectives effectively and efficiently.

- Test Effort Estimation: Estimates the testing effort required, including cost and time to complete tests, and factors such as the number of test cases, creation effort, execution and analysis effort, environment setup, and training needs.

- Scheduling and Milestones: Outlines the schedule and milestones for the test project, including test plan creation, test case preparation, execution schedule, milestones definition, entry and exit criteria, regression testing milestones, defect management milestones, and progress tracking.

24. How Do You Prioritize Testing Tasks?

Prioritizing testing tasks is crucial for effective and efficient software testing. Here’s a structured approach:

- Understand Your Goals and Scope: Define testing objectives to focus on usability, security, efficiency, and functionality. Specify the scope by identifying features, functionalities, platforms, environments, and user scenarios to ensure comprehensive and relevant testing.

- Identify and Classify Your Risks: Conduct a risk assessment to identify significant risks and problems. Use a risk classification method (e.g., risk matrix) to group risks by likelihood and impact, focusing resources on high-risk areas.

- Use a Prioritization Framework or Method: Choose a framework like MoSCoW (Must, Should, Could, Won't), Kano Model, Pareto Principle (80/20 Rule), or Eisenhower Matrix to categorize and rank test cases based on importance and urgency.

- Plan and Schedule Your Testing Activities: Identify dependencies and prerequisites for tasks, allocate time based on priority, and use tools for automation, data management, and scheduling.

- Communication and Collaboration with Your Team and Stakeholders: Ensure alignment with other teams, engage stakeholders for input, and report testing results, issues, and progress regularly.

By following these steps, you can effectively prioritize your testing tasks, ensuring a focused and efficient testing process that aligns with project goals and stakeholder expectations.

25. Explain the Importance of a Bug Tracking System

A bug-tracking system is essential for logging and monitoring bugs or errors during software testing. It helps manage the evaluation, monitoring, and prioritization of defects in large software systems. Since bugs are often caused by faults from system architects, designers, or developers and may need to be tracked over time, a bug tracking system enables testers to effectively manage these issues.

It improves efficiency by allowing testers to prioritize, monitor, and report the status of each error. A robust system provides a unified workflow for defect monitoring, reporting, and lifecycle traceability. It integrates with other management systems for shared visibility and feedback within the development team and organization.

26. What Methodologies Have You Used in Past Projects (Agile, Waterfall, etc.)?

I have used several methodologies across my projects, each chosen to fit the project's objectives and requirements:

Current Project: Agile with weekly story introductions and deployments is used in the insurance domain, requiring continuous delivery.

- Scrum: Used for projects needing iterative development and continuous feedback. Regular sprints, sprint planning meetings, daily stand-ups, and sprint reviews were involved in adapting to changes and delivering incremental improvements.

- Kanban: Applied to projects requiring continuous delivery without Scrum's rigid structure. Focused on visualizing work, limiting work in progress, and optimizing task flow.

Past Project: In the capital markets domain, Waterfall was used with six sprints per year, including various tests such as smoke testing, sanity testing, regression testing, and user acceptance testing (UAT).

- Waterfall: Used for projects with clearly defined requirements and an end goal. It followed a structured approach with phases including requirement gathering, design, implementation, testing, and maintenance, ensuring complete documentation and review before moving to the next phase.

Understanding the difference between Agile and Waterfall methodologies can be crucial for selecting the right approach for your project. To learn more about these differences and their impacts, follow this blog on Agile vs Waterfall for an insightful comparison.

27. Describe Any Test Management Tool You Have Used

HP ALM (HP Application Lifecycle Management) is a comprehensive, commercially licensed, web-based test management tool designed to manage the entire application lifecycle. It offers a centralized repository for all testing activities, enabling teams to maintain transparency about the product's status throughout different phases of development. HP ALM supports Agile and Waterfall methodologies, making it versatile for various project management approaches.

28. How Do You Manage Test Data?

To manage test data, ensure it is accurate, reliable, and consistent. Use a centralized repository for storage, apply data masking for sensitive information, automate data generation where possible, and regularly update and maintain the data.
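
As one illustration of data masking, the sketch below replaces names and the local part of email addresses before records are used as test data. The records, the `mask_email` helper, and the hashing approach are assumptions for illustration, not a prescribed method:

```python
import hashlib


def mask_email(email: str) -> str:
    """Replace the local part with a short, stable hash so rows stay distinguishable."""
    local, _, domain = email.partition("@")
    digest = hashlib.sha256(local.encode("utf-8")).hexdigest()[:8]
    return f"user_{digest}@{domain}"


# Hypothetical production-like records; names and addresses are made up.
records = [
    {"name": "Alice Example", "email": "alice@example.com"},
    {"name": "Bob Example", "email": "bob@example.com"},
]

# Masked copies: generic names and hashed email local parts.
masked_records = [
    {"name": f"Test User {i}", "email": mask_email(r["email"])}
    for i, r in enumerate(records, start=1)
]
```

Because the hash is deterministic, the same source address always maps to the same masked address, which keeps relationships between tables consistent.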

29. Explain the Test Closure Process

The test closure process involves finalizing the testing phase and creating a test closure report. This report summarizes:

- Testing Activities: A detailed account of what was tested.

- Exit Criteria: Confirmation that the product has met the defined exit criteria.

- Bug Status: Overview of open defects and their statuses.

- Testing Analysis: Insights into the types of testing performed and their outcomes.

The report, prepared by the QA engineer and reviewed by stakeholders, ensures that all testing is complete and provides a final review of the software's quality before release.

30. Are You Familiar With Selenium WebDriver? If So, Describe It

Selenium WebDriver enables the creation of robust, browser-based regression automation suites and tests. It provides a programming interface for creating and executing test scripts that interact with web elements in browsers.

Some of the key features include:

- Browser Automation: Supports running tests across different web browsers.

- Cross-Browser Testing: Ensures compatibility by running the same tests on different browsers and operating systems.

- Support for Multiple Languages: Provides language bindings for Java, C#, Python, Ruby, and JavaScript, among others.

- Handling Dynamic Content: Manages dynamic web content using explicit and implicit waits.

- Screen Capture: Takes screenshots of web pages, which is useful for debugging and verifying visual aspects of the web application.

31. What Frameworks Are Used for Automation Testing?

Some of the most commonly used automation testing frameworks include Selenium, Playwright, Cypress, Puppeteer, and more. The choice of automation testing frameworks depends on the project’s needs, as each framework offers distinct features and services.

32. How Do You Select Test Cases for Automation?

To select test cases for automation, consider the following criteria:

- Frequency of Execution: Automate test cases that are frequently executed, such as those in smoke, sanity, and regression testing.

- Core Functionality: Focus on automating test cases that validate the essential functionalities of the application to ensure critical features perform consistently.

- Complexity and Time-Consumption: Choose test cases that are complex or time-consuming to execute manually, especially those involving multiple data sets.

- Repetitiveness and Error-Prone Nature: Automate repetitive test cases prone to human error.

- Stability: Select test cases for automation in stable features less likely to change frequently.

- Handling Multiple Data Sets: Prioritize test cases that require testing with various data sets for efficiency.

- Integration into Regression Suites: Include test cases in the regression suite to verify that new changes do not affect existing functionality.

33. What Are the Main Benefits of Automated Testing?

Automated testing brings many benefits that significantly enhance the efficiency and overall quality of the software.

Below are the key benefits of leveraging automated testing:

- Accelerated Execution: Faster test execution, especially for repetitive tasks.

- Seamless Integration: Integration with CI/CD pipelines for rapid feedback.

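- Reduced Human Error: Executes the same steps identically on every run, avoiding the slips common in manual testing.

- Dependable Results: Repeatable runs produce consistent results, making it easier to spot regressions introduced by new changes.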

- Reduced Manual Effort: Saves time and effort by automating repetitive tasks.

- Long-Term Cost Savings: Upfront costs are offset by savings over time.

- Extensive Testing Coverage: Covers more scenarios and edge cases.

- Parallel Execution: Runs tests simultaneously across multiple environments.

- Reusability of Test Scripts: Test scripts can be reused across test cases and projects.

- Modular Test Design: Organized and independent test units.

- Prompt Feedback: Immediate results on code changes.

- Regular Regression Testing: Frequent checks ensure the existing functionality remains intact.

- Unattended Execution: Can run tests autonomously, including off-hours.

- Global Execution: Testing across different time zones and environments.

- Shift Left Approach: Early testing in the development process to catch defects sooner.

34. What Tools Are Used for Performance Testing?

Many performance testing tools help to validate the performance of the software application. Some of the popular tools are mentioned below.

- JMeter: Apache JMeter is a popular open-source tool designed to measure performance and load test both static and dynamic websites. It supports various applications and protocols such as HTTP, HTTPS, FTP, SOAP/REST, LDAP, TCP, SMTP(S), POP3(S), and IMAP(S).

- k6: k6 is a modern, open-source load testing tool for developers and testers. Tests are scripted in JavaScript, which keeps the scripting environment highly accessible.

- LoadRunner: A comprehensive performance testing tool originally from Micro Focus (now part of OpenText). It supports many protocols and provides detailed performance analysis.

- Gatling: Gatling is an open-source load testing tool based on Scala, Akka, and Netty. It's known for its high performance and ability to simulate large user loads.

- Locust: Locust is an open-source load-testing tool that allows you to define user behavior with Python code. It is highly scalable and can be distributed over multiple machines.

- BlazeMeter: BlazeMeter provides performance testing as a service compatible with JMeter scripts. It supports large-scale load testing and integrates with CI/CD pipelines.

- NeoLoad: NeoLoad is a performance testing tool for continuous testing and integration. It supports testing web, mobile, and API applications.

35. How Do You Monitor Performance Bottlenecks?

A point that restricts overall performance is called a bottleneck. For example, processing user requests may take longer than expected due to a slow database query, which would be identified as a bottleneck.

Some methods to monitor performance and overcome bottlenecks include:

- Define KPIs: Identify key performance metrics such as response time, throughput, error rate, CPU and memory usage, disk I/O, and network latency.

- Use Performance Testing Tools: Tools like JMeter and k6 help simulate load and measure performance metrics.

- Monitor System Resources: Use tools like top or Task Manager for CPU and memory; iostat for disk I/O; and Wireshark for network monitoring.

- Analyze Logs: Check application and server logs for errors, slow queries, and unusual patterns.

- Use APM Tools: Tools like New Relic, Dynatrace, or AppDynamics offer detailed insights into application performance.

- Conduct Code Profiling: Identify inefficient code with profilers like VisualVM or YourKit.

- Monitor Database Performance: Use SQL Profiler and database monitoring tools to track query performance and resource use.

- Perform Stress and Load Testing: Test the application under high load to find breaking points.

- Review and Optimize Configuration: Adjust server and application settings for better performance.

- Continuous Monitoring: Integrate performance testing into CI/CD pipelines and set up alerts for early issue detection.
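As an illustration of the KPIs listed above, the following sketch computes average response time, 95th-percentile response time, throughput, and error rate from raw samples. The sample data and the `summarize` and `percentile` helpers are made up for this example; load testing tools such as JMeter or k6 report these figures for you.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples, duration_seconds):
    """samples: list of (response_time_ms, succeeded) tuples from one test run."""
    times = [t for t, _ in samples]
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "avg_ms": sum(times) / len(times),
        "p95_ms": percentile(times, 95),
        "throughput_rps": len(samples) / duration_seconds,
        "error_rate": failures / len(samples),
    }


# Hypothetical run: ten requests in five seconds, one of them slow and failing.
samples = [(120, True), (130, True), (110, True), (900, False), (125, True),
           (140, True), (115, True), (135, True), (128, True), (122, True)]
report = summarize(samples, duration_seconds=5)
print(report)
```

In this made-up run, a single slow request pulls the average well above the typical response time, which is why percentiles are usually tracked alongside averages when hunting for bottlenecks.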

The intermediate-level QA interview questions listed above are designed to help beginners and those with some experience prepare for interviews.

Further, you will learn more challenging QA interview questions that are particularly relevant for experienced professionals.

QA Interview Questions for Experienced Professionals

These QA interview questions are designed for individuals with significant experience in QA testing. These questions assess advanced knowledge and practical expertise in QA testing tools, methodologies, and best practices.

36. What Is a Baseline Test?

A baseline test involves collecting performance metrics to establish a reference point for evaluating future changes to a system or application. It sets a "normal" operational state by measuring key metrics under consistent conditions. This baseline allows for comparison with subsequent test results to identify deviations, improvements, or issues in performance.
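A baseline comparison can be sketched in a few lines. The metric names, values, and the 10% tolerance below are assumptions for illustration, and the helper assumes that every metric is "lower is better" and has a non-zero baseline value.

```python
def compare_to_baseline(baseline, current, tolerance=0.10):
    """Return metrics that grew by more than `tolerance` (a fraction) over baseline.

    Assumes every metric is 'lower is better' and its baseline value is non-zero.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        change = (current[metric] - base_value) / base_value
        if change > tolerance:
            regressions[metric] = round(change, 3)
    return regressions


# Hypothetical measurements taken under the same conditions before and after a change.
baseline = {"avg_response_ms": 200.0, "p95_response_ms": 400.0, "cpu_percent": 50.0}
current = {"avg_response_ms": 210.0, "p95_response_ms": 520.0, "cpu_percent": 54.0}

regressions = compare_to_baseline(baseline, current)
print(regressions)
```

Here only the 95th-percentile response time exceeds the tolerance, so it is the one deviation flagged for investigation.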

37. What Are Common Security Threats, and How Do You Test for Them?

Security testing should cover a range of potential attacks so that vulnerabilities can be identified and addressed. Common issues and challenges related to web application security include:

- SQL Injection: Inserting malicious SQL code into database queries.

- Content Injection: Injecting unauthorized content into a web application.

- File Injection: Uploading and executing malicious files.

- XML Injection: Injecting malicious XML code to alter application behavior.

- LDAP Injection: Manipulating LDAP queries to gain unauthorized access.

- XPATH Injection: Exploiting XPATH queries to access or modify data.

- Cookie Manipulation: Tampering with cookies to bypass security controls.

- Cookie Sniffing: Intercepting cookies to steal session information.

- Cross-Site Request Forgery (CSRF): Exploiting users' trust to perform unauthorized actions.

- Cross-Site Scripting (XSS): Injecting malicious scripts into web pages.

- Session Hijacking: Taking over a user's session to gain unauthorized access.

- Authentication Issues: Weak or flawed authentication mechanisms.

- Information Disclosure: Exposing sensitive information to unauthorized users.

- Clickjacking: Tricking users into clicking on something different from what they perceive.

Addressing these vulnerabilities helps ensure a web application is secure and resilient to potential attacks. Taking SQL injection as an example, you can test for it in the following ways:

- Manual Testing: Input SQL commands into fields to see if they trigger unexpected database responses or errors.

- Automated Testing: Use tools like SQLMap or Burp Suite to scan for SQL injection vulnerabilities.

- Review: Ensure that parameterized queries or ORM frameworks are used to handle SQL queries securely.
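The difference between a vulnerable query and a parameterized one can be shown with Python's built-in `sqlite3` module. This is a minimal sketch: the `users` table, the credentials, and the two login functions are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")

payload = "' OR '1'='1"  # classic injection string entered as the password


def login_unsafe(name, password):
    # Vulnerable: user input is concatenated straight into the SQL text.
    query = ("SELECT COUNT(*) FROM users "
             f"WHERE name = '{name}' AND password = '{password}'")
    return conn.execute(query).fetchone()[0] > 0


def login_safe(name, password):
    # Parameterized: the input is bound as data and never parsed as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


print(login_unsafe("alice", payload))   # injection bypasses the password check
print(login_safe("alice", payload))     # payload is treated as a wrong password
print(login_safe("alice", "s3cret"))    # genuine credentials still work
```

A manual or automated test would submit payloads like this one and treat a successful login as a confirmed vulnerability.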

38. What Tools Do You Use for Security Testing?

Here are some commonly used security testing tools:

- Burp Suite

- OWASP ZAP

- Nessus

- Nmap

- Metasploit

- SQLMap

- Hydra

- Wireshark

- Netsparker

- Acunetix

- Arachni

- Nikto

- QualysGuard

- OpenVAS

- XSSer

- BeEF

- W3af

- SonarQube

39. What Challenges Have You Faced in Mobile Testing?

Here are some common challenges faced in mobile testing:

- Operating System Fragmentation: Ensuring compatibility across various OS versions and handling new OS updates that may introduce compatibility issues.

- Screen Resolutions and Orientations: Adapting to different screen sizes, resolutions, and orientations while ensuring proper layout and functionality.

- Performance and Resource Constraints: Managing limited resources like CPU, memory, and battery and addressing performance issues such as memory leaks and excessive battery consumption.

- Fast Release Cycles: Keeping up with rapid release cycles while maintaining high-quality standards.

- Testing a Mobile App on Staging: Preventing defects from reaching production by thoroughly testing in a staging environment.

- Mobile Network Bandwidth Issues: Handling varying network speeds and conditions and ensuring app functionality under different network scenarios.

40. What Tools Do You Use for Mobile Application Testing?

For mobile application testing, the following tools are commonly used:

- Appium: An open-source tool that supports cross-platform testing for Android and iOS apps. It uses the WebDriver protocol, making it versatile and allowing for integration with various programming languages.

- XCUITest: A testing framework provided by Apple for iOS applications. It is integrated with Xcode and supports UI and unit testing.

- Espresso: A Google-developed framework for Android applications that provides robust testing capabilities for UI interactions.

- Detox: A JavaScript-based end-to-end testing framework for React Native applications. It is useful for ensuring the quality of mobile apps built with React Native.

- Calabash: An open-source tool that supports Android and iOS applications, offering Cucumber-based automated testing focusing on Behavior-Driven Development (BDD).

These mobile automation testing tools help streamline the testing process, provide insights into app performance, and ensure a seamless user experience across various mobile devices and operating systems.

41. How Do You Test for Different Mobile Platforms (iOS, Android)?

Testing across different mobile platforms like iOS and Android involves several strategies to ensure that your app performs well and is consistent across devices.

Here’s a streamlined approach:

- Unit Testing: Write unit tests for individual components of your app to ensure they work as expected. Use platform-specific frameworks like XCTest for iOS and JUnit for Android.

- UI Testing: Perform UI testing to check how your app’s interface behaves on different devices. Use tools like XCTest and XCUITest for iOS and Espresso for Android.

- Cross-Platform Testing Tools: Utilize cross-platform testing tools such as Appium or Detox, which can automate tests for both iOS and Android from a single codebase.

- Manual Testing: Test the app manually on various devices and OS versions to identify issues that automated tests might miss. Use real devices or simulators/emulators.

- Performance Testing: Assess your app’s performance on different devices to ensure it meets performance standards. Tools like Instruments for iOS and Android Profiler for Android can be useful.

- Compatibility Testing: Verify your app’s compatibility with different screen sizes, resolutions, and hardware configurations.

- Beta Testing: Distribute your app to a group of beta testers on both platforms to gather feedback and identify issues in real-world usage.

- Automated Build and Test Pipelines: Set up CI/CD pipelines to automate builds and tests for both iOS and Android. This helps catch issues early and ensures consistent quality.

Combining these strategies allows you to comprehensively test your app across different mobile platforms and ensure a smooth user experience.
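For compatibility testing in particular, it helps to write down the combinations you intend to cover. The sketch below builds such a test matrix; the OS versions, screen classes, and orientations are placeholder values, and real targets would normally come from your own usage analytics.

```python
from itertools import product

# Hypothetical coverage targets, not a recommendation.
os_versions = {"Android": ["13", "14"], "iOS": ["16", "17"]}
screen_classes = ["phone", "tablet"]
orientations = ["portrait", "landscape"]


def build_matrix():
    """Return one dict per platform/OS version/screen/orientation combination."""
    matrix = []
    for platform, versions in os_versions.items():
        for version, screen, orientation in product(
                versions, screen_classes, orientations):
            matrix.append({
                "platform": platform,
                "os_version": version,
                "screen": screen,
                "orientation": orientation,
            })
    return matrix


matrix = build_matrix()
print(len(matrix), "configurations to cover")
```

Even this small set of options multiplies quickly, which is why teams usually prioritize the most-used configurations rather than testing every combination manually.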

Ensuring that a mobile application works seamlessly across various screen sizes, resolutions, and OS versions can be challenging because it requires maintaining an extensive mobile device lab.

To overcome this challenge, you can use a cloud-based platform like LambdaTest. It is an AI-Native test execution platform that allows you to perform both manual and automated mobile app tests at scale across a wide range of real devices and OS combinations. This approach eliminates the need for a physical device lab and enables efficient, comprehensive testing.

42. Describe an Approach to Testing REST APIs

REST is a resource-based architecture where resources are accessed using standardized HTTP methods. This approach makes web services "RESTful." A REST API consists of a collection of interconnected resources, known as its resource model. When designed thoughtfully, REST APIs effectively engage client developers, promoting the use of web services. The REST architectural style is widely used in designing modern web service APIs.

A Web API that adheres to this style is called a REST API. A structured approach to testing one includes the following steps:

- Understanding API Documentation: Critical for designing effective tests based on the API’s structure and behavior.

- Test Planning: This is important for creating relevant test scenarios, prioritizing cases, and setting clear objectives.

- Test Environment Setup: Ensures testing is done in a reliable and controlled environment, minimizing external issues.

- Test Execution: Run the following types of tests:

  - Functional Testing: Verifies that the API meets its functional requirements.

  - Integration Testing: Ensures that the API works well with other systems.

  - Security Testing: Identifies vulnerabilities and ensures data protection.

  - Performance Testing: Assesses how the API performs under different conditions.

  - Load Testing: Evaluates how the API handles various levels of load.

- Test Data Management: Ensures that the data used in testing is accurate and consistent.

- Test Reporting: Documenting test results is crucial for identifying issues and improving the API.

This structured approach ensures thorough and effective testing of REST APIs.

43. What Are API Testing Tools?

Some of the most used API testing tools are:

- Postman: Used for both manual and automated API testing. It allows the organization of requests, management of environments, and execution of automated tests with JavaScript. It also supports performance testing of APIs.

- Rest Assured: A Java-based library used for testing REST APIs. It provides a domain-specific language for writing clear API tests and integrates well with Java test frameworks like TestNG and JUnit. In a Selenium with Java framework, it can also be used to check that the data shown in the frontend matches the data returned by the API.

Beyond these, various tools cover different stages of the API lifecycle, from development to testing and monitoring. Here are some other widely used tools:

- Swagger (OpenAPI): Utilized for designing, building, and documenting APIs. It features a user-friendly interface, allowing users to interact directly with APIs from the documentation.

- SoapUI: Designed specifically for testing SOAP and REST APIs. Provides advanced testing features, including security and load testing.

- Apache JMeter: Specializes in performance testing, providing detailed performance metrics.

- cURL: Facilitates quick command-line testing for APIs, ideal for simple and direct API requests and responses.

These tools serve various testing needs, ensuring comprehensive API testing coverage for developers and QA engineers.

44. How Do You Validate Responses From API Calls?

To validate responses from API calls, follow these steps:

- Verify the HTTP Status Code: Confirm that the status code denotes success (for example, 200 OK for a successful GET request).

- Examine the Response Body: The response body usually contains the data you requested. Validate its structure and content against the expected schema.

- Verify Headers: Ensure that the headers contain the necessary information, such as content type (application/json).

- Check for Errors: Look for any error messages or codes within the response body that might indicate an issue.

- Validate Data Consistency: Compare the response data with known values to ensure accuracy and consistency.

- Test Different Scenarios: Perform tests for various scenarios, including edge cases and error conditions, to ensure robust API behaviors.
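The first few checks above can be combined into a small helper. This is a sketch under stated assumptions: `validate_response` is a hypothetical function, it expects a 200 status and a JSON object body, and the "schema" is just a mapping of required field names to Python types rather than a full JSON Schema.

```python
import json


def validate_response(status, headers, body, required_fields):
    """Return a list of problems found in an API response; empty means it passed."""
    problems = []
    if status != 200:
        problems.append(f"unexpected status {status}")
    content_type = headers.get("Content-Type", "")
    if not content_type.startswith("application/json"):
        problems.append(f"unexpected content type {content_type!r}")
        return problems
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        problems.append("body is not valid JSON")
        return problems
    if not isinstance(data, dict):
        problems.append("body is not a JSON object")
        return problems
    for field, expected_type in required_fields.items():
        if field not in data:
            problems.append(f"missing field {field!r}")
        elif not isinstance(data[field], expected_type):
            problems.append(f"field {field!r} has wrong type")
    return problems


schema = {"id": int, "email": str}  # assumed shape of a user resource
good = validate_response(
    200, {"Content-Type": "application/json; charset=utf-8"},
    '{"id": 7, "email": "a@example.com"}', schema)
bad = validate_response(
    200, {"Content-Type": "application/json"}, '{"id": "7"}', schema)
print(good)
print(bad)
```

In a real suite, the status, headers, and body would come from your HTTP client, and the returned list would feed an assertion in the test framework of your choice.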

45. What Are the Various API Methods?

Here is a high-level overview of the HTTP methods commonly used by APIs:

- GET Method: Retrieves information or data from a specified resource.

- POST Method: Submits data to be processed to a specified resource.

- PUT Method: Replaces a specified resource with new data, updating it in full.

- DELETE Method: Deletes a specified resource.

- PATCH Method: Partially updates a specified resource.

- OPTIONS Method: Retrieves the supported HTTP methods of a server endpoint.

- HEAD Method: Retrieves only the headers of a response without the response body.

- CONNECT Method: Establishes a tunnel to the server identified by the target resource, typically used for SSL/TLS tunneling through a proxy.

- TRACE Method: Echoes the request received by the server back to the client for debugging purposes.
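To make the difference between the most common methods concrete, here is a toy in-memory model. `ResourceStore` is a hypothetical class written for this example, not part of any framework, and the status codes follow common REST conventions rather than the behavior of any specific API.

```python
class ResourceStore:
    """Hypothetical in-memory stand-in for a REST resource collection."""

    def __init__(self):
        self.items = {}
        self.next_id = 1

    def handle(self, method, item_id=None, payload=None):
        """Return (status_code, body) for a request, mimicking common conventions."""
        if method == "POST":
            item_id = self.next_id
            self.next_id += 1
            self.items[item_id] = dict(payload)
            return 201, {"id": item_id, **self.items[item_id]}
        if item_id not in self.items:
            return 404, None
        if method == "GET":
            return 200, dict(self.items[item_id])
        if method == "PUT":
            self.items[item_id] = dict(payload)   # full replacement
            return 200, dict(self.items[item_id])
        if method == "PATCH":
            self.items[item_id].update(payload)   # partial update
            return 200, dict(self.items[item_id])
        if method == "DELETE":
            del self.items[item_id]
            return 204, None
        return 405, None


store = ResourceStore()
created = store.handle("POST", payload={"name": "Ada", "role": "qa"})
patched = store.handle("PATCH", 1, {"role": "lead"})
replaced = store.handle("PUT", 1, {"name": "Ada L."})
deleted = store.handle("DELETE", 1)
missing = store.handle("GET", 1)
print(created, patched, replaced, deleted, missing, sep="\n")
```

Note how PATCH keeps the fields it was not given, while PUT replaces the whole resource and drops the `role` field. Checking exactly this kind of difference is a typical API test.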

46. What Is Regression Testing, and Why Is It Necessary?

Regression testing involves re-running previously completed tests to ensure that recent changes, such as bug fixes or new features, have not adversely affected existing functionality.

It is necessary as it helps verify if the new changes function correctly and ensures that new code does not disrupt existing functionality.

As software frequently evolves with updates and enhancements, regression testing is crucial for maintaining stability and ensuring new releases do not introduce new issues.

47. How Often Should Regression Testing Be Performed?

Regression testing should be performed whenever there are significant changes to the software, such as bug fixes, new features, or updates. The frequency of regression testing varies based on the development methodology and project complexity:

- In Waterfall Models: Regression testing is conducted at defined stages within the development cycle, often in multiple cycles, such as after major bug fixes and before final release.

- In Agile Methodologies: Regression testing is performed with each new deployment or change, typically within each sprint, which usually lasts one to four weeks.

The exact frequency depends on the project's complexity and the organization's strategy, with testing adjusted based on the nature and scope of changes.

48. What Tools Do You Recommend for Regression Testing?

Selecting the best tool depends on your team's experience, the project's complexity, your budget, and your specific testing requirements. Many automation solutions are available on the market. Among the most popular are Micro Focus UFT, Playwright, RPA tools, Selenium, Appium, Cypress, Karate, and Katalon.

- Selenium: It is a versatile tool for web automation and scraping, supporting multiple browsers and platforms. It is suitable for extensive browser-based regression testing.

- Appium: Best for mobile app testing across both Android and iOS platforms.

- Cypress: Known for its speed and reliability in web application testing. It offers strong integration with frameworks like React and Angular and is well-regarded for its ease of use.

- Playwright: Provides robust web testing features, including cross-browser and cross-platform testing, automated UI testing, and parallel testing capabilities.

- RPA Tools: UiPath, Blue Prism, and WorkFusion are useful for automating repetitive test executions, which is beneficial for regression testing.

- Karate: An open-source tool for API and web service testing that integrates API test automation, mocks, and performance testing into a single framework.

- Micro Focus UFT: Ideal for comprehensive testing across desktop and web applications. It supports integration with HP ALM for test management but requires knowledge of VBScript.

49. What Is Localization Testing?

Localization testing ensures that an application is adapted to meet the linguistic, cultural, and regional requirements of a specific target audience. It involves:

- Translating Text: Ensuring all text appears correctly in the target language.

- Adjusting Interface: Adapting date/time formats, currency, keyboard usage, symbols, icons, colors, and cultural references to suit local preferences and prevent misunderstandings.

It goes beyond translation to ensure the product is culturally appropriate and functions correctly in different regions. This applies to websites, applications, and installation files.

50. What Key Aspects Should Be Considered During Localization Testing?

When performing localization testing, keep the following points in mind:

- Verify Text Translation: Ensure all text is correctly translated into the target language.

- Check Date Formats: Confirm the correct format (e.g., dd/mm/yyyy or mm/dd/yyyy).

- Validate Time Zones: Account for any effects of Daylight Saving Time.

- Ensure Currency Accuracy: Verify correct currency symbols and formats.

- Confirm Phone Number Formats: Check the arrangement of country code, area code, and number.

- Validate Address Formats: Ensure the layout matches local conventions for name, street, city, state, and postal code.

- Check Zip/Postal Code Formats: Ensure the correct format is used.

- Verify Special Characters: Ensure they display correctly according to locale.

- Test Web Page Encoding: Confirm proper encoding for different languages.

Checking these aspects ensures that your application meets its users' cultural and regional needs, enhancing usability and user satisfaction.
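A date-format check, for instance, can be automated with a small helper. In this sketch the locale codes and expected patterns in `EXPECTED_DATE_FORMATS` are assumptions; a real project would take them from its localization requirements.

```python
from datetime import date, datetime

# Assumed expected date patterns per locale, for illustration only.
EXPECTED_DATE_FORMATS = {
    "en-US": "%m/%d/%Y",
    "en-GB": "%d/%m/%Y",
    "de-DE": "%d.%m.%Y",
}


def date_matches_locale(displayed, locale_code, expected):
    """Check that `displayed` shows the `expected` date in the locale's format."""
    pattern = EXPECTED_DATE_FORMATS[locale_code]
    try:
        parsed = datetime.strptime(displayed, pattern).date()
    except ValueError:
        return False  # the string does not follow the locale's pattern at all
    return parsed == expected


release = date(2024, 8, 5)
print(date_matches_locale("08/05/2024", "en-US", release))  # US month-first form
print(date_matches_locale("05/08/2024", "en-GB", release))  # UK day-first form
print(date_matches_locale("08/05/2024", "en-GB", release))  # US form shown to UK users
```

The last call is the interesting one: the US-formatted string is still a valid date for a UK user, just the wrong one (8 May instead of 5 August), so comparing against the expected date catches a defect that a format-only check would miss.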

Conclusion

Mastering QA interview questions across various levels is crucial for demonstrating your understanding of Quality Assurance principles and practices. Testers starting their career in QA need a strong foundation in QA concepts and in different testing tools and techniques. Intermediate and experienced candidates, meanwhile, should delve into more complex aspects like SDLC challenges, testing strategies, test planning, and more. By preparing for these QA interview questions, you can showcase your expertise and your readiness to contribute effectively to QA teams.
