
- June 28 2023

What is a Code Review and How Does it Work

Learn what code review is and why it matters in software development, and explore the code review tools available to improve your project's quality.


OVERVIEW

“At Cisco, costs per customer support call were $33, and the company wanted to reduce the number of calls per year from 50,000. Code review was used both to remove defects and to improve usability. - Source”

Code reviews used to be lengthy, time-consuming processes. As development has shifted toward faster, more agile methodologies, code review has become a lightweight practice that fits modern workflows and improves the code you ship.

Today's review tools integrate with Software Configuration Management (SCM) systems and Integrated Development Environments (IDEs). These tools, including static application security testing (SAST) tools that automate parts of a manual review, help developers spot and fix vulnerabilities more effectively.

They plug into development platforms such as GitHub or GitLab and IDEs such as Eclipse or IntelliJ. Adopting them can streamline your code review process, save time, and improve the overall quality of your software.

What is a Code Review?

Code review, also known as peer code review, is a software development practice in which programmers examine each other's code to detect errors and improve the software development process.

Industry experience and statistical data support the use of code reviews. According to empirical studies, up to 75% of the defects found in code review affect the software's evolvability and maintainability rather than its functionality. This makes code review especially valuable for software organizations with long product or system life cycles.

Let's face it: creating software involves humans, and humans make mistakes—it's just a part of who we are. That's where effective code reviews come in. By catching issues early on, they reduce the workload for QA teams and prevent costly bugs from reaching end users who would express their dissatisfaction.

Instituting efficient code reviews is a wise investment that pays off in the long run. And the merits of code reviews extend beyond just fiscal aspects. By nurturing a work culture where developers are encouraged to openly discuss their code, you also enhance team communication and foster a stronger sense of camaraderie.

Taking these factors into account, it's evident that the introduction of a thoughtful and strategic code reviewing process brings substantial benefits to any development team.

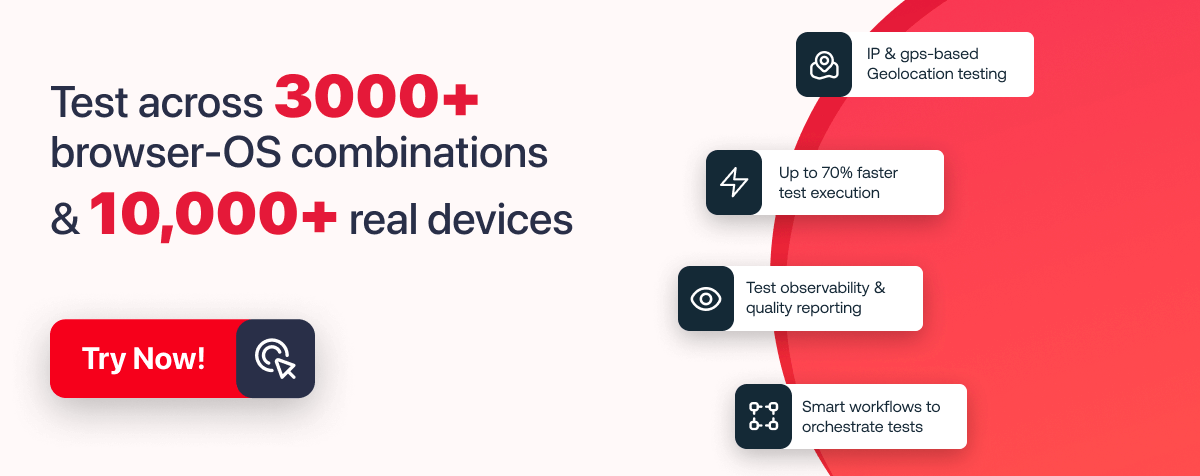


Benefits of Code Review

Making peer review a regular practice brings the following benefits and raises the overall quality and efficiency of the software development process.

- Knowledge Sharing: Code review gives developers an avenue for mutual learning and an exchange of strategies and solutions. Junior team members can pick up valuable insights from more experienced peers, which builds skills and prevents knowledge gaps from forming within the group.

- Maintenance of Compliance: It ensures compliance with coding norms and fosters uniformity within the team. For open-source projects with numerous contributors, reviews conducted by maintainers aid in preserving a unified coding style and preclude departures from pre-established guidelines.

- Bug Identification: By spotting bugs during peer reviews, developers can rectify them before they are exposed to customers. Implementing reviews early in the software development lifecycle, in conjunction with unit testing, facilitates swift identification and rectification of issues, eliminating the need for eleventh-hour fixes.

- Boosted Security: These reviews are instrumental in detecting security vulnerabilities. Incorporating security experts into targeted reviews adds an extra tier of protection, supplementing automated scans and tests. Early detection and resolution of security issues contribute to the creation of sturdy and secure software.

- Elevation of Code Quality: Code reviews aid in delivering high-quality code and software. Human reviewers can pinpoint code quality issues that might evade automated tests, aiding in reducing technical debt and ensuring the release of reliable and maintainable software.

- Fostering Collaboration: Collaborative code reviews nurture a sense of responsibility and camaraderie among team members. By collectively striving to find the best solutions, developers enhance their collaborative skills and stave off informational silos, resulting in a smooth workflow.

Steps for Code Review Process

The code review procedure varies with team dynamics and project specifics. Nonetheless, it generally moves through the following stages:

1. Code Creation

This initial stage involves the developer creating the code, often in a separate branch or dedicated environment. It's critical for the developer to conduct a self-review of their own work before calling for a review from peers.

This self-review serves as the first checkpoint to catch and fix obvious errors, enforce coding norms, and ensure alignment with the project's guidelines. This proactive step not only saves reviewers' time by filtering out elementary mistakes, but it also affords the developer a valuable learning opportunity by allowing them to reflect on and improve their code.

2. Review Submission

After the developer has thoroughly checked their own code, they put it forward for peer review. In many contemporary development workflows, this step is executed via a pull request or merge request.

This request, made to the main codebase, signals to the team that a new piece of code is ready for evaluation. The developer typically leaves notes highlighting the purpose of the changes, any areas of concern, and specific points they want feedback on.

3. Inspection

In this critical stage, one or more team members examine the submitted code. This inspection is not just a hunt for bugs or errors, but also an assessment of code structure, design, performance, and adherence to best practices. Reviewers leave comments, pose questions for clarity, and suggest potential modifications. The primary purpose here is to ensure that the code is robust, maintainable, and in sync with the overall project architecture.

4. Modification

Following the feedback from the inspection stage, the original developer addresses the suggestions and concerns raised. They revisit their code to make the necessary alterations, fix highlighted issues, and possibly refactor their code for better performance or readability. This iterative process continues until all the review comments are addressed satisfactorily.

5. Endorsement

After the developer has made the required revisions and the reviewers have rechecked the changes, the reviewers provide their approval. This endorsement signifies that the reviewers are satisfied with the quality, functionality, and integration capability of the code.

6. Integration

The final step in the review process involves integrating the revised and approved code into the main codebase. This integration, often carried out through a 'merge' operation, signifies the completion of the code review process. It ensures that the newly added code is now a part of the overall software project, ready for further stages like testing or deployment.
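The six stages above can be sketched as a small state machine. This is only an illustration: the `Stage` enum, the `TRANSITIONS` table, and the `advance` function are hypothetical names, and real review tools model the workflow in their own ways.

```python
from enum import Enum


class Stage(Enum):
    CREATION = "code creation"
    SUBMISSION = "review submission"
    INSPECTION = "inspection"
    MODIFICATION = "modification"
    ENDORSEMENT = "endorsement"
    INTEGRATION = "integration"


# Allowed moves between stages. Inspection can either request changes
# (modification) or approve (endorsement); modification loops back to
# inspection until the reviewers are satisfied.
TRANSITIONS = {
    Stage.CREATION: {Stage.SUBMISSION},
    Stage.SUBMISSION: {Stage.INSPECTION},
    Stage.INSPECTION: {Stage.MODIFICATION, Stage.ENDORSEMENT},
    Stage.MODIFICATION: {Stage.INSPECTION},
    Stage.ENDORSEMENT: {Stage.INTEGRATION},
    Stage.INTEGRATION: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the target stage if the move is allowed, else raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


if __name__ == "__main__":
    stage = Stage.CREATION
    # One round of requested changes before approval and merge.
    for nxt in (Stage.SUBMISSION, Stage.INSPECTION, Stage.MODIFICATION,
                Stage.INSPECTION, Stage.ENDORSEMENT, Stage.INTEGRATION):
        stage = advance(stage, nxt)
    print(stage.value)
```

The loop between inspection and modification is the part that matters most: code cannot reach integration without passing through endorsement.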

An AI-native test orchestration and execution platform such as LambdaTest provides a secure and reliable cloud grid for these later testing stages. It allows developers and testers to perform manual and automated testing at scale on an online browser farm of 3000+ real browsers, devices, and platform combinations.

You can run automated tests using Selenium, Cypress, and Appium across desktop and mobile environments.


Tools for Automated Code Review

Code review tools offer a structured framework for conducting reviews and integrate them into the larger development workflow. With their help, the entire code review process becomes more organized and streamlined.

Incorporation of peer review tools into your development workflow ensures that your code is thoroughly examined, promoting the discovery of potential bugs or vulnerabilities. One significant advantage of these tools is the improved communication they enable between the parties involved. By providing a centralized platform, these tools allow developers to communicate and exchange feedback efficiently. This not only enhances collaboration but also creates a record of the review process.

It's important to select a tool that is compatible with your specific technology stack so that it can easily integrate into your existing workflow. Let's explore some of the most popular code review tools that can greatly assist you in enhancing your code quality and collaboration within your development team.

These tools offer various features and integrations that can fit your specific needs and technology stack, enabling you to achieve optimal results in your code reviewing process.

1. GitHub

GitHub provides code review tools integrated into pull requests. You can request reviews, propose changes, keep track of versions, and protect branches. GitHub offers a free plan, and paid plans start from $4 per user, per month.

2. GitLab

GitLab allows distributed teams to review code, discuss changes, share knowledge, and identify defects through asynchronous review and commenting. It offers automation, tracking, and reporting of code reviews. GitLab has a free plan, and paid plans start from $19 per user, per month.

3. Bitbucket

Bitbucket Code Review by Atlassian offers a code-first interface for reviewing large diffs, finding bugs, collaborating, and merging pull requests. It has a free plan, and paid plans start from $3 per user, per month.

4. Azure DevOps

Azure DevOps, developed by Microsoft, integrates code reviews into Azure Repos and supports a pull request review workflow. It provides threaded discussions and continuous integration. The basic plan is free for teams of five, and then it costs $6 per month for each additional user.

5. Crucible

Crucible, from Atlassian, is a lightweight peer review software with threaded discussions and integrations with Jira Software and Bitbucket. It requires a one-time payment of $10 for up to five users or $1,100 for larger teams.

6. CodeScene

CodeScene goes beyond traditional static code analysis by incorporating behavioral code analysis. It analyzes the evolution of your codebase over time and identifies social patterns and hidden risks. CodeScene offers cloud-based plans, including a free option for public repositories on GitHub, and on-premise solutions.

It visualizes your code, profiles team members' knowledge bases, identifies hotspots, and more. You can explore CodeScene through a free trial or learn more about it in their white paper.

7. Gerrit

Gerrit is an open-source tool for web-based code reviews. It supports Git-enabled SSH and HTTP servers and follows a patch-oriented review process commonly used in open-source projects. Gerrit is free to use.

8. Upsource

JetBrains Upsource offered post-commit code reviews, pull requests, branch reviews, and project analytics. However, it is no longer available as an independent tool. Instead, JetBrains has incorporated peer review functionality into their larger software platform called JetBrains Space.

9. Reviewable

Reviewable is a code review tool specifically designed for GitHub pull requests. It offers a free option for open-source repositories, and plans for private repositories start at $39 per month for ten users. Reviewable overcomes certain limitations of GitHub's built-in pull request feature and provides a more comprehensive peer review experience.

10. JetBrains Space

JetBrains Space is a modern and comprehensive platform for software teams that covers code reviews and the entire software development pipeline. It allows you to establish a customizable and integrated code review process.

Space offers turn-based code reviews, integration with JetBrains IDEs, and a unified platform for hosting repositories, CI/CD automation, issue management, and more. Pricing starts at $8 per user, per month, and a free plan is also available.

11. Review Board

Review Board is an extensible tool that supports reviews on various file types, including presentations, PDFs, and images, in addition to code. It offers paid plans starting from $29 per 10 users, per month.

12. Axolo

Axolo takes a unique approach to code review by focusing on communication. It brings review discussions into Slack by creating dedicated Slack channels for each code review. Only the necessary participants, including the code author, assignees, and reviewers, are invited to the channel. Axolo minimizes notifications and archives the channel once the branch is merged. This approach streamlines the code reviewing process and eliminates stale pull requests.

13. AWS CodeCommit

AWS CodeCommit is a source control service that hosts private Git repositories and has built-in support for pull requests. It is compatible with Git-based tools and offers a free plan for up to five users. Paid plans start from $1 per additional user, per month.

14. Gitea

Gitea is an open-source project that provides a lightweight, self-hosted Git service. It supports a standard pull request workflow for code reviews and is free to use.

15. Helix Swarm

Helix Swarm is a web-based tool designed specifically for the Helix Core VCS. It seamlessly integrates with the complete suite of Perforce tools, providing teams that use Helix Core with a range of resources for collaborative work. Helix Swarm is free to use, making it an accessible choice for teams looking for an effective code review solution.

16. Peer Review for Trac

The Peer Review Plugin for Trac is a free and open-source code review option designed for Subversion users. It integrates seamlessly into Trac, an open-source project management platform that combines a wiki and issue tracking system. With Peer Review Plugin, you can compare changes, have conversations, and customize workflows based on your project's requirements.

17. Veracode

Veracode offers a suite of code review tools that focus on security as well as code quality. Their tools automate testing, accelerate development, and facilitate remediation processes. Veracode's suite includes Static Analysis, which helps identify and fix security flaws, and Software Composition Analysis, which helps manage the remediation of flaws in third-party and open-source components. You can request a demo or a quote to explore Veracode further.

18. Rhodecode

Rhodecode is a web-based code review tool that supports Mercurial, Git, and Subversion version control systems. It offers both cloud-based and on-premise solutions. The cloud-based version starts at $8 per user per month, while the on-premise solution costs $75 per user per year. Rhodecode facilitates collaborative code reviews, provides permission management, a visual changelog, and an online code editor for making small changes.

Each of these tools has distinctive features and pricing options, so choose the one that best fits your team's requirements and budget. Code reviews can improve the quality of your development process, help you find errors faster, and promote teamwork.

Automated Code Review vs. Manual Code Review

Manual code review and automated code review are two independent techniques used in software development to evaluate the quality of the code and identify any issues in the codebase.

Automated code review tools scan source code for bugs, security vulnerabilities, and performance issues without human intervention. They return results almost instantly, so developers can proceed without delays. Manual code review, on the other hand, relies on human expertise to examine source code line by line in search of defects and vulnerabilities. This approach is invaluable for understanding the context behind coding decisions, which makes evaluations more comprehensive and precise.

Here's a table summarizing the differences between manual code review and automated code review:

| Aspect | Manual Code Review | Automated Code Review |
|---|---|---|
| Human Involvement | Human developers manually review the code. | Automated tools scan the code without human input. |
| Speed | Slower due to manual inspection. | Faster, suitable for large codebases. |
| Contextual Understanding | Reviewers can understand project context. | Lacks understanding of project-specific context. |
| Flexibility | Can adapt to changes in requirements. | Limited adaptability to changing requirements. |
| Subjectivity | Reviewers' opinions may vary. | Applies consistent rules across the codebase. |
| Comprehensive Checks | May overlook certain issues. | Can catch specific patterns and coding standards. |
| False Positives/Negatives | Less likely to have false positives/negatives. | Can generate false positives/negatives. |
| Detection of Logical Issues | More likely to identify complex logic issues. | Focuses on patterns and may miss logical issues. |
| Scalability | May not scale well for large codebases. | Scales well for continuous integration workflows. |
| Resource Requirements | Requires skilled human reviewers. | Needs tool setup and maintenance. |
| Best Suited For | Critical code parts and high-level issues. | Common issues and coding standards enforcement. |
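To show what "scanning for patterns" can look like in practice, here is a minimal sketch of an automated check built on Python's standard `ast` module. It flags bare `except:` clauses and overly long functions. The `find_issues` function, the `MAX_FUNCTION_LINES` threshold, and the sample snippet are assumptions made for illustration; production linters and SAST tools apply far more rules.

```python
import ast

MAX_FUNCTION_LINES = 50  # hypothetical threshold; tune per team


def find_issues(source: str) -> list[str]:
    """Return human-readable findings for a piece of Python source."""
    findings = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        # A bare `except:` catches everything, including KeyboardInterrupt.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append(f"line {node.lineno}: bare 'except:' clause")
        # Flag functions that span more lines than the threshold.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            length = node.end_lineno - node.lineno + 1
            if length > MAX_FUNCTION_LINES:
                findings.append(
                    f"line {node.lineno}: function '{node.name}' is {length} lines long"
                )
    return findings


SAMPLE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''

if __name__ == "__main__":
    for finding in find_issues(SAMPLE):
        print(finding)
```

A check like this applies the same rule to every file, which matches the "consistent rules" row in the table. It cannot tell whether `load` should exist at all, and that judgment is left to a human reviewer.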

Four Approaches to Code Review

Here are four common approaches to code review:

1. Email Pass-Around Reviews

Under this approach, when the code is ripe for review, it gets dispatched to colleagues soliciting their feedback. This method provides flexibility but can swiftly turn complex, leaving the original coder to sift through a multitude of suggestions and viewpoints.

2. Pair Programming Reviews

Here, developers jointly navigate the same code, offering instantaneous feedback and mutually scrutinizing each other's work. This method encourages mentorship and cooperation, yet it might compromise on impartiality and may demand more time and resources.

3. Over-the-shoulder Reviews

This approach involves a colleague joining you for a session where they review your code as you articulate your thought process. While it's an informal and straightforward method, it could be enhanced by incorporating tracking and documentation measures.

4. Tool-assisted Reviews

Software-based code review tools bring simplicity and efficiency to the process. They integrate with web development frameworks, track comments and resolutions, permit asynchronous and remote reviews, and generate usage statistics for process improvement and compliance reporting.

Code Review Best Practices

Let's delve further into the best practices for code reviews, ensuring that your code is of the highest quality. By implementing these techniques, you can foster a positive and collaborative environment within your team. Here are some additional tips:

- Create a code review checklist

- Introduce code review metrics

- Keep code reviews under 60 minutes

- Limit reviews to 400 lines of code at a time

- Offer valuable feedback

The code review checklist serves as a structured method for ensuring code excellence. It covers various aspects such as functionality, readability, security, architecture, reusability, tests, and comments. By following this checklist, you can ensure that all important areas are thoroughly reviewed, leading to better code quality.

Metrics play a crucial role in assessing code quality and process improvements. Consider measuring inspection rate (lines of code reviewed per hour), defect rate (defects found per hour of review), and defect density (defects found per thousand lines of code).

The inspection rate helps identify potential readability issues, while the defect rate and defect density provide insight into the effectiveness of your test process. By monitoring these metrics, you can make data-driven decisions to improve peer reviews.
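To make these metrics concrete, the sketch below computes them for one review session. It assumes common definitions: inspection rate as lines of code reviewed per hour, defect rate as defects found per hour, and defect density as defects per 1,000 lines of code. The `ReviewSession` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewSession:
    lines_reviewed: int   # lines of code inspected in the session
    hours_spent: float    # reviewer time in hours
    defects_found: int    # defects logged during the session

    @property
    def inspection_rate(self) -> float:
        """Lines of code reviewed per hour."""
        return self.lines_reviewed / self.hours_spent

    @property
    def defect_rate(self) -> float:
        """Defects found per hour of review."""
        return self.defects_found / self.hours_spent

    @property
    def defect_density(self) -> float:
        """Defects found per 1,000 lines of code."""
        return self.defects_found * 1000 / self.lines_reviewed


session = ReviewSession(lines_reviewed=300, hours_spent=0.75, defects_found=6)

if __name__ == "__main__":
    print(session.inspection_rate)  # 400.0
    print(session.defect_rate)      # 8.0
    print(session.defect_density)   # 20.0
```

Tracked over many sessions, a rising inspection rate paired with a falling defect rate can be a hint that reviews are being rushed, though the numbers need team-specific interpretation.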

It's advisable to keep code evaluation sessions shorter than 60 minutes. Extended sessions may lead to decreased efficiency and attention to detail.

Conducting compact, focused code evaluations allows for periodic pauses, giving reviewers time to refresh and return to the code with a renewed perspective. Regular code evaluations foster ongoing enhancement and uphold a high-quality code repository.

Reviewing a large volume of code at once can make it challenging to identify defects. To ensure thorough reviews, it is advisable to limit each review session to approximately 400 lines of code or less. Setting a lines-of-code limit encourages reviewers to concentrate on smaller portions of code, improving their ability to identify and address potential issues.
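One way to enforce a size limit is to count the changed lines in a unified diff before asking for review. The sketch below is a simplified illustration: `count_changed_lines`, `is_reviewable`, and the sample diff are hypothetical, and the parsing ignores edge cases such as removed lines whose content itself begins with `--`.

```python
MAX_CHANGED_LINES = 400  # limit suggested in the text above


def count_changed_lines(diff_text: str) -> int:
    """Count added and removed lines in a unified diff."""
    changed = 0
    for line in diff_text.splitlines():
        # Skip the file headers ("--- a/file", "+++ b/file").
        if line.startswith(("+++", "---")):
            continue
        if line.startswith(("+", "-")):
            changed += 1
    return changed


def is_reviewable(diff_text: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the diff is small enough for a single review."""
    return count_changed_lines(diff_text) <= limit


SAMPLE_DIFF = """\
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import os
-print("hi")
+import sys
+print("hello")
"""

if __name__ == "__main__":
    print(count_changed_lines(SAMPLE_DIFF))  # 3
    print(is_reviewable(SAMPLE_DIFF))        # True
```

In a real workflow the diff text would typically come from the version control system, for example the output of `git diff`, and an oversized change would be split into smaller pull requests.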

When delivering feedback during code evaluations, aim to be supportive rather than critical. Rather than making assertions, pose questions to spark thoughtful conversations and solutions. It's also vital to provide both constructive criticism for improvements and commendation for well-done code. If feasible, conduct evaluations face-to-face or via direct communication channels to ensure effective and lucid communication.

Keep in mind, code evaluations are a chance for learning and progress. Approach the process with an optimistic attitude, centering on continual enhancement and fostering a team-based environment. By observing these beneficial practices, you can improve your code quality, enhance team collaboration, and eventually deliver superior software solutions.

Code Review Checklist

A code review checklist can act as a handy guide to ensure a thorough and effective review process. Here are some essential points to consider:

- Functionality
  - Does the code accomplish the intended purpose?
  - Have edge cases been considered and handled appropriately?
  - Are there any logical errors or potential bugs?

- Readability and Coding Standards
  - Is the code clear, concise, and easy to understand?
  - Does the code follow the project's coding standards and style guidelines?
  - Are variables, methods, and classes named descriptively and consistently?
  - Are comments used effectively to explain complex logic or decisions?

- Error Handling
  - Are potential exceptions or errors being appropriately caught and handled?
  - Is the user provided with clear error messages?
  - Does the code fail gracefully?

- Performance
  - Are there any parts of the code that could potentially cause performance issues?
  - Could any parts of the code be optimized for better performance?
  - Are unnecessary computations or database queries being avoided?

- Test Coverage
  - Are appropriate unit tests written for the functionality?
  - Do the tests cover edge cases?
  - Are the tests passing successfully?

- Security
  - Does the code handle data securely and protect against potential threats like SQL injection, cross-site scripting (XSS), etc.?
  - Is user input being validated properly?
  - Are appropriate measures being taken to ensure data privacy?

- Modularity and Design
  - Is the code well-structured and organized into functions or classes?
  - Does the code follow good design principles like DRY (Don't Repeat Yourself) and SOLID (Single Responsibility, Open-Closed, Liskov Substitution, Interface Segregation and Dependency Inversion)?
  - Does the code maintain loose coupling and high cohesion?

- Integration
  - Does the code integrate correctly with the existing codebase?
  - Are APIs or data formats being used consistently?

- Documentation
  - Is the code or its complex parts documented well for future reference?
  - Is the documentation up-to-date with the latest code changes?

Remember, a good peer review isn't just about finding what's wrong. It's also about appreciating what's right and maintaining a positive and constructive tone throughout the process.
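A checklist is easier to apply consistently when it lives next to the code, for instance as a pull request template. The sketch below stores a small excerpt of the checklist as data and renders it as a Markdown task list. The `CHECKLIST` dictionary and `render_checklist` function are hypothetical names, and only two categories are shown to keep the example short.

```python
CHECKLIST = {
    "Functionality": [
        "Does the code accomplish the intended purpose?",
        "Have edge cases been considered and handled appropriately?",
    ],
    "Test Coverage": [
        "Are appropriate unit tests written for the functionality?",
        "Do the tests cover edge cases?",
    ],
}


def render_checklist(checklist: dict[str, list[str]]) -> str:
    """Render the checklist as Markdown task-list text."""
    lines = []
    for category, questions in checklist.items():
        lines.append(f"### {category}")
        # "- [ ]" is the unchecked task-list item syntax used by
        # platforms such as GitHub and GitLab.
        lines.extend(f"- [ ] {question}" for question in questions)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_checklist(CHECKLIST))
```

Keeping the checklist as data makes it simple to add project-specific questions without touching the rendering logic.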

Python Code Review Checklist

Performing code reviews in Python involves evaluating various aspects of the code to ensure its quality, maintainability, and adherence to best practices. Below is a Python code review checklist that covers essential points to consider during the review process:

- Code Readability:
  - Are variable and function names descriptive and meaningful?
  - Is the code properly indented and formatted according to PEP 8 guidelines?
  - Are comments used where necessary to explain complex logic or assumptions?

- Code Structure:
  - Is the code organized into functions, classes, and modules appropriately?
  - Are there any overly long functions that could be refactored?
  - Are there any duplicated code blocks that should be extracted into reusable functions?

- Error Handling:
  - Is there adequate error handling to handle potential exceptions and edge cases?
  - Are exceptions logged or handled gracefully to prevent application crashes?

- Input Validation and Sanitization:
  - Are user inputs properly validated to prevent security vulnerabilities such as SQL injection or cross-site scripting (XSS)?
  - Are inputs sanitized before being used in queries or displayed in the output?

- Performance Considerations:
  - Are there any potential performance bottlenecks in the code?
  - Are large data structures or complex operations optimized for better performance?

- Testing:
  - Are unit tests provided for critical functions and features?
  - Do the tests cover various scenarios, including edge cases and error conditions?

- Documentation:
  - Is the code adequately documented, including function and class docstrings?
  - Are external dependencies and usage instructions documented?

- Security:
  - Are there any security vulnerabilities such as sensitive data exposure or insecure APIs?
  - Are encryption and authentication mechanisms properly implemented where needed?

- Dependencies:
  - Are the required dependencies properly listed in the project's requirements file?
  - Is the use of external libraries justified, and are they being used correctly?

- Code Comments and TODOs:
  - Are there any outdated or irrelevant comments?
  - Are TODOs or FIXMEs properly addressed or documented?

Remember that the checklist provided above is a general guide, and specific projects or organizations may have additional criteria to consider during their code reviewing process. These reviews are an essential part of maintaining code quality and promoting a healthy development culture within a team.
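As an illustration of several checklist items at once, here is a small function written the way a reviewer might hope to see it: a descriptive name, type hints, a docstring, input validation, and explicit error handling. The `parse_age` function and its 0 to 150 range are invented for this example.

```python
def parse_age(raw_value: str) -> int:
    """Convert user-supplied text to an age in years.

    Raises:
        ValueError: if the text is not a whole number between 0 and 150.
    """
    try:
        age = int(raw_value.strip())
    except ValueError:
        # Re-raise with a message that is clear enough to show the user.
        raise ValueError(f"age must be a whole number, got {raw_value!r}") from None
    # Validate the range so bad data is rejected at the boundary.
    if not 0 <= age <= 150:
        raise ValueError(f"age must be between 0 and 150, got {age}")
    return age


if __name__ == "__main__":
    print(parse_age(" 42 "))  # 42
```

A reviewer going through the checklist could tick off readability (names and docstring), error handling (no silent failures), and input validation, and would then ask whether unit tests cover the invalid cases.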

Secure Code Review Checklist

Performing a secure code review is crucial for identifying and addressing potential security vulnerabilities in software applications. Below is a checklist that outlines key security considerations during a review:

- Input Validation and Sanitization
  - Are all user inputs validated and sanitized to prevent common vulnerabilities like SQL injection, Cross-Site Scripting (XSS), and Command Injection?

- Authentication and Authorization
  - Is the authentication mechanism secure and implemented correctly?
  - Are authorization checks enforced for sensitive operations and resources?

- Sensitive Data Handling
  - Is sensitive data (e.g., passwords, API keys, tokens) stored securely, preferably using encryption?
  - Is sensitive data transmitted over secure channels, such as HTTPS?

- Session Management
  - Are sessions managed securely, with proper session expiration and handling of session tokens?

- Error Handling and Logging
  - Are errors handled gracefully, without revealing sensitive information to users?
  - Is logging done properly to capture relevant security events and facilitate incident investigation?

- Secure File Handling
  - Is user-supplied content checked for malicious file types and properly sanitized?
  - Are file permissions set appropriately to prevent unauthorized access?

- Secure Communication
  - Are cryptographic protocols (e.g., TLS/SSL) used for secure communication between components and with external services?

- Access Control
  - Are access controls in place to limit access to sensitive features and data based on user roles and permissions?

- Secure Configuration Management
  - Are default configurations changed to avoid security weaknesses?
  - Are sensitive configuration values kept separate from the codebase?

- Third-Party Libraries and Dependencies
  - Are third-party libraries used from trusted sources and regularly updated to address security vulnerabilities?
  - Are the necessary security patches applied to the used dependencies?

- Denial-of-Service (DoS) Prevention
  - Are appropriate measures in place to mitigate DoS attacks, such as rate limiting and request throttling?

- Cryptography
  - Is encryption used properly for sensitive data storage, ensuring the correct algorithms and key management practices are followed?

- Code Injection and Code Execution
  - Are there any points in the code where untrusted data is used in dynamic code execution?
  - Are there any code paths susceptible to code injection vulnerabilities?

- Cross-Site Request Forgery (CSRF) Protection
  - Are CSRF tokens used to protect against CSRF attacks on state-changing requests?

- Security Headers
  - Are appropriate security headers (e.g., Content Security Policy, X-XSS-Protection) set to enhance browser security?

- Secure API Design
  - Are APIs designed to follow secure practices, such as using proper authentication and rate limiting?

- Secure Database Usage
  - Is the database access limited to the necessary operations and properly parameterized to avoid SQL injection?

It's essential to tailor this checklist to the specific requirements and technologies used in your application. Regular and thorough secure code reviews are critical to maintaining a strong security posture and protecting applications and data from potential threats.
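The "properly parameterized" item in the checklist is one of the easiest to verify in review. The sketch below uses Python's built-in `sqlite3` module to show a parameterized query. The `users` table, the `find_user` function, and the sample data are made up for the example.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query."""
    # The "?" placeholder passes the value separately from the SQL text,
    # so the input is treated as data and never parsed as SQL.
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchone()


# In-memory database with one sample row, for demonstration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))

if __name__ == "__main__":
    print(find_user(conn, "alice"))             # (1, 'alice')
    print(find_user(conn, "alice' OR '1'='1"))  # None
```

The second call shows a classic injection string being matched literally as a username, so it finds nothing. A reviewer should treat any query built with string concatenation or f-strings from user input as a finding.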

Code Review in the Development Cycle

A peer review is carried out during the development cycle after a developer has completed coding a feature or fix and is ready to have it reviewed. It typically occurs when the code changes are submitted as a pull request or merge request in the version control system.

At this stage, the code is reviewed by one or more team members (reviewers) to ensure its quality, readability, and adherence to coding standards and best practices. The code review process aims to catch potential issues early in the development process and promote collaboration among team members. Once the code is reviewed and approved, it can be merged into the main codebase.

The peer reviews are typically conducted at specific stages during the development cycle. The exact timing may vary based on the development methodology and team preferences. Here are some common points in the development cycle when peer reviews are carried out:

1. Pre-Commit or Pre-Merge Review

In this approach, code reviews are performed before the developer's changes are committed to the version control system or merged into the main codebase. Developers submit their changes for review, and the review process takes place in a separate branch or code review tool. Once the code is approved, it can be merged into the main branch. Pre-commit reviews help catch issues early and prevent potentially problematic code from reaching the main codebase.

2. Post-Commit or Post-Merge Review

With this approach, developers are allowed to commit or merge their changes into the main codebase without a pre-commit review. After the changes are committed, the code is reviewed on the main branch. This approach may be more suitable for teams that value speed and agility during development but still want to ensure code quality through post-merge reviews.

3. Continuous Integration/Continuous Deployment (CI/CD)

In a CI/CD setup, peer reviews are often integrated with automated testing and continuous deployment processes. Developers submit their changes, and automated tests are triggered to check for basic functionality and compliance with coding standards. If the automated tests pass, the code is then subjected to code review. If the review is successful, the changes are automatically merged and deployed to production.
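The ordering described here, automated tests first, then human review, then merge and deploy, can be sketched as a simple gate function. The `PullRequest` class, its fields, and the `next_step` function are hypothetical; actual CI/CD systems express these rules through their own configuration, such as branch protection settings.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    tests_passed: bool        # result of the automated test run
    approvals: int            # number of reviewer approvals
    changes_requested: bool   # a reviewer is still asking for changes


def next_step(pr: PullRequest, required_approvals: int = 1) -> str:
    """Decide what happens next to a change in the pipeline."""
    # Automated checks gate the human review.
    if not pr.tests_passed:
        return "fix failing tests"
    # Review must finish with enough approvals and no open change requests.
    if pr.changes_requested or pr.approvals < required_approvals:
        return "awaiting review"
    return "merge and deploy"


if __name__ == "__main__":
    print(next_step(PullRequest(tests_passed=True, approvals=1,
                                changes_requested=False)))  # merge and deploy
```

Running tests before review keeps reviewers from spending time on code that is already known to be broken.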


4. Pull Request (PR) Review

Pull requests are a common mechanism in version control systems like Git. Developers create a pull request to propose changes, and the review process takes place within the pull request. Team members review the code, provide feedback, and discuss changes before the pull request is merged into the main branch.

5. Milestone or Feature Completion Review

Some teams may choose to conduct peer reviews at the completion of a specific milestone or when a significant feature is finished. This approach allows for comprehensive reviews of larger portions of code and ensures that all aspects of the feature or milestone are thoroughly examined.

6. Ad Hoc or On-Demand Review

In some cases, these reviews may be conducted on-demand or whenever there is a need to review a specific piece of code. This approach allows for flexibility and can be beneficial when addressing critical issues or reviewing code that requires urgent attention.

Ultimately, the specific timing of peer reviews should align with the development process and the team's needs. The key is to integrate peer reviews as a regular practice to maintain code quality, catch issues early, and promote collaboration and knowledge sharing among team members.

Conclusion

Code review, despite being just one part of a comprehensive Quality Assurance strategy for software production teams, leaves a remarkable mark on the process. Its role in early-stage bug detection prevents minor glitches from snowballing into intricate problems and helps identify hidden bugs that could impede future developments.

In the current high-speed climate of software development, where continuous deployment and client feedback are crucial, it's rational to rely on capable digital tools. The surge in code reviews conducted by development teams is largely attributed to what's known as the "GitHub effect."

By endorsing peer reviews and cultivating a cooperative environment, we tap into the collective wisdom and diligence of developers, which leads to better code quality and diminishes issues arising from human errors.
