
Dec 28 2023

What is Automation Testing: Benefits, Strategy and Best Practices

Check out this detailed guide to learn about Automation Testing and discover how to create a successful Automation Testing system.


OVERVIEW

Automation testing involves using software tools to execute pre-scripted tests on a software application before it is released into production. This process helps in identifying bugs, ensuring software quality, and reducing manual testing efforts, making it a key component in continuous integration and continuous delivery pipelines.

Automation testing proves cost-effective in the long run. Manual testing struggles with repetitive tasks such as regression testing, and the pressure on manual testers grows as the software expands. Despite the initial setup effort, automation makes testing more efficient: it reduces human error, speeds up test completion, cuts costs over time, and helps improve software quality.

Testers use frameworks like Selenium, Playwright, Appium, etc., to automate test cases and accelerate execution, which is especially beneficial for repetitive tests like integration and regression testing. Faster tests yield quicker results, providing teams with frequent feedback for issue detection and functionality improvement.

In this tutorial, we will explore each aspect of test automation, starting with its core principles, benefits, and types. As part of this journey, it's worth considering tools that streamline the process. KaneAI enables faster test creation, debugging, and refinement using natural language, offering a practical way to enhance your automation workflow.

Let’s dive deeper into how test automation can transform your testing strategies.

What is Automation Testing?

Automation Testing refers to the use of specialized software to control the execution of tests and compare actual outcomes with predicted outcomes. It automates repetitive but necessary tasks in a formalized testing process, or performs additional testing that would be difficult to do manually, enhancing both efficiency and accuracy in software development.

Organizations aim to save time, reduce costs, and improve overall software quality by automating repetitive and time-consuming manual testing procedures. The purpose of automation testing is to verify that a software application performs as expected.

Testing tools execute scripted sequences, report the outcomes, and compare the results with previous test runs. Because automated test scripts are reusable, the same checks run efficiently and consistently every time.
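As a minimal sketch of this idea, assuming Python's built-in `unittest` module and a hypothetical `apply_discount` function standing in for the application under test, an automated test writes the predicted outcome into the script once and compares it with the actual outcome on every run:

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical application code under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_actual_matches_expected(self):
        # The predicted outcome is written into the script once...
        expected = 90.0
        # ...and compared with the actual outcome on every run.
        actual = apply_discount(100.0, 10)
        self.assertEqual(actual, expected)

    def test_invalid_input_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

If the file were saved under a hypothetical name such as `test_discount.py`, running `python -m unittest test_discount.py` would execute both checks. The same script can be re-run unchanged on every build, and that reuse is where the efficiency comes from.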

Why Automation Testing?

Before looking at the benefits of automation testing, let's see why it is needed through a practical scenario.

Imagine you're tasked with testing a new feature in your software, and your test plan identifies 50 test cases. In the first sprint, you find and report 10 bugs. These bugs are fixed in later sprints, so every new build has to be tested again. The challenge is this: how can you be sure that fixing one bug doesn't break areas that previously worked?

To address this concern, you need to run regression testing, retesting the software after each bug fix. As the software grows more complex, or as the number of features to test increases, doing this manually becomes unwieldy. This is precisely where automation testing proves invaluable.

In such scenarios, the solution is to automate the areas you have already tested and covered manually. Then, whenever a new build or bug fix is introduced, you can run the entire suite with little effort and check the overall health of your software.

As a result, you achieve better coverage and quality while investing less time. A sound test automation strategy plays a pivotal role in keeping an application stable and catching bugs before release.

Benefits of Automation Testing

In software testing, the significance of test automation lies in its ability to speed up the testing process, making it more efficient and less prone to human error. Because tests can run quickly and frequently without human intervention, issues are detected earlier and testing cycles get shorter. This is particularly critical when checking software compatibility across different browsers, operating systems, and hardware configurations, a task that is impractical to perform manually due to constraints like geographical limitations and budget.

Let's look at the key benefits of automation testing.

- Faster and more efficient testing: Test automation executes tests faster than manual testing because it doesn't require human participation. As a result, testing can be done more frequently, and problems are found earlier. For instance, a platform like Shopify must check that its product works well on different browsers, operating systems, and hardware configurations. Doing this manually is nearly impossible given constraints such as geographical location and budget, especially for startups, and this is where automated testing comes into the picture.

- Improved accuracy and consistency: Automated tests perform the same steps in the same way on every run, reducing human error and improving the accuracy and consistency of test results.

- Cost-effectiveness: Despite the initial cost of designing and maintaining automated tests, automation becomes more cost-effective than manual testing over time, because it saves time and requires fewer resources per test run.

- Increases test coverage: Automated testing extends test coverage by running complex tests that would be impractical to run manually. This ensures a more thorough examination of the software.

- Enables continuous testing and integration: Test automation can be integrated into the continuous testing and integration process, allowing for continuous feedback and quicker software delivery.

- Test automation helps with shift-left testing: Test automation contributes significantly to shift-left testing, which moves testing activities earlier in the Software Development Life Cycle (SDLC). This proactive approach catches errors earlier, when they are cheaper to fix, and lets developers and testers identify bugs promptly, improving overall productivity.
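The cost-effectiveness argument comes down to simple arithmetic: automation has an up-front cost, but each later run is cheaper than a manual run. The sketch below computes the break-even point. All figures are hypothetical placeholders, not benchmarks, and ongoing maintenance is assumed to be folded into the per-run cost of the automated suite.

```python
import math


def break_even_runs(setup_cost: float, manual_cost_per_run: float,
                    automated_cost_per_run: float) -> int:
    """Smallest number of runs after which automation is cheaper than manual testing.

    automated_cost_per_run should include an estimate of per-run maintenance.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        raise ValueError("automation never pays off if each run is not cheaper")
    # Automation wins once runs * saving_per_run exceeds setup_cost.
    return math.floor(setup_cost / saving_per_run) + 1


# Hypothetical figures, in person-hours: 40 h to build the suite,
# 8 h per manual regression pass, 0.5 h per automated pass (triage + upkeep).
print(break_even_runs(40, 8, 0.5))  # 6
```

With these made-up numbers, automation pays for itself from the sixth regression pass. Every pass after that is a saving, which is why the benefit grows with the number of builds.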

Difference between Manual and Automation Testing

In this automation testing tutorial, let's look at the major differences between manual and automation testing:

| FEATURES | MANUAL TESTING | AUTOMATION TESTING |
|---|---|---|
| Test Execution | Performed manually by team members | Performed using automation tools and scripts |
| Test Efficiency | Time-consuming and less efficient | Saves time and is more efficient |
| Test Accuracy & Reliability | Lower, as manual tests are prone to human error | Higher, as scripted steps remove most human error in execution |
| Infrastructure Cost | Low; Return on Investment (ROI) is low | Low (for cloud-based testing); ROI is high |
| Usage | Suitable for Exploratory, Ad Hoc, and Usability Testing | Suitable for Regression, Load, and Performance Testing |
| Coverage | Difficult to achieve broad test coverage | Easier to achieve broad test coverage |

Who Is Involved in Test Automation?

With the shift-left approach in agile development, testing starts early in an application's development lifecycle. Under this strategy, developers with technical expertise collaborate with testers to build automation, sharing the increased testing responsibilities. Test automation, which relies on tools and scripts, has become a fundamental part of this process.

Below are the roles involved in making the test process effective.

- Test Automation Engineers: Responsible for creating, implementing, and maintaining frameworks, scripts, and tools.

- QA/Test Engineers: Develop test plans, conduct manual tests, and collaborate with automation engineers to keep automated and manual tests aligned.

- Developers: They play a crucial role in automation, collaborating with automation engineers to write code that is testable and easy to automate.

- Business Analysts: They offer advice on what should be tested and how it should be tested, adding value to the testing process.

When to Perform Automated Testing?

Automated testing is strategically used in the software development lifecycle to enhance efficiency, identify defects promptly, and ensure the reliability of applications. Knowing when to implement automated testing to maximize its benefits and streamline the testing process is crucial. It is essential to consider the following factors while selecting which tests to automate:

- Repetitive and time-consuming test cases: Automation is a good option for tests that demand a lot of manual work and repetition. These tests can be automated to save time and lower the possibility of human error.

- Crucial test cases for business: Automation is well-suited for tests essential to the company's operation, such as the checkout process or the add-to-cart function in an e-commerce website. Ensuring the smooth functioning of these processes is critical for preventing user disruptions on the website. By automating these tests, their execution becomes more reliable and consistent, contributing to a seamless user experience.

- Complex test cases: Tests that are complex to execute, such as a long test script that must run under different network conditions, are error-prone when done by hand. Automating them lowers the possibility of mistakes and ensures the tests are carried out correctly.

- Data-driven tests: Automation is an excellent option for tests that involve a lot of data input and output, since it keeps the data accurate and consistent across numerous runs. For example, if data entry actions are recorded against an application form, the recorded test only covers the values you entered during the recording, and those values may pass without errors. Other data might still cause errors. Automating the test so that it runs the same steps against many sets of input data helps ensure accurate results.

- Stable test cases: Tests operating in a predictable and steady environment are suitable candidates for automation. The execution of these tests can be made more dependable and accurate by automating them. A familiar example of a stable test case is a CPU test. It examines the stability of the CPU and keeps track of how it performs as the workload rises.

Choosing the proper tests for automation is crucial to ensure that the effort is productive and efficient and adds the maximum value to the business. Software development teams can select the tests best suited for automation by considering these factors and developing a solid and effective strategy.
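To make the data-driven case concrete, here is a minimal sketch using Python's `unittest`. The test steps are written once and replayed against a table of inputs, so adding coverage means adding a row rather than re-recording a script. The `is_valid_age` validator and its 18 to 120 range are hypothetical stand-ins for an application form field.

```python
import unittest


def is_valid_age(value: str) -> bool:
    """Hypothetical form validator: accepts whole numbers from 18 to 120."""
    value = value.strip()
    if not value.isdecimal():
        return False
    return 18 <= int(value) <= 120


# One workflow, many input rows: boundary values and invalid entries.
AGE_CASES = [
    ("18", True),     # lower boundary
    ("17", False),    # just below the boundary
    ("120", True),    # upper boundary
    ("121", False),   # just above the boundary
    ("abc", False),   # non-numeric input
    ("", False),      # empty input
    (" 30 ", True),   # surrounding whitespace is tolerated
]


class AgeFieldDataDrivenTest(unittest.TestCase):
    def test_age_field(self):
        for raw, expected in AGE_CASES:
            # subTest reports each failing row separately instead of
            # stopping at the first mismatch.
            with self.subTest(raw=raw):
                self.assertEqual(is_valid_age(raw), expected)
```

Keeping the data separate from the steps also means the rows can later be loaded from a CSV file or a test management tool without changing the test logic.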

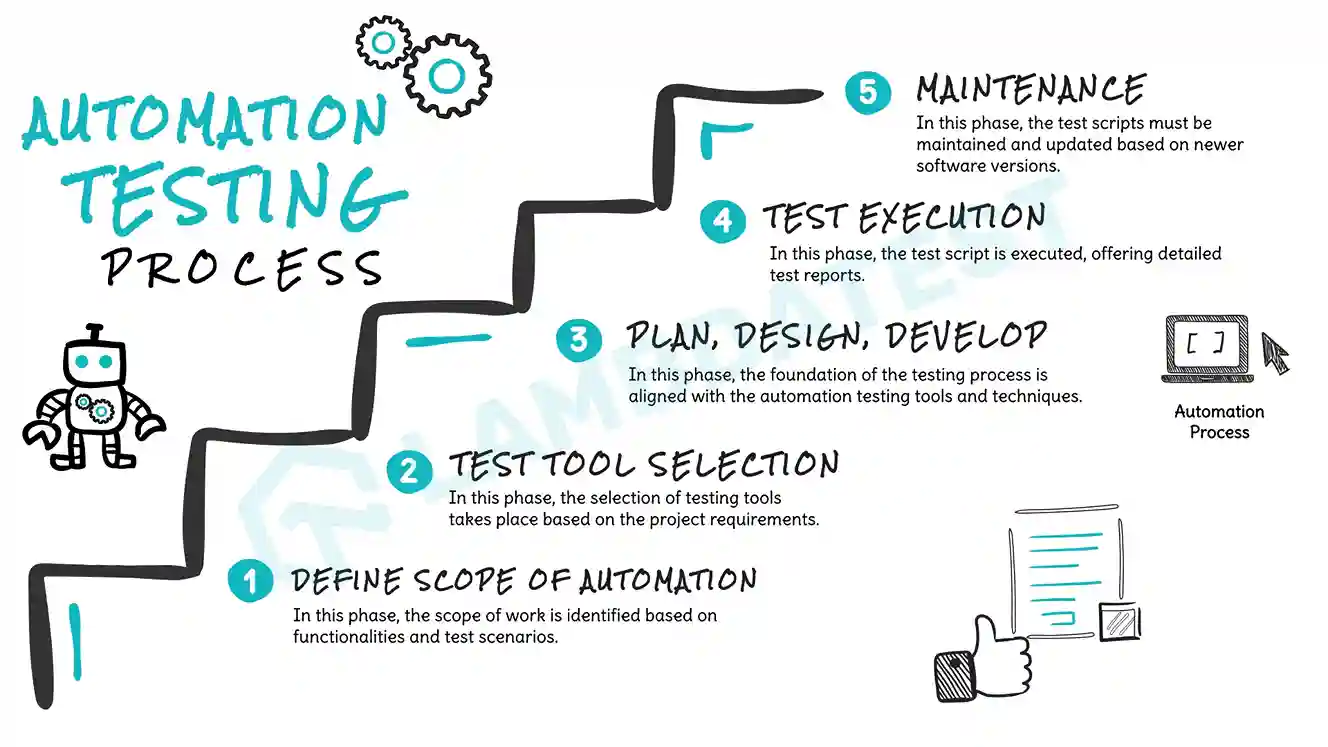

How to Perform Automation Testing?

Let's look at the essentials of the test automation process and the steps for implementing it in your organization. Following these steps helps you build a strong starting point and avoid issues that make automation difficult. Here's how you can perform automation testing:

- Choose the Right Tool: Selecting a testing tool that aligns with the project requirements and is compatible with your chosen platforms is essential. Consider factors like ease of use, scalability, and support for various testing types.

- Scope Definition: Clearly define the scope of your automation testing efforts. Identify specific functionalities and areas within your application that will benefit most from automation. This focused approach ensures efficient use of resources.

- Strategize, Design, Develop: Develop a comprehensive strategy highlighting the testing scope and objectives. Design your automation framework with a focus on reusability and maintainability. Implement the automation scripts, keeping the chosen functionalities in mind.

- Test Execution: Execute your automated tests to cover the defined scope comprehensively. Monitor the test runs closely to ensure accurate and precise outcomes. Address any issues or failures promptly, and refine your scripts as needed.

- Continuous Maintenance: Automation suites require ongoing maintenance to adapt to software changes and improvements. Regularly update and enhance your automation suite to align with evolving project requirements. This ensures the longevity and effectiveness of your automation testing efforts.

- Integration with CI/CD: Consider integrating your automation tests into a Continuous Integration/Continuous Deployment (CI/CD) pipeline for seamless and automated testing during the development lifecycle.

- Collaboration and Training: Encourage collaboration between developers and testers to make automation more effective, and train team members so they are proficient with the chosen testing tools and frameworks.

- QA metrics and Reporting: Implement metrics and reporting mechanisms to track the performance of your automation tests. This provides valuable insights into your testing strategy's effectiveness and helps identify improvement areas.

To learn more about the metrics, explore this guide on software testing metrics. This resource will guide you in testing, developing, and maintaining your software quality.

By following the above pointers, you can establish a robust automation testing process that speeds up testing and contributes to the overall efficiency and quality of your software development lifecycle.
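The execution, CI/CD, and reporting steps come together in a script that runs the suite, records a few metrics, and returns an exit code the pipeline can act on. The sketch below assumes Python's built-in `unittest`. The `CartTest` class, the metric names, and the `main` entry point are hypothetical, and a CI job would typically invoke it as `sys.exit(main())` so that a failing suite fails the pipeline stage.

```python
import unittest


class CartTest(unittest.TestCase):
    """Hypothetical tests standing in for a real automation suite."""

    def test_cart_total_in_cents(self):
        self.assertEqual(sum([1999, 501]), 2500)

    def test_empty_cart_total(self):
        self.assertEqual(sum([]), 0)


def run_and_report(suite: unittest.TestSuite) -> dict:
    """Run a suite and collect simple QA metrics for a report or dashboard."""
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    failed = len(result.failures) + len(result.errors)
    # testsRun also counts skipped tests, so subtract them.
    executed = result.testsRun - len(result.skipped)
    return {
        "executed": executed,
        "failed": failed,
        "skipped": len(result.skipped),
        "pass_rate": (executed - failed) / executed if executed else 0.0,
        "success": result.wasSuccessful(),
    }


def main() -> int:
    """Entry point for a CI job: returns the process exit code."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTest)
    metrics = run_and_report(suite)
    print(metrics)
    # A non-zero exit code is what makes a CI/CD stage fail and block the merge.
    return 0 if metrics["success"] else 1
```

Tracking the pass rate per run, rather than only pass or fail, makes trends such as a slowly growing number of flaky failures visible in reports.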

Which Tests Should Be Automated?

This section focuses on which test cases to automate and which to leave manual. Tests such as smoke, sanity, and regression tests can be automated, while tests that require human expertise may need manual execution. The table below compares automatable and non-automatable tests.

| Automatable tests | Non-automatable tests |
|---|---|
| Tests that must run against every application build or release, such as regression tests. | Tests you need to run only once. |
| Tests that use the same workflow with different input data each time, such as boundary and data-driven tests. | User experience tests that rely on human opinion. |
| Tests that need to collect multiple pieces of information at runtime, such as low-level application attributes and SQL query results. | Short tests that must be done soon, where writing test scripts would take extra time. |
| Tests used for performance testing, such as stress and load tests. | Ad hoc or random testing based on domain expertise. |
| Tests that take a long time to perform and may need to run outside working hours. | Tests with unpredictable results. For automated validation to succeed, the outcomes must be predictable. |
| Tests that involve entering large volumes of data. | Tests that need constant human observation to confirm the results are correct. |
| Tests that must run against multiple configurations, for example different OS and browser combinations. | Tests that don't need to be performed against multiple browsers, operating systems, and devices. |
| Tests during which screenshots must be captured to prove that the application behaved as expected. | Simple tests that add no value for your team. |
| Tests that are of the utmost importance to your product. | Tests that don't focus on the risk areas of your application. |

What are the Types of Automation Testing?

Automation testing comes in various forms, each serving specific purposes in software development. Common types include Unit Testing, which examines individual components, and Functional Testing, which assesses overall system functionality. Collectively, these methods enhance efficiency and reliability in the software testing process. Let's check all the different types of automation testing below.

Functional Testing

Functional testing checks if the features of an application meet the software requirements, ensuring they deliver consistent results that align with what users expect for the best performance and experience. It involves testing by providing inputs and checking if the outcomes match what users expect.

- Unit testing

- Integration testing

- System testing

- Regression testing

- Smoke testing

- Sanity testing

Unit Testing

Unit testing is done by developers while writing code for an application. Its main goal is to check that each part, or unit, of the code works correctly, producing the expected output for a given input. Unit tests lay the foundation for testing more complex features in the application.

For instance, consider an e-commerce website that allows customers to order personalized T-shirts. The website has various functionalities, such as selecting the color of the T-shirt, adding custom text, and choosing a size. During unit testing, developers test each of these functionalities individually to ensure that each one works as expected.
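A minimal sketch of what those unit tests could look like, assuming Python's `unittest`. The `select_color`, `add_custom_text`, and `choose_size` functions, along with the color, size, and 20-character limits, are hypothetical stand-ins for the T-shirt site's code. Each test exercises one function on its own, with no other part of the site involved.

```python
import unittest

# Hypothetical building blocks of the T-shirt customizer; each one is a "unit".
AVAILABLE_COLORS = {"white", "black", "navy"}
AVAILABLE_SIZES = ("S", "M", "L", "XL")
MAX_TEXT_LENGTH = 20


def select_color(color: str) -> str:
    """Normalize and validate the chosen T-shirt color."""
    color = color.strip().lower()
    if color not in AVAILABLE_COLORS:
        raise ValueError(f"unsupported color: {color}")
    return color


def add_custom_text(text: str) -> str:
    """Validate the text to print on the T-shirt."""
    text = text.strip()
    if not text or len(text) > MAX_TEXT_LENGTH:
        raise ValueError(f"custom text must be 1 to {MAX_TEXT_LENGTH} characters")
    return text


def choose_size(size: str) -> str:
    """Normalize and validate the chosen size."""
    size = size.strip().upper()
    if size not in AVAILABLE_SIZES:
        raise ValueError(f"unsupported size: {size}")
    return size


class TShirtUnitTests(unittest.TestCase):
    # Each test exercises exactly one function in isolation.
    def test_select_color_normalizes_case(self):
        self.assertEqual(select_color("Black"), "black")

    def test_select_color_rejects_unknown_color(self):
        with self.assertRaises(ValueError):
            select_color("purple")

    def test_custom_text_is_trimmed_and_length_limited(self):
        self.assertEqual(add_custom_text("  Hello  "), "Hello")
        with self.assertRaises(ValueError):
            add_custom_text("x" * (MAX_TEXT_LENGTH + 1))

    def test_choose_size_normalizes_and_validates(self):
        self.assertEqual(choose_size("xl"), "XL")
        with self.assertRaises(ValueError):
            choose_size("XXS")
```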

Integration Testing

When different developers build modules or components separately, quality engineers must check that these parts work well together. This is where integration testing becomes crucial: it ensures that a system's modules and components function correctly when combined.

In modern software setups, microservices often interact with each other. Integration testing becomes even more critical to guarantee that these microservices communicate properly and operate as intended.

For instance, consider a banking app where users can set up a savings account, and there's a feature to transfer money from their main account to the savings account. Since these are separate modules, testers would perform integration testing to ensure the transaction process between the two functions is smooth and accurate. This ensures that all parts of the banking app work seamlessly together.
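The banking example can be sketched as an integration test, assuming Python's `unittest`. `Account` and `TransferService` are hypothetical modules. Unlike a unit test, the test wires the real modules together and checks the combined result, including that a failed transfer leaves both balances untouched. Amounts are kept in integer cents to avoid floating-point rounding.

```python
import unittest


class Account:
    """Hypothetical account module (used for both main and savings accounts)."""

    def __init__(self, balance_cents: int = 0):
        self.balance_cents = balance_cents

    def withdraw(self, amount: int) -> None:
        if amount <= 0 or amount > self.balance_cents:
            raise ValueError("invalid withdrawal")
        self.balance_cents -= amount

    def deposit(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("invalid deposit")
        self.balance_cents += amount


class TransferService:
    """Hypothetical module that moves money between two accounts."""

    def transfer(self, source: Account, target: Account, amount: int) -> None:
        source.withdraw(amount)  # raises before any money moves if invalid
        try:
            target.deposit(amount)
        except Exception:
            source.deposit(amount)  # roll back the withdrawal
            raise


class TransferIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Real module instances, not mocks: the point is to test them together.
        self.main = Account(10_000)
        self.savings = Account(0)
        self.service = TransferService()

    def test_transfer_moves_money_between_accounts(self):
        self.service.transfer(self.main, self.savings, 2_500)
        self.assertEqual(self.main.balance_cents, 7_500)
        self.assertEqual(self.savings.balance_cents, 2_500)

    def test_failed_transfer_leaves_balances_unchanged(self):
        with self.assertRaises(ValueError):
            self.service.transfer(self.main, self.savings, 20_000)
        self.assertEqual(self.main.balance_cents, 10_000)
        self.assertEqual(self.savings.balance_cents, 0)
```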

System Testing

In this testing phase, the complete software undergoes comprehensive testing to confirm that all business and functional requirements are met. System testing is often associated with end-to-end testing and takes place just before user acceptance testing.

The system testing environment must closely mirror the production environment for accurate validation. System testing is typically performed as black-box testing, where testers evaluate the system without knowledge of its internal code and are not involved in its development.

For example, consider a fitness app with features like setting and tracking monthly fitness goals, consolidating fitness metrics, creating personalized exercise sessions, and integrating with smartwatches. Each function is evaluated individually and collectively in system testing to ensure smooth integration.

Regression Testing

Whenever a change or new feature is introduced into the software, there's a risk of disrupting existing functionality. To keep the software stable and performing as before, regression testing is conducted each time changes are made. Because regression testing is labor-intensive, it is often automated.

For instance, take a widely used application like the Chrome browser. Suppose it introduces a new bookmark management feature that lets users organize bookmarks into nested folders and subfolders, providing a more organized and user-friendly bookmarking experience.

Now, the development teams need to perform regression testing to ensure that this new bookmark management feature doesn't affect the core functionalities of the web browser.
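A minimal sketch of that idea, assuming Python's `unittest` and a hypothetical `BookmarkManager` (not Chrome's actual code): the regression tests pin down behavior that existed before nested folders, and they run unchanged alongside the new feature's tests on every build, so a break in old behavior surfaces immediately.

```python
import unittest


class BookmarkManager:
    """Hypothetical bookmark store; nested folders are the newly added feature."""

    def __init__(self):
        self.root = {"bookmarks": [], "folders": {}}

    def _folder(self, path):
        # Walk (and create, if needed) the nested folder named by path.
        node = self.root
        for name in path:
            node = node["folders"].setdefault(name, {"bookmarks": [], "folders": {}})
        return node

    def add(self, url, path=()):
        self._folder(path)["bookmarks"].append(url)

    def remove(self, url, path=()):
        self._folder(path)["bookmarks"].remove(url)

    def list_bookmarks(self, path=()):
        return list(self._folder(path)["bookmarks"])


class BookmarkRegressionTests(unittest.TestCase):
    """Behavior that existed before nested folders and must keep working."""

    def test_add_top_level_bookmark(self):
        manager = BookmarkManager()
        manager.add("https://example.com")
        self.assertEqual(manager.list_bookmarks(), ["https://example.com"])

    def test_remove_top_level_bookmark(self):
        manager = BookmarkManager()
        manager.add("https://example.com")
        manager.remove("https://example.com")
        self.assertEqual(manager.list_bookmarks(), [])


class NestedFolderTests(unittest.TestCase):
    """New tests for the new feature, added to the same suite."""

    def test_nested_bookmark_stays_in_its_folder(self):
        manager = BookmarkManager()
        manager.add("https://docs.python.org", path=("Work", "Python"))
        self.assertEqual(
            manager.list_bookmarks(("Work", "Python")), ["https://docs.python.org"]
        )
        self.assertEqual(manager.list_bookmarks(), [])
```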

Smoke Testing

When a new software build is completed, Quality Assurance (QA) runs smoke testing to check that the most critical and essential functionalities produce the intended results. Smoke testing is sometimes called build verification testing. It acts as an early-stage acceptance check that decides whether the new build can progress to the next stage or needs rework.

For instance, imagine a team working on a weather app has just completed a new version. Before extensive testing, they perform smoke testing on the core functionality: quickly providing current temperature information.

The QA team performs a quick check to ensure that, when a user opens the app, it correctly shows the current temperature for their location. So, if the smoke test passes, it indicates that the fundamental aspect of the weather app – offering the current temperature – is working as expected. If it fails, the team knows an issue needs immediate attention before further testing.
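One common way to keep a smoke test fast is to mark the critical-path checks and run only those before the full suite. A minimal sketch, assuming Python's `unittest` (3.7 or later for `TestLoader.testNamePatterns`), a `test_smoke_` naming convention chosen for this example, and a hypothetical `current_temperature` function with canned data in place of a real weather service:

```python
import unittest


def current_temperature(city: str) -> float:
    """Hypothetical weather-app call; canned data stands in for a real service."""
    readings = {"London": 11.5, "Delhi": 31.0}
    return readings[city]


def weekly_forecast(city: str) -> list:
    """Hypothetical secondary feature that is not part of the smoke check."""
    return [current_temperature(city)] * 7


class WeatherAppTests(unittest.TestCase):
    def test_smoke_current_temperature_is_shown(self):
        # The critical path: opening the app returns a temperature reading.
        self.assertIsInstance(current_temperature("London"), float)

    def test_weekly_forecast_has_seven_days(self):
        self.assertEqual(len(weekly_forecast("Delhi")), 7)


def smoke_suite() -> unittest.TestSuite:
    """Load only the tests whose names mark them as smoke tests."""
    loader = unittest.TestLoader()
    # fnmatch-style pattern matched against the fully qualified test name.
    loader.testNamePatterns = ["*test_smoke_*"]
    return loader.loadTestsFromTestCase(WeatherAppTests)
```

From the command line, `python -m unittest -k test_smoke_` applies a similar filter. If the smoke suite fails, the team can skip the full run and send the build back.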

Sanity Testing

Like regression testing, sanity testing is done on a new software version that contains small bug fixes or added code. If the new build doesn't pass sanity testing, it won't proceed to more detailed testing. While regression testing covers the entire system after changes, sanity testing focuses on the specific areas affected by the new code or bug fixes.

For instance, consider an e-commerce website where users cannot add a specific product to their cart even when it is in stock. After fixing this issue, sanity testing is carried out to ensure that the "add to cart" function works correctly.


Non-functional Testing

Non-functional testing evaluates a software application's performance, usability, dependability, and other non-functional characteristics. Unlike functional testing, it focuses on aspects beyond specific features. This type of testing aims to ensure the software meets non-functional criteria, such as reliability and efficiency.

- Performance testing

- Load testing

- Stress testing

- Volume testing

- Usability testing

- Compatibility testing

- Accountability testing

- Reliability testing

- Security testing

Performance Testing

Performance testing checks that a software application meets its performance goals, such as response time and throughput. It reveals how factors like network latency, database transaction processing, data rendering, and load balancing between servers affect the software's performance. Performance testing is typically conducted using tools like LoadRunner, JMeter, and Loader.

For instance, consider a popular e-commerce website preparing for a big sales event and expecting massive user traffic. Performance testing becomes crucial to ensure the website can handle the increased load without slowing down or crashing. A performance testing tool simulates many users accessing the website simultaneously to check how well the site handles the increased demand. It measures response times for actions like product searches, adding items to the cart, and completing the checkout process.

Load Testing

Load testing assesses the stability of an application under a specific load, typically equal to or less than the anticipated number of users. For example, if an application is designed to handle 250 simultaneous users with a target response time of three seconds, load testing applies a load of up to 250 users, or slightly fewer. The primary objective is to confirm that the application maintains the three-second response time under the specified user load.

For instance, imagine an online banking system expecting a maximum of 500 users accessing their accounts concurrently during peak hours. Load testing is executed by simulating this user load to validate that the system remains stable and responsive. The goal is to ensure that the online banking platform can efficiently handle up to 500 users without experiencing performance issues or delays, meeting the expected user experience criteria. This type of testing is crucial for identifying potential bottlenecks and optimizing the application's performance under realistic usage scenarios.
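The 250-user, three-second example above can be expressed as a small script. This is a sketch under heavy assumptions: `handle_request` is a hypothetical stand-in that only sleeps, so it measures nothing real. In practice the simulated users would call the application, or a dedicated tool such as JMeter would generate the load, since threads in a single Python process are a limited load generator. It uses only the standard library (`statistics.quantiles` needs Python 3.8 or later).

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

TARGET_RESPONSE_SECONDS = 3.0   # response-time goal from the example above
CONCURRENT_USERS = 250          # planned peak load from the example above


def handle_request(user_id: int) -> None:
    """Hypothetical stand-in for one user action against the system under test."""
    time.sleep(random.uniform(0.01, 0.05))


def timed_request(user_id: int) -> float:
    start = time.perf_counter()
    handle_request(user_id)
    return time.perf_counter() - start


def run_load_test(users: int = CONCURRENT_USERS) -> dict:
    # One worker thread per simulated user so all requests overlap in time.
    with ThreadPoolExecutor(max_workers=users) as pool:
        latencies = list(pool.map(timed_request, range(users)))
    return {
        "users": users,
        "max_seconds": max(latencies),
        "p95_seconds": statistics.quantiles(latencies, n=20)[-1],  # 95th percentile
        "meets_target": max(latencies) <= TARGET_RESPONSE_SECONDS,
    }


print(run_load_test())
```

The pass criterion here is that every request finishes within the target. Many teams instead set the goal on a percentile such as p95, which is why the sketch reports both.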

Stress Testing

Stress testing verifies that a software program or system can withstand an unusually high load or demand, for example many concurrent users trying to log in to a web application at the same time. It assesses whether the system can handle stress and high traffic without compromising performance or functionality, so users still get a robust experience during peak usage.

For instance, consider an online ticket booking platform expecting massive traffic during an event or festival. Stress testing would simulate a significantly larger number of users than usual accessing the platform simultaneously to ensure that the system remains responsive and functional under such intense demand.

Volume Testing

Volume testing verifies that a software program or system can effectively handle a substantial volume of data. For instance, if a website is designed to serve 500 users, volume testing assesses whether it can manage the volume of data those users generate without performance issues or data overload.

For example, consider an online database management system used by large e-commerce platforms. Volume testing ensures that uploading and managing an extensive dataset, and processing transactions between records, works smoothly without slowing down the database system, even when it handles large volumes of data.
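A scaled-down sketch of a volume check, assuming Python's built-in `sqlite3` with an in-memory database as a stand-in for the e-commerce database. The `orders` table, the row count, and the half-second threshold are all hypothetical. A real volume test would use production-like data sizes and infrastructure.

```python
import sqlite3
import time

ROW_COUNT = 100_000          # hypothetical "large" volume for the sketch
MAX_QUERY_SECONDS = 0.5      # hypothetical acceptance threshold


def build_orders_table(conn: sqlite3.Connection, rows: int) -> None:
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)"
    )
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    # Bulk-load synthetic rows spread across 5,000 customers.
    conn.executemany(
        "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
        ((i % 5_000, (i * 37) % 10_000) for i in range(rows)),
    )
    conn.commit()


def volume_check(rows: int = ROW_COUNT) -> dict:
    conn = sqlite3.connect(":memory:")
    try:
        build_orders_table(conn, rows)
        start = time.perf_counter()
        matching, _total = conn.execute(
            "SELECT COUNT(*), SUM(total_cents) FROM orders WHERE customer_id = ?", (42,)
        ).fetchone()
        elapsed = time.perf_counter() - start
        stored = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    finally:
        conn.close()
    # Check both data integrity (nothing lost) and query speed at this volume.
    return {"stored": stored, "matching": matching, "query_seconds": elapsed,
            "fast_enough": elapsed <= MAX_QUERY_SECONDS}


print(volume_check())
```

The check verifies two things: that every row survived the bulk load, and that a typical lookup stays fast at that volume. Rerunning it with larger `rows` values shows where performance starts to degrade.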

Usability Testing

Usability testing ensures a software program or system is user-friendly and easy to navigate. For instance, on an e-commerce website, usability testing could focus on whether users can quickly locate essential elements like the "Buy Now" button. This type of testing assesses the overall user experience, including navigation, clarity of instructions, and how intuitive the interface is.

For instance, suppose a mobile banking application is under usability testing. Testers evaluate how easily users can perform everyday tasks such as transferring money or checking account balances, looking at the clarity of the interface, the ease of navigation, and whether the application satisfies its users. The goal of usability testing is to ensure that users can interact with the application clearly and effortlessly, with a positive user experience.

To execute usability testing, you must be aware of its various methods. Explore this guide on usability testing methods. This guide will provide you with different methods by which you can make your usability testing effective.

Compatibility Testing

Compatibility testing ensures a software program or system is compatible with other software, operating systems, or environments. During compatibility testing, a tester checks that the software functions without issues when interacting with various software applications and operating systems.

For instance, compatibility testing checks whether project management software works well on different web browsers (Chrome, Firefox, Safari) and operating systems (Windows, macOS, Linux), and how it integrates with other tools commonly used in project management, like communication platforms or document-sharing applications.
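The browser and OS combinations above form a matrix, and automation pays off here because the same test runs once per cell. The sketch below only enumerates and dispatches configurations. `run_test` is a hypothetical callable: in practice it would open a browser session on that platform, locally or on a cloud grid. The sketch also assumes Safari is tested only on macOS, since current Safari releases are not shipped for Windows or Linux.

```python
from itertools import product

BROWSERS = ["Chrome", "Firefox", "Safari"]
OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]

# Assumption for this sketch: Safari is only available on macOS.
UNSUPPORTED = {("Safari", "Windows"), ("Safari", "Linux")}


def compatibility_matrix() -> list:
    """Every browser/OS pair the compatibility suite should run against."""
    return [
        (browser, os_name)
        for browser, os_name in product(BROWSERS, OPERATING_SYSTEMS)
        if (browser, os_name) not in UNSUPPORTED
    ]


def run_compatibility_suite(run_test) -> dict:
    """Run one test per configuration and collect pass/fail results.

    run_test is a hypothetical callable(browser, os_name) -> bool.
    """
    return {config: run_test(*config) for config in compatibility_matrix()}


# Demo with a dummy test that always passes.
for config, passed in run_compatibility_suite(lambda b, o: True).items():
    print(config, "PASS" if passed else "FAIL")
```

Adding a browser or OS to the lists grows the coverage without writing a single new test, which is the core advantage over checking each combination by hand.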

Accountability Testing

Accountability testing verifies that a system functions as intended. It checks that a specific function delivers the expected outcome. If the system produces the desired results, it passes the test; otherwise, it fails.

For instance, consider an online shopping website where users can add items to their cart and proceed to checkout. Accountability testing for this system checks whether the "Add to Cart" function works correctly: when a user adds a product, the system should record it accurately, and the item should be visible in the user's cart.

Reliability Testing

Reliability testing checks that a software system functions without errors within predefined parameters. The test runs the system for a specific duration under certain processes to assess its stability. If the system encounters issues under the predetermined circumstances, the test fails. For instance, in a web application, reliability testing ensures that all web pages and links consistently work without errors, providing a reliable user experience.

For instance, consider a web-based email service focused on ensuring the reliability of its core functions—sending and receiving emails. High user activity is simulated in a continuous 72-hour test, including attaching files and marking emails as spam.

Specific scenarios, such as sudden spikes in user activity or temporary server outages, are introduced to evaluate the system's response. This reliability testing aims to confirm error-free email transactions and the system's capability to handle diverse user activities seamlessly. If the test uncovers issues, further investigation and improvements are undertaken to enhance the system's reliability.

Security Testing

Security testing checks that a software program or system is protected against unauthorized access and attacks. Organizations conduct it to identify vulnerabilities in the security mechanisms of their information systems.

For instance, consider an e-commerce platform that stores sensitive customer information, including personal details and payment data. Security testing for this platform would involve simulating various security threats, such as attempts to hack into the system, unauthorized access to customer accounts, or exploitation of potential vulnerabilities in the website's code.
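One small, automatable slice of security testing is checking that a classic SQL injection payload cannot expose other customers' data. The sketch assumes Python's `unittest` and `sqlite3`. The `customers` table and the `find_customer` function are hypothetical, and broader security testing (scanners, penetration tests) goes beyond what a unit-style check like this covers.

```python
import sqlite3
import unittest


def make_db() -> sqlite3.Connection:
    """In-memory stand-in for the platform's customer database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (email TEXT, card_last4 TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("alice@example.com", "4242"), ("bob@example.com", "1881")],
    )
    return conn


def find_customer(conn: sqlite3.Connection, email: str) -> list:
    """Hypothetical lookup; the ? placeholder keeps input from being parsed as SQL."""
    return conn.execute(
        "SELECT email, card_last4 FROM customers WHERE email = ?", (email,)
    ).fetchall()


class SqlInjectionTest(unittest.TestCase):
    def setUp(self):
        self.conn = make_db()

    def tearDown(self):
        self.conn.close()

    def test_normal_lookup_returns_one_customer(self):
        self.assertEqual(find_customer(self.conn, "alice@example.com"),
                         [("alice@example.com", "4242")])

    def test_injection_payload_returns_no_rows(self):
        # This payload would match every row if the email were concatenated
        # into the SQL string instead of being bound as a parameter.
        payload = "' OR '1'='1"
        self.assertEqual(find_customer(self.conn, payload), [])
```

Kept in the automated suite, a test like this catches a regression where someone later replaces the parameterized query with string formatting.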

Automation Testing Life Cycle

Automation testing is a complex process, and several phases lead to the successful automation of a software application. Automated tests execute a predefined suite of test cases against a software application to validate that it meets its functional requirements. During the software development lifecycle, an organization or project team identifies the test cases that can be automated and then creates automated scripts to run them. This section discusses the following phases of automated testing.

1. Determining Automation Feasibility

Automation testing begins by assessing the project's feasibility. This involves identifying which application modules can be automated and which tests are suitable for automation. Considerations include budget constraints, resource allocation, team size, and skills. Specific feasibility checks include:

- Test case automation feasibility: Assessing which test cases can be automated.

- AUT (Application Under Test) automation feasibility: Evaluating whether the application itself is suitable for automation.

- Selection of automation testing tool: When choosing the most appropriate tool, consider budget, functionality, team familiarity, and project technologies.

2. Choosing the Right Automation Testing Tool

The choice of an automation testing tool is a crucial factor that significantly influences the effectiveness of the automation process. It marks a pivotal step in the testing cycle, demanding careful consideration to align with the project's requirements. With various automation tools and software options available, testing teams should focus on key factors when making this selection.

- Budget considerations: The budget plays a central role in tool selection. Teams need to balance the functionality required with the cost of acquiring the automation tool. Open-source and paid tools are available in the market, offering different features and capabilities.

- Functionality vs. cost: It's essential to balance the required functionality and the cost implications of the chosen automation tool. Open-source tools may provide cost-effective solutions, while paid tools may offer advanced features with a corresponding price tag.

- Compatibility and familiarity: Organizations should assess the compatibility of the chosen tool with the technologies involved in the overall project. Additionally, familiarity with the tool's usage is critical. Teams need to ensure that the selected automation tool aligns well with the existing technologies and processes within the organization.

The decision-making process for selecting an automation testing tool involves a thoughtful evaluation of budget constraints, functionality requirements, compatibility with project technologies, and the level of familiarity within the testing team.
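One common way to make this evaluation explicit is a weighted decision matrix: score each candidate tool on each criterion, multiply by the criterion's weight, and rank by the total. The sketch below assumes hypothetical weights and 1-5 scores for three placeholder tools; the numbers stand in for a team's own assessment and are not ratings of any real product.

```python
# Hypothetical criterion weights reflecting one team's priorities (sum to 1.0).
WEIGHTS = {
    "budget_fit": 0.30,
    "functionality": 0.30,
    "tech_compatibility": 0.25,
    "team_familiarity": 0.15,
}

# Hypothetical 1-5 scores for three placeholder tools.
SCORES = {
    "tool_a": {"budget_fit": 5, "functionality": 3, "tech_compatibility": 4, "team_familiarity": 4},
    "tool_b": {"budget_fit": 2, "functionality": 5, "tech_compatibility": 4, "team_familiarity": 2},
    "tool_c": {"budget_fit": 4, "functionality": 4, "tech_compatibility": 2, "team_familiarity": 3},
}


def weighted_score(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of weight * score over all criteria."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_tools(all_scores: dict[str, dict[str, int]], weights: dict[str, float]) -> list[str]:
    """Return tool names ordered from highest to lowest weighted score."""
    return sorted(all_scores, key=lambda tool: weighted_score(all_scores[tool], weights), reverse=True)


assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights should sum to 1"
print(rank_tools(SCORES, WEIGHTS))  # ['tool_a', 'tool_b', 'tool_c']
```

Changing the weights (for example, raising `team_familiarity` for a team with tight deadlines) can change the ranking, which makes the trade-offs visible to stakeholders.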

3. Test Planning, Automation Strategy, and Design

Moving forward in the test automation life cycle, the next critical phase involves determining the approach and procedures for automation testing. This phase encompasses the development of the test automation framework, emphasizing a thorough understanding of the product and awareness of the project's technologies.

During this step, testing teams establish test creation standards and procedures; decide on hardware, software, and network requirements; and outline test data needs. They also devise a testing schedule and select an appropriate defect-tracking tool.

Critical considerations for this phase include:

- Manual test case collection: Gather all manual test cases from the test management tool and identify those suitable for automation.

- Framework evaluation: Thoroughly examine testing frameworks, considering their pros and cons, before selecting the framework to be utilized.

- Test suite construction: Build a test suite for automation test cases.

- Understanding risks and dependencies: Identify the risks and the dependencies between the testing tool and the application.

- Stakeholder and client approval: Obtaining approval from stakeholders and clients is fundamental in shaping the overall test strategy.
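For the test suite construction step, most test runners let you group the tests selected for automation into a suite. The sketch below uses Python's built-in `unittest` module; `fake_login` and the `LoginTests` cases are hypothetical stand-ins for a real application and its automated test cases.

```python
import unittest


def fake_login(user: str, password: str) -> bool:
    """Hypothetical stand-in for the application's login feature."""
    return (user, password) == ("alice", "s3cret")


class LoginTests(unittest.TestCase):
    """Hypothetical automated versions of manual login test cases."""

    def test_valid_credentials(self):
        self.assertTrue(fake_login("alice", "s3cret"))

    def test_invalid_password(self):
        self.assertFalse(fake_login("alice", "wrong"))

    def test_empty_username(self):
        self.assertFalse(fake_login("", "s3cret"))


def build_suite(selected: list[str]) -> unittest.TestSuite:
    """Assemble a suite from the test methods selected for this automation cycle."""
    suite = unittest.TestSuite()
    for name in selected:
        suite.addTest(LoginTests(name))
    return suite


suite = build_suite(["test_valid_credentials", "test_invalid_password"])
selected_count = suite.countTestCases()
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(selected_count, result.testsRun, result.wasSuccessful())  # 2 2 True
```

Only the two selected methods run; `test_empty_username` stays in the codebase but is left out of this suite.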

Test case planning is critical and often takes place before development of the product begins. To learn how to write and plan test cases effectively, explore this guide on 21 lessons on writing effective test cases that follow the standard format.

4. Test Environment Setup

The subsequent phase involves setting up the test environment, a crucial step in the automation testing life cycle that determines the configuration of remote or virtual machines for executing test cases. Understanding the significance of virtual machines is essential in ensuring a robust testing process.

Users access applications through diverse browsers, operating systems, and devices, each with multiple versions. Achieving cross-browser compatibility is crucial to maintaining a consistent user experience, which makes a virtual testing environment essential. This phase requires careful planning to maximize test coverage and include various testing scenarios.

Key points to consider before setting up the test environment:

- Thorough planning: Plan the testing environment carefully to cover various scenarios. Schedule and track setup activities, including software installation, network, and hardware setups.

- Refinement of test databases: Ensure test databases are refined for accurate testing.

- Script building: Build test scripts for effective testing.

- Development of environment scripts: Develop scripts to manage the testing environment effectively.

Key points to consider during setting up the test environment:

- Data similarity to production: Feed the test environment with data that resembles production data, so that data-related issues surface before the application reaches production.

- Front-end running environment: Establish a front-end running environment for load testing and web traffic analysis.

- Comprehensive test coverage: Compile a list of systems, modules, and applications to be tested.

- Distinct database server: Set up a separate database server for the staging environment.

- Testing on various platforms: Conduct tests on as many client operating systems and browser versions as possible.

- Website rendering and network conditions: Test website rendering time and application appearance under different network conditions.

- Documentation management: Save all documentation, including installation guides and user manuals, in a centralized database for seamless testing processes in current and future setups.

Tasks involved in the test environment setup include acquiring tool licenses, ensuring AUT (Application Under Test) access with valid credentials, and having utilities such as advanced text editors and comparison tools. Additionally, the setup includes configuring the automation framework, obtaining add-in licenses, and establishing a staging environment for testing.
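Because the environment has to cover many browser, operating system, and version combinations, teams often generate the configuration matrix rather than listing it by hand. A minimal sketch, assuming a hypothetical list of target browsers, OS labels, and version tags; the only filtering rule applied is that desktop Safari runs only on macOS.

```python
from itertools import product

# Hypothetical target platforms; replace with the combinations your users actually have.
BROWSERS = ["chrome", "firefox", "edge", "safari"]
OPERATING_SYSTEMS = ["windows-11", "macos-14", "ubuntu-22.04"]
VERSIONS = ["latest", "latest-1"]


def is_supported(browser: str, os_name: str) -> bool:
    """Desktop Safari only exists on macOS; the other browsers run on every OS listed."""
    return browser != "safari" or os_name.startswith("macos")


def build_matrix() -> list[dict[str, str]]:
    """Return one configuration dict per valid browser/OS/version combination."""
    return [
        {"browser": b, "os": o, "version": v}
        for b, o, v in product(BROWSERS, OPERATING_SYSTEMS, VERSIONS)
        if is_supported(b, o)
    ]


matrix = build_matrix()
print(len(matrix))  # 20
```

Each entry can then be handed to whatever provisions the remote or virtual machine for that run; adding a new OS or version is a one-line change instead of a hand-edited list.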

5. Test Scripting and Execution Overview

This phase involves creating and implementing test scripts to assess the functionality and performance of the application under test. Here's a brief overview of key considerations and steps in this crucial testing stage.

Steps to prepare test scripts:

- Ensure that test scripts align with the actual requirements of the project.

- Implement a uniform testing methodology to maintain consistency throughout the testing process.

- Design test scripts focusing on reusability, clarity, and understanding for all team members involved.

- Conduct a comprehensive review of the code within the test scripts to uphold quality assurance standards.

The key points of the test script execution process are as follows.

Execution process:

- Verify that test scripts cover all essential functional aspects of the application.

- Ensure test scripts can be executed seamlessly across various environments and platforms.

- Explore batch execution possibilities to enhance efficiency and resource optimization.

- Generate detailed bug reports for any functionality-related errors encountered during the execution process.

- Develop a well-structured schedule for executing tests, ensuring systematic coverage.

Evaluation and documentation:

After test execution, evaluate the outcomes, save them appropriately for future reference, and use the results to prepare comprehensive test result documentation. This documentation serves as a valuable record for assessing the success of the testing process.

6. Test Analysis and Reporting

After the execution of tests and the documentation of test results, the final and crucial stage in the automation testing life cycle is the analysis and reporting phase.

Conducting tests alone is insufficient; the results obtained from various testing types in the preceding phase must be thoroughly analyzed. Through this analysis, functionalities and segments potentially encountering issues are identified. These test reports serve as valuable insights for testing teams, guiding them to determine whether additional tests and procedures are necessary. The reports help teams comprehend how well the application performs under unfavorable conditions.

Analysis and reporting form the concluding steps in the automation testing life cycle. Test reports, derived from careful analysis, are then shared with all stakeholders involved in the project. This ensures that the insights gained from testing are communicated effectively, facilitating informed decision-making and further actions as needed.
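As a minimal example of the analysis step, the sketch below aggregates raw result records into a per-module pass rate and flags modules that fall below a threshold, which is the kind of summary a stakeholder report might contain. The result records and the 90% threshold are hypothetical assumptions.

```python
from collections import defaultdict

# Hypothetical raw results collected from an automated test run.
RESULTS = [
    {"module": "login", "test": "valid_user", "status": "passed"},
    {"module": "login", "test": "locked_user", "status": "passed"},
    {"module": "checkout", "test": "apply_coupon", "status": "failed"},
    {"module": "checkout", "test": "pay_by_card", "status": "passed"},
    {"module": "search", "test": "empty_query", "status": "passed"},
]


def summarize_by_module(results, threshold=0.9):
    """Return ({module: (passed, total, pass_rate)}, sorted modules below threshold)."""
    counts = defaultdict(lambda: [0, 0])  # module -> [passed, total]
    for r in results:
        counts[r["module"]][1] += 1
        if r["status"] == "passed":
            counts[r["module"]][0] += 1
    summary = {m: (p, t, p / t) for m, (p, t) in counts.items()}
    at_risk = sorted(m for m, (_, _, rate) in summary.items() if rate < threshold)
    return summary, at_risk


summary, at_risk = summarize_by_module(RESULTS)
for module, (passed, total, rate) in sorted(summary.items()):
    print(f"{module:<10} {passed}/{total} passed ({rate:.0%})")
print("Needs attention:", at_risk)
```

Flagged modules point the team to where additional tests or investigation are most needed.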

These six automation testing life cycle stages significantly enhance an organization's software testing efforts. Automation testing is valuable for saving time, streamlining processes, and reducing the risk of human errors.

The true benefits of automation testing emerge only when teams follow the sequence of steps outlined above. Implementing automation testing without proper planning can produce an excess of scripts that are hard to manage and still require human intervention, which defeats the primary purpose of automating software testing.

To ensure a successful automation testing experience, testing teams need a well-organized, thoughtful automation testing life cycle that follows these stages in order, contributing to the overall effectiveness of the software testing process. Let us learn more about the automation testing strategy in the next section.

What is a Test Automation Strategy?

A test automation strategy is an integral component of effective software testing, providing a structured approach to determine what to automate, how to do it, and which technology to use.

Aligned with broader testing strategies, it outlines key focus areas such as scope, goals, testing types, tools, test environment, execution, and analysis. By providing a clear plan of action, it enables faster bug detection, promotes better collaboration, and ultimately contributes to a shorter time-to-market, enhancing the overall success of the software development project.

Below are the benefits of developing the test automation strategy:

- Clear plan of action: A well-defined test automation strategy offers a clear plan for implementing automation in a structured and effective manner. This approach leads to faster bug detection, improved collaboration, and shorter time to market.

- Faster bug detection: Automation streamlines testing processes, allowing for quicker execution of tests compared to manual methods. This speed facilitates the rapid identification of bugs and issues in the software.

- Better collaboration: The strategy establishes clear objectives, roles, and communication channels for team members involved in testing. This clarity enhances collaboration among developers, testers, and stakeholders, reducing ambiguity and disputes.

- Shorter time to market: Automated tests provide rapid feedback on software quality, enabling timely adjustments. This leads to a more efficient development process with shorter feedback loops, allowing quicker product releases.

Implementing automation successfully requires a well-planned and well-executed strategy that makes the objectives, parameters, and expected results explicit. With such a strategy in place, software development teams can establish an efficient process that enhances software quality, lowers expenses, and shortens time-to-market.

As the previous section shows, a well-planned strategy helps teams succeed quickly, but it is equally important to know which frameworks put such a strategy into practice. In the following section, we will learn about the types of automation testing frameworks in more detail.

Types of Automation Testing Frameworks

Automation testing frameworks provide guidelines for automated testing processes. They define how test scripts are organized and executed, making automated testing easier to maintain, scale, and enhance. Different frameworks suit different project requirements and testing objectives, and they vary in how much reusability and maintainability they offer.

Below are some automation testing framework types that help developers and testers choose the right framework for their project needs.

- Linear framework (or Record and Playback): This is the most basic framework, in which testers write or record a script for each test case individually and play it back, much like recording and replaying actions on a screen. It is commonly used by smaller teams and those new to test automation, but because the scripts are not reusable, it becomes hard to maintain as the suite grows. Understand the distinction between scripted testing and record & replay testing with tools like Selenium IDE and Katalon.

- Modular-based framework: As its name suggests, this framework divides the application under test into small, independent modules, each with its own test script. A master script combines these modules to build larger test scenarios, which saves time and streamlines the workflow. However, implementing this framework requires prior planning as well as expertise.

- Library architecture framework: Built on the modular framework, this approach identifies tasks common to multiple test scripts and groups them into functions stored in a shared library, which the test scripts call as needed. This enhances reusability and flexibility but demands more time and expertise to set up.

- Data-driven framework: In a data-driven framework, the test logic is separated from the test data. The same test script reads input values and expected results from an external source such as a CSV file, spreadsheet, or database, and runs once for each data set. This is useful when a project has a large number of test cases that differ only in their data, because new cases can be added by anyone with access to the data file, without changing the script.

- Keyword-driven framework: In a keyword-driven framework, test steps are described with keywords (action words such as "open browser", "enter text", or "verify message") that are typically kept in a table or spreadsheet along with the test objects and data. Each keyword maps to a piece of code that performs the action, so the test specification is separated from its implementation. Testers can build or change tests by combining keywords without writing code, while automation engineers maintain the code behind each keyword.

- Hybrid testing framework: A hybrid framework combines two or more of the frameworks above, for example, keyword-driven steps fed by external test data, to offset the weaknesses of any single approach. It can also borrow practices from Behavior-Driven Development (BDD), Test-Driven Development (TDD), and Acceptance Test-Driven Development (ATDD), such as BDD's human-readable feature files, TDD's unit tests, and ATDD's acceptance tests.
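To make these framework types concrete, here is a minimal Python sketch of a hybrid framework that combines keyword-driven and data-driven ideas. Each keyword maps to a reusable function (the library layer), a test is a table of keyword steps, and a driver script runs the same steps once per data row. The keywords, the in-memory `FakeApp`, and the data rows are hypothetical; a real framework would call a browser driver such as Selenium instead of a fake application.

```python
class FakeApp:
    """Hypothetical stand-in for the application under test."""

    USERS = {"alice": "s3cret", "bob": "hunter2"}

    def __init__(self):
        self.message = ""

    def login(self, user, password):
        ok = self.USERS.get(user) == password
        self.message = f"Welcome, {user}" if ok else "Invalid credentials"


# Keyword library: each keyword is a reusable action taking (app, data_row).
def open_login_page(app, row):
    app.message = ""


def enter_credentials(app, row):
    app.login(row["user"], row["password"])


def verify_message(app, row):
    if app.message != row["expected"]:
        raise AssertionError(f"expected {row['expected']!r}, got {app.message!r}")


KEYWORDS = {
    "open_login_page": open_login_page,
    "enter_credentials": enter_credentials,
    "verify_message": verify_message,
}

# Keyword-driven test definition: a readable list of steps (often kept in a spreadsheet).
LOGIN_TEST = ["open_login_page", "enter_credentials", "verify_message"]

# Data-driven input: the same steps run once per row (often loaded from CSV or Excel).
LOGIN_DATA = [
    {"user": "alice", "password": "s3cret", "expected": "Welcome, alice"},
    {"user": "bob", "password": "wrong", "expected": "Invalid credentials"},
    {"user": "carol", "password": "x", "expected": "Invalid credentials"},
]


def run_keyword_test(steps, data_rows):
    """Driver (master) script: execute every keyword step for every data row."""
    outcomes = []
    for row in data_rows:
        app = FakeApp()  # fresh application state per data row
        try:
            for keyword in steps:
                KEYWORDS[keyword](app, row)
            outcomes.append((row["user"], "passed"))
        except AssertionError as exc:
            outcomes.append((row["user"], f"failed: {exc}"))
    return outcomes


for user, status in run_keyword_test(LOGIN_TEST, LOGIN_DATA):
    print(f"{user:<6} {status}")
```

With this split, adding a test case means adding a data row, and adding a new action means registering one function in `KEYWORDS`, without touching the driver.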

To learn how BDD differs from TDD, explore this guide on TDD vs BDD: Selecting The Suitable Framework and see how these frameworks can make your automation testing easier. The guide explains what TDD and BDD entail and offers practical guidance on implementing both in your testing process.

In the following section, let's cover some of the most popular automation testing frameworks and tools based on different aspects, such as web-based, performance-based, API-based, and more.

Popular Automation Testing Frameworks and Tools

In this section, we will look into some of the best test automation frameworks and tools to execute your tests. However, the choice of an automation tool depends on the specific testing requirements of your project.

Web-Based Automation Framework

Web-based automation testing frameworks simulate user interactions to test web applications thoroughly across browsers and platforms. This approach makes software development more efficient by identifying issues early in the development lifecycle and supports the delivery of reliable web applications.

Some of the web-based automation testing frameworks are mentioned below.

Selenium

Selenium is a widely used open-source suite of tools for browser automation. It officially supports writing test scripts in Java, Python, C#, Ruby, and JavaScript (Node.js), and community-maintained bindings exist for other languages such as PHP and Perl, offering flexibility for teams with different language skills.

Primarily designed for browser automation, Selenium excels in cross-browser testing, ensuring consistent website performance across various browsers. It simplifies functional testing on different browsers to validate proper website functionality. Selenium seamlessly integrates with other tools and frameworks, including TestNG, JUnit, and Cucumber, enhancing its adaptability to various testing environments.

The introduction of Selenium 4 brings notable features, such as relative locators. Relative locators (formerly called friendly locators) find an element by its visual position relative to another element, for example above, below, to the left of, to the right of, or near it, which can make locating elements on dynamic pages more robust.

Selenium 4 also adds Chrome DevTools Protocol support, including the NetworkInterceptor, which lets tests intercept and stub network requests in Chromium-based browsers, opening up new possibilities for web app testing.

If you want to explore Selenium 4's features, a comprehensive video tutorial is available to guide you through its capabilities and enhancements.

Some of the features of Selenium are given below.

- Cross-browser compatibility: It ensures smooth testing across Chrome, Firefox, Safari, and Edge.

- Multilingual support: Supports Java, Python, C#, Ruby, and JavaScript, promoting language flexibility.

- Framework integration: Seamlessly integrates with JUnit, TestNG, Maven, and Jenkins, enhancing testing workflows.

- Parallel testing: Enables concurrent execution for faster test runs.

- Recording and playback: Selenium IDE allows newcomers to create test scripts easily.

- Adaptability: Easily extends functionality with plugins and extensions.
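Parallel testing works because independent tests, or the same test on different browsers, share no state and can therefore run at the same time. This sketch shows the idea with Python's `concurrent.futures`; `run_smoke_test` is a hypothetical stub that only sleeps. In a real Selenium setup each worker would open its own WebDriver session (for example, against a Selenium Grid), which is an assumption not shown here.

```python
import time
from concurrent.futures import ThreadPoolExecutor

BROWSERS = ["chrome", "firefox", "edge", "safari"]


def run_smoke_test(browser: str) -> tuple[str, bool]:
    """Hypothetical stub for one test session on one browser.

    A real version would create its own driver session, run the steps,
    and quit the driver; here a short sleep stands in for that work.
    """
    time.sleep(0.2)
    return browser, True


def run_in_parallel(browsers: list[str], max_workers: int = 4) -> dict[str, bool]:
    """Run one session per browser concurrently and collect pass/fail results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_smoke_test, browsers))


start = time.perf_counter()
results = run_in_parallel(BROWSERS)
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

With four workers, the wall-clock time should be close to a single 0.2 s session rather than the roughly 0.8 s a sequential run would need; the key design requirement is that sessions never share state.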

Cypress

Cypress is an open-source end-to-end testing framework designed for web applications. Known for its user-friendly interface and robust feature set, Cypress has quickly gained popularity in the automation testing field. It is a preferred choice among developers for quickly and effectively testing applications directly within their web browsers.

Explore this tutorial on Cypress and get valuable insights with examples and best practices.

Some of the features of Cypress are given below.

- Local test runner: It provides a local test runner for quick feedback during test development.

- Parallel test execution: Supports running tests in parallel across multiple machines (through Cypress Cloud), significantly reducing test suite completion time.

- CI/CD integration: Integrates seamlessly with CI/CD tools like CircleCI and AWS CodeBuild for automated test execution.

- Automatic waiting for elements: It automatically waits for elements, reducing manual waiting in test scripts.

- Screenshots on failure: Automatically captures screenshots when a test case fails, helping with quick issue identification and resolution.
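Automatic waiting is essentially a retry loop: instead of a fixed sleep, the framework re-checks a condition until it passes or a timeout expires (Cypress's default command timeout is 4 seconds). The Python sketch below shows that idea in isolation; `wait_until` and the simulated `element_visible` condition are hypothetical helpers, not Cypress APIs.

```python
import time


def wait_until(condition, timeout=4.0, interval=0.05):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulated element that becomes visible about 0.3 s after the "page" starts loading.
page_loaded_at = time.monotonic()


def element_visible():
    return time.monotonic() - page_loaded_at > 0.3


start = time.monotonic()
wait_until(element_visible)
waited = time.monotonic() - start
print(f"element appeared after {waited:.2f}s")
```

The test proceeds as soon as the element appears instead of always paying for the worst-case delay, and it still fails with a clear error if the element never shows up.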

Playwright

Playwright, an end-to-end testing automation framework developed by Microsoft, has gained increasing popularity and has become a preferred choice for many teams. It offers a single API to automate Chromium, Firefox, and WebKit browsers. Playwright tests can be written in JavaScript and TypeScript (on Node.js), as well as Python, Java, and .NET, making it versatile and accessible to many users.

Some of the features of Playwright are given below.

- Cross-browser compatibility: It supports test execution on Chromium, WebKit, and Firefox, enhancing versatility in web application testing.

- Operating system compatibility: Playwright is compatible with Windows, Mac, and Linux, making it adaptable across major operating systems.

- Web element interaction: Enables seamless interaction with website elements, making it valuable for automating diverse user flows in web applications.

- Real-time alerts: It exposes events for network requests and page loads and automatically waits for elements to be ready before acting on them, eliminating the need for unreliable fixed sleep timeouts.

Puppeteer

Puppeteer is a Node.js library that provides a high-level API for controlling Chrome and Chromium browsers, with additional support for Firefox. Its advanced capabilities and easy installation using npm or Yarn give users convenient access to the Chrome DevTools Protocol.

Moreover, Puppeteer downloads a compatible browser version by default, which makes it easy to keep tests up to date and run them against a recent Chrome release. This ensures compatibility with the latest JavaScript, browser features, and APIs.

Some of the features of Puppeteer are given below.

- Cross-browser and cross-platform testing: Supports testing across browsers and platforms.

- Integration with CI and Agile tools: Seamlessly integrates with CI and Agile tools like Jenkins and TravisCI.

- Screenshot generation: Captures and documents web application behavior through screenshot generation.

- Automation of website actions: Facilitates easy automation of website actions, including form submissions and UI testing.

Mobile-based Automation Framework

Mobile-based automation uses automated testing frameworks to assess mobile applications' functionality, performance, and user experience. It enables the creation of automated scripts to simulate interactions on mobile devices, ensuring comprehensive testing across different platforms and devices. This approach is crucial for delivering high-quality mobile applications that meet user expectations and perform reliably in diverse environments.

Some of the best mobile app testing frameworks are mentioned below.

Appium

Appium is an open-source test automation framework designed for testing native, hybrid, and mobile web applications across diverse devices and platforms. It operates based on the WebDriver protocol, a standard API widely used for automating web browsers. Appium's flexibility allows users to write tests in their preferred programming language, such as Java, Python, or JavaScript.

Some of the features of Appium are given below.

- Language and framework flexibility: Appium offers flexibility, allowing testing of any mobile application using various programming languages and test frameworks with full access to back-end APIs.

- Cross-platform compatibility: Appium stands out with cross-platform compatibility, enabling the same API to run tests on multiple platforms and operating systems. This promotes code reusability and efficiency.

- No recompilation required: With Appium, testers are not required to recompile the mobile application every time they run automation tests, streamlining the testing process and saving time.
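Because Appium speaks the WebDriver protocol, a test session starts with a new-session request whose body carries W3C capabilities; Appium-specific capabilities use the `appium:` vendor prefix (the Appium 2 convention). The sketch below only builds that JSON body with Python's standard library, so it runs without a device or server. The device name and app path are placeholder assumptions, and in practice a client library such as the Appium Python client builds and sends this request for you.

```python
import json


def android_session_payload(device_name: str, app_path: str) -> str:
    """Build a W3C WebDriver new-session request body for an Android app on Appium."""
    always_match = {
        "platformName": "Android",                # standard W3C capability
        "appium:automationName": "UiAutomator2",  # Appium's Android automation driver
        "appium:deviceName": device_name,         # vendor-prefixed Appium capability
        "appium:app": app_path,                   # path or URL of the .apk to install
    }
    return json.dumps({"capabilities": {"alwaysMatch": always_match}}, indent=2)


# Placeholder values (assumptions): an emulator ID and a local build path.
body = android_session_payload("emulator-5554", "/path/to/app-debug.apk")
print(body)
```

The body would be POSTed to the server's session endpoint (the exact base path depends on the Appium version and server configuration). Switching to iOS mainly means changing `platformName` and the automation driver, which is what lets one API cover several platforms.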

Watch this complete video tutorial to start mobile app testing with Appium, get valuable insights and practical knowledge, and boost your testing process.

Espresso

Espresso is a mobile test automation framework specifically designed for Android applications. Developed by Google, it seamlessly integrates with the Android SDK. Espresso offers a range of features that simplify the process of writing, running, and maintaining UI tests for Android applications.

Some of the features of Espresso are given below.

- Simple API: It offers an easy-to-use API for UI test creation.

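- Fast and reliable: Tests run quickly and reliably on a wide range of Android devices and OS versions, because Espresso automatically synchronizes test actions with the app's UI thread.

- Powerful capabilities: Supports complex UI tests with features such as onData() for adapter-backed views and custom Hamcrest matchers.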

- Android SDK integration: Integrated with the Android SDK, Espresso ensures easy setup and execution of tests.

XCUITest

XCUITest is a mobile test automation framework specifically designed for iOS applications. Developed by Apple, it is seamlessly integrated with Xcode. XCUITest comes equipped with features that simplify the process of writing, running, and maintaining UI tests for iOS applications.

Some of the features of XCUITest are given below.

- Simple API: XCUITest offers a straightforward and user-friendly API for writing UI tests.

- Fast and reliable tests: Tests written with XCUITest are known for speed and reliability. They can be executed on various simulators and devices.

- Powerful testing capabilities: XCUITest provides robust features for crafting complex UI tests, including support for gestures, custom matchers, and test hooks.

- Xcode integration: Integrated with Xcode, XCUITest ensures seamless setup and execution of tests within the iOS development environment.

Selendroid

Selendroid, often called Selenium for Android mobile apps, is a testing tool for native and hybrid mobile applications. It allows testers to conduct comprehensive mobile application testing on Android platforms. Like Selenium's cross-browser testing capabilities, Selendroid can execute parallel test cases across multiple devices.

Some of the features of Selendroid are given below.

- Native and hybrid Android applications: Enables automated testing for native and hybrid Android applications.

- Web app testing: Extends automation beyond native and hybrid apps to mobile web apps running in the Android browser.

- Inspector tool: Provides an inspector tool for inspecting app UI elements and generating test scripts based on the identified elements.

- Gesture support: Supports automated gestures such as swiping, tapping, and pinching, facilitating realistic user interactions in automated tests.

Performance-based Automation Framework

Performance-based automation testing frameworks and tools focus on the testing processes related to the performance aspects of software applications. It involves creating automated scripts to simulate and evaluate the performance of an application under different conditions, such as varying user loads and network conditions. This approach ensures that software functions correctly and performs optimally, meeting the expected levels of responsiveness and scalability.

Some of the performance-based automation testing tools are mentioned below.

Apache JMeter

Apache JMeter is a robust open-source tool developed by the Apache Software Foundation. Widely used for load and performance testing, it proves effective for evaluating the performance of web applications, APIs, databases, and server-based systems. JMeter facilitates the simulation of diverse scenarios and the generation of loads to assess system performance under varying conditions.

JMeter's primary objective is to assess a system's capability to manage a designated workload, measure response times and throughput, and pinpoint potential performance bottlenecks.
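The core metrics such a tool reports are straightforward to compute from raw samples. As a worked sketch, the code below derives average response time, the 90th percentile, error rate, and throughput (requests per second over the test window) from a hypothetical list of samples. It uses the nearest-rank percentile method, which may differ slightly from the interpolation JMeter or any other tool applies.

```python
import math

# Hypothetical samples: (start time in s, elapsed time in ms, success flag)
SAMPLES = [
    (0.0, 120, True), (0.5, 180, True), (1.0, 95, True), (1.5, 300, False),
    (2.0, 150, True), (2.5, 210, True), (3.0, 130, True), (3.5, 160, True),
    (4.0, 500, True), (4.5, 110, True),
]


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize_samples(samples):
    """Average, p90, error rate, and throughput over the first-start to last-end window."""
    elapsed = [ms for _, ms, _ in samples]
    window_start = min(t for t, _, _ in samples)
    window_end = max(t + ms / 1000 for t, ms, _ in samples)
    return {
        "avg_ms": sum(elapsed) / len(elapsed),
        "p90_ms": percentile(elapsed, 90),
        "error_rate": sum(not ok for _, _, ok in samples) / len(samples),
        "throughput_rps": len(samples) / (window_end - window_start),
    }


stats = summarize_samples(SAMPLES)
print(stats)
```

For these samples the average is 195.5 ms but the 90th percentile is 300 ms, which is why percentiles, not just averages, are used to spot bottlenecks.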

Some of the features of Apache JMeter are given below.

- Distributed testing: Facilitates realistic load generation by enabling distributed testing across multiple machines.

- Protocol support: Supports various protocols, including HTTP, HTTPS, SOAP, JDBC, and FTP, providing comprehensive performance testing capabilities for diverse applications and APIs.

- Reporting and analysis: Offers extensive reporting and analysis features with graphical representations to identify and address performance bottlenecks.

- User-friendly interface: Features a user-friendly interface for easy script creation and customization, using either a GUI or scripting with Groovy.

- Plugin ecosystem: The plugin ecosystem enhances JMeter's functionality by introducing advanced features and extending its capabilities.

LoadRunner

LoadRunner is a performance testing tool that simulates and evaluates the performance of both web and mobile applications under diverse load conditions. Supporting multiple protocols, LoadRunner is equipped with advanced scripting capabilities and provides extensive reporting and analysis features. Its primary application lies in performance testing, which is invaluable for assessing applications' performance under different loads and stress levels.

Some of the features of LoadRunner are given below.

- Diverse protocol support: It accommodates various protocols and technologies, including web applications, databases, ERP systems, and mobile applications, for comprehensive testing coverage.

- Visual script editor: It features an intuitive visual script editor, enhancing efficiency in creating and editing test cases through a user-friendly graphical interface.

- Robust reporting and analysis: It offers powerful analysis tools and detailed reports, empowering testers to thoroughly examine and interpret test results for actionable insights.

- Concurrency simulation: It can simulate many concurrent users, enabling effective large-scale performance testing to identify system behavior under heavy loads.
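Concurrency simulation boils down to many virtual users executing the same transaction at once while the tool records response times. The sketch below mimics that with Python threads against a hypothetical in-process `checkout_transaction` guarded by a five-slot semaphore (an assumed bottleneck); it is a conceptual illustration, not LoadRunner's own scripting API.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical bottleneck: a backend that can serve only 5 requests at a time.
BACKEND_SLOTS = threading.Semaphore(5)


def checkout_transaction() -> float:
    """Stand-in transaction: wait for a free backend slot, then 'work' for 50 ms.

    Returns the measured response time in seconds, including any queueing delay.
    """
    start = time.perf_counter()
    with BACKEND_SLOTS:
        time.sleep(0.05)
    return time.perf_counter() - start


def virtual_user(iterations: int) -> list[float]:
    """One simulated user running the transaction several times in a row."""
    return [checkout_transaction() for _ in range(iterations)]


def run_load(users: int, iterations: int) -> list[float]:
    """Start `users` concurrent virtual users and collect every response time."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(virtual_user, [iterations] * users))
    return [t for timings in per_user for t in timings]


timings = run_load(users=20, iterations=3)
print(f"{len(timings)} transactions, fastest {min(timings) * 1000:.0f} ms, "
      f"slowest {max(timings) * 1000:.0f} ms")
```

Because 20 users compete for 5 slots, the slowest response times include queueing delay well above the 50 ms of actual work, which is exactly the kind of bottleneck concurrency testing is meant to expose.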

k6

k6 is an open-source performance testing tool built specifically for load testing web applications and APIs. Designed with a developer-centric approach, it enables developers and testers to assess the performance and scalability of their systems under diverse load conditions.

It is known for its user-friendly design, adaptability, and seamless integration with the contemporary development ecosystem. k6 empowers teams to measure and optimize system performance efficiently.

Some of the features of k6 are given below.

- JavaScript-focused scripting: k6 test scripts are written in JavaScript, so developers already familiar with the language can start writing tests quickly.

- Scalable load generation: The tool simulates large numbers of virtual users and can scale load generation across multiple machines to replicate real-world user behavior.

- Real-time monitoring and analysis: k6 provides real-time monitoring and live analysis of test results, enabling the swift identification of performance issues during testing.

- Open-source customization: As an open-source solution, k6 can be extended with custom extensions (built with xk6), enhancing its functionality for specific testing requirements.

- Performance checks and thresholds: k6 supports performance checks and thresholds, facilitating the validation of system behavior and the establishment of the pass or fail criteria.
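k6 thresholds are pass/fail criteria written as short expressions such as p(95)<500 (95th-percentile duration under 500 ms). The Python sketch below re-implements a small subset of that expression style to show how such criteria gate a test run; it is an illustration, not k6 itself, and it supports only `avg`, `max`, and `p(N)` (nearest-rank) compared with `<` or `<=`. The request durations are hypothetical.

```python
import math
import re

# Supported subset (assumption): avg, max, or p(N), compared with < or <=.
THRESHOLD_RE = re.compile(r"^(avg|max|p\((\d+(?:\.\d+)?)\))(<=|<)(\d+(?:\.\d+)?)$")


def aggregate(values, name, pct=None):
    """Compute the aggregate a threshold expression refers to."""
    if name == "avg":
        return sum(values) / len(values)
    if name == "max":
        return max(values)
    ordered = sorted(values)  # p(N): nearest-rank percentile
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_threshold(expression, values):
    """Return (passed, observed) for an expression such as 'p(95)<500'."""
    match = THRESHOLD_RE.match(expression.replace(" ", ""))
    if match is None:
        raise ValueError(f"unsupported threshold: {expression!r}")
    agg_text, pct_text, op, limit_text = match.groups()
    name = "p" if agg_text.startswith("p(") else agg_text
    observed = aggregate(values, name, float(pct_text) if pct_text else None)
    limit = float(limit_text)
    passed = observed < limit if op == "<" else observed <= limit
    return passed, observed


# Hypothetical request durations (ms) collected during a load test.
durations_ms = [120, 180, 95, 310, 150, 210, 130, 160, 480, 110]
for expr in ["avg<200", "p(95)<500", "max<=400"]:
    print(expr, check_threshold(expr, durations_ms))
```

In k6 itself, thresholds are declared in the script's options against a metric such as http_req_duration, and a failed threshold makes the run exit with a non-zero code, which lets a CI pipeline fail the build.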

To explore k6 and its features, as well as to learn how to write your first k6 testing script, refer to the k6 testing tutorial for step-by-step guidance and instructions and get detailed insights on k6.

BlazeMeter

BlazeMeter is a cloud-based platform for application performance and load testing. Originally acquired by CA Technologies, which is now part of Broadcom, it lets developers and testers create and execute performance tests with ease. BlazeMeter supports popular open-source testing tools such as Apache JMeter, Gatling, and Selenium WebDriver, providing a comprehensive solution for testing and optimizing application performance.

Some of the features of BlazeMeter are given below.

- Scalable load generation: Allows scalable load testing with cloud infrastructure, simulating high user loads for performance assessment.

- Open-source tool support: It runs tests built with JMeter, Gatling, and Selenium and provides a user-friendly GUI for easy test scenario creation.

- Real-time monitoring: It offers real-time monitoring of key metrics for efficient bottleneck identification.

- Collaboration and team management: Facilitates seamless collaboration among team members in test creation, execution, and analysis.

API-Based Automation Framework

API-based automation testing frameworks and tools streamline software testing by automating processes related to application programming interfaces (APIs), ensuring robustness and reliability in software development. It enables efficient validation of API functionality, early issue identification, and faster delivery of high-quality applications. This approach is integral to modern software development, optimizing testing practices for enhanced efficiency and stability.
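At its core, an automated API test sends a request and asserts on the status code, headers, and response body. The self-contained sketch below starts a tiny local HTTP server with Python's standard library, exposing a hypothetical /api/users/1 endpoint, and checks its response with `urllib`. Tools such as Postman, SoapUI, and REST Assured wrap this same request-then-assert pattern in richer interfaces.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class UserApiHandler(BaseHTTPRequestHandler):
    """Hypothetical API under test: GET /api/users/1 returns one user as JSON."""

    def do_GET(self):
        if self.path == "/api/users/1":
            status, body = 200, {"id": 1, "name": "Alice"}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):  # silence per-request logging
        pass


def check_get_user(base_url: str) -> dict:
    """Functional API test: verify status code, content type, and response body."""
    with urllib.request.urlopen(f"{base_url}/api/users/1", timeout=5) as resp:
        assert resp.status == 200
        assert resp.headers["Content-Type"] == "application/json"
        data = json.loads(resp.read())
    assert data == {"id": 1, "name": "Alice"}
    return data


server = HTTPServer(("127.0.0.1", 0), UserApiHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
try:
    user = check_get_user(f"http://127.0.0.1:{server.server_port}")
    print("API test passed:", user)
finally:
    server.shutdown()
    server.server_close()
```

Because the check is plain code with no UI, it runs in milliseconds and fits naturally into a CI/CD pipeline next to unit tests.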

Some of the API-based automation testing tools are mentioned below.

Postman

Postman is a widely used API testing tool. Its versatility enables users to create various tests, from functional and integration tests to regression tests, and run them in CI/CD pipelines from the command line using Newman, Postman's command-line collection runner.

Some of the features of Postman are given below.

- User-friendly interface: It boasts an approachable, easy-to-use interface complemented by convenient code snippets.

- Format support: It supports multiple HTTP methods, Swagger, and RAML formats, providing flexibility in crafting and testing APIs.

- API schema support: It offers extensive support for API schemas, facilitating the generation of collections and API elements.

- Test suite management: Users can create and manage test suites, execute them with parameterization, and debug as needed.
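Postman collections are usually run from the command line with Newman, Postman's CLI collection runner. As a rough sketch, assuming Newman is installed (for example with `npm install -g newman`) and that `collection.json`, `env.json`, and `data.csv` are hypothetical files exported from Postman, a Java-driven build step could assemble and launch a run as shown below. `-e` supplies an environment, `-d` supplies iteration data for parameterized runs, and `-r cli,junit` with `--reporter-junit-export` writes a JUnit XML report that most CI servers can read.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

class NewmanRunner {
    // Builds the Newman command line; all file names are placeholders for exported Postman files.
    static List<String> buildCommand(String collection, String environment, String dataFile, String junitReport) {
        List<String> cmd = new ArrayList<>(List.of("newman", "run", collection));
        if (environment != null) {
            cmd.add("-e");
            cmd.add(environment);
        }
        if (dataFile != null) {
            // Each row of the data file drives one iteration of the collection (parameterization).
            cmd.add("-d");
            cmd.add(dataFile);
        }
        cmd.add("-r");
        cmd.add("cli,junit");
        cmd.add("--reporter-junit-export");
        cmd.add(junitReport);
        return cmd;
    }

    // Runs Newman and returns its exit code (non-zero when the run has failures); needs newman on PATH.
    static int run(List<String> command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command).inheritIO().start();
        return process.waitFor();
    }

    public static void main(String[] args) {
        List<String> command = buildCommand("collection.json", "env.json", "data.csv", "results/newman.xml");
        System.out.println(String.join(" ", command));
        // In a real pipeline step: System.exit(run(command));
    }
}
```

Most teams call `newman run` directly from their CI configuration; wrapping it in Java only makes sense when the pipeline is already driven from Java code. On Windows, the globally installed executable may need to be invoked as `newman.cmd`.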

SoapUI

SoapUI is an open-source functional testing tool developed by SmartBear and recognized as a leader in the Gartner Magic Quadrant for Software Test Automation. It is a comprehensive API test automation framework for Representational State Transfer (REST) and Simple Object Access Protocol (SOAP) services.

While SoapUI is not designed for web or mobile app testing, it is a popular choice for API and services testing. Notably, it focuses on headless functional testing, exercising APIs directly rather than through a user interface.

Some of the features of SoapUI are given below.

- Thorough API testing: It provides a comprehensive set of tools for API testing, supporting REST, SOAP, and GraphQL APIs on a single platform.

- Open-source code: Because SoapUI is open source, users can access and modify its source code, tailoring the tool to specific testing requirements.

- One-stop solution: It covers the essential aspects of API evaluation, from functional and regression testing to load testing, reducing the need for multiple testing tools.
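For context on what SoapUI exercises: a SOAP request is an XML envelope, typically sent over HTTP POST. The Java sketch below builds a SOAP 1.1 envelope for a hypothetical `GetUser` operation in a hypothetical `http://example.com/users` namespace, then parses it with the JDK's namespace-aware DOM parser to confirm that it is well-formed and that the operation sits inside `soap:Body`. SoapUI-style assertions automate checks of this kind against real service responses; this code is only an illustration, not SoapUI's API.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

class SoapEnvelopeCheck {
    static final String SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"; // SOAP 1.1 envelope namespace
    static final String SERVICE_NS = "http://example.com/users";               // hypothetical service namespace

    // Builds a SOAP 1.1 request envelope for a hypothetical GetUser operation.
    static String buildGetUserEnvelope(int userId) {
        return "<soap:Envelope xmlns:soap=\"" + SOAP_NS + "\" xmlns:u=\"" + SERVICE_NS + "\">"
                + "<soap:Header/>"
                + "<soap:Body><u:GetUser><u:id>" + userId + "</u:id></u:GetUser></soap:Body>"
                + "</soap:Envelope>";
    }

    // Parses the envelope (throws if malformed) and returns the local name of the first element in the Body.
    static String firstBodyOperation(String envelope) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        DocumentBuilder builder = factory.newDocumentBuilder();
        Document doc = builder.parse(new ByteArrayInputStream(envelope.getBytes(StandardCharsets.UTF_8)));
        NodeList bodies = doc.getElementsByTagNameNS(SOAP_NS, "Body");
        if (bodies.getLength() != 1) {
            throw new IllegalStateException("expected exactly one soap:Body");
        }
        NodeList children = bodies.item(0).getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            if (children.item(i) instanceof Element) {
                return children.item(i).getLocalName();
            }
        }
        throw new IllegalStateException("soap:Body is empty");
    }

    public static void main(String[] args) throws Exception {
        String envelope = buildGetUserEnvelope(42);
        System.out.println("operation in body: " + firstBodyOperation(envelope));
    }
}
```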

REST Assured

REST Assured is a widely used Java library for testing RESTful APIs and is particularly suitable for developers comfortable with Java. It streamlines API testing with a readable, fluent syntax, so testers can construct requests without writing low-level HTTP client code.

Some of the features of REST Assured are given below.

- Simplified testing process: It hides much of the boilerplate of working with HTTP directly, so users can write API tests without handling low-level protocol details.

- Java compatibility: Designed specifically for Java developers, REST Assured integrates into Java projects and requires Java 8 or higher.

- Holistic integration testing: It facilitates integration testing, allowing users to comprehensively test API endpoints and their interactions with other components or systems.

Apigee

Apigee is a cross-cloud API management platform that also lets users test and assess API performance, and supports the development and maintenance of APIs. Additionally, Apigee supports compliance with PCI, HIPAA, and SOC 2 requirements and helps protect personally identifiable information (PII) in applications. Notably, it was named a Leader in the 2019 Gartner Magic Quadrant for Full Life Cycle API Management, its fourth consecutive recognition.

This tool is designed for digital businesses, particularly those building data-rich, mobile-driven APIs and applications. Starting with version 4.19.01, released in 2019, Apigee offers more flexible API management, including OpenAPI 3.0 support, TLS security, self-healing with apigee-monit, and improvements in virtual host management. Subsequent releases have included minor bug fixes and security enhancements.

Some of the features of Apigee are given below.

- Comprehensive API management: Enables designing, implementing, monitoring, and extending APIs for a holistic approach to API management.

- Multi-step functionality with JavaScript: Supports multi-step API logic and custom JavaScript for flexible API implementation.

- Performance issue definition: Defines and identifies performance issues by closely tracking API traffic, error rates, and response times for thorough performance assessment.

- Cloud deployment with OpenAPI specifications: Makes it easy to create API proxies from OpenAPI specifications and deploy them to the cloud.

Automated Testing in DevOps

Automating tests involves using software tools to execute predefined test cases on a software application, replacing manual testing efforts. This process is integral to modern software development, enhancing efficiency and accuracy, and is a key component in Agile and DevOps practices for continuous delivery.

As many organizations have moved to Agile and DevOps methodologies for software delivery, the demand for efficiency and speed has grown, making automated testing indispensable for modern organizations. According to GMInsights, the automation testing market was projected to grow at a CAGR of 7% to 12% through the end of 2025.

DevOps streamlines software development workflows, accelerating processes like build, test, configuration, deployment, and release. Automated testing is integral to continuous integration and delivery (CI/CD), enhancing efficiency and enabling frequent software releases.

Quality assurance engineers focus on automated integration and end-to-end tests, while developers write and run unit tests. Running these tests early in the DevOps CI/CD pipeline helps verify that each component works as intended. Product managers contribute to functional testing, for example with a black-box approach, to ensure an optimal user experience. This collaboration improves the overall efficiency of the DevOps process.
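Running fast, developer-owned unit tests first and the slower integration and end-to-end suites afterwards is what lets a CI/CD pipeline fail fast. The plain-Java sketch below models that ordering with hypothetical stage names and placeholder checks; it is not tied to any particular CI server, where this logic normally lives in the pipeline configuration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

class PipelineSketch {
    // Runs stages in insertion order and stops at the first failing stage.
    // Returns the names of the stages that actually ran.
    static List<String> runStages(Map<String, BooleanSupplier> stages) {
        List<String> executed = new ArrayList<>();
        for (Map.Entry<String, BooleanSupplier> stage : stages.entrySet()) {
            executed.add(stage.getKey());
            if (!stage.getValue().getAsBoolean()) {
                System.out.println("Stage failed, stopping pipeline: " + stage.getKey());
                break;
            }
        }
        return executed;
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the stages in the order they were added.
        Map<String, BooleanSupplier> stages = new LinkedHashMap<>();
        stages.put("unit tests (developers)", () -> true);   // placeholder: fast, runs on every commit
        stages.put("integration tests (QA)", () -> false);   // placeholder: simulated failure
        stages.put("end-to-end tests (QA)", () -> true);     // never reached because an earlier stage failed
        System.out.println("Stages run: " + runStages(stages));
    }
}
```

Putting the cheapest checks first means most broken commits are rejected within minutes, before the expensive end-to-end stage consumes any resources.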

Benefits of Test Automation in DevOps

By automating repetitive testing processes, development teams can achieve faster feedback, improved accuracy, and increased test coverage. This ensures a more robust and streamlined software development lifecycle. Let's delve into the key benefits of automated testing and how it positively impacts the development and delivery of software products.

- Enhanced team collaboration: Quality assurance architects and developers collaborate more efficiently, contributing to an improved software lifecycle.

- Simplified scaling: When QA and DevOps responsibilities are shared across decentralized development teams, operations within each squad are easier to scale and streamline.

- Increased customer satisfaction: Faster, more reliable releases and quicker resolution of feedback and issues improve customer satisfaction, which can lead to more referrals.

- Efficient incident management: DevSecOps teams can quickly detect vulnerabilities and potential threats at various points in the application, making incidents easier to manage.

Common Myths About Test Automation

Myth: Test automation will eventually replace manual testing entirely.

Reality: Test automation enhances efficiency but cannot entirely replace manual testing, especially in exploratory and user-experience testing scenarios.

Myth: Automating tests is quick and effortless.

Reality: Test automation requires time, effort, and resources for effective implementation. Continuous optimization and improvement are necessary to keep up with changing software requirements.

Myth: No need for human testers with automation.

Reality: Human testers are essential for creating, maintaining, and evaluating automated tests. Automation complements human testing but cannot replace human testers' unique insights and context.

These myths highlight common misconceptions about test automation's scope, effort, and role in the software testing process.

Getting Started with Automation Testing

Manual testing is a traditional approach that involves human testers carefully executing test cases to identify defects, ensure functionality, and assess overall system performance. While manual testing provides a hands-on and intuitive evaluation, it comes with its own set of challenges.

These challenges are most pronounced when testing large applications across multiple browsers. They include:

- Time-consuming: Manual testing is labor-intensive, consuming valuable time, especially for extensive applications.

- Prone to errors: Human testers can make mistakes, introducing the risk of overlooking critical scenarios.

- Incomplete coverage: Time and resource constraints can cause manual testing to miss some scenarios, leaving defects undetected.

- Limited scalability: Scaling manual testing for complex projects becomes challenging and inefficient.

- Cross-browser complexity: Ensuring consistent functionality across browsers is difficult in manual testing setups.

Automation testing with Selenium is a practical way to address these manual testing challenges. Selenium is well suited to cross-browser testing and supports parallel execution. With Selenium Grid, WebDriver tests can run in browsers on remote machines, enabling thorough testing at the UI layer that is hard to achieve manually.

For local automated browser testing, Selenium Grid offers a straightforward setup that lets you run tests against various browser versions and operating systems. Download and install the driver for each browser you want to test; Selenium 4.6 and later can also download drivers automatically through Selenium Manager.

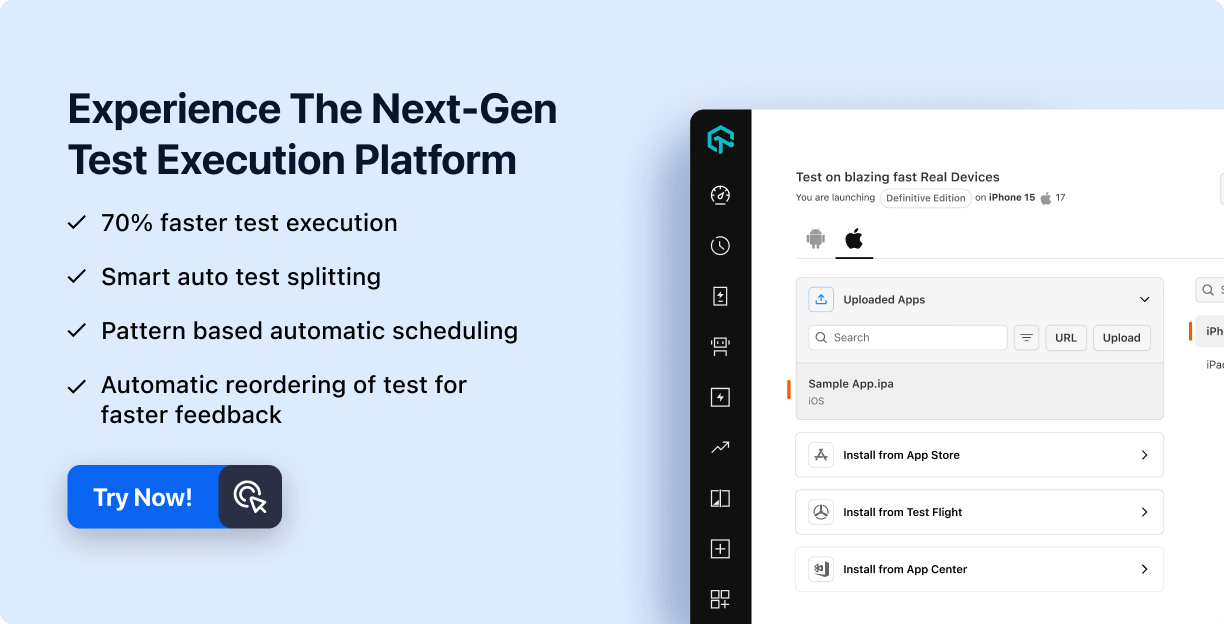

However, for more scalable browser compatibility testing across many combinations of browsers, devices, and operating systems, a cloud-based platform such as LambdaTest is recommended.

LambdaTest is an AI-Native test orchestration and execution platform that lets you run manual and automated tests at scale across 3000+ real devices, browsers, and OS combinations.

On LambdaTest, Selenium tests utilize the grid's capabilities to execute tests simultaneously across multiple combinations, enhancing automation testing coverage and providing greater confidence in the product.


LambdaTest supports integration with a wide range of frameworks and tools for web and mobile app test automation, such as Selenium, Cypress, Playwright, Puppeteer, Taiko, Appium, Espresso, and XCUITest.

It supports parallel testing, which lets you run several tests concurrently across various browsers and devices. This helps scale your testing efforts and reduces the time needed to run automated tests.

LambdaTest gives you access to a variety of browsers, operating systems, and devices without setting up and maintaining your own infrastructure, which can lower costs and make it easier to scale your testing efforts.
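Conceptually, parallel testing dispatches the same test to several browser/OS configurations at once instead of one after another, so total wall-clock time approaches that of the slowest single run. The plain-Java sketch below shows the pattern with an `ExecutorService`. The configuration strings and the `runTest` body are hypothetical stand-ins for real remote WebDriver sessions, and in practice the thread count would be limited to the number of concurrent sessions available to you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelRunSketch {
    // Hypothetical stand-in for one test run on one browser/OS configuration.
    static String runTest(String configuration) throws InterruptedException {
        Thread.sleep(200); // simulates the time a real browser session would take
        return configuration + ": PASSED";
    }

    // Runs the test on every configuration concurrently, using at most maxParallel threads.
    static List<String> runInParallel(List<String> configurations, int maxParallel)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(maxParallel);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String config : configurations) {
                futures.add(pool.submit(() -> runTest(config)));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get()); // blocks until that run finishes; order matches the input list
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        List<String> configs = List.of("Chrome / Windows 11", "Firefox / macOS", "Edge / Windows 10");
        long start = System.nanoTime();
        List<String> results = runInParallel(configs, configs.size());
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        results.forEach(System.out::println);
        System.out.println("Elapsed ms: " + elapsedMs + " (a sequential run would need about 600 ms)");
    }
}
```

In real Selenium suites, the same effect is usually achieved through the test runner (for example, TestNG's `parallel` setting) rather than hand-written thread pools.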

Some of the advantages of LambdaTest are below.

- Extensive browser and OS coverage: Access 3000+ browser and operating system combinations, enabling comprehensive testing across various devices and browsers.

- Parallel testing capabilities: Execute multiple tests concurrently on different browsers and devices, leveraging parallel testing to reduce test execution time significantly.

- Seamless integration with top automation frameworks: Integrate seamlessly with leading test automation frameworks, streamlining the testing process and enabling swift initiation of tests for your applications.

- Advanced debugging tools: Utilize advanced debugging tools like video recording, network logs, and console logs to efficiently identify and address bugs, ensuring a streamlined debugging process.

- Scalable mobile device lab: Access a scalable lab comprising thousands of real Android and iOS devices, offering a diverse testing environment for mobile applications.

To learn more about the LambdaTest platform, refer to the LambdaTest documentation and demo video, which cover the features that can help you speed up your automation testing process.


In the following section, we will see how to run browser automation tests on a cloud testing platform like LambdaTest to understand its functionality better.

How to Perform Automation Testing on the Cloud?

In this section, let us conduct browser automation testing on LambdaTest, a platform that facilitates seamless cross-browser testing for enhanced efficiency and comprehensive test coverage. Let's get started by following the step-by-step procedure.

Step 1: Create a LambdaTest account.

Step 2: To find your Username and Access Key, click your profile avatar on the LambdaTest dashboard and select Account Settings from the list of options.

Step 3: Copy your Username and Access Key from the Password & Security tab.
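Rather than pasting the Username and Access Key directly into source files, many teams read them from environment variables so that they stay out of version control. Here is a minimal sketch, assuming environment variables named `LT_USERNAME` and `LT_ACCESS_KEY` (names chosen for illustration) and LambdaTest's Selenium hub address `hub.lambdatest.com/wd/hub`. It builds the authenticated remote URL that a `RemoteWebDriver` would connect to, and assumes the credentials contain only URL-safe characters.

```java
import java.net.MalformedURLException;
import java.net.URI;
import java.net.URL;
import java.util.Map;

class LambdaTestHubUrl {
    // Builds the authenticated Selenium hub URL; assumes credentials contain only URL-safe characters.
    static URL hubUrl(String username, String accessKey) throws MalformedURLException {
        if (username == null || username.isBlank() || accessKey == null || accessKey.isBlank()) {
            throw new IllegalArgumentException("LambdaTest username and access key must be set");
        }
        return URI.create("https://" + username + ":" + accessKey + "@hub.lambdatest.com/wd/hub").toURL();
    }

    // Reads credentials from hypothetical environment variable names.
    static URL hubUrlFromEnvironment(Map<String, String> env) throws MalformedURLException {
        return hubUrl(env.get("LT_USERNAME"), env.get("LT_ACCESS_KEY"));
    }

    public static void main(String[] args) throws Exception {
        URL url = hubUrlFromEnvironment(System.getenv());
        // Log only the host and path so the access key does not leak into CI logs.
        System.out.println("Selenium hub: " + url.getHost() + url.getPath());
    }
}
```

Passing `System.getenv()` as a `Map` keeps the lookup testable, since a test can supply its own map instead of real environment variables.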

Step 4: Use the LambdaTest Capabilities Generator to generate capabilities specifying your desired browser, browser version, and operating system, and copy the resulting configuration.

Step 5: Now that you have copied your Username, Access Key, and capabilities, paste them into your test script as shown below.

Note: The code below only shows the structure, indicating where to add the Username, Access Key, and capabilities.

import static org.testng.Assert.assertTrue;
import java.net.URL;
import java.util.concurrent.TimeUnit;
import org.openqa.selenium.By;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeOptions;
import org.openqa.selenium.remote.LocalFileDetector;
import org.openqa.selenium.remote.RemoteWebDriver;
import org.testng.annotations.AfterClass;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.Test;

public class LamdaTestUploadFileRemotely {

    private RemoteWebDriver driver;

    @BeforeClass
    public void setUp() throws Exception {
        // LambdaTest capabilities: replace the placeholders with the Username and Access Key from Step 3.
        ChromeOptions capabilities = new ChromeOptions();
        capabilities.setCapability("user", "<username>");
        capabilities.setCapability("accessKey", "<accesskey>");