
- April 06 2023

70 JUnit Interview Q&A : Sharpen Testing Skills!

Get ready to ace your JUnit interviews in 2023 with our comprehensive list of 90 questions and answers that will help you sharpen your testing skills.


OVERVIEW

Software engineering is a dynamic field that requires a solid understanding of programming languages, data structures, algorithms, and system design. As technology evolves, the demand for skilled software engineers continues to grow, making it essential for candidates to be well-prepared for interviews.

Preparing for software engineering interviews is crucial because these discussions not only assess your technical knowledge but also evaluate your problem-solving abilities and communication skills. A comprehensive set of software engineering interview questions can help you grasp core concepts and articulate your thoughts clearly, increasing your chances of success in landing your desired role.


Fresher-Level Software Engineering Interview Questions

Here are some essential software engineering interview questions for freshers. These questions cover fundamental concepts across various software engineering principles and practices, helping you build a solid foundation in the field.

By preparing for these questions, you can enhance your understanding and effectively showcase your skills during interviews.

1. What Is Software Engineering?

Software engineering is the branch of computer science that applies a systematic, disciplined, and quantifiable approach to software development, deployment, and maintenance. It focuses on methodologies, tools, and techniques for building reliable, scalable, and efficient software. Defining it is one of the most frequently asked software engineering interview questions.

2. What Are the Characteristics of Software?

Here are some of the key characteristics of software:

- Functionality: To be functional, the software has to satisfy its predefined requirements and perform the tasks for which it was developed. It covers the features and capabilities the software provides and how completely it carries out its tasks. Functionality can be rated using criteria such as accuracy, suitability, and interoperability with other systems.

- Usability: Usability is the ease with which users can interact with the software. It includes factors such as learnability, efficiency of use, and error handling.

- Performance: Performance attributes are responsiveness, processing efficiency, and resource usage. Some key performance indicators include throughput, latency, and scalability.

- Reliability: Reliability is the degree to which the software consistently performs its intended functions, without failure, within acceptable limits. It includes availability, fault tolerance, and recoverability.

- Maintainability: Maintainability refers to how easily the software can be modified to correct defects, improve performance, or adapt to changing requirements. It encompasses modularity, readability, and testability.

3. Define SDLC

SDLC stands for Software Development Life Cycle. It is a structured process that outlines how software is planned, analyzed, designed, implemented, tested, and maintained. The life cycle describes methods to improve software quality and the overall development process.

It plays a key role in the structured approach to software development, ensuring projects are completed efficiently and with high standards. Understanding the SDLC is crucial for developers, making it one of the most commonly asked software engineering interview questions.

4. What Stages Are in the SDLC?

The SDLC defines the tasks a software engineer or developer needs to perform at each step. It involves six major stages:

- Planning: The initial stage involves determining the project’s scope, objectives, and feasibility. It comprises allocating resources, assessing risk, and developing a project strategy.

- Defining requirements: During this stage, stakeholders provide detailed requirements. This includes understanding user needs, system functionalities, and constraints for ensuring the final product satisfies expectations.

- Design: In this stage, requirements are translated into a blueprint for the software. This involves architectural design, interface design, and data modeling to develop a detailed implementation strategy.

- Development and Testing: In this stage, code is written according to the specifications produced during the design stage. Testing is carried out alongside development to find and fix bugs, so that the application works correctly and meets quality standards.

- Deployment: In this stage, the software is deployed to production. This involves activities such as installation, configuration, and user training.

- Maintenance: Following deployment, the software enters the maintenance stage. This includes regular updates, bug fixes, and additions to keep the application working and up to date.

5. What Are Some Software Development Models?

Some of the software development models are:

- Waterfall Model

- V-Model

- Incremental Model

- RAD Model

- Iterative Model

- Spiral Model

- Prototype Model

- Agile Model

6. Why Is the Spiral Model Popular?

The spiral model is popular in software engineering due to its versatility and risk management approach. It combines aspects of both the Waterfall and Iterative models, allowing for continuous refinement through multiple iterations or "spirals."

Each iteration includes phases like planning, risk analysis, engineering, and evaluation, helping to identify and mitigate risks early in the development process.

This risk-focused approach is one reason the spiral model often comes up in software engineering interview questions, as it demonstrates a balanced method of handling complex projects.

7. Mention Some Disadvantages of the Incremental Model

Some of the disadvantages of the incremental model are:

- Complexity

- Higher total cost than the Waterfall model

- Time-consuming

- Difficulty in accommodating changes

8. What Is Debugging?

Debugging is the process of identifying, analyzing, and fixing errors or bugs in a software application. It involves systematically reviewing code, either manually or using specialized tools, to pinpoint the cause of issues like incorrect logic, runtime errors, or unexpected behavior.

After identifying the problem, necessary corrections are made to ensure the program runs as expected. It is a crucial part of software development and testing, improving the quality and reliability of applications.

Debugging is a common software engineering interview question because of its importance in maintaining robust software.

9. What Steps Are Taken While Debugging?

Major steps in debugging are:

- Identifying the Bug: Recognizing that there is a problem with the code.

- Isolating the Source: Determining which part of the code contains the bug.

- Analyzing the Bug: Understanding the reason for the bug and how it impacts the software.

- Fixing the Bug: Changing the code to correct the underlying cause of the issue.

- Testing the Fix: Ensuring that the fix works and does not cause new problems.
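The steps above can be walked through on a small, hypothetical bug. The sketch below assumes a made-up function `average_buggy` whose divisor is off by one; it shows how the symptom is identified, how the faulty expression is isolated, and how the fix is checked, including an edge case the original code did not handle.

```python
def average_buggy(values: list[float]) -> float:
    """Hypothetical original code: the divisor is off by one."""
    return sum(values) / (len(values) - 1)


def average(values: list[float]) -> float:
    """Fixed version: divide by the item count and reject empty input."""
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


if __name__ == "__main__":
    data = [2, 4, 6]
    # 1. Identify: the result differs from the expected value 4.0.
    print(average_buggy(data))  # 6.0
    # 2-3. Isolate and analyze: sum(data) is 12 (correct), but the divisor
    #      is len(data) - 1 == 2 instead of 3.
    # 4-5. Fix, then test the fix, including the empty-list edge case.
    assert average(data) == 4.0
    try:
        average([])
    except ValueError as err:
        print("empty input rejected:", err)
```

Keeping the buggy and fixed versions side by side is only for illustration; in a real codebase the fix replaces the original, and the assertions become a regression test.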

10. How Do You Test Your Software System?

Testing a software system involves verifying that each component performs as intended and that the entire system runs seamlessly together.

The following are some popular types of testing methodologies used to verify software quality:

- Unit Testing: Testing individual components or modules of software.

- Integration Testing: Ensuring that different modules or services work well together.

- System Testing: Testing the complete software to verify whether it meets all requirements.

- Acceptance Testing: Validating the software against user requirements.

- Cross-browser Testing: Evaluating web applications across different browsers and devices to ensure consistent functionality and appearance.
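As an illustration of the first item, unit testing, here is a minimal sketch using Python's built-in `unittest` framework. The function `apply_discount` and its rules are hypothetical, invented only to give the tests something to check; JUnit and TestNG use a similar structure of test classes, test methods, and assertions.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce price by percent (0-100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self) -> None:
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self) -> None:
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)


if __name__ == "__main__":
    # Run the suite without calling sys.exit(), unlike unittest.main().
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

Each test checks one behavior of one unit in isolation, which is what distinguishes unit tests from the integration and system tests listed above.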

11. What Are the Different Categories of Software?

Software can be broadly classified into numerous categories, some of which are mentioned below:

- Scientific Software

- System Software

- Application Software

- Development Software

- Embedded Software

- AI Software

12. What Are Some Software Engineering Tools?

Software engineers use tools to help with development, testing, and maintenance. Some of the software engineering tools are mentioned below:

- Version Control: Git and SVN.

- IDEs: Visual Studio, IntelliJ IDEA, and Eclipse.

- Build Tools: Maven, Gradle, and Ant.

- Testing Tools: Selenium, JUnit, and TestNG.

- CI/CD Tools: Jenkins, Travis CI, CircleCI.

- Project Management: Jira, Trello, and Asana.

- Code Review: Crucible, GitHub Pull Requests.

- Collaboration: Slack and Microsoft Teams.

13. What Are the Software Engineering Categories?

Software engineering is classified into different groups based on distinct features of the development process, some of which are:

- Requirement Engineering: Collecting and specifying what software should perform.

- Design Engineering: Planning the architecture and design of software.

- Development: Writing and compiling code.

- Testing: Ensuring that the software functions as intended.

- Maintenance: Updating software that has been released.

- Project Management: Planning, execution, and completion of projects.

14. What Are Some Software Engineering Standards?

- IEEE 830: for software requirements specification

- IEEE 1016: for software design description

- IEEE 1028: for software reviews and audits

- IEEE 12207: for software life cycle processes

- ISO 9000: for quality management systems

- ISO 9126: for software quality characteristics

- ISO 25000: for software quality requirements and evaluation

- ISO 29119: for software testing

- CMMI Framework: defines best practices and methods for software engineering

15. What Are Some Software Engineering Principles?

Some software engineering principles are:

- Modularity

- Abstraction

- Encapsulation

- Low coupling

- High cohesion

- Inheritance

16. Define Abstraction

Abstraction simplifies interaction with complex systems by highlighting only the most important characteristics while concealing intricate details. This approach allows developers to focus on higher-level concepts without needing to worry about the underlying implementation.

Additionally, abstraction can improve safety by hiding an object's internal workings behind a defined interface, so that only approved operations can be performed. It is a fundamental concept often covered in software engineering interview questions, as it plays a key role in designing efficient and secure software systems.
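A minimal sketch of abstraction in Python, using the standard `abc` module. The `KeyValueStore` interface, the `InMemoryStore` class, and the `remember_user` function are hypothetical names chosen for illustration: callers depend only on `put` and `get`, while the dictionary that actually holds the data stays hidden.

```python
from abc import ABC, abstractmethod
from typing import Optional


class KeyValueStore(ABC):
    """The abstraction: what callers may do, not how it is done."""

    @abstractmethod
    def put(self, key: str, value: str) -> None: ...

    @abstractmethod
    def get(self, key: str) -> Optional[str]: ...


class InMemoryStore(KeyValueStore):
    """One concrete implementation; the dict is a hidden detail."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)


def remember_user(store: KeyValueStore, user_id: str, name: str) -> Optional[str]:
    # Depends only on the abstract interface, so a file- or database-backed
    # store could be swapped in without changing this function.
    store.put(user_id, name)
    return store.get(user_id)


if __name__ == "__main__":
    print(remember_user(InMemoryStore(), "u42", "Ada"))  # Ada
```

Because `KeyValueStore` declares abstract methods, Python refuses to instantiate it directly, which keeps callers working against the interface rather than a half-defined object.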

17. Mention Some Popular Project Management Tools

In software engineering, some popular project management tools are:

- Jira

- Asana

- Trello

18. What Are the Key Elements to Be Considered When Constructing a System Model?

When creating a system model, consider the type and size of the software, previous experience, difficulty in gathering user needs, development techniques and tools, team situation, development risks, and software development methodologies. These factors are critical for developing an appropriate and effective software development strategy.

19. What Is Agile SDLC?

Agile SDLC is an iterative approach to software development that emphasizes flexibility, collaboration, and customer feedback. It breaks the project into small units or iterations, typically lasting 2-4 weeks.

It encourages continuous customer involvement throughout the development process and allows for changes to requirements, even in later stages of development.

This adaptability makes Agile a frequent topic in software engineering interview questions, as it reflects the industry's growing focus on responsive and efficient project management.

20. Which SDLC Model Is the Best?

There isn't a universally "best" SDLC model, as the choice depends on the specific needs and goals of a project. While the annual State of Agile report identifies Agile as a leading and widely used approach, the most suitable model will vary based on factors like project size, complexity, team structure, and customer requirements.

Ultimately, selecting the right SDLC model is about aligning it with the project's unique objectives to achieve optimal results.

This question about SDLC model selection often appears in software engineering interview questions to assess a candidate's understanding of various methodologies.

21. Which Model Is Also Known As the Classic Lifecycle Model?

The Waterfall model is often referred to as the classic lifecycle model. It is a linear and sequential approach to software development, where each phase must be completed before the next one begins.

This model is characterized by its structured process, making it easy to understand and manage, but it is less flexible in accommodating changes once a phase is completed.

22. State the Difference Between Verification and Validation

Verification and validation are critical components of the software development process, each serving distinct purposes.

Understanding the differences between verification and validation is essential, and this topic often appears in software engineering interview questions, as it highlights a candidate's grasp of quality assurance practices in software development.

Let’s look at the differences in detail:

| Basis | Verification | Validation |
|---|---|---|
| Nature | Static practice. | Dynamic mechanism. |
| Execution | Does not involve code execution. | Involves code execution. |
| Focus | Human-based checking of files and documents. | Computer-based execution of the program. |
| Methods | Inspections, reviews, walkthroughs. | Black box testing, white box testing, and gray box testing. |
| Purpose | Makes sure that the software meets specifications. | Makes sure that the software meets user expectations. |
| Responsibility | QA team. | Testing team. |
| Order | Performed before validation. | Performed after verification. |
| Level | Low-level exercise. | High-level exercise. |

23. What Is the Waterfall Method?

The Waterfall model is the most straightforward approach to the Software Development Life Cycle (SDLC). In this model, the development process is linear, with each phase completed sequentially, one after the other. As the name suggests, development flows downward, much like a waterfall.

This model is often discussed in software engineering interview questions, as it provides a clear framework for understanding the structured progression of software development.

24. Mention Some Use Cases of the Waterfall Method

Some of the use cases of the Waterfall method are:

- Clear and Fixed Needs: When project needs are predictable and unlikely to change significantly.

- Unambiguous Criteria: For projects with clear, well-documented requirements and acceptance criteria.

- Well-understood Technology: When the development team is familiar with the technology stack.

- Minimal Risk: When the project carries little technical or business risk.

- Short Projects with Low Costs: Ideal for smaller-scale projects.

25. What Is Black Box Testing?

Black box testing is a technique that evaluates an application's functionality without examining its internal code structure. Testers interact with the software as if it were a mysterious "black box," focusing on analyzing inputs and outputs. Unlike white box testing, which considers internal code logic, black box testing does not require knowledge of implementation details.

Instead, it aims to uncover defects and vulnerabilities, simulate real-world usage, and ensure that the program meets both functional and non-functional requirements. Black box testing is a common software engineering interview question, as it reflects the importance of user-centric testing in software development.
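A minimal black box sketch, using Python's standard `calendar.isleap` function as the system under test. The test cases are derived only from the published leap-year rule (divisible by 4, except century years unless they are divisible by 400), never from the function's source. The case table and the `run_black_box_tests` helper are illustrative assumptions.

```python
import calendar

# Expected outputs come from the specification, not from reading the code.
BLACK_BOX_CASES = {
    2024: True,   # ordinary leap year
    2023: False,  # not divisible by 4
    1900: False,  # century year not divisible by 400
    2000: True,   # century year divisible by 400
}


def run_black_box_tests(is_leap) -> list[int]:
    """Return the years where the function's output disagrees with the spec."""
    return [year for year, expected in BLACK_BOX_CASES.items()
            if is_leap(year) != expected]


if __name__ == "__main__":
    failures = run_black_box_tests(calendar.isleap)
    print("all cases passed" if not failures else f"failed for {failures}")
```

The same table can be pointed at any implementation; for example, a naive `lambda y: y % 4 == 0` fails only on 1900, which the spec-derived boundary case exposes without any knowledge of the code.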

26. What Is White Box Testing?

White box testing is a technique that provides testers with insight into and validation of the internal mechanisms of a software system. Unlike black box testing, which primarily focuses on functionality, it requires a thorough understanding of coding, logic, and structure. Testers have access to the source code and design documents, allowing them to examine the application in detail.

This method aims for high code coverage, helps uncover hidden errors, and aids in optimization by inspecting the software from an internal perspective. White box testing is a frequent topic in software engineering interview questions, as it demonstrates a candidate's knowledge of various testing methodologies.
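A minimal white box sketch: the hypothetical `classify_triangle` function has five return paths, and the tester, reading the code, picks exactly one input per branch so that every branch is executed at least once (branch coverage). The function and case list are illustrative, not taken from any particular project.

```python
def classify_triangle(a: float, b: float, c: float) -> str:
    if a <= 0 or b <= 0 or c <= 0:              # branch 1
        return "invalid"
    if a + b <= c or a + c <= b or b + c <= a:  # branch 2
        return "invalid"
    if a == b == c:                             # branch 3
        return "equilateral"
    if a == b or b == c or a == c:              # branch 4
        return "isosceles"
    return "scalene"                            # branch 5


# One case per branch, chosen by reading the code above.
WHITE_BOX_CASES = [
    ((0, 1, 1), "invalid"),      # branch 1: a non-positive side
    ((1, 2, 3), "invalid"),      # branch 2: 1 + 2 <= 3 breaks the triangle inequality
    ((3, 3, 3), "equilateral"),  # branch 3
    ((3, 3, 5), "isosceles"),    # branch 4
    ((3, 4, 5), "scalene"),      # branch 5
]


def run_white_box_tests() -> list[tuple]:
    """Return the cases whose actual result differs from the expected one."""
    return [(args, expected) for args, expected in WHITE_BOX_CASES
            if classify_triangle(*args) != expected]


if __name__ == "__main__":
    print(run_white_box_tests() or "every branch executed and passing")
```

Branch 2 is the kind of path a purely spec-driven tester might miss, since it is only obvious once you see the inequality check in the code.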

27. What Is Gray Box Testing?

Gray box testing is a hybrid approach that combines elements of both white box and black box testing. In this method, the tester has partial knowledge of the internal workings of the system under test, including access to some internal code and design documentation, while also evaluating the application's functionality without full access to its internals.

This allows for a more comprehensive assessment of the software, as testers can design test cases that target both internal logic and user interactions.

Understanding gray box testing is a relevant topic for developers and appears in software engineering interview questions, as it highlights a candidate's ability to apply various testing methodologies effectively.
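A minimal gray box sketch, assuming the tester has been told (for example, through design documentation) that exchange rates are cached. The functions `exchange_rate` and `convert` and the rate values are hypothetical. The test first checks outputs through the public `convert` function, as in black box testing, and then uses the `cache_info()` method that `functools.lru_cache` provides to confirm the internal caching behavior the design describes.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def exchange_rate(currency: str) -> float:
    """Hypothetical rate lookup; a real system might call a remote service here."""
    rates = {"EUR": 0.92, "GBP": 0.79}  # illustrative values, not market data
    return rates[currency]


def convert(amount_usd: float, currency: str) -> float:
    return round(amount_usd * exchange_rate(currency), 2)


def gray_box_test() -> None:
    exchange_rate.cache_clear()
    # Black box part: check results through the public function only.
    assert convert(100, "EUR") == 92.0
    assert convert(50, "EUR") == 46.0
    # Partial internal knowledge: the design says rates are cached, so the
    # second EUR conversion should be a cache hit, not a new lookup.
    info = exchange_rate.cache_info()
    assert (info.hits, info.misses) == (1, 1)


if __name__ == "__main__":
    gray_box_test()
    print("outputs and caching behavior both as designed")
```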

28. What Is Smoke Testing?

Smoke testing, also known as build verification testing or confidence testing, is an initial check to determine whether a newly deployed software build is stable and ready for further testing. Its primary goal is to identify critical issues that could hinder future testing or deployment.

There are two types of smoke testing:

- Formal Smoke Testing: In this approach, the development team sends the application build to the test lead, who instructs the testing team to conduct smoke tests. After completing the tests, the testing team prepares a report, which is then returned to the test lead for review and further action.

- Informal Smoke Testing: This occurs when the test lead indicates that the application is ready for additional testing without explicitly stating that smoke testing will be conducted. In this case, the testing team naturally begins testing the application with smoke tests.

Understanding the concept of smoke testing is important for developers and testers and has frequently appeared in software engineering interview questions, highlighting a candidate's familiarity with various testing methodologies and their role in ensuring software quality.
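A minimal smoke-test runner, sketched under the assumption that the new build exposes two critical features, stood in for here by hypothetical `login` and `search` functions. It runs a handful of quick, shallow checks and rejects the build at the first failure or crash, which is the go/no-go decision smoke testing makes before deeper testing begins.

```python
from typing import Callable


# Hypothetical stand-ins for a freshly deployed build's critical features.
def login(user: str, password: str) -> bool:
    return user == "demo" and password == "secret"


def search(query: str, catalog: list[str]) -> list[str]:
    return [item for item in catalog if query.lower() in item.lower()]


SMOKE_CHECKS: list[tuple[str, Callable[[], bool]]] = [
    ("login accepts valid credentials", lambda: login("demo", "secret")),
    ("login rejects a wrong password", lambda: not login("demo", "wrong")),
    ("search returns a match", lambda: search("book", ["Notebook", "Pen"]) == ["Notebook"]),
]


def run_smoke_tests(checks) -> tuple[bool, str]:
    """Run quick, shallow checks; reject the build at the first failure."""
    for name, check in checks:
        try:
            passed = check()
        except Exception as exc:  # a crash also counts as a failure
            return False, f"FAILED: {name} ({exc!r})"
        if not passed:
            return False, f"FAILED: {name}"
    return True, "build is stable enough for further testing"


if __name__ == "__main__":
    ok, message = run_smoke_tests(SMOKE_CHECKS)
    print(message)
```

The checks deliberately stay shallow; detailed behavior of each feature is left to the functional and regression suites that run only after the build passes.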

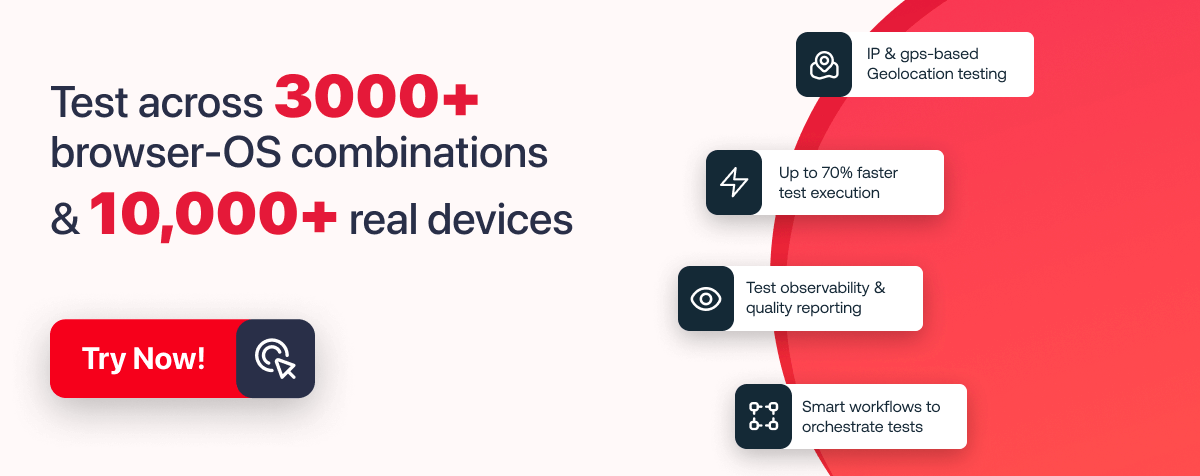

Note : Run tests and validate the functionality of your application across 3000+ browsers and OS combinations. Try LambdaTest Now!

29. What Are the Benefits of Smoke Testing?

Here are some of the benefits of smoke testing:

- System Stability: It helps quickly identify issues, reducing the effort needed for bug hunting later in the development cycle.

- Simple Process: Smoke tests are quick to run, checking that the critical components of an application work without placing much load on server resources.

- Improved End-Product Quality: By reducing bugs, smoke testing enhances product quality and user satisfaction.

- Accessibility for QA Teams: Smoke tests allow QA teams to detect key errors quickly, enabling them to focus on more critical tasks.

30. Differentiate Between Alpha and Beta Testing

Alpha testing and beta testing are part of software testing that occurs before a product’s final release. The goal is to discover errors and enhance product quality.

Here are the differences between the two:

| Basis | Alpha Testing | Beta Testing |
|---|---|---|
| Testing involved | It involves both white box and black box testing. | It commonly involves black box testing. |
| Testers | It is performed by testers, who are usually internal employees of the organization. | It is performed by end-users who are not part of the organization. |
| Environment | It typically requires a controlled testing environment. | It doesn't require a specific testing environment. |
| Focus | Reliability and security are not the primary focus. | Reliability and security are the primary focus. |
| Execution cycle | It may require a long execution cycle. | It lasts only a few weeks. |
| Quality assurance | It ensures the quality of the product before forwarding it to beta testing. | It also focuses on product quality but collects user feedback. |

Therefore, alpha testing validates the product within the organization, whereas beta testing involves external users who evaluate real-world readiness.

31. Define Framework

A framework is a structured and well-established method for creating and deploying software applications. It provides a set of tools, libraries, and best practices to assist developers in building software by offering a general, reusable design for a specific type of application. By standardizing the development process, a framework increases efficiency and ensures consistency, providing predefined modules that can be customized to meet individual requirements.

Understanding what a framework is matters in day-to-day development, and the concept is a common software engineering interview question.

32. State the Difference Between Library and Framework

A library is a collection of helper functions, classes, and modules that your application can use for specific functionality, often specializing in limited areas. In contrast, a framework defines open and unimplemented functions or objects that guide users in building a custom application.

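While a library is a set of reusable components, a framework provides a broader structure and the tools needed to create custom applications. The key practical difference is inversion of control: your code calls a library when it needs it, whereas a framework calls your code at the points it defines. Understanding this difference is beneficial for developers, as it often appears in software engineering interview questions.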
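A minimal sketch of the difference, using two parts of Python's standard library. `statistics.mean` is used library-style: your code decides when to call it. `unittest` is used framework-style: you only fill in hooks such as `setUp` and `test_...` methods, and the framework discovers and calls them. The function `average_score` and the test class are hypothetical.

```python
import statistics
import unittest


# Library: your code is in control and calls statistics.mean() when needed.
def average_score(scores: list[float]) -> float:
    return statistics.mean(scores)


# Framework: you provide hooks; unittest decides when to call them
# (inversion of control). Nothing here calls setUp() or test_average().
class AverageScoreTest(unittest.TestCase):
    def setUp(self) -> None:
        self.scores = [70, 80, 90]

    def test_average(self) -> None:
        self.assertEqual(average_score(self.scores), 80)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AverageScoreTest)
    unittest.TextTestRunner().run(suite)  # the framework runs your hooks
```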

33. What Does a Software Project Manager Do?

A software project manager plans, organizes, and oversees a software project, managing its goals, scope, structure, and stakeholder expectations. They plan and create a roadmap to ensure the delivery of high-quality software.

Their role is crucial, and understanding their responsibilities is important for project managers, developers, and testers. This has often appeared in software engineering interview questions, highlighting a candidate's knowledge of project management in software development.

34. Explain Software Re-engineering

Software re-engineering is the process of analyzing and modifying existing software systems to enhance their quality, maintainability, and functionality. It involves transforming old systems into more efficient, adaptive, and modern versions.

Key activities in software re-engineering include:

- Reverse Engineering: Studying an existing system to understand its structure and functionality, often to recover lost information or clarify system components.

- Restructuring: Updating the system's architecture, programming, and database schema to improve maintainability and performance.

- Forward Engineering: Creating a new version of the system that incorporates enhancements, new features, and modern technologies to improve functionality.

- Migration: Moving a system to a new platform, language, or environment so that it remains usable as its surroundings change.

- Documentation Enhancement: Improving documentation to support system maintenance and growth, often using documentation generators and collaborative platforms.

- Testing and Validation: Ensuring that re-engineered components meet requirements through unit tests, integration tests, and system tests.

Understanding software re-engineering and its processes helps build a strong foundation in software engineering concepts. This topic frequently arises in software engineering interview questions, as it demonstrates a candidate's familiarity with software development life cycle practices.

35. Define Software Prototyping

A software prototype is an early version of a system or application, serving as a working model with limited functionality. It may not implement the exact logic of the final program, and building it is additional effort that should be factored into the overall project estimate. Prototyping allows users to review and test developer proposals before full implementation.

Understanding software prototyping is essential, as it enables users to assess and interact with a proposed system prior to final development. It helps identify user-specific details that may have been overlooked during the initial requirements gathering, lowering risks by detecting problems early in the development process.

Prototyping also facilitates efficient communication of ideas and concepts among developers, making it one of the frequently asked software engineering interview questions.

36. What Is the Software Scope?

The scope of a software project is a defined boundary that encompasses all activities involved in developing and delivering software products.

It specifies what the software can and cannot do, clearly outlining all capabilities and features that will be included in the product.

Understanding the software scope is vital, as it helps manage expectations and guides the development process. This concept often appears in software engineering interview questions, assessing a candidate's ability to articulate project boundaries and requirements effectively.

37. Define Data Dictionary

A data dictionary, sometimes called a metadata repository, is a centralized store of metadata, that is, information about the data within a system. It organizes the names, references, and attributes of various objects and files, along with their naming conventions.

This structured approach aids in maintaining consistency and clarity in data management. Understanding data dictionaries is important, as this concept often appears in software engineering interview questions to assess a candidate's knowledge of data management practices.
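A minimal sketch of a data dictionary expressed as code. The `customer` record, its fields, and the `validate` helper are hypothetical; the point is that each entry records metadata (name, type, whether it is required, description), and that metadata can then be used to check real records for consistency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """One entry in the data dictionary: metadata about a single field."""
    name: str
    type_: type
    required: bool
    description: str


# Hypothetical data dictionary for a "customer" record.
CUSTOMER_DICTIONARY = [
    FieldSpec("customer_id", int, True, "Unique numeric identifier"),
    FieldSpec("email", str, True, "Contact e-mail address"),
    FieldSpec("phone", str, False, "Optional phone number"),
]


def validate(record: dict, dictionary: list[FieldSpec]) -> list[str]:
    """Check a record against the dictionary and return any problems found."""
    errors = []
    for spec in dictionary:
        if spec.name not in record:
            if spec.required:
                errors.append(f"missing required field '{spec.name}'")
            continue
        if not isinstance(record[spec.name], spec.type_):
            errors.append(f"'{spec.name}' should be {spec.type_.__name__}")
    known = {spec.name for spec in dictionary}
    errors.extend(f"unknown field '{name}'" for name in record if name not in known)
    return errors


if __name__ == "__main__":
    print(validate({"customer_id": 7, "email": "ana@example.com"}, CUSTOMER_DICTIONARY))
    print(validate({"email": 5, "age": 30}, CUSTOMER_DICTIONARY))
```

In practice data dictionaries often live in database catalogs or documentation tools rather than application code; keeping one in code is just a compact way to show what it contains.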

38. State the Difference Between Computer Programs and Computer Software

While the terms are often used interchangeably, they refer to different aspects of software development. Computer programs are specific sets of instructions executed by a computer to perform a particular task, whereas computer software encompasses a broader range of programs and related data that enable the overall functionality of a computer system.

Below are the key pointers that will help you understand the difference better:

- Computer Programs:

- A computer program is a set of instructions that direct a computer to do specific tasks.

- It is a set of instructions meant to perform a given function or purpose.

- Programs are smaller in scope and can consist of just a few lines of code.

- Examples of programs include web browsers, word processors, and video games.

- Computer Software:

- Computer software refers to more than just programs.

- It contains the data, programs, and other components required for a computer system to function.

- The software serves as a link between the user and the computer hardware, converting commands into action.

- Software falls into three categories: application software, system software, and computer programming tools.

The distinction between computer programs and computer software is essential for understanding how applications function within computing systems.

Understanding this differentiation strengthens a developer's foundational knowledge, which is why the question appears in many software engineering interviews.

39. Define Software Quality Assurance

Software Quality Assurance (SQA) is a systematic procedure for ensuring the reliability and quality of software products throughout the development lifecycle.

It involves creating an SQA management plan, establishing checkpoints, participating in requirement gathering, conducting formal technical reviews, and developing a multi-testing strategy to produce high-quality software.

Understanding SQA principles is essential for developers, making it a common topic in software engineering interview questions.

40. Explain API to a Non-technical Individual

API stands for Application Programming Interface. It is essentially an interface that allows two programs or systems to communicate, transferring requests from one to another and delivering responses.

This enables developers to use the functionality of other systems or applications without needing to understand their internal workings. Understanding APIs is a fundamental concept in software development, which is why it often appears in software engineering interview questions.
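A minimal sketch of the request/response idea behind an API. The endpoint `https://api.example.com/v1/forecast`, its `city` and `units` parameters, and the response fields are all hypothetical, and no network call is made. The client builds a request in the format the API defines and reads only the documented fields of the response, without knowing anything about how the provider computes the forecast.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint used only for illustration; it is not a real service.
BASE_URL = "https://api.example.com/v1/forecast"


def build_request(city: str, units: str = "metric") -> str:
    """The request: what the client asks for, in the format the API defines."""
    return f"{BASE_URL}?{urlencode({'city': city, 'units': units})}"


def read_response(body: str) -> str:
    """The response: the client reads only the documented fields."""
    data = json.loads(body)
    return f"{data['city']}: {data['temperature']}°C"


if __name__ == "__main__":
    print(build_request("Paris"))
    # A sample body shaped like the hypothetical API's response contract;
    # the extra internal field is simply ignored by the client.
    sample = '{"city": "Paris", "temperature": 18, "provider_internal_id": 991}'
    print(read_response(sample))
```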

41. State the Difference Between API and SDK

An API (Application Programming Interface) enables your program to interact with external services through a simple set of commands. It serves as an interface through which various software components can communicate.

It enables developers to add certain functionalities to their apps, hence speeding up development. Common API use cases include integrating location services, payment processing, SMS, and financial services into applications.

On the other hand, an SDK (Software Development Kit) is a set of tools, code libraries, and resources that support software development for a given platform, framework, or device. It typically contains one or more APIs, along with documentation, libraries, code samples, and other tools, sometimes including an IDE. SDKs simplify the process of developing programs by providing ready-made features and functionality.

42. What Are Internal Milestones?

Internal milestones are measurable, significant checkpoints within a project's processes. They provide a regular, systematic way to show that the engineers are on the right track.

These milestones can be used to evaluate the development team's progress, identify difficulties and risks, and make changes to the project plan.

They can be related to any area of the project, such as finishing a specific feature, testing and debugging the code, or achieving a specified level of performance or functionality.

43. Which Model Can Be Chosen if the User Gets Involved in All Phases of the SDLC?

The correct model to choose if the user is involved in all phases of the SDLC is the Agile model, which emphasizes continuous collaboration and feedback from the user throughout the development process.

The RAD (Rapid Application Development) model also involves user participation but is more focused on quickly developing prototypes and iterations rather than involving the user in every phase.

44. Define RAD Model

The Rapid Application Development (RAD) model is an adaptable software development process that prioritizes quick iteration and user participation. RAD attempts to produce excellent software solutions in a fast-paced setting while addressing the limitations of the classic Waterfall methodology.

It accomplishes this by taking a more flexible and adaptable approach to software engineering. The RAD process typically consists of four phases: requirements planning, user design (also described as user description), construction, and cutover.

45. What Are the Limitations of the RAD Model?

The limitations of the RAD model are:

- RAD involves setting up many teams that work in parallel, which demands an adequate number of skilled team members. If there are not enough of them, the RAD process may suffer.

- RAD depends significantly on user participation and input throughout the development process. If users or stakeholders are not committed or actively involved, the system may fail to meet their requirements.

- RAD fosters quick development by dividing the project into smaller components. However, if these components are not properly modularized, integrating them may become difficult.

- RAD is most effective when the requirements are clearly understood and technical risks are low. If there is a significant likelihood of technical challenges (for example, complicated integrations or unfamiliar technologies), RAD may not be appropriate.

46. What Is OOP in Software Engineering?

Object-Oriented Programming (OOP) is a programming paradigm that builds applications and computer programs from 'objects', which bundle data (fields) together with the methods that operate on that data. It eases the development and maintenance of software by providing a structured approach based on four principles:

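- Inheritance: It enables child classes or subclasses to inherit data fields and methods from their parent class.

- Encapsulation: It bundles an object's data with the methods that operate on it and restricts direct access to that internal state, typically through access modifiers such as private.

- Abstraction: It hides implementation details and exposes only high-level public methods for interacting with an object.

- Polymorphism: It allows objects of different classes to respond to the same method call in their own ways, for example through method overriding.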

OOP is frequently utilized in languages like Python, Java, and C++. Understanding these principles is essential, as they often appear in software engineering interview questions.
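The short Java sketch below illustrates all four principles in one place. The `Shape`, `Circle`, and `Square` classes are hypothetical examples chosen for illustration, not part of any particular library.

```java
import java.util.List;

// Abstraction: callers work with the abstract Shape type, not concrete details.
abstract class Shape {
    // Encapsulation: the name is private and exposed only through a getter.
    private final String name;

    protected Shape(String name) {
        this.name = name;
    }

    public String getName() {
        return name;
    }

    // Each subclass must supply its own area calculation.
    public abstract double area();
}

// Inheritance: Circle reuses Shape's name field and getter.
class Circle extends Shape {
    private final double radius;

    Circle(double radius) {
        super("circle");
        this.radius = radius;
    }

    // Polymorphism: overrides area() with circle-specific behavior.
    @Override
    public double area() {
        return Math.PI * radius * radius;
    }
}

class Square extends Shape {
    private final double side;

    Square(double side) {
        super("square");
        this.side = side;
    }

    @Override
    public double area() {
        return side * side;
    }
}

class ShapeDemo {
    // Sums areas without knowing which concrete Shape each element is.
    static double totalArea(List<Shape> shapes) {
        double total = 0;
        for (Shape s : shapes) {
            total += s.area(); // dynamic dispatch picks the right override
        }
        return total;
    }

    public static void main(String[] args) {
        List<Shape> shapes = List.of(new Circle(1.0), new Square(2.0));
        for (Shape s : shapes) {
            System.out.printf("%s: %.2f%n", s.getName(), s.area());
        }
        System.out.printf("total: %.2f%n", totalArea(shapes));
    }
}
```

Because `totalArea` depends only on the abstract `Shape` type, a new subclass can be added without changing that method, which is the practical payoff of abstraction and polymorphism.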

47. What Are the Building Blocks of OOP?

The building blocks of OOP are:

- Class: A class is a template for producing objects in object-oriented programming (OOP). It specifies the properties (data fields) and behaviors (methods) that objects in that class will have.

- Objects: Objects are instances of classes. When you construct an object based on a class definition, you are effectively establishing a distinct entity with its own set of data. Objects have values for their properties and can use methods provided in their class.

- Methods: Methods are functions associated with a class. They specify the behavior or actions that objects in that class can execute. Methods work with the data in the class and allow you to communicate with objects.

- Attributes: Attributes (properties or fields) represent the data associated with an object and describe its current state. They can be instance variables (unique to each object) or class variables (shared by all objects of the class).
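As a minimal Java illustration of these building blocks, the hypothetical `BankAccount` class below defines attributes (instance variables and one class variable) and methods, and `main` creates two separate objects from it.

```java
// Class: a template that defines attributes and methods.
class BankAccount {
    // Class variable: one copy shared by every BankAccount object.
    private static int accountCount = 0;

    // Instance variables: each object has its own copy.
    private final String owner;
    private double balance;

    BankAccount(String owner, double openingBalance) {
        this.owner = owner;
        this.balance = openingBalance;
        accountCount++; // shared counter updated for every new object
    }

    // Method: behavior that reads or updates the object's state.
    void deposit(double amount) {
        if (amount <= 0) {
            throw new IllegalArgumentException("amount must be positive");
        }
        balance += amount;
    }

    double getBalance() {
        return balance;
    }

    String getOwner() {
        return owner;
    }

    static int getAccountCount() {
        return accountCount;
    }
}

class BankAccountDemo {
    public static void main(String[] args) {
        // Objects: two distinct instances of the same class.
        BankAccount alice = new BankAccount("Alice", 100.0);
        BankAccount bob = new BankAccount("Bob", 50.0);
        alice.deposit(25.0);
        System.out.println(alice.getOwner() + ": " + alice.getBalance()); // Alice: 125.0
        System.out.println(bob.getOwner() + ": " + bob.getBalance());     // Bob: 50.0
        System.out.println("accounts: " + BankAccount.getAccountCount()); // accounts: 2
    }
}
```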

48. State the Difference Between a Compiler and an Interpreter

Compilers and interpreters serve a similar purpose: both translate source code written in a high-level language (HLL) into a form the computer can execute.

A compiler transforms source code into machine code before execution. This results in faster execution because no translation is required at runtime, although the initial compilation step may take longer. Examples: C and C++. (Java is a hybrid: it is compiled to bytecode, which the Java Virtual Machine then interprets or JIT-compiles.)

Interpreters, by contrast, translate and execute code statement by statement at runtime. This makes errors easier to locate and debug, but interpreted code may run slower than compiled code. Examples: Python and JavaScript (in their traditional implementations).

In short, compilers perform a one-time translation before the program runs, whereas interpreters work statement by statement, which helps with debugging but may reduce speed.

49. What Is SRS?

Software Requirement Specification (SRS) is a document that provides a detailed specification and description of the requirements needed to successfully develop a software system. These requirements can be functional (specific features or behaviors) or non-functional (performance, security, etc.), depending on the nature of the system.

The SRS is created through interactions between clients, users, and contractors to fully understand the software's needs. Understanding how to structure and interpret an SRS is a common topic in software engineering interview questions, as it reflects the candidate's grasp of software planning and requirements management.

50. Testing Software Against SRS Is Referred to As…?

The testing of software against SRS is referred to as acceptance testing. It ensures that the developed system meets the specified requirements outlined in the SRS. This type of testing is crucial to verify if the system aligns with user expectations and is a common focus in software engineering interview questions, as it demonstrates a solid understanding of quality assurance and validation processes.

51. What Are CASE Tools?

Computer-Aided Software Engineering (CASE) tools are a collection of automated software applications that help support and accelerate key tasks in the Software Development Life Cycle (SDLC).

These tools assist software project managers, analysts, and engineers in building software systems, covering the entire SDLC from requirements analysis to testing and documentation.

CASE tools enhance consistency, productivity, and quality in software projects. Questions about CASE tools often come up in software engineering interviews to assess a candidate's familiarity with development processes and toolsets.

52. Name Various CASE Tools

Some examples of CASE tools include those for requirement analysis, structure analysis, software design, code generation, test case generation, document production, and reverse engineering. These tools simplify software development by improving quality, consistency, and collaboration.

Knowledge of the various uses of CASE tools is often assessed in software engineering interview questions, as it demonstrates a candidate’s understanding of software development efficiency.

53. Define DevOps

DevOps is a combination of software development (dev) and operations (ops). It is a software engineering methodology that integrates the work of development and operations teams within a culture of collaboration and shared responsibility. The advantages include shorter time to market, improved software quality, and enhanced team communication.

Having knowledge of DevOps practices is highly beneficial and is commonly evaluated in software engineering interview questions, as it demonstrates a candidate's ability to thrive in modern development environments.

54. State the Difference Between a Queue and a Stack

A queue and a stack differ primarily in their operating principles. A queue follows the First-In-First-Out (FIFO) principle, which means that the first element inserted is the first to be removed. Elements are added at the back (rear) and removed from the front. Queues are often used for breadth-first search and sequential processing.

In contrast, a stack follows the Last-In-First-Out (LIFO) principle, which means that the last element pushed is the first to be popped. Elements are both added to and removed from the top. Stacks are commonly used for depth-first search, managing recursive function calls, and backtracking.
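The contrast can be seen directly in Java, where `java.util.ArrayDeque` can act as either structure (the `Deque` documentation recommends it over the legacy `Stack` class). The helper method names here are illustrative only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Queue;

class QueueStackDemo {
    // FIFO: offer() adds at the back, poll() removes from the front.
    static List<Integer> drainQueue(List<Integer> input) {
        Queue<Integer> queue = new ArrayDeque<>();
        for (int x : input) {
            queue.offer(x);
        }
        List<Integer> out = new ArrayList<>();
        while (!queue.isEmpty()) {
            out.add(queue.poll());
        }
        return out;
    }

    // LIFO: push() and pop() both operate on the top.
    static List<Integer> drainStack(List<Integer> input) {
        Deque<Integer> stack = new ArrayDeque<>();
        for (int x : input) {
            stack.push(x);
        }
        List<Integer> out = new ArrayList<>();
        while (!stack.isEmpty()) {
            out.add(stack.pop());
        }
        return out;
    }

    public static void main(String[] args) {
        List<Integer> input = List.of(1, 2, 3);
        System.out.println("queue: " + drainQueue(input)); // queue: [1, 2, 3]
        System.out.println("stack: " + drainStack(input)); // stack: [3, 2, 1]
    }
}
```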

55. What Is Your Method for Learning a New Codebase or Unfamiliar Technology?

This question gives you a chance to demonstrate your growth mindset and ability to learn quickly. These are critical skills for entry-level software engineers: hiring managers do not expect you to be an expert, but they do expect a basic understanding of core skills and the ability to learn new ones rapidly.

56. Why Do You Want to Go Into Software Engineering?

Discuss what interests you in the field! For example, why are you interested in software engineering? Is it because of a project you have worked on, a technology that fascinates you, or because you enjoy problem-solving? Try to make your response personal rather than generic, and include any relevant experience or learnings that inspired your search for a position in software engineering.

57. What Programming Languages Do You Know?

Based on current trends, a developer should have strong knowledge of at least one programming language, such as Python or Java, along with a basic understanding of others, such as C, C++, or C#. This breadth of knowledge is beneficial across various development contexts.

58. What Software Development Tools Do You Know?

Be honest about the software development tools you use and those you do not know.

Some common software development tools are:

- Integrated Development Environments (IDEs): Visual Studio Code, IntelliJ IDEA, PyCharm

- Version Control: Git, along with hosting platforms such as GitHub, GitLab, and Bitbucket

- Package Managers and Build Tools: pip, npm, Homebrew, Maven, Gradle

- Debugging Tools: Chrome DevTools, Xcode Instruments, Visual Studio Debugger

- Test Frameworks: Selenium, Cypress, Playwright

Check the job description to see what the employer is looking for, and then indicate any tools you are familiar with.

The software engineering interview questions covered above are fundamental and essential for any fresher to know, as they form the basic foundation of software development and engineering principles. Understanding these basics is crucial for building a strong software engineering skill set and performing well in interviews.

As you progress, you will further learn intermediate-level software engineering interview questions to deepen your knowledge and enhance your expertise in software development. This will help you tackle more complex scenarios and advance your skills in the field.

Intermediate-Level Software Engineering Interview Questions

These software engineering interview questions cover more advanced topics and are ideal for candidates with some experience in software development.

They are designed to test your ability to tackle complex engineering problems, implement best practices, and optimize performance, helping you further enhance your skills in the field.

59. What Are the Most Recent Projects You Worked on?

When discussing the most recent projects you've worked on, focus on those that are relevant to the role you're applying for. Highlight your latest project by mentioning the team you were part of, the technologies you utilized, and the specific challenges you faced during development.

Explain how you addressed those challenges and conclude with the key learnings you gained from the experience. This approach demonstrates your technical skills and your ability to navigate real-world project scenarios effectively.

60. When to Use the Waterfall Method?

The Waterfall method, a frequent subject of software engineering interview questions, is best suited to the following scenarios:

- Well-understood Requirements: Accurate, trustworthy, and completely documented requirements are available before beginning development.

- Very Few Changes Expected: During development, minimal changes or additions to the project's scope are anticipated.

- Small to Medium-Sized Projects: It is suitable for more manageable projects with a defined development path and low complexity.

- Predictable, Low-Risk Projects: The project is predictable, and its risks are known, controllable, and can be addressed early in the development life cycle.

- Regulatory Compliance is Critical: This method is appropriate in situations where documentation is essential and strict regulatory compliance is required.

61. What Is QFD?

QFD, or Quality Function Deployment, is part of the software engineering process that bridges the gap between client requirements and product development. It involves translating customer requirements into engineering specifications while ensuring that they align with user expectations.

The process consists of product planning, part planning, process planning, and production planning. QFD is beneficial because it is customer-focused, which aids in competitive analysis, provides structured documentation, lowers development costs, and reduces development time.

Understanding QFD can be crucial for candidates preparing for software engineering interview questions, as it demonstrates an awareness of customer-centric development practices.

62. What Are the Advantages of Software Prototyping?

Software prototyping provides numerous significant advantages in the development process:

- Better Understanding of Requirements: Prototyping helps refine and clarify requirements by offering a tangible model of the software. This ensures that developers and stakeholders have a clear understanding of the final product's design and functionality.

- Enhanced User Involvement: Prototypes involve users early in the development process. Users can engage with the prototype and provide feedback that can be used to improve the final product.

- Early Detection of Issues: Prototyping enables the discovery and resolution of potential issues early in the development cycle, reducing the likelihood of costly adjustments and rework later on.

- Better Communication: Prototypes serve as visual tools that improve communication among developers, stakeholders, and users, ensuring a shared understanding of project objectives and progress.

63. Describe the Software Prototyping Methods

Common software prototyping methods include:

- Rapid (Throwaway) Prototyping: A quick, rough model is built to explore and validate requirements, then discarded once the requirements are understood. The final system is developed separately.

- Evolutionary Prototyping: An initial prototype is refined repeatedly based on user feedback until it evolves into the final system.

- Incremental Prototyping: The final product is divided into smaller prototypes that are built separately and later merged into a complete system.

- Extreme Prototyping: Used mainly in web development, it proceeds in three phases: a static HTML prototype, a functional user interface backed by a simulated services layer, and finally the implementation of the real services.

Understanding these prototyping methods is beneficial for candidates preparing for software engineering interview questions, as it highlights the importance of iterative development and user feedback in creating effective software solutions.

64. State the Purpose of User Interface Prototyping

The major purpose of UI prototyping is to provide a visual impression of what the user interface design will look like in the software product. It allows designers and developers to create a mock or working model of the user interface that is testable and refinable before the actual product is built.

By prototyping, designers and developers gain insights into how users interact with the system, which helps identify any usability issues early in the process. This approach ensures that the final product is usable and well-suited for its intended audience.

Understanding the significance of UI prototyping is essential for candidates facing software engineering interview questions, as it highlights the role of user experience in software development.

65. How Will You Define Change Control?

Change control is a systematic approach to managing all changes made to a software system. It ensures that changes are implemented in a controlled and planned manner, minimizing disruptions and maintaining the integrity of the system.

This process involves documenting change requests, assessing the impact of changes, obtaining necessary approvals, and tracking changes throughout their implementation.

Understanding change control is vital for candidates preparing for software engineering interview questions, as it reflects an ability to maintain quality and consistency in software development.

66. State the Difference Between Functional and Imperative Programming

Functional programming and imperative programming are two distinct paradigms in software engineering, each with its own approach to writing and executing code.

Functional Programming:

- Declarative Nature: This paradigm focuses on what to compute rather than how to compute it, expressing the logic of a computation without spelling out its control flow.

- Pure Functions: It relies on pure functions, which produce the same output for the same input without side effects, making the code more predictable and testable.

- Immutability: Once a data structure is created, it cannot be changed, which helps maintain reliability and reduces unintended consequences in code.

- Statelessness: Functional programming avoids mutable states, leading to a stateless approach where the state is not maintained between function calls.

Imperative Programming:

- Procedural Nature: This paradigm specifies the processes required to solve a problem, outlining step-by-step instructions.

- State Changes: It involves modifying the program's state using statements that change variables, which can complicate debugging due to increased dynamic behavior.

- Mutable Data: Data structures can be modified after creation, offering flexibility but also potential side effects.

- Control Flow: It utilizes control flow statements, such as loops, conditionals, and branches, to dictate the order of operations.

Understanding the differences between these paradigms is crucial for candidates facing software engineering interview questions, as it showcases their grasp of diverse programming methodologies.
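Java is not a purely functional language, but its Stream API supports a functional style, so the same task can be written both ways for comparison. The method names in this sketch are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

class ParadigmDemo {
    // Imperative: explicit loop, mutable accumulator, step-by-step control flow.
    static List<Integer> squaresOfEvensImperative(List<Integer> numbers) {
        List<Integer> result = new ArrayList<>();
        for (int n : numbers) {
            if (n % 2 == 0) {
                result.add(n * n);
            }
        }
        return result;
    }

    // Functional style: declare the transformation with pure lambdas;
    // no explicit loop and no mutation of caller-visible state.
    static List<Integer> squaresOfEvensFunctional(List<Integer> numbers) {
        return numbers.stream()
                .filter(n -> n % 2 == 0)
                .map(n -> n * n)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6);
        System.out.println(squaresOfEvensImperative(numbers)); // [4, 16, 36]
        System.out.println(squaresOfEvensFunctional(numbers)); // [4, 16, 36]
    }
}
```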

67. Define Timeline Chart

A timeline chart is a visual representation that displays the rate at which a project or its components have been completed against targeted completion times. The length of the line illustrates the time taken for completion, while the color coding indicates whether the completion was successful.

The objectives of a timeline chart include:

- Visual Representation of Time: It aids individuals in understanding the sequence of events and helps them visualize the duration of each process, as well as the total time required for project completion.

- Strategizing for Future Events: By illustrating how long certain activities will take, the timeline chart assists individuals in planning and allocating material resources effectively for upcoming tasks.

Understanding the significance and application of timeline charts can be essential for candidates facing software engineering interview questions, as it demonstrates their ability to manage project timelines and resources effectively.

68. Discuss the Concept of Threads

In software engineering, a thread is the smallest unit of execution within a process. Threads enable software to perform multiple activities concurrently while sharing the memory space and resources of their parent process, which makes them cheaper to create and switch between than separate processes.

This concurrent execution enhances the efficiency and responsiveness of applications, particularly those requiring real-time processing or handling multiple user interactions, such as web servers or graphical user interfaces.

Threads are often referred to as "lightweight" because they require fewer resources than processes. They can be managed and scheduled by the operating system or through threading libraries.
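A minimal Java sketch of threads sharing memory within one process: several threads increment the same counter. `AtomicInteger` is used so that concurrent increments are not lost; the method name and thread counts are illustrative assumptions.

```java
import java.util.concurrent.atomic.AtomicInteger;

class ThreadDemo {
    // Starts several threads that increment one shared counter.
    // All threads see the same AtomicInteger because they share the process's memory.
    static int countWithThreads(int threadCount, int incrementsPerThread) throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counter.incrementAndGet(); // atomic, so no updates are lost
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            t.join(); // wait for each thread to finish
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countWithThreads(4, 10_000)); // 40000
    }
}
```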

69. What Do You Understand by Concurrency?

Concurrency in software engineering refers to the capability of a system to support or manage several tasks or processes that occur nearly simultaneously or overlap in time.

This is achieved through techniques such as multithreading, which splits a single process into multiple threads that work on different tasks concurrently. Languages like C++ and Java provide built-in threading support that facilitates concurrent programming.

Concurrency enhances a system's efficiency and responsiveness, making it a common topic in software engineering interview questions. However, it requires careful control to avoid issues related to deadlocks and resource contention.

70. What Is a Deadlock? How Can You Avoid It?

A deadlock occurs in a multi-threaded environment when two or more threads are each waiting for another to release a resource, so none of them can ever proceed.

This situation often arises when each thread holds a resource that the other needs, creating a circular dependency.

To avoid deadlocks, several strategies can be employed:

- Deadlock Detection and Recovery: Monitor the system for deadlocks and recover by terminating the affected threads or preempting (rolling back) the resources they hold.

- Avoid Nested Locks or Use a Consistent Lock Order: Avoid holding one lock while acquiring another where possible; when nested locks are unavoidable, ensure that all threads acquire them in the same order to prevent circular dependencies.

- Use Timeout Mechanisms: Implement timeouts for lock requests to prevent threads from waiting indefinitely.

- Resource Allocation Strategies: Use methods such as the Banker's algorithm, which grants a resource request only if the system remains in a safe state.

- Synchronization Primitives: Employ synchronization objects like mutexes or semaphores to manage access to resources effectively.
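Two of these strategies, consistent lock ordering and lock timeouts, can be sketched in Java with `ReentrantLock`. The `LockedAccount` class and transfer methods are hypothetical, and the sketch assumes every account has a unique `id`.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical account; ids are assumed to be unique.
class LockedAccount {
    final int id;
    final ReentrantLock lock = new ReentrantLock();
    long balance; // guarded by lock

    LockedAccount(int id, long balance) {
        this.id = id;
        this.balance = balance;
    }
}

class DeadlockSafeTransfers {
    // Consistent lock ordering: always lock the account with the smaller id first,
    // so two opposite transfers can never each hold the lock the other is waiting for.
    static void transferOrdered(LockedAccount from, LockedAccount to, long amount) {
        LockedAccount first = from.id < to.id ? from : to;
        LockedAccount second = (first == from) ? to : from;
        first.lock.lock();
        try {
            second.lock.lock();
            try {
                from.balance -= amount;
                to.balance += amount;
            } finally {
                second.lock.unlock();
            }
        } finally {
            first.lock.unlock();
        }
    }

    // Timeout: tryLock gives up after timeoutMs instead of waiting forever.
    static boolean transferWithTimeout(LockedAccount from, LockedAccount to,
                                       long amount, long timeoutMs) throws InterruptedException {
        if (!from.lock.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
            return false;
        }
        try {
            if (!to.lock.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
                return false; // the outer finally still releases from.lock
            }
            try {
                from.balance -= amount;
                to.balance += amount;
                return true;
            } finally {
                to.lock.unlock();
            }
        } finally {
            from.lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        LockedAccount a = new LockedAccount(1, 1_000);
        LockedAccount b = new LockedAccount(2, 1_000);
        // Opposite-direction transfers: with naive from-then-to locking this can deadlock.
        Thread t1 = new Thread(() -> { for (int i = 0; i < 10_000; i++) transferOrdered(a, b, 1); });
        Thread t2 = new Thread(() -> { for (int i = 0; i < 10_000; i++) transferOrdered(b, a, 1); });
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        System.out.println(a.balance + " " + b.balance); // 1000 1000
    }
}
```

With plain nested locking (always `from` then `to`), the two opposite transfer loops in `main` can deadlock; ordering by `id` removes the circular wait. The timeout variant instead returns `false`, leaving the retry or error-handling policy to the caller.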

71. State the Difference Between a Bug and an Error

A bug is a flaw in a software system that causes it to behave unexpectedly, leading to incorrect responses, failures, or crashes. Bugs typically arise from coding issues like syntax, logic, or data processing errors and are identified before the software is released.

An error, on the other hand, refers to a specific coding mistake, often resulting from incorrect syntax or logic. Errors manifest in the source code due to developer oversights or misunderstandings.

Below are the detailed differences between bugs and errors.

| Basis | Bugs | Errors |
|---|---|---|
| Cause | Shortcomings in the software system | Mistakes or misconceptions in the source code |
| Detection | Typically found before the software is pushed to production | Detected when the code fails to compile |
| Origin | Can result from human oversight or non-human causes like integration issues | Primarily caused by human oversight |

72. What Is CMM in Software Engineering?

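The Capability Maturity Model (CMM) was developed by the Software Engineering Institute (SEI) at Carnegie Mellon University to support improvement in software development processes. It gives organizations a systematic approach to improving their existing practices and identifies areas for enhancement across five maturity levels: Initial, Repeatable, Defined, Managed, and Optimizing.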

73. What Do You Understand by Baseline?

In software development, a baseline is a milestone that marks the completion of one or more formally reviewed and agreed-upon software deliverables. Once a baseline is established, it can be changed only through a formal change control process, which keeps uncontrolled changes from causing the project to spiral out of control.

Baselines can include code, documentation, and other elements, and they are frequently used to measure progress, track changes, and maintain version control.

74. What Is Equivalence Partitioning?

Equivalence Partitioning is a testing technique that divides a program's input domain into data classes known as equivalence classes, which are then used to generate test cases. Each class specifies a group of inputs that the software should treat similarly.

The relation that defines an equivalence class is reflexive, symmetric, and transitive, meaning that all members of a class are treated the same way by the software.

Testing one representative from each equivalence class allows testers to detect flaws efficiently without having to test every potential input.

This strategy ensures that the various input possibilities are adequately covered while reducing the number of test cases, which is why it is a common topic in software engineering interview questions.
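As a sketch, assume a hypothetical rule that ages 18 to 60 (inclusive) are eligible. That splits the input domain into three equivalence classes, and one representative value per class is tested instead of every possible age.

```java
import java.util.List;

class AgeValidator {
    // Hypothetical rule under test: ages 18 to 60 (inclusive) are eligible.
    static boolean isEligible(int age) {
        return age >= 18 && age <= 60;
    }
}

class EquivalencePartitionCheck {
    // One representative input per equivalence class, plus the expected result.
    static final class Case {
        final String partition;
        final int age;
        final boolean expected;

        Case(String partition, int age, boolean expected) {
            this.partition = partition;
            this.age = age;
            this.expected = expected;
        }
    }

    static final List<Case> CASES = List.of(
            new Case("below range (age < 18)", 10, false),
            new Case("valid range (18 to 60)", 35, true),
            new Case("above range (age > 60)", 75, false));

    // Runs every representative and returns how many failed.
    static int runCases() {
        int failures = 0;
        for (Case c : CASES) {
            boolean actual = AgeValidator.isEligible(c.age);
            if (actual != c.expected) {
                failures++;
                System.out.println("FAIL " + c.partition + ": age " + c.age);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("failures: " + runCases()); // failures: 0
    }
}
```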

75. State the Difference Between OOD and COD

In software engineering, Object-Oriented Design (OOD) and Component-Oriented Design (COD) are two distinct techniques for designing software systems:

OOD

- Focus: Centers on building software with objects that are instances of classes, representing both data and behavior.

- Principles: Utilizes inheritance, polymorphism, and encapsulation to generate modular and reusable code.

- Process: Involves identifying the system's objects, defining their interactions, and organizing them into a cohesive structure.

- Tools: Often employs Unified Modeling Language (UML) diagrams to visualize the system.

COD

- Focus: Emphasizes designing software using components, which are largely independent units providing added value and capable of being deployed and replaced independently.

- Principles: Encourages the use of interfaces and contracts to define component interactions, promoting loose coupling and high cohesion.

- Process: Includes discovering reusable components, specifying their interfaces, and assembling them into a complete system.

- Tools: Uses component diagrams and other architectural models to represent the system's structure.

OOD focuses on developing systems with objects and their interactions, whereas COD stresses the usage of reusable, self-contained components. Both techniques seek to develop modular, manageable, and scalable software systems, but they approach the design process from distinct perspectives.

Understanding these differences is beneficial for designers and developers and comes up often in software engineering interview questions, as it helps them choose between design methodologies effectively.

76. Black Box Testing Is Always Focused on Which Software Requirement?

Black box testing focuses on the software's functional requirements, assessing how the system behaves for given inputs without considering its internal workings.

77. What Are Functional Requirements?

Functional requirements refer to the specific features and functions an application must deliver, as defined by the end user. These requirements are crucial for the system's operation, encompassing tasks such as user authentication, data processing, and user interface adjustments like providing a dark mode.

Understanding functional requirements is core to a developer's work, which makes it an essential topic in software engineering interview questions.

78. What Are Non-functional Requirements?

Non-functional requirements specify the quality and performance standards that the system must achieve, as defined by stakeholders.

These requirements are essential for the system's overall performance and user experience and include aspects such as usability, reliability, performance under load, security, and maintainability.

Unlike functional requirements, non-functional requirements describe how the system performs its functions rather than the specific functions it must perform.

79. What Do You Understand by Function Point?

A function point is a metric that expresses how much business functionality an information system provides to a user. The metric provides a consistent technique for evaluating a software program's functionality from the user's perspective.

This measurement is based on what the user demands and receives in return, with an emphasis on the functionality provided rather than the technical details of the implementation.
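As an illustration of how function points are typically counted, the sketch below follows the general IFPUG approach using the average-complexity weights (external inputs 4, external outputs 5, external inquiries 4, internal logical files 10, external interface files 7) and the traditional value adjustment factor. The component counts in `main` are made up, and a real count rates each function as low, average, or high complexity rather than assuming average.

```java
import java.util.Arrays;

class FunctionPointCalculator {
    // IFPUG average-complexity weights for each component type.
    static int unadjustedFunctionPoints(int externalInputs, int externalOutputs,
                                        int externalInquiries, int internalLogicalFiles,
                                        int externalInterfaceFiles) {
        return externalInputs * 4
                + externalOutputs * 5
                + externalInquiries * 4
                + internalLogicalFiles * 10
                + externalInterfaceFiles * 7;
    }

    // Value adjustment factor: 0.65 + 0.01 * (sum of 14 general system
    // characteristics, each rated 0 to 5), giving a range of 0.65 to 1.35.
    static double valueAdjustmentFactor(int[] ratings) {
        if (ratings.length != 14) {
            throw new IllegalArgumentException("expected 14 ratings");
        }
        int total = 0;
        for (int r : ratings) {
            if (r < 0 || r > 5) {
                throw new IllegalArgumentException("each rating must be 0 to 5");
            }
            total += r;
        }
        return 0.65 + 0.01 * total;
    }

    // Adjusted function points = UFP * VAF.
    static double adjustedFunctionPoints(int ufp, double vaf) {
        return ufp * vaf;
    }

    public static void main(String[] args) {
        // Hypothetical counts for a small system.
        int ufp = unadjustedFunctionPoints(10, 8, 5, 4, 2); // 40 + 40 + 20 + 40 + 14 = 154
        int[] ratings = new int[14];
        Arrays.fill(ratings, 3); // every characteristic rated "average": sum = 42
        double vaf = valueAdjustmentFactor(ratings); // 0.65 + 0.42 = 1.07
        System.out.printf("UFP = %d, VAF = %.2f, FP = %.2f%n",
                ufp, vaf, adjustedFunctionPoints(ufp, vaf));
    }
}
```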

80. State the Difference Between Fixed Website Design and Fluid Website Design

Fixed website designs use fixed pixel widths, which makes them simpler to build and maintain; however, they are less user-friendly. Their layout has a fixed width that does not change with the screen or browser window size.

This means the design does not adapt to different screen sizes or resolutions: it may leave large empty margins on wide screens, and users may have to scroll horizontally to see the content on smaller screens.

Fluid websites, in contrast, use percentages (relative units) for widths. This allows the content to expand or contract to fit the screen, resulting in a more adaptable and user-friendly interface.

However, building a fluid layout can be more difficult and requires careful consideration of the content and how it will adapt to different screen sizes.

81. Name Some Design Patterns

Design patterns are reusable solutions for common software design issues, making them an essential topic in software engineering interview questions.

Here are some common design patterns:

- Singleton design pattern

- Factory design pattern

- Observer design pattern

- Strategy design pattern


82. What Is a Singleton Pattern?

The singleton pattern ensures that a class has only one instance and provides a global point of access to that instance. This is beneficial in situations where only one object is required to coordinate actions throughout the system, such as logging, configuration settings, or connection pooling.

The design typically includes a private constructor to prevent direct instantiation, a static method for accessing the instance, and a static variable that holds the sole instance.

Understanding design patterns like the singleton is important for developers, as they enhance the ability to create efficient and maintainable code.

Consequently, questions about these patterns often appear in software engineering interviews, since they reveal a candidate's grasp of software architecture principles and ability to solve common design challenges.
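A minimal Java sketch matching the structure described above: a private constructor, a static variable holding the sole instance, and a static access method. `AppConfig` is a hypothetical example class.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical configuration holder implemented as a singleton.
final class AppConfig {
    // Static variable holding the sole instance. The JVM initializes it exactly once,
    // during class initialization, so this eager form is thread-safe.
    private static final AppConfig INSTANCE = new AppConfig();

    private final Map<String, String> settings = new ConcurrentHashMap<>();

    // Private constructor: no other class can call new AppConfig().
    private AppConfig() {
    }

    // Static access method: the global point of access to the instance.
    static AppConfig getInstance() {
        return INSTANCE;
    }

    void set(String key, String value) {
        settings.put(key, value);
    }

    String get(String key) {
        return settings.get(key);
    }
}
```

Eager initialization is the simplest thread-safe variant in Java. Lazy alternatives, such as the initialization-on-demand holder idiom or a single-element `enum`, are also common.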

83. What Is the Factory Pattern Used for?

The factory pattern is used to create objects without specifying the specific class of object to be created. It provides a means to encapsulate the instantiation logic, making the code more flexible and scalable. This technique is especially useful when the kind of object to be produced is determined at runtime.

Understanding design patterns like the factory pattern is essential for developers, as it helps in creating more flexible and maintainable code by decoupling object creation from its usage. This concept is often discussed in software engineering interview questions to assess a candidate's knowledge of object-oriented design principles.
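The sketch below shows a simple factory in Java: callers request a `Notifier` by channel name and never reference the concrete classes directly. All type names here are hypothetical.

```java
import java.util.Locale;

// Product interface: callers depend only on this type.
interface Notifier {
    String send(String message);
}

class EmailNotifier implements Notifier {
    @Override
    public String send(String message) {
        return "Email: " + message;
    }
}

class SmsNotifier implements Notifier {
    @Override
    public String send(String message) {
        return "SMS: " + message;
    }
}

class NotifierFactory {
    // The concrete class is chosen at runtime from a string, e.g. read from configuration.
    static Notifier create(String channel) {
        switch (channel.toLowerCase(Locale.ROOT)) {
            case "email":
                return new EmailNotifier();
            case "sms":
                return new SmsNotifier();
            default:
                throw new IllegalArgumentException("Unknown channel: " + channel);
        }
    }

    public static void main(String[] args) {
        Notifier notifier = NotifierFactory.create("sms");
        System.out.println(notifier.send("Build passed")); // SMS: Build passed
    }
}
```

Adding a new channel means adding one class and one `case`; code that calls `create` does not change.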

84. Explain Bottom-Up and Top-Down Design Models

The top-down design model provides an overview of the system without delving into the details of its components. Each component is subsequently refined, defining it with increasing depth until the overall specification is thorough enough to validate the model.

In contrast, the bottom-up design model specifies various system components in detail. These components are then integrated to form larger components, which are linked together until a complete system is created. Object-oriented languages, such as C++ or Java, often utilize a bottom-up approach, starting by identifying each object first.

Understanding these design models is essential for developers, as it helps in selecting the appropriate approach for system development and optimizing the design process.

This question is frequently covered in software engineering interview questions, as it illustrates different methodologies for system architecture and design.

85. What Do You Understand by WBS?

A Work Breakdown Structure (WBS) is a project management method for breaking down large and complex projects into smaller, more manageable, and independent tasks. It uses a top-down approach in which each node is systematically broken into smaller sub-activities until the resulting tasks cannot be meaningfully divided further and can be carried out independently.

This hierarchical structure makes it easier to organize and manage the project by offering a clear overview of all tasks and their relationships.
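A WBS is naturally a tree, so it can be sketched in Java as a recursive node type whose leaves are the work packages. The `WbsNode` class and the sample project are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical WBS node: a deliverable or task that may be decomposed further.
class WbsNode {
    final String name;
    final List<WbsNode> children = new ArrayList<>();

    WbsNode(String name) {
        this.name = name;
    }

    // Adds a sub-activity and returns this node so calls can be chained.
    WbsNode add(WbsNode child) {
        children.add(child);
        return this;
    }

    // Leaf nodes are the work packages: tasks that are not broken down further.
    List<String> workPackages() {
        List<String> out = new ArrayList<>();
        collectLeaves(this, out);
        return out;
    }

    private static void collectLeaves(WbsNode node, List<String> out) {
        if (node.children.isEmpty()) {
            out.add(node.name);
            return;
        }
        for (WbsNode child : node.children) {
            collectLeaves(child, out);
        }
    }

    // Prints the hierarchy with one indentation level per depth.
    void print(int depth) {
        System.out.println("  ".repeat(depth) + name);
        for (WbsNode child : children) {
            child.print(depth + 1);
        }
    }

    public static void main(String[] args) {
        WbsNode project = new WbsNode("Website")
                .add(new WbsNode("Design")
                        .add(new WbsNode("Wireframes"))
                        .add(new WbsNode("Visual design")))
                .add(new WbsNode("Development")
                        .add(new WbsNode("Frontend"))
                        .add(new WbsNode("Backend")))
                .add(new WbsNode("Testing"));
        project.print(0);
        System.out.println(project.workPackages());
        // [Wireframes, Visual design, Frontend, Backend, Testing]
    }
}
```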

86. Define a System Context Diagram (SCD)

An SCD is a diagram that depicts the boundary between the system under development and its external environment. It defines the data boundary and demonstrates how the system communicates with external entities.

The SCD describes all external producers, external consumers, and entities that connect via the customer interface, giving a comprehensive picture of how the system interacts with its surroundings. This aids in comprehending the scope of the system and identifying critical interfaces and data flows.

87. Explain COCOMO Model

The Constructive Cost Model (COCOMO) estimates the effort, time, and cost of developing software by taking into account project size, complexity, required software reliability, team experience, and the development environment.

COCOMO produces its estimates by applying mathematical formulas to the size of the software project, which is commonly measured in thousands of lines of code (KLOC). The model helps predict the effort and schedule of a software project and is widely used for project planning and management.
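As a worked sketch, the Basic COCOMO variant (Boehm, 1981) uses only size: effort in person-months is a × KLOC^b, and development time in months is c × effort^d, with published coefficients for organic, semi-detached, and embedded projects. The factors listed above, such as reliability and team experience, belong to Intermediate COCOMO, which multiplies the effort by an adjustment factor; that step is left out here. The 32 KLOC input is a hypothetical example.

```python
# Basic COCOMO coefficients (a, b, c, d) per project mode, from Boehm (1981).
COEFFICIENTS = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}

def basic_cocomo(kloc: float, mode: str = "organic") -> dict[str, float]:
    """Estimate effort, schedule, and average staffing from size in KLOC."""
    a, b, c, d = COEFFICIENTS[mode]
    effort = a * kloc ** b        # person-months
    duration = c * effort ** d    # calendar months
    return {
        "effort_pm": effort,
        "duration_months": duration,
        "avg_staff": effort / duration,
    }

estimate = basic_cocomo(32, "organic")
print({key: round(value, 1) for key, value in estimate.items()})
```

For 32 KLOC in organic mode, this gives roughly 91 person-months spread over about 14 months.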

88. What Do You Understand by Blocking Calls?

Blocking calls are operations that prevent further code from executing until a specific task completes. JavaScript runs on a single-threaded event loop, so most of its I/O APIs are non-blocking by design, although synchronous APIs such as fs.readFileSync in Node.js do block.

In languages such as Java or Python, many standard calls for network requests or file I/O are blocking: the program waits for the operation to finish before proceeding. Asynchronous programming favors non-blocking calls to avoid this kind of halt in execution.
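A minimal Python sketch of blocking behavior, using time.sleep as a stand-in for a blocking network or file I/O call: each call to the hypothetical fetch function holds up the program until it returns, so three 0.2-second calls take about 0.6 seconds in total.

```python
import time

def fetch(delay: float) -> str:
    # time.sleep stands in for a blocking network or file I/O call.
    time.sleep(delay)
    return f"done after {delay}s"

start = time.perf_counter()
results = [fetch(0.2), fetch(0.2), fetch(0.2)]  # each call waits for the previous one
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.1f}s")  # elapsed is about 0.6 s
```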

89. What Is Asynchronous Programming?

Asynchronous programming allows tasks to run independently of one another, resulting in non-blocking execution. While one task waits for a response (such as a network request), other tasks can continue to run.

This approach improves application responsiveness and resource utilization, which makes it especially useful for I/O-bound operations and scenarios that require high concurrency.
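The sketch below uses Python's asyncio to run three simulated I/O waits concurrently; asyncio.sleep stands in for a real non-blocking request, and fetch is a hypothetical name. Because each coroutine yields to the event loop while it waits, the three 0.2-second waits overlap and finish in roughly 0.2 seconds rather than 0.6.

```python
import asyncio
import time

async def fetch(delay: float) -> str:
    # asyncio.sleep is non-blocking: it yields control to the event loop while waiting.
    await asyncio.sleep(delay)
    return f"done after {delay}s"

async def main() -> list[str]:
    # gather schedules all three coroutines concurrently and collects their results in order.
    return await asyncio.gather(fetch(0.2), fetch(0.2), fetch(0.2))

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.1f}s")  # elapsed is roughly 0.2 s
```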

90. State the Purpose of Testing in the SDLC

Testing is a key part of the SDLC that helps ensure software quality. Its main purposes are:

- Bug Detection and Prevention: It detects defects and issues in software before it is deployed, avoiding potential failures.

- Quality Assurance: It ensures that the program performs as intended and satisfies user expectations.

- Cost Efficiency: Early bug discovery and resolution are less expensive than correcting issues after launch, saving time and money.

91. What Are the Steps Involved in STLC?

The Software Testing Life Cycle (STLC) consists of six phases, each of which supports thorough testing and quality assurance throughout the development process.

Below are the key steps followed in a software testing life cycle:

- Test Requirement Analysis: Review what needs to be tested.

- Test Planning: Create a plan that specifies the scope, objectives, resources, and testing methodologies.

- Test Design: Create test cases and test scripts based on requirements.

- Test Environment Setup: Prepare the testing environment.

- Test Execution: Run the test cases.

- Test Closure: Complete testing tasks and create a test summary report.

By Sathwik Prabhus

92. What Is Regression Testing?

Regression testing is a form of software testing that verifies that recent changes to the code have not broken existing functionality. It involves rerunning all, or a carefully selected subset, of the test cases that were executed previously.

If these previously passing tests still pass, the new code changes have had no adverse effect on the existing functionality.
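As a minimal sketch, assuming a hypothetical apply_discount function and a handful of recorded cases that passed before a change, a regression run replays those cases against the current code and reports any that no longer match. The buggy_discount variant simulates a change that forgot to convert the percentage, which the replay catches.

```python
def apply_discount(price: float, percent: float) -> float:
    """Current (correct) implementation under test."""
    return round(price * (1 - percent / 100), 2)

def buggy_discount(price: float, percent: float) -> float:
    """Simulated faulty change: the percentage is no longer divided by 100."""
    return round(price * (1 - percent), 2)

# (arguments, expected result) pairs recorded from earlier passing runs
REGRESSION_CASES = [((100.0, 10), 90.0), ((59.99, 0), 59.99), ((20.0, 50), 10.0)]

def run_regression_suite(func, cases):
    """Rerun previously passing cases and return those that now fail."""
    failures = []
    for args, expected in cases:
        actual = func(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures

print(run_regression_suite(apply_discount, REGRESSION_CASES))  # []
print(run_regression_suite(buggy_discount, REGRESSION_CASES))
```

In a real project, a test framework such as JUnit or pytest plays the role of run_regression_suite, and the suite runs automatically after every change.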

93. Regression Testing Is Primarily Related to Which Testing?

Regression testing is primarily related to maintenance testing since it is performed to ensure that changes or updates in the software do not negatively affect its existing functionalities.

94. How Will You Define Maintenance?

Maintenance refers to the process of modifying a software system after it has been delivered to correct faults, improve performance, or adapt the system to a changed environment. It includes activities such as bug fixes, performance enhancements, and updating software to accommodate changes in hardware, operating systems, or other external systems.

95. What Are Several Types of Software Maintenance?

The types of software maintenance are as follows:

- Corrective Maintenance: This involves fixing software flaws and problems to guarantee that the system functions properly.

- Adaptive Maintenance: This modifies the program to meet changes in the environment, such as new operating systems or equipment.

- Perfective Maintenance: This involves enhancing or modifying the system to meet new requirements or to improve performance.

- Preventive Maintenance: This involves changes that improve future maintainability and help avoid potential problems.

96. What Do You Understand by Coupling?

In software engineering, coupling is the degree of interdependence between software units, and it is a frequent topic in software engineering interview questions. It measures how closely connected the modules within a system are.

Lower coupling is generally preferred because it means that changes in one module are less likely to require changes in another, making the system more adaptable and easier to manage.

High coupling, on the other hand, suggests that modules are tightly connected, making the system more complex and difficult to adapt.
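A minimal sketch of the difference, using hypothetical mailer and order-service classes: the tightly coupled service constructs its own concrete dependency, while the loosely coupled one receives any object with a send method (dependency injection), so a fake can be swapped in without touching the service.

```python
from typing import Protocol

class Mailer(Protocol):
    """Interface the loosely coupled service depends on."""
    def send(self, to: str, body: str) -> str: ...

class SmtpMailer:
    def send(self, to: str, body: str) -> str:
        return f"SMTP to {to}: {body}"

class FakeMailer:
    def send(self, to: str, body: str) -> str:
        return f"FAKE to {to}: {body}"

# High coupling: the concrete SmtpMailer is hard-wired into the class,
# so changing or testing the mailer means editing this class.
class TightOrderService:
    def __init__(self) -> None:
        self.mailer = SmtpMailer()

    def confirm(self, email: str) -> str:
        return self.mailer.send(email, "Order confirmed")

# Low coupling: the mailer is injected, and only the Mailer interface matters.
class LooseOrderService:
    def __init__(self, mailer: Mailer) -> None:
        self.mailer = mailer

    def confirm(self, email: str) -> str:
        return self.mailer.send(email, "Order confirmed")

print(TightOrderService().confirm("ana@example.com"))
print(LooseOrderService(FakeMailer()).confirm("ana@example.com"))
```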

97. What Is Stamp Coupling?

Stamp coupling occurs when a composite data structure, such as a record or object, is passed through a module interface even though the receiving module uses only part of it. Passing the whole structure instead of just the simple data items that are needed creates unnecessary dependencies between components. This concept is often addressed in software engineering interview questions because it highlights the need for modularity and low coupling in system design.
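A minimal sketch with a hypothetical Employee record and a hypothetical 10% bonus rule: the first function receives the whole record but reads only the salary (stamp coupling), while the second receives just the value it needs (data coupling, the looser alternative).

```python
from dataclasses import dataclass

@dataclass
class Employee:
    name: str
    address: str
    salary: float

# Stamp coupling: the whole record is passed although only salary is used,
# so changes to Employee's structure can ripple into this function.
def annual_bonus_stamp(employee: Employee) -> float:
    return employee.salary * 0.10

# Data coupling: only the elementary value actually needed is passed.
def annual_bonus_data(salary: float) -> float:
    return salary * 0.10

staff = Employee("Ana", "12 Main St", 50_000.0)
print(annual_bonus_stamp(staff), annual_bonus_data(staff.salary))
```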

98. What Is Common Coupling?

Common coupling occurs when multiple modules have access to the same global data area, making the system more complex to comprehend and maintain. Changes to the global data can affect all modules that reference it.

This concept often appears in software engineering interview questions because it highlights the importance of managing dependencies to improve modularity and maintainability.
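In this minimal sketch, a hypothetical module-level settings dictionary plays the role of the global data area: price_with_tax reads it and enable_tax_holiday writes it, so a change made in one place silently alters the other's results. Passing the rate in as a parameter, as price_with_tax_explicit does, removes that shared dependency.

```python
# Global data area shared by several functions (common coupling).
settings = {"tax_rate": 0.2}

def price_with_tax(amount: float) -> float:
    return amount * (1 + settings["tax_rate"])

def enable_tax_holiday() -> None:
    # Any module can modify the shared value, affecting every reader of it.
    settings["tax_rate"] = 0.0

# Less coupled alternative: the dependency is explicit in the signature.
def price_with_tax_explicit(amount: float, tax_rate: float) -> float:
    return amount * (1 + tax_rate)

print(price_with_tax(100))   # 120.0
enable_tax_holiday()
print(price_with_tax(100))   # 100.0
```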

99. Define Cohesion

In software engineering, cohesion is a measure of how closely the elements of a module are related to one another. High cohesion means that a module performs one task or a set of closely related tasks, making it simpler to understand, maintain, and reuse.

High cohesion is crucial because it improves the modularity and quality of software, which is why it is frequently addressed in software engineering interview questions to evaluate a candidate's understanding of effective design principles.
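As a hedged illustration with hypothetical class names, the first class below bundles unrelated helpers (low cohesion), while the second keeps only operations that serve a single purpose, formatting and parsing currency strings (high cohesion).

```python
# Low cohesion: unrelated responsibilities grouped into one "utility" class.
class Utils:
    def format_currency(self, amount: float) -> str:
        return f"${amount:,.2f}"

    def is_leap_year(self, year: int) -> bool:
        return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

    def word_count(self, text: str) -> int:
        return len(text.split())

# High cohesion: every method contributes to one task, handling money strings.
class CurrencyFormatter:
    def __init__(self, symbol: str = "$") -> None:
        self.symbol = symbol

    def format(self, amount: float) -> str:
        return f"{self.symbol}{amount:,.2f}"

    def parse(self, text: str) -> float:
        return float(text.replace(self.symbol, "").replace(",", ""))

formatter = CurrencyFormatter()
print(formatter.format(1234.5))        # $1,234.50
print(formatter.parse("$1,234.50"))    # 1234.5
```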

100. What Is Temporal Cohesion?

Temporal cohesion occurs when a module groups tasks that are related only because they must be performed within the same time frame, such as during system startup.

This type of cohesion is based on when tasks run rather than on any functional relationship between them. It is an important concept that is often explored in software engineering interview questions to assess a candidate's understanding of module design and organization.
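A minimal sketch of temporal cohesion, with a hypothetical initialize function and state dictionary: the steps are grouped only because they all run at startup, not because they contribute to one functional task.

```python
def initialize(app_state: dict) -> dict:
    """Startup routine: the steps are related only by when they run."""
    app_state["log"] = []                     # set up logging
    app_state["counters"] = {"requests": 0}   # reset usage counters
    app_state["cache"] = {}                   # clear the cache
    app_state["log"].append("startup complete")
    return app_state

state = initialize({})
print(state)
```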

101. State the Difference Between Coupling and Cohesion

Coupling refers to the degree of interdependence between software modules, with lower coupling indicating less reliance on one another, making the system easier to maintain.

In contrast, cohesion measures how closely related the functions within a module are, with higher cohesion suggesting that a module performs a specific task effectively and independently.

Below are the differences between coupling and cohesion.

| Basis | Coupling | Cohesion |
|---|---|---|
| Definition | Refers to the level of interdependence between software modules. | Refers to the degree to which all the elements of a module fit together. |
| Focus | Measures how closely connected modules are within a system. | Measures a module’s functional strength. |
| Desirability | Low coupling is desirable. | High cohesion is desirable. |
| Example | Low coupling occurs when two modules communicate via well-defined interfaces and have few dependencies. | High cohesion occurs when a module handles all user authentication processes. |

102. Cohesion Is an Extension of Which Concept?

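Cohesion is a natural extension of the information hiding concept. It measures how closely related and focused the responsibilities of a single module are: a cohesive module performs a single task and needs little interaction with components in other parts of the program, which also makes it easier to maintain.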

It is an important concept in software design that is often explored in software engineering interview questions, as high cohesion leads to better modularization and code quality.

103. In Modular Software Design, Which Combination Is Considered for Cohesion and Coupling?

In modular software design, high cohesion and low coupling are essential for creating maintainable and adaptable systems.

This combination not only enhances code readability and reusability but is also a common topic in software engineering interview questions, as it reflects key principles of effective software architecture.

104. What Are Metrics?

Metrics are quantitative measures that indicate the degree to which a system, component, or process possesses a given attribute. They enable objective analysis of various aspects of software development, including performance, quality, efficiency, and reliability.

By measuring progress and identifying areas for improvement, metrics play a crucial role in informed decision-making throughout the SDLC and are often discussed in software engineering interview questions to evaluate a candidate's understanding of project assessment and management.
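As a small worked example of one widely used quality metric, defect density expresses the number of defects per thousand lines of code (KLOC). The figures below are hypothetical.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)

# Hypothetical release: 30 defects found in 15,000 lines of code.
print(defect_density(30, 15_000))  # 2.0
```

Tracking a value like this across releases shows whether quality is trending up or down, which is the kind of objective comparison metrics are meant to support.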

105. What Do You Understand About ERD?

An Entity-Relationship Diagram (ERD) is a graphical representation of a database design that illustrates how entities are related to one another. An ERD depicts the structure of the data, helping to organize data requirements and create a proper database design in accordance with business rules.

Essentially, ERDs present entities (tables) and their relationships, such as one-to-many or many-to-many, providing a clear overview of the logical structure of the database.

Understanding ERDs is important for candidates, as they frequently appear in software engineering interview questions and reveal a candidate's grasp of data modeling concepts.
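As a minimal sketch, assuming a hypothetical customer and orders model, the one-to-many relationship shown in an ERD maps to two tables linked by a foreign key. The example uses Python's built-in sqlite3 module with an in-memory database; with foreign-key enforcement turned on, an order cannot reference a customer that does not exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# One customer can have many orders: orders.customer_id points to customer.id.
conn.executescript("""
CREATE TABLE customer (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(id),
    total       REAL NOT NULL
);
""")

conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Ana')")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)", [(1, 20.0), (1, 35.5)]
)
order_count = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE customer_id = 1"
).fetchone()[0]

rejected = False
try:
    conn.execute("INSERT INTO orders (customer_id, total) VALUES (99, 1.0)")
except sqlite3.IntegrityError:
    rejected = True  # no customer with id 99 exists

print(order_count, rejected)
```

A many-to-many relationship would add a third, junction table holding a foreign key to each side.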

106. Mention Some Good Practices for Writing Clean and Maintainable Code

Writing clean and maintainable code is essential for long-term project success. Here are some key practices to follow:

- Meaningful Naming: Use descriptive names for variables, functions, and classes to enhance readability and understanding.

- Keep Functions Short and Focused: Ensure each function performs a single task. This adheres to the Single Responsibility Principle, making your code more testable and maintainable.

- Consistent Formatting: Apply standard formatting conventions throughout the codebase to maintain consistency.

- Comment Where Necessary: While code should ideally be self-explanatory, comments can clarify complex logic or critical decisions. Avoid redundant comments that do not add value.
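A short before-and-after sketch, using a hypothetical order-total calculation, applies the naming and single-responsibility practices above; both versions return the same result.

```python
# Before: vague names, and one function filters, computes, and formats.
def proc(d):
    t = 0
    for x in d:
        if x["qty"] > 0:
            t += x["qty"] * x["price"]
    return f"Total: {t:.2f}"

# After: descriptive names, and each function has a single responsibility.
def line_total(item: dict) -> float:
    return item["qty"] * item["price"]

def order_total(items: list[dict]) -> float:
    return sum(line_total(item) for item in items if item["qty"] > 0)

def format_total(total: float) -> str:
    return f"Total: {total:.2f}"

cart = [{"qty": 2, "price": 1.5}, {"qty": 0, "price": 9.0}]
print(format_total(order_total(cart)))  # Total: 3.00
```

Splitting the logic this way also lets line_total and order_total be unit-tested without going through string formatting.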

107. Define Risk Management

Risk management is a crucial concept in project management and software engineering, involving the detection, evaluation, prioritization, and mitigation of risks to minimize their impact on project goals.

Understanding risk management is essential for software engineers and testers, as it helps them identify potential issues early, implement effective mitigations, and keep the project on track. This topic often appears in software engineering interview questions because it reflects a candidate's ability to handle project challenges effectively.
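One common quantitative way to prioritize risks is risk exposure: the probability of a risk occurring multiplied by its estimated impact. The sketch below applies that to a few hypothetical risks and sorts them so the highest exposure is addressed first; the names and numbers are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    description: str
    probability: float  # likelihood of occurring, between 0 and 1
    impact: float       # estimated loss, e.g. in person-days

    @property
    def exposure(self) -> float:
        return self.probability * self.impact

def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks so the one with the highest exposure comes first."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)

risks = [
    Risk("Key developer leaves", 0.1, 40),
    Risk("Third-party API changes", 0.5, 10),
    Risk("Requirements creep", 0.3, 20),
]
for risk in prioritize(risks):
    print(f"{risk.description}: {risk.exposure:.1f}")
```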

108. What Do You Understand About Continuous Integration (CI)?

Continuous Integration (CI) is a software development method in which developers regularly integrate code changes into a shared repository. Each integration is automatically validated by performing automated builds and tests to identify integration errors as soon as possible.

The primary goals of CI are to improve software quality, reduce integration issues, and speed up the delivery of new features and bug fixes. CI frequently appears in software engineering interview questions because it reflects a candidate's familiarity with modern development practices.

109. Mention Some Software Analysis and Design Tools

Here are some common software analysis and design tools:

- Data Flow Diagrams

- Unified Modeling Language (UML)

- Entity-Relationship Diagrams (ERD)

- Flowcharts

- Structure Charts

- Pseudo-Code

- HIPO Diagrams (Hierarchy plus Input-Process-Output)

Familiarity with these tools is important for software engineers, as they help visualize and structure software systems effectively. They often come up in software engineering interview questions as a way to gauge a candidate's grasp of the software development process.

110. How Will You Optimize the SQL Query?