ChatGPT’s launch was revolutionary, quickly attracting over a million users in just five days. This AI-driven chatbot, known for its ability to understand and respond to natural language queries, has shown immense versatility. From crafting poems and movie scripts to generating social media content and writing essays, ChatGPT’s capabilities are vast. Recognizing this potential, our team set out to explore how ChatGPT could be a game-changer in the realm of automation testing, particularly for web and mobile app testing.

In this blog post, we delve into how ChatGPT can be utilized for test automation. Our journey began with test case generation. ChatGPT demonstrated its proficiency in creating detailed test scenarios for various applications, including functionality testing for Google and evaluating login forms. However, ChatGPT’s functionality in test automation extends far beyond creating test cases. This powerful tool is also adept at writing code in multiple programming languages, supporting various popular test automation frameworks across different technologies.

In this blog post, we’re excited to share our detailed experience developing an automation testing pipeline using ChatGPT. For those new to this technology, ChatGPT is an advanced chatbot built using the GPT-3.5 framework. It’s designed to interact and respond in a way that mimics human conversation, making it a valuable tool in various applications, including automation testing.

Let’s dive in!

TABLE OF CONTENTS

- What is ChatGPT?

- How to create test cases using ChatGPT?

- Unit test generation with ChatGPT

- Utilizing ChatGPT for code adjustment and troubleshooting

- Starting with a simple Automation Test Script using ChatGPT

- Let’s up the stakes: ChatGPT for a complex Automation Test Case

- Adding Negative Test Cases with ChatGPT

- Let’s Mix Positive and Negative Test Cases in Cucumber

- It’s time to Playwright!

- What if we want to Run The Test on LambdaTest Cloud?

- Can we do this for every Microservice?

- Verify Test Entity Creation in the Database

- Refining the MySQL Touch with ChatGPT

- Let’s add Resiliency to the Code

- Make it more Secure through Environment Variables

- Schedule a GitHub Action and fetch Test Results with LambdaTest API

- A Package.json file with the above steps

- Let’s put all of this on the GitHub Repo

- A Bash Script to run everything locally on Mac

- Now finally let’s modify the Cucumber Definitions

- What we learned when we created Automation Tests with ChatGPT

- Future of testing with AI: Will it replace testing teams?

What is ChatGPT?

ChatGPT is a chatbot developed by OpenAI, released on November 30, 2022. This advanced chatbot is based on the latest version of OpenAI’s language model, GPT-3.5.

ChatGPT is designed to give clear and well-thought-out answers to various questions.

The key technology behind ChatGPT is the GPT-3.5 language model. This model is a type of auto-regressive language model. It works by predicting the next words in a sentence using the context of the preceding words. It employs the most current techniques in natural language processing and deep learning. This makes the chatbot’s responses so refined that they often seem like they were written by humans.

If you’re looking for ready-to-use prompt ideas to accelerate your testing tasks, check out these ChatGPT prompts for software testing that can help you get started instantly.

For more understanding, especially on how ChatGPT, based on GPT-3.5, can be utilized in Test Automation, watch the following video.

How to create test cases using ChatGPT?

ChatGPT is an AI-driven language model that can assist in creating test cases and scripts for automated testing. It’s a tool to support, not replace, human testers, enhancing the speed and efficiency of the testing process.

For example, here’s a simplified guide on using ChatGPT to generate test cases and Selenium test scripts:

1. Define the Test Scenario: First, decide what you want to test, like a user action or a specific function in your application. For instance, if you’re testing a website’s search feature, outline the key steps for this function.

2. Select a Programming Language: Selenium supports various languages like Java, Python, and C#. Choose one that suits your skills or your team’s expertise.

3. Prepare Your Development Environment: Install Selenium WebDriver and the bindings for your chosen programming language. This setup allows you to automate browser interactions in your tests.

4. Input Details into ChatGPT: Approach ChatGPT with your test scenario. For example, you might say, “I need to test a website’s search feature. Can you suggest some test cases?”

5. Receive Test Cases from ChatGPT: ChatGPT will process your request and provide relevant test cases. A typical test case for a search function might include navigating to the search page, entering a query, clicking the search button, and verifying the results. Here’s an example of the same:

|

1 2 3 4 5 6 |

Test case 1: 1. Navigate to the search page 2. Enter a search query 3. Click the search button 4. Verify that the search results page is displayed 5. Verify that the search query is displayed in the search results |

6. Transform Test Cases into Selenium Scripts: Use ChatGPT’s suggestions to write a Selenium script in your chosen language. For instance, a Java script for the provided test case would involve coding steps to open the browser, perform the search actions, and check the results. Here’s an example of a Selenium Java test script for the test case generated above:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 |

import org.openqa.selenium.By; import org.openqa.selenium.WebDriver; import org.openqa.selenium.WebElement; import org.openqa.selenium.chrome.ChromeDriver; public class SearchTest { public static void main(String[] args) { // Set the path to the chromedriver executable System.setProperty("webdriver.chrome.driver", "path/to/chromedriver"); // Create a new instance of the ChromeDriver WebDriver driver = new ChromeDriver(); // Navigate to the search page driver.get("https://example.com/search"); // Enter a search query WebElement searchField = driver.findElement(By.name("q")); searchField.sendKeys("example query"); // Click the search button WebElement searchButton = driver.findElement(By.xpath("//button[@type='submit']")); searchButton.click(); // Verify that the search results page is displayed WebElement searchResultsPage = driver.findElement(By.xpath("//h1[contains(text(), 'Search Results')]")); assert searchResultsPage.isDisplayed(); // Verify that the search query is displayed in the search results WebElement searchQuery = driver.findElement(By.xpath("//div[contains(text(), 'example query')]")); assert searchQuery.isDisplayed(); // Close the browser driver.quit(); } } |

7. Execute the Test Script: Run your script to check whether it passes or identifies any issues.

Incorporating ChatGPT in test case generation can streamline your testing workflow. Yet, it’s crucial to review the AI-generated cases to ensure they meet your testing needs accurately and comprehensively.

Unit test generation with ChatGPT

Creating automatic unit tests in a specific programming language is a valuable skill. Using tools like ChatGPT, teams can efficiently generate code in various languages, including Python and JavaScript. This approach is particularly useful for those involved in software testing, as it can significantly reduce the time spent on developing test cases. For example:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

# Import the unittest module and the function to be tested import unittest from my_math_module import add_numbers # Define a test class inheriting from unittest.TestCase class TestAddNumbers(unittest.TestCase): def test_add_positive_numbers(self): """Test the addition of two positive numbers.""" result = add_numbers(10, 15) self.assertEqual(result, 25) def test_add_negative_numbers(self): """Test the addition of two negative numbers.""" result = add_numbers(-10, -5) self.assertEqual(result, -15) def test_add_zero(self): """Test the addition of a number and zero.""" result = add_numbers(5, 0) self.assertEqual(result, 5) # Run the tests if the module is executed as a script if __name__ == '__main__': unittest.main() |

In this example, three tests are defined:

1. “test_add_positive_numbers” checks if the function correctly adds two positive numbers.

2. “test_add_negative_numbers” ensures that adding two negative numbers works as expected.

3. “test_add_zero verifies” the function’s behavior when one of the numbers is zero.

Utilizing ChatGPT for code adjustment and troubleshooting

In typical testing scenarios, specific test values like the URL, username, and password are often directly embedded in the code. However, in real-world applications, these details, such as “http://www.example.com/login” or common username and password combinations like “username” and “password,” may not always apply. A more flexible approach involves using Cucumber, a tool that inserts variables directly from feature files into your test scenarios.

Sometimes, though, you might find the format of these variables challenging to understand, or you may not have the time to update your entire codebase manually. In such instances, ChatGPT emerges as a practical solution. You can leverage its capabilities to modify and rectify your code, saving time and effort in adapting test scenarios to more realistic and varied inputs.

Starting with a simple Automation Test Script using ChatGPT

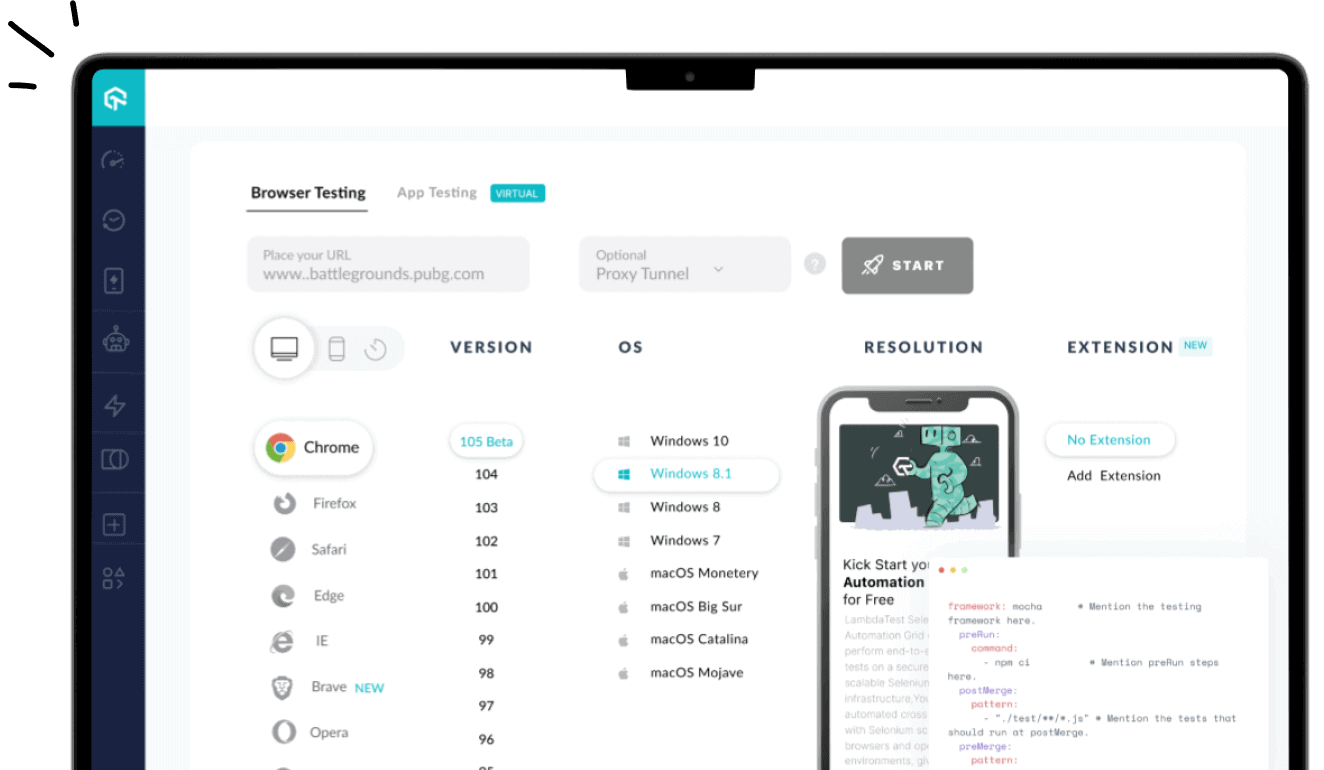

We started with a very simple prompt. Let’s create a simple code using Selenium with Java that can open Google.com and print out its Title. And most importantly, the code should be able to run on the LambdaTest cloud Selenium Grid. So we opened out the platform and typed in our first question.

Note

NoteRun Selenium testing on cloud with LambdaTest. Execute Selenium scripts in parallel on multiple configurations, simultaneously with LambdaTest. Try LambdaTest Today!

Q: Write a Selenium and Java code to open google.com and print its title. The code should run on LambdaTest

ChatGPT: To write a Selenium test in Java to open Google.com and print its title, which will run on LambdaTest, you can use the following code:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 |

import org.openqa.selenium.WebDriver; import org.openqa.selenium.chrome.ChromeDriver; import org.openqa.selenium.remote.RemoteWebDriver; import org.openqa.selenium.remote.DesiredCapabilities; public class Test { public static void main(String[] args) { // Set your LambdaTest username and access key String username = "YOUR_USERNAME"; String accessKey = "YOUR_ACCESS_KEY"; // Set the desired browser and operating system DesiredCapabilities caps = new DesiredCapabilities(); caps.setCapability("browserName", "chrome"); caps.setCapability("version", "80.0"); caps.setCapability("platform", "WIN10"); // Create a webdriver instance using the LambdaTest grid WebDriver driver = new RemoteWebDriver( new java.net.URL("https://" + username + ":" + accessKey + "@hub.lambdatest.com/wd/hub"), caps ); // Open Google.com driver.get("https://www.google.com"); // Get the title of the page String title = driver.getTitle(); // Print the title System.out.println(title); // Close the webdriver instance driver.quit(); } } |

This test will open Google.com in a Chrome browser on a Windows 10 operating system, get the title of the page, and print it to the console. To run the test on LambdaTest, ensure you have the Selenium Java library installed, and then compile and run the code using a Java compiler such as javac and java. The test will be executed on the LambdaTest grid, and the results will be displayed in your LambdaTest Dashboard.

The above response shows that the platform automatically added complex LambdaTest capabilities like hub URL, desired capabilities, and the prompt for adding access keys and user names. All of which is required to run the test at LambdaTest.

Let’s up the stakes: ChatGPT for a complex Automation Test Case

Now we know that writing simple automation scripts is a cakewalk for ChatGPT. However, simplicity doesn’t always cut it. When we’re performing web automation, we are often forced to face a complex automation testing scenario, be it around testing APIs or testing microservices. Can ChatGPT, a fine-tuned version of the GPT-3.5 series, make life easier if we hand over a complex automation test case? Let’s find out!

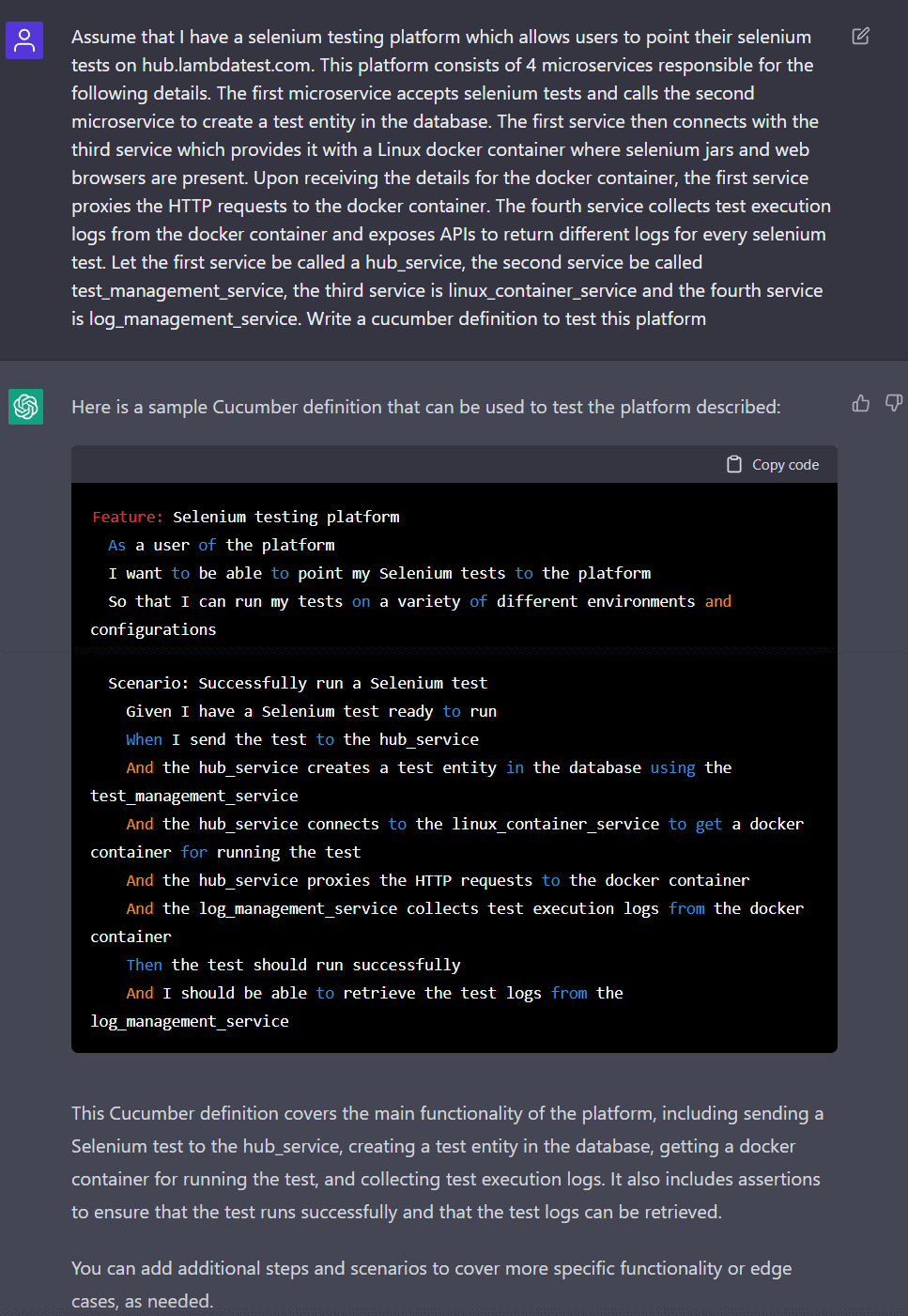

Q: Assume that I have a Selenium testing platform that allows users to point their Selenium tests on hub.lambdatest.com. This platform consists of 4 microservices responsible for the following details.

- The first microservice accepts Selenium tests and calls the second microservice to create a test entity in the database.

- The first service then connects with the third service, which provides it with a Linux docker container where Selenium jars and web browsers are present.

- Upon receiving the details for the docker container, the first service proxies the HTTP requests to the docker container.

- The fourth service collects test execution logs from the docker container and exposes APIs to return different logs for every Selenium test.

Let the first service be called a hub_service, the second service be called test_management_service, the third service is linux_container_service, and the fourth service is log_management_service. Write a Cucumber definition to test this platform.

Note

NoteLambdaTest’s Selenium cloud supports Cucumber framework scripts as well.That way you can scale Cucumber scripts execution. Run them in parallel on multiple configurations, simultaneously with LambdaTest. Start for free!

So we asked this test scenario as a question to ChatGPT and this is what we got:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

Feature: Selenium testing platform As a user of the platform I want to be able to point my Selenium tests to the platform So that I can run my tests on a variety of different environments and configurations Scenario: Successfully run a Selenium test Given I have a Selenium test ready to run When I send the test to the hub_service And the hub_service creates a test entity in the database using the test_management_service And the hub_service connects to the linux_container_service to get a docker container for running the test And the hub_service proxies the HTTP requests to the docker container And the log_management_service collects test execution logs from the docker container Then the test should run successfully And I should be able to retrieve the test logs from the log_management_service |

ChatGPT: This Cucumber definition covers the main functionality of the platform, including sending a Selenium test to the hub_service, creating a test entity in the database, getting a docker container for running the test, and collecting test execution logs. It also includes assertions to ensure that the test runs successfully and that the test logs can be retrieved.

As needed, you can add additional steps and scenarios to cover more specific functionality or edge cases.

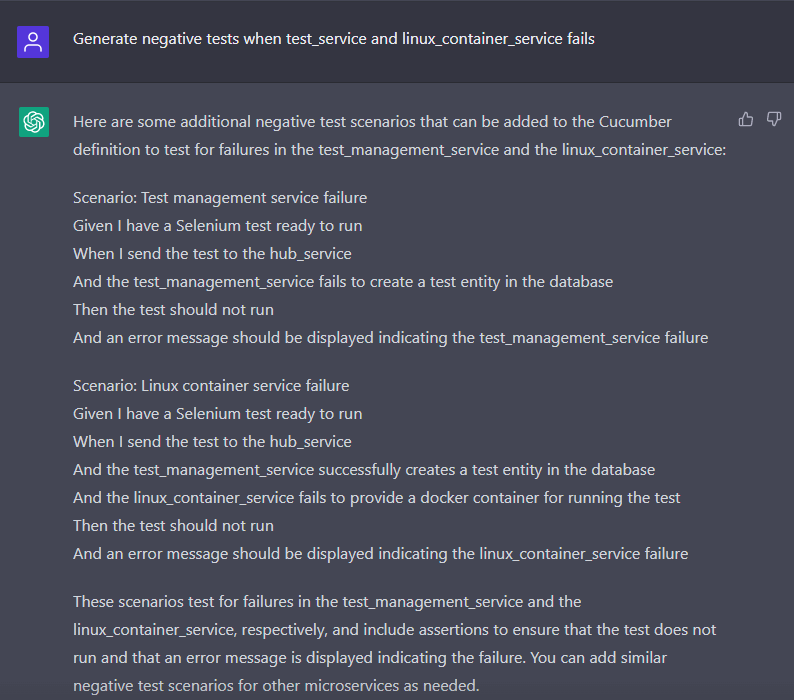

Adding Negative Test Cases with ChatGPT

Speaking of edge cases, it’s important to note that the Cucumber definition we received from ChatGPT for our complex test scenario doesn’t consider the negative test cases. Let’s ask ChatGPT, which is built on top of ChatGPT-3.5, to include negative test cases well.

Q. Generate negative tests when test_service and linux_container_service fail

ChatGPT: Here are some additional negative test scenarios that can be added to the Cucumber definition to test for failures in the test_management_service and the linux_container_service:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

Scenario: Test management service failure Given I have a Selenium test ready to run When I send the test to the hub_service And the test_management_service fails to create a test entity in the database Then the test should not run And an error message should be displayed indicating the test_management_service failure Scenario: Linux container service failure Given I have a Selenium test ready to run When I send the test to the hub_service And the test_management_service successfully creates a test entity in the database And the linux_container_service fails to provide a docker container for running the test Then the test should not run And an error message should be displayed indicating the linux_container_service failure |

These scenarios test for failures in the test_management_service and the linux_container_service, respectively, and include assertions to ensure that the test does not run and that an error message is displayed indicating the failure. You can add similar negative test scenarios for other microservices as needed.

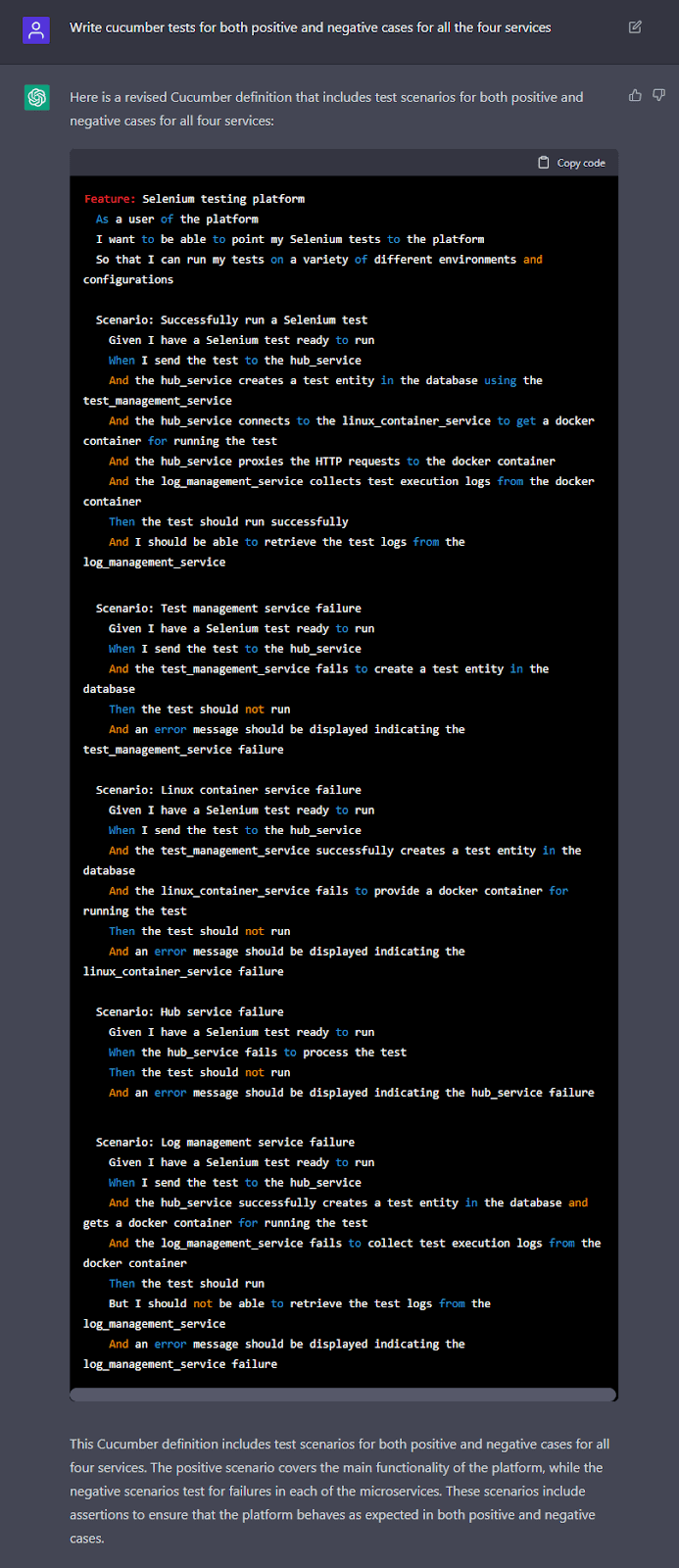

Let’s Mix Positive and Negative Test Cases in Cucumber

Courtesy of ChatGPT, we now have both positive and negative test cases for a complex automation test scenario. We can go one step further now and create revised Cucumber test definitions for positive and negative test cases. So we asked ChatGPT (built on top of ChatGPT-3.5) to do the same.

Q. Write Cucumber tests for both positive and negative cases for all four services

ChatGPT: Here is a revised Cucumber definition that includes test scenarios for both positive and negative cases for all four services:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 |

Feature: Selenium testing platform As a user of the platform I want to be able to point my Selenium tests to the platform So that I can run my tests on a variety of different environments and configurations Scenario: Successfully run a Selenium test Given I have a Selenium test ready to run When I send the test to the hub_service And the hub_service creates a test entity in the database using the test_management_service And the hub_service connects to the linux_container_service to get a docker container for running the test And the hub_service proxies the HTTP requests to the docker container And the log_management_service collects test execution logs from the docker container Then the test should run successfully And I should be able to retrieve the test logs from the log_management_service Scenario: Test management service failure Given I have a Selenium test ready to run When I send the test to the hub_service And the test_management_service fails to create a test entity in the database Then the test should not run And an error message should be displayed indicating the test_management_service failure Scenario: Linux container service failure Given I have a Selenium test ready to run When I send the test to the hub_service And the test_management_service successfully creates a test entity in the database And the linux_container_service fails to provide a docker container for running the test Then the test should not run And an error message should be displayed indicating the linux_container_service failure Scenario: Hub service failure Given I have a Selenium test ready to run When the hub_service fails to process the test Then the test should not run And an error message should be displayed indicating the hub_service failure Scenario: Log management service failure Given I have a Selenium test ready to run When I send the test to the hub_service And the hub_service successfully creates a test entity in the database and gets a docker container for running the test And the log_management_service fails to collect test execution logs from the docker container Then the test should run But I should not be able to retrieve the test logs from the log_management_service And an error message should be displayed indicating the log_management_service failure |

This Cucumber definition includes test scenarios for both positive and negative cases for all four services. The positive scenario covers the main functionality of the platform, while the negative scenarios test for failures in each of the microservices. These scenarios include assertions to ensure that the platform behaves as expected in positive and negative cases.

It’s time to Playwright!

Just like that, we got out Cucumber test files generated instantly for a highly complex test scenario. Let’s get an assist from ChatGPT on automating the Cucumber cases with the Playwright framework. And so we asked:

Q. Generate Playwright test implementation for the above Cucumber cases

ChatGPT: Here is a sample Playwright implementation of the Cucumber definition provided in the previous response:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 |

const { playwright } = require('playwright'); // define test variables const TEST_URL = 'https://www.example.com'; const TEST_LOGS_API = 'https://www.logs.com/api'; describe('Selenium testing platform', () => { let browser; let context; let page; beforeEach(async () => { // launch browser and create new page browser = await playwright.chromium.launch(); context = await browser.newContext(); page = await context.newPage(); }); afterEach(async () => { // close browser await browser.close(); }); it('should successfully run a Selenium test', async () => { // send test to hub_service await page.goto(TEST_URL); // create test entity in database using test_management_service // connect to linux_container_service to get docker container // proxy HTTP requests to docker container // collect test execution logs using log_management_service const logs = await page.evaluate(() => fetch(TEST_LOGS_API).then(res => res.json())); // assert that test ran successfully and logs are retrieved expect(logs).toBeDefined(); }); it('should not run test if test_management_service fails', async () => { // send test to hub_service await page.goto(TEST_URL); // simulate failure of test_management_service const errorMessage = await page.evaluate(() => { try { // create test entity in database using test_management_service throw new Error('test_management_service failure'); } catch (error) { return error.message; } }); // assert that test did not run and error message is displayed expect(errorMessage).toEqual('test_management_service failure'); }); it('should not run test if linux_container_service fails', async () => { // send test to hub_service await page.goto(TEST_URL); // create test entity in database using test_management_service // simulate failure of linux_container_service const errorMessage = await page.evaluate(() => { try { // connect to linux_container_service to get docker container throw new Error('linux_container_service failure'); } catch (error) { return error.message; } }); // assert that test did not run and error message is displayed expect(errorMessage).toEqual('linux_container_service failure'); }); it('should not run test if hub_service fails', async () => { // simulate failure of hub_service const errorMessage = await page.evaluate(() => { try { // send test to hub_service throw new Error('hub_service failure'); } catch (error) { return error.message; } }); // assert that test did not run and error message is displayed expect(errorMessage).toEqual('hub_service |

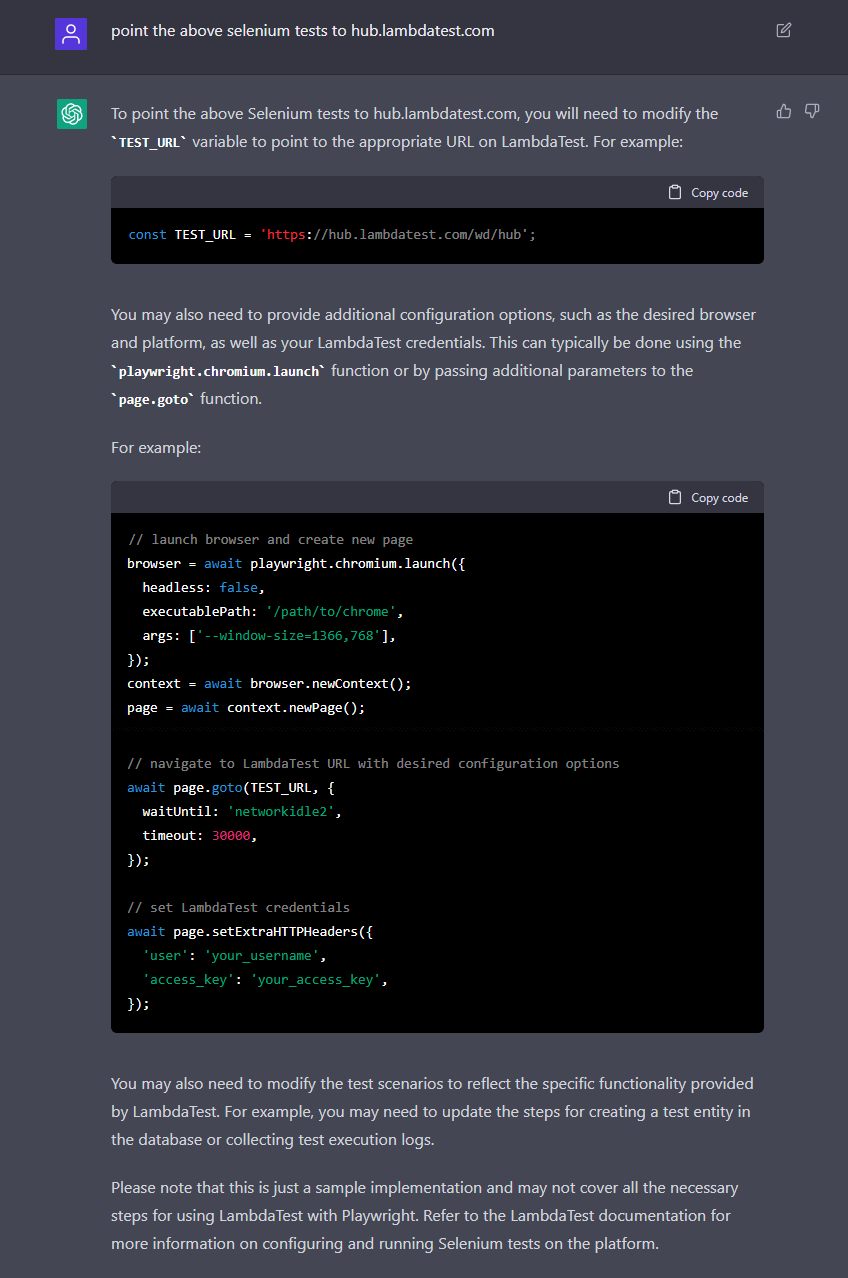

What if we want to Run The Test on LambdaTest Cloud?

Kudos to ChatGPT for handing out a ready-to-use Playwright test script based on Cucumber test cases. However, this Playwright script might be less useful to someone using a cloud-based test execution platform such as LambdaTest. Why? The script might need changes in declaring capabilities and authenticating to the LambdaTest cloud.

So it would be interesting to find out if ChatGPT is capable of helping us out with a ready-to-use Playwright script for the LambdaTest cloud. And my goodness, we were stunned.

Q. Point the above Selenium tests to hub.lambdatest.com

ChatGPT: To point the above Selenium tests to hub.lambdatest.com, you will need to modify the TEST_URL variable to point to the appropriate URL on LambdaTest. For example:

|

1 |

const TEST_URL = 'https://hub.lambdatest.com/wd/hub'; |

You may also need to provide additional configuration options, such as the desired browser and platform, as well as your LambdaTest credentials. This can typically be done using the playwright.chromium.launch function or by passing additional parameters to the page.goto function.

For example:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

// launch browser and create new page browser = await playwright.chromium.launch({ headless: false, executablePath: '/path/to/chrome', args: ['--window-size=1366,768'], }); context = await browser.newContext(); page = await context.newPage(); // navigate to LambdaTest URL with desired configuration options await page.goto(TEST_URL, { waitUntil: 'networkidle2', timeout: 30000, }); // set LambdaTest credentials await page.setExtraHTTPHeaders({ 'user': 'your_username', 'access_key': 'your_access_key', }); |

You may also need to modify the test scenarios to reflect the specific functionality provided by LambdaTest. For example, you may need to update the steps for creating a test entity in the database or collecting test execution logs.

This is a sample implementation and may only cover some of the necessary steps for LambdaTest for Playwright automation testing. Refer to the LambdaTest documentation for more information on configuring and running Playwright tests on the platform.

Can we do this for every Microservice?

So far, so good. However, wouldn’t it be great if we could get the above test script modified for all the four microservices we discussed in our complex test scenario, i.e., hub_service, test_management_service, linux_container_service, and log_management_service.

Q: Modify the above test cases to query directly all four microservices

ChatGPT: To modify the above test cases to query the four microservices directly, you will need to update the TEST_URL and TEST_LOGS_API variables to point to the appropriate URLs for each microservice.

For example:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 |

const HUB_SERVICE_URL = 'https://www.hub.com'; const TEST_MANAGEMENT_SERVICE_URL = 'https://www.test-management.com'; const LINUX_CONTAINER_SERVICE_URL = 'https://www.linux-container.com'; const LOG_MANAGEMENT_SERVICE_URL = 'https://www.log-management.com'; describe('Selenium testing platform', () => { let browser; let context; let page; beforeEach(async () => { // launch browser and create new page browser = await playwright.chromium.launch(); context = await browser.newContext(); page = await context.newPage(); }); afterEach(async () => { // close browser await browser.close(); }); it('should successfully run a Selenium test', async () => { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service await page.goto(TEST_MANAGEMENT_SERVICE_URL); // connect to linux_container_service to get docker container await page.goto(LINUX_CONTAINER_SERVICE_URL); // proxy HTTP requests to docker container // collect test execution logs using log_management_service const logs = await page.evaluate(() => fetch(LOG_MANAGEMENT_SERVICE_URL).then(res => res.json())); // assert that test ran successfully and logs are retrieved expect(logs).toBeDefined(); }); it('should not run test if test_management_service fails', async () => { // send test to hub_service await page.goto(HUB_SERVICE_URL); // simulate failure of test_management_service const errorMessage = await page.evaluate(() => { try { // create test entity in database using test_management_service throw new Error('test_management_service failure'); } catch (error) { return error.message; } }); // assert that test did not run and error message is displayed expect(errorMessage).toEqual('test_management_service failure'); }); // ... additional test scenarios ... }); |

This revised implementation queries the four microservices directly using the URLs specified in the “HUB_SERVICE_URL”, “TEST_MANAGEMENT_SERVICE_URL”, “LINUX_CONTAINER_SERVICE_URL”, and “LOG_MANAGEMENT_SERVICE_URL” variables. You can update these variables to the appropriate URLs for your specific implementation.

You may also need to update the test scenarios to reflect the specific functionality provided by each microservice. For example, you may need to add additional steps to create a test entity in the database or collect test execution logs.

Remember that this is just a sample implementation and may not cover everything necessary.

Verify Test Entity Creation in the Database

As pointed out by ChatGPT, we need to specify a URL for the test_management_service. Let’s point that to testservice.lambdatestinternal.com. Also, it would be interesting to see if we can lean on ChatGPT, built on top of ChatGPT-3.5 to verify if we’re getting a hit in the database by adding a touch of MySQL.

Q: Assume that test_management_service is pointed at testservice.lambdatestinternal.com. Add additional test cases to verify if the test entity is successfully created inside the database. Assume that test_management_service uses MySQL

ChatGPT: Here are some additional test cases that can be added to verify if the test entity is successfully created inside the database by the test_management_service:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

it('should create test entity in database', async () => { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service const response = await page.goto(TEST_MANAGEMENT_SERVICE_URL); // assert that test entity was created successfully expect(response.status()).toEqual(200); }); it('should verify test entity in database', async () => { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service await page.goto(TEST_MANAGEMENT_SERVICE_URL); // query database to verify test entity was created const result = await page.evaluate(() => { // connect to MySQL database and execute query to retrieve test entity // return result of query }); // assert that test entity was found in database expect(result).toBeDefined(); }); |

These test cases cover the creation and verification of the test entity in the database using the test_management_service. The first test case sends a request to the test_management_service and asserts that the response is successful, indicating that the test entity was created.

The second test case queries the database to verify that the test entity was created. You will need to modify the page.evaluate block to include the code for connecting to the MySQL database and executing the appropriate query.

Remember that this is just a sample implementation and may only cover some of the necessary steps for interacting with the test_management_service and the MySQL database. Refer to the test_management_service and MySQL documentation for more information on how to access and query the database.

Refining the MySQL Touch with ChatGPT

We even got a hit in the database for the right microservice. It’s now time to connect the dots with the database using MySQL. And so, we asked the ChatGPT to:

Q: Add logic to connect and query Mysql in the above test cases

ChatGPT: To add logic for connecting and querying MySQL in the above test cases, you can use the mysql npm package to establish a connection to the database and execute queries.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 |

const mysql = require('mysql'); describe('Selenium testing platform', () => { // ... existing test cases ... it('should verify test entity in database', async () => { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service await page.goto(TEST_MANAGEMENT_SERVICE_URL); // query database to verify test entity was created const result = await page.evaluate(() => { // create MySQL connection const connection = mysql.createConnection({ host: 'localhost', user: 'your_username', password: 'your_password', database: 'your_database', }); // execute query to retrieve test entity const query = 'SELECT * FROM test_entities WHERE id = ?'; connection.query(query, [1], (error, results) => { if (error) { throw error; } return results; }); }); // close MySQL connection connection.end(); // assert that test entity was found in database expect(result).toBeDefined(); }); }); |

This implementation uses the mysql.create

Let’s add Resiliency to the Code

Awesome! Now that we have the MySQL query, our immediate concern is ensuring the tests are resilient. In case a microservice becomes temporarily unavailable, the test should automatically retry. So let’s add resilience to it. ChatGPT, would you be so kind?

Q: Add resilience in the above test cases where test_management_service gets unavailable temporarily by adding retries.

ChatGPT: To add resilience to the above test cases where the test_management_service may become unavailable temporarily, you can add retries using a loop and a delay between each retry.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 |

const mysql = require('mysql'); describe('Selenium testing platform', () => { // ... existing test cases ... it('should verify test entity in database with retries', async () => { let result; let retries = 0; const maxRetries = 3; const retryDelay = 1000; // 1 second while (!result && retries < maxRetries) { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service await page.goto(TEST_MANAGEMENT_SERVICE_URL); // query database to verify test entity was created result = await page.evaluate(() => { // create MySQL connection const connection = mysql.createConnection({ host: 'localhost', user: 'your_username', password: 'your_password', database: 'your_database', }); // execute query to retrieve test entity const query = 'SELECT * FROM test_entities WHERE id = ?'; connection.query(query, [1], (error, results) => { if (error) { throw error; } return results; }); }); // close MySQL connection connection.end(); if (!result) { retries++; await new Promise(resolve => setTimeout(resolve, retryDelay)); } } // assert that test entity was found in database expect(result).toBeDefined(); }); }); |

This implementation includes a loop that retries the test up to maxRetries times, with a retryDelay of 1 second between each retry. The test will fail if the test entity is not found in the database after maximum retries. This can ensure that the test will still pass even if the test_management_service becomes unavailable temporarily.

Remember that this is just a sample implementation and may only cover some necessary steps for adding retries to the test. You may need to adjust the retry logic and delay based on your specific needs.

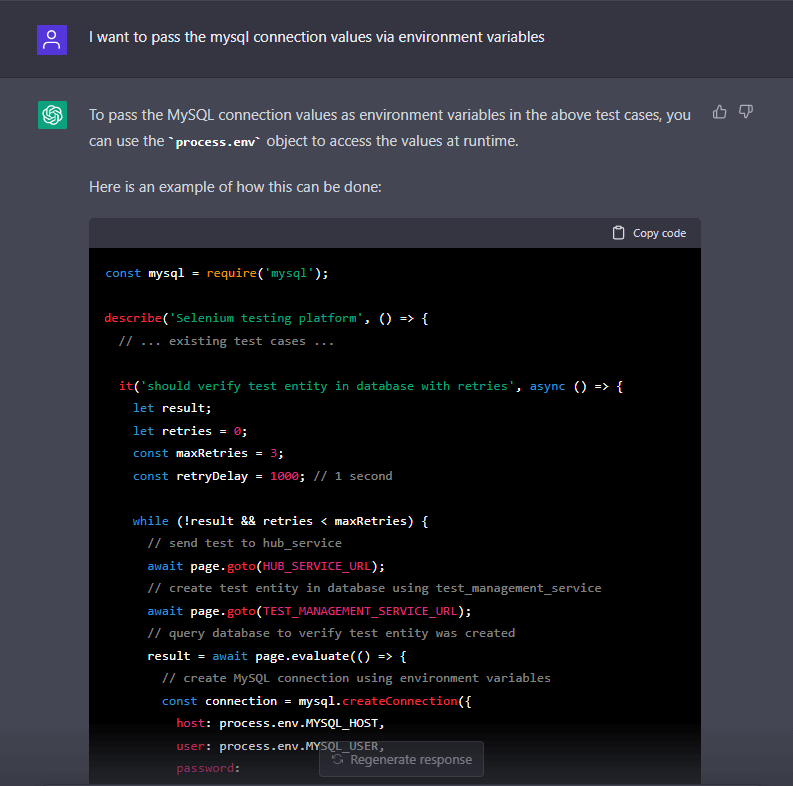

Make it more Secure through Environment Variables

Can ChatGPT (fine-tuned version of ChatGPT-3.5) help us add the MySQL connection values as the environment variables? Let’s see.

Q: I want to pass the mysql connection values via environment variables

ChatGPT: To pass the MySQL connection values as environment variables in the above test cases, you can use the process.env object to access the values at runtime.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

const mysql = require('mysql'); describe('Selenium testing platform', () => { // ... existing test cases ... it('should verify test entity in database with retries', async () => { let result; let retries = 0; const maxRetries = 3; const retryDelay = 1000; // 1 second while (!result && retries < maxRetries) { // send test to hub_service await page.goto(HUB_SERVICE_URL); // create test entity in database using test_management_service await page.goto(TEST_MANAGEMENT_SERVICE_URL); // query database to verify test entity was created result = await page.evaluate(() => { // create MySQL connection using environment variables const connection = mysql.createConnection({ host: process.env.MYSQL_HOST, user: process.env.MYSQL_USER, password: |

Schedule a GitHub Action and fetch Test Results with LambdaTest API

Interesting how ChatGPT is making life easier across functions. From writing an automation script for front-end testing to generating a database query and connecting the dots. Wouldn’t it be a fairy tale if we could get ChatGPT to help us with continuous testing by configuring a GitHub action for our test scenario, scheduling testing, and fetching test results from the LambdaTest platform using the LambdaTest APIs? So we asked the ChatGPT to:

Q: Write a GitHub action to run all the above tests every day at 9 AM and point them to LambdaTest. Additionally, write a step to fetch the test result from LambdaTest using their APIs

ChatGPT: Here is an example of a GitHub Action that runs the above tests every day at 9 AM and points them to LambdaTest:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

name: Selenium tests on: schedule: - cron: '0 9 * * *' jobs: test: runs-on: ubuntu-latest steps: - uses: actions/checkout@v2 - name: Set up Node.js uses: actions/setup-node@v2 with: node-version: 14.x - name: Install dependencies run: npm install - name: Run tests env: TEST_URL: https://hub.lambdatest.com/wd/hub USERNAME: ${{ secrets.LAMBDATEST_USERNAME }} ACCESS_KEY: ${{ secrets.LAMBDATEST_ACCESS_KEY }} run: npm test |

This action is triggered by a scheduled event every day at 9 AM, as specified on the block. It performs the following steps:

- Check out the repository using the actions/checkout action.

- Set up Node.js using the actions/setup-node action.

- Install dependencies using npm install.

- Run the tests using the npm test.

The TEST_URL, USERNAME, and ACCESS_KEY environment variables are passed to the action to configure the tests to run on LambdaTest. The USERNAME and ACCESS_KEY values are retrieved from the repository secrets, which can be configured in the repository settings.

To fetch the test results from LambdaTest using their APIs, you can use the http-client npm package to make HTTP requests to the LambdaTest API.

Here is an example of how to fetch the test results:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

const { HttpClient } = require('http-client'); async function getTestResults(sessionId) { const client = new HttpClient(); const username = process.env.LAMBDATEST_USERNAME; const accessKey = process.env.LAMBDATEST_ACCESS_KEY; const response = await client.get( `https://api.lambdatest.com/automation/api/v1/sessions/${sessionId}`, { auth: { username, password: accessKey, }, }, ); return response.body; } // example usage const testResults = await getTestResults('12345'); console.log(testResults); |

This function makes a GET request to the LambdaTest API to retrieve the test results for the specified session ID. The username and accessKey values are passed as HTTP basic authentication credentials. The response body is returned by the function and can be accessed to retrieve the test results.

You can include this function in your test script and call it after running the tests to retrieve the test results. You can also add error handling and retries to the function in case

A Package.json file with the above steps

Next step was to make this whole process portable. Make a package.json file that will download all the dependencies and create the environment to run the above-mentioned test scripts.

Q: Write a package.json file with different scripts to run the above tests and fetch the results from LambdaTest APIs.

ChatGPT: Here is an example of a package.json file with different scripts to run the above tests and fetch the results from LambdaTest APIs:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

{ "name": "selenium-tests", "version": "1.0.0", "scripts": { "test": "playwright run --chromium --spec=*.spec.js", "fetch-results": "node fetch-results.js", "run-tests": "npm run test && npm run fetch-results" }, "dependencies": { "playwright": "^2.0.0", "http-client": "^1.0.0" }, "devDependencies": { "jest": "^26.6.3" } } |

This package.json file includes the following scripts:

- test: Runs the tests using Playwright.

- fetch-results: Fetches the test results from LambdaTest using the fetch-results.js script.

- run-tests: Runs the tests and fetch the results in sequence.

To run the tests and fetch the results, you can use the run-tests script by running npm run run-tests. This will execute the test and fetch-results scripts in sequence.

You will need to create the fetch-results.js script to contain the logic for fetching the test results from LambdaTest, as shown in the previous example.

Remember that this is just a sample implementation and may not include.

Let’s put all of this on the GitHub Repo

We now have the beginning of a complete automation testing workflow. As the next steps, we want to take all of this to GitHub with a complete GitHub Actions YAML file to automate the workflow over GitHub.

Q: Create a GitHub repository with all the Cucumber files, test files, nodejs, and GitHub action code

ChatGPT: To create a GitHub repository with all the necessary files for running the Selenium tests, you can follow these steps:

- Create a new repository on GitHub.

- Clone the repository to your local machine.

- Create a directory for the Selenium tests, such as tests/.

- Create the Cucumber feature files and test files in the tests/ directory.

- Initialize the repository as a Node.js project by running npm init in the repository’s root directory.

- Install the necessary dependencies, such as Playwright and the http-client npm package, by running npm install –save-dev playwright and npm install –save http-client.

- Create the package.json file with the scripts for running the tests and fetching the results, as shown in the previous example.

- Create the GitHub Action workflow file in the .github/workflows/ directory, as shown in the previous example.

- Commit and push the changes to the repository.

Once the repository is set up, you can run the tests and fetch the results by pushing a commit or manually triggering the GitHub Action workflow. The tests will be run on LambdaTest, and the results will be fetched using the LambdaTest API.

Remember that this is just a sample implementation and may not include all the necessary steps for setting up a complete Selenium testing platform. You may need to adjust the steps based on your specific requirements.

A Bash Script to run everything locally on Mac

Now that we have a complete workflow setup, we want to run all of that locally on our Mac machine. We needed a Bash script to clone the repo and automate all the above steps. Another challenge here we observed in the ChatGPT is that sometimes it does not create the whole script and misses out on crucial steps. So we had to prompt again to create a complete Bash script.

Q: Write a Bash script to automate the above steps on my Mac

ChatGPT: To automate the steps for creating a GitHub repository with Selenium tests on a Mac, you can write a bash script that performs the following actions:

- Create a new repository on GitHub.

- Clone the repository to the local machine.

- Create a directory for the Selenium tests, such as tests/.

- Create the Cucumber feature files and test files in the tests/ directory.

- Initialize the repository as a Node.js project by running npm init in the repository’s root directory.

- Install the necessary dependencies, such as Playwright and the http-client npm package, by running npm install –save-dev playwright and npm install –save http-client.

- Create the package.json file with the scripts for running the tests and fetching the results, as shown in the previous example.

- Create the GitHub Action workflow file in the .github/workflows/ directory, as shown in the previous example.

- Commit and push the changes to the repository.

Here is an example of a Bash script that performs these actions:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

# create new repository on GitHub curl -u "USERNAME" https://api.github.com/user/repos -d '{"name":"selenium-tests"}' # clone repository git clone https://github.com/USERNAME/selenium-tests.git # navigate to repository directory cd selenium-tests # create tests directory mkdir tests # create cucumber feature files and test files in tests directory touch tests/feature1.feature tests/feature1.spec.js touch tests/feature2.feature tests/feature2 |

Q: Complete the above Bash file and do not generate the explanation

ChatGPT: Here is a completed bash script that automates the steps for creating a GitHub repository with Selenium tests on a Mac:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 |

# create new repository on GitHub curl -u "USERNAME" https://api.github.com/user/repos -d '{"name":"selenium-tests"}' # clone repository git clone https://github.com/USERNAME/selenium-tests.git # navigate to repository directory cd selenium-tests # create tests directory mkdir tests # create cucumber feature files and test files in tests directory touch tests/feature1.feature tests/feature1.spec.js touch tests/feature2.feature tests/feature2.spec.js # initialize repository as Node.js project npm init # install dependencies npm install --save-dev playwright npm install --save http-client # create package.json file with scripts for running tests and fetching results cat > package.json <<EOF { "name": "selenium-tests", "version": "1.0.0", "scripts": { "test": "playwright run --chromium --spec=*.spec.js", "fetch-results": "node fetch-results.js", "run-tests": "npm run test && npm run fetch-results" }, "dependencies": { "playwright": "^2.0.0", "http-client": "^1.0.0" }, "devDependencies": { "jest": "^26.6.3" } } EOF # create GitHub Action workflow file mkdir -p .github/workflows touch .github/workflows/selenium-tests.yml cat > .github/workflows |

Bash Script to GitHub Workflow

Now that we have a complete Bash script to run and set up all our pipeline, we want the same to run over GitHub as well. So we needed a GitHub workflow file to add to the repo to run the setup.

Q: Modify the above Bash script to generate the GitHub workflow file as well

ChatGPT: To modify the Bash script to generate the GitHub Action workflow file as well, you can include the contents of the workflow file in the script and use the echo command to write it to the file.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

# create new repository on GitHub curl -u "USERNAME" https://api.github.com/user/repos -d '{"name":"selenium-tests"}' # clone repository git clone https://github.com/USERNAME/selenium-tests.git # navigate to repository directory cd selenium-tests # create tests directory mkdir tests # create cucumber feature files and test files in tests directory touch tests/feature1.feature tests/feature1.spec.js touch tests/feature2.feature tests/feature2.spec.js # initialize repository as Node.js project npm init # install dependencies npm install --save-dev playwright npm install --save http-client # create package.json file with scripts for running tests and fetching results cat > package.json <<EOF { "name": "selenium-tests", "version": "1.0.0", "scripts": { "test": "playwright run --chromium --spec=*.spec.js", "fetch-results": "node fetch-results.js", "run-tests": "npm run test |

Now finally let’s modify the Cucumber Definitions

So as the final step, now we want to change the Cucumber definitions so that the Log Management microservice can output responses in both XML and JSON.

Q: Modify the Cucumber definitions to include cases where log_management_service can output a response in XML as well as JSON

To modify the Cucumber definitions to include cases where the log_management_service can output the response in XML as well as JSON, you can use the Content-Type HTTP header to specify the desired format.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

Feature: Selenium testing platform As a user of the platform I want to be able to retrieve test execution logs in different formats So that I can use the logs in different applications Scenario: Retrieve test execution logs in JSON format Given I have sent a selenium test to the hub_service And the test has been executed on a Linux docker container When I request test execution logs from the log_management_service in JSON format Then I should receive a valid JSON response with the test execution logs Scenario: Retrieve test execution logs in XML format Given I have sent a selenium test to the hub_service And the test has been executed on a Linux docker container When I request test execution logs from the log_management_service in XML format Then I should receive a valid XML response with the test execution logs |

To implement the request to the log_management_service; you can use a tool such as a request npm package to send an HTTP request with the Content-Type header set to application/json or application/xml, depending on the desired format.

Here is an example of how this can be done:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

const request = require('request'); describe('Selenium testing platform', () => { // ... existing test cases ... it('should retrieve test execution logs in JSON format', async () => { const response = await new Promise((resolve, reject) => { request( { method: 'GET', uri: LOG_MANAGEMENT_SERVICE_URL, headers: { 'Content-Type': 'application/json', }, }, (error, response, body) => { if (error) { reject(error); } else { resolve(response); } }, ); }); expect(response.headers['content-type']).toEqual('application/json'); expect(response.status |

What we learned when we created Automation Tests with ChatGPT

The whole experience was fun. We had goosebumps every time the platform exceeded our expectations. But here’s a realistic learning from all of the experimentation above. Both good and bad.

Pros of ChatGPT for Test Automation

- Adding Resiliency

One of the things that testers miss out on or consider as overburden is adding resiliency to the automation code. It is usually deprioritized as priority2-priority3 item. However, as we saw in our above example, we can easily create code to add resiliency to the automation script

- Ensuring Security

Adding security-oriented best practices should be prioritized from day 1. However, if you have unsecured legacy code or a shortage of time to find security vulnerabilities in the code, ChatGPT – a chatbot built on ChatGPT-3.5 can help out. In addition, we saw in the above examples that some of the code pieces that ChatGPT created were secure by default. For example, leveraged the GitHub Secrets feature. But sometimes, you may have to prompt the platform to create secure code. For example, we had to ask ChatGPT to use environment variables deliberately.

- Enabling Testers to overcome the learning curve

ChatGPT is an excellent tool for overcoming limitations around knowledge of a particular technology. For example, if you are a Selenium expert but are not well-versed in GitHub pipelines, you can use ChatGPT to at least get started and create starter codes to help you create GitHub workflows. We demonstrated the same in the above examples as well.

However, a word of caution is that ChatGPT is not perfect or foolproof. It’s just another tool to make your life easier, but if you want to truly succeed, you cannot be completely dependent on ChatGPT. You would have to do a deep dive into the technology.

- Accelerating debugging of code

When it comes to debugging, ChatGPT is a useful addition to any software developer’s toolkit. There are examples you can find on the Internet where people have copy-pasted their code in ChatGPT and got back the exact reason for failure as an output response. Again this is not 100% foolproof, and ChatGPT may miss out on obvious issues, but still, it can help you get started or give you a new perspective while debugging the code.

Cons of ChatGPT for Test Automation

While ChatGPT, built on ChatGPT-3.5 does have many advantages and uses, there are a few disadvantages to be aware of.

- It is based on statistical patterns and does not understand the underlying meaning

ChatGPT is built on top of GPT-3.5, which is an autoregressive language model. However, one of the biggest challenges in this approach is that it is very heavily dependent on statistical patterns.

This learning model predicts using statistical models on what should be the next words based on what words have been used prior before. However, it does not have an underlying understanding of the meaning of those words.

This means that it cannot be used as effectively in situations where the user’s questions or statements require an understanding of a context that has not been explained before.

While these may seem minor limitations, it’s a big thing if you depend on ChatGPT for testing. For example, the accuracy of ChatGPT will drastically decrease if you have to create test cases that require a prior deep understanding of the System-under-test. This is why kaneAI ChatGPT for testers should be used alongside other helpful resources to get more reliable and well-rounded results.

- Learning gaps

The underlying technology in ChatGPT, the GPT-3.5 language model, is a deep learning language model that has been trained on large data sets of human-generated content. Here we are assuming that it has also learned Code as text; therefore, it has been able to create such accurate codes.

That means it cannot accurately respond to things it has not learned before or may give wrong information if its learning has not been updated.

For example, if its last learning phase was on a framework that has since deprecated half of its methods, then the code it will create will use those deprecated methods. So the user would have to ensure that the final code they are using is up to date.

- Incomplete code

Another challenge of creating code through ChatGPT is that you have to deal with partially written code. This is also evident in our examples above, where we had to deliberately ask for complete code for bash automation.

So if you are dependent on ChatGPT-based code, you would first have to understand the incomplete code, finish it, or modify it to suit your needs. And as you can imagine, this is often a challenging thing to do as there are so many things that could go wrong. Even if you manage to get what you want, the final product will likely not be as good as if you were to write the code from scratch. But on the flip side, sometimes extending the code or debugging the code may be easier than creating repetitive code from scratch.

- Assumptions

ChatGPT is dependent on assumptions. Software testers are trained to identify these hidden factors that could potentially cause an app to fail, and when they do so, they can build into their test cases ways to check for these issues. But what happens when your testers aren’t given enough time to test all their assumptions? What if the information needed to validate an assumption isn’t available? When other teams are involved in building the product, like QA or Development, this can be difficult to control.

This same problem is there with ChatGPT as well. The platform starts with many assumptions about the use case you inputted. Most of the time, these assumptions are evident, and it’s easy to work around them, but often these assumptions lead to very inaccurate code that would not help make your life easier.

ChatGPT is still in its early stages, and constant updates are being made to add features or fix bugs. In addition, it’s a constantly learning model, so as more and more people use it and find out issues in the platform, the better and better it becomes. Its accuracy will continue to increase, and its learning gaps will continue to fill up.

This means users must stay on top of these changes to continue using ChatGPT efficiently.

Future of testing with AI: Will it replace testing teams?

ChatGPT, a chatbot built on top of ChatGPT-3.5 and similar AI technologies have tremendous potential in the field of testing and automation testing. They can make the life of a tester much much easier and have the potential to accelerate their efforts significantly. However, saying that they will replace testing teams is still not possible.

Also, as stated at the start of the post, all the code generated could be better. When you try to run it, they will throw errors. But even with that said, they are good starting points that can be polished and taken further for better implementation.

This tool, if used correctly, will enable the teams to get started with testing tasks much earlier and faster. Proper tooling created with this technology in the background will empower testers to not worry much about automation but focus on test cases themselves. Check out our hub on test automation metrics to examine various metrics for measuring success, the strategies for metric implementation, and its challenges involed.

Also, read about the distinctions between Manual Testing v/s Automation Testing in this Comprehensive Guide.

Author