Predictive Analytics in Software Testing: Enhancing Quality and Efficiency

Devansh Bhardwaj

Posted On: November 29, 2024

71599 Views

13 Min Read

Traditional software testing typically emphasizes technical, business, and functional requirements. This approach addresses many scenarios but often misses a key factor: how real users interact with the application. With many options already available, users expect apps to be not just functional but intuitive and user-friendly as well. To deliver such applications, testing should go beyond traditional methods and focus on real-world user needs.

This is where predictive analytics comes in. By analyzing data from code repositories, bug reports, user feedback, and live environments, predictive analytics helps QA teams anticipate where defects and usability problems are likely to appear and focus their testing accordingly.

In this blog, let’s see how predictive analytics is transforming software testing!

TABLE OF CONTENTS

- What Is Predictive Analytics?

- Why Use Predictive Analytics in Software Testing?

- How Does Predictive Analytics Optimize and Improve Software Testing?

- Critical Components of Predictive Analytics in Software Testing

- Types of Predictive Analytics Models

- Use Cases and Examples of Predictive Analytics in Software Testing

- How Does LambdaTest Test Intelligence Help With Predictive Analytics?

- Frequently Asked Questions (FAQs)

What Is Predictive Analytics?

Predictive analytics is a branch of advanced analytics that uses current and historical data, together with statistical and machine learning techniques, to anticipate future outcomes and challenges. In software testing, it builds on the fact that every test run generates valuable data, such as results, logs, and defect records.

Predictive analytics works by examining this data to uncover statistical patterns. These patterns help organizations identify trends and predict likely future scenarios, so teams can anticipate challenges and take proactive steps based on data-driven insights.
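As a minimal illustration of mining test logs for statistical patterns, the Python sketch below turns raw result lines into a failure rate per test. The log format (`<build_id> <test_name> PASS|FAIL`), the function name `failure_rates`, and the sample lines are hypothetical assumptions, not a standard format.

```python
from collections import defaultdict


def failure_rates(log_lines):
    """Return {test_name: failure_rate} from log lines.

    Assumes a hypothetical line format: '<build_id> <test_name> PASS|FAIL'.
    """
    runs = defaultdict(int)
    failures = defaultdict(int)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3 or parts[2] not in ("PASS", "FAIL"):
            continue  # skip malformed or unrelated log lines
        _build_id, test_name, status = parts
        runs[test_name] += 1
        if status == "FAIL":
            failures[test_name] += 1
    return {name: failures[name] / runs[name] for name in runs}


sample_log = [
    "b101 test_login PASS",
    "b101 test_checkout FAIL",
    "b102 test_login PASS",
    "b102 test_checkout FAIL",
    "b103 test_login FAIL",
    "b103 test_checkout PASS",
    "INFO build b103 finished",
]

# Print tests from most to least failure-prone
for name, rate in sorted(failure_rates(sample_log).items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {rate:.2f}")
```

In this invented log, `test_checkout` fails in two of three builds, which makes it a natural candidate for closer investigation.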

Why Use Predictive Analytics in Software Testing?

As Big Data, artificial intelligence, and machine learning continue to evolve, predictive analytics has become increasingly important for testers and QA teams. Predictive analytics allows teams to anticipate potential issues and address them proactively, unlike traditional methods that typically uncover problems only after they occur.

Here is why predictive analytics in software testing is crucial for QA teams:

- Improved Customer Satisfaction: Predictive analytics uses customer data to uncover patterns in product usage. By identifying key usage flows, teams can design test cases that focus on essential functions. This approach aligns testing with real user needs, providing valuable insights into customer preferences and behaviors.

- Early Defect Detection: Identifying defects early enhances quality while reducing both time and costs. By analyzing data from past production failures, analytics can predict potential future issues.

- Faster Time-to-Market: Testing that focuses on real customer use rather than just business requirements improves efficiency and reduces costs. Prioritizing critical areas shortens test cycles, allowing faster releases of key features. Predicting defect-prone areas helps teams address issues quickly, speeding up delivery.

- Better Release Control: Monitoring timelines and using predictive models to anticipate delays helps maintain a smooth release process. Identifying issues early and addressing their root causes allows timely adjustments, keeping projects on track and releases reliable.
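The key-usage-flow idea behind improved customer satisfaction can be sketched by counting how often each navigation path occurs in usage data. The Python example below assumes sessions have already been extracted as ordered lists of screen names, a hypothetical input format; the most frequent flows are candidates for dedicated test cases.

```python
from collections import Counter


def top_flows(sessions, k=3):
    """Rank the k most frequent navigation flows across user sessions.

    Each session is a list of screen names (a hypothetical input format).
    """
    counts = Counter(tuple(session) for session in sessions)
    return counts.most_common(k)


sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "account"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product"],
]

for flow, count in top_flows(sessions, k=2):
    print(" -> ".join(flow), count)
```

Counting whole sessions is a simplification; counting shorter sub-paths (for example, pairs of consecutive screens) would also capture flows that appear inside longer sessions.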

How Does Predictive Analytics Optimize and Improve Software Testing?

Predictive analytics enhances software testing by making it more efficient and user-focused. Instead of testing every feature equally, it helps teams prioritize critical areas, saving time and resources. By analyzing data trends and user behavior, it identifies potential issues, guiding efforts toward what impacts a product’s success most.

Using techniques like machine learning and statistical analysis, predictive analytics can mine historical data to reveal where defects are likely to occur. This enables QA teams to streamline processes, focus on key tasks, and deliver reliable, user-friendly software. Rather than replacing traditional methods, it complements them, offering a data-driven approach to improving the testing process.

Critical Components of Predictive Analytics in Software Testing

Predictive analytics is rapidly changing the way software testing is approached, allowing teams to forecast potential risks and streamline their processes. For this approach to be effective, certain key elements must be in place:

- Comprehensive Data Collection: Predictive analytics in software testing relies heavily on accurate and diverse data. Gathering historical information, such as previous defects, test results, and usage patterns, is essential. Cleaning and organizing this data ensures reliability and helps reveal meaningful trends.

- Selection of Suitable Analytical Models: The success of predictive analytics depends on choosing the right methods and algorithms. Techniques like regression analysis, decision trees, or machine learning models can be tailored to specific testing needs. Evaluating their effectiveness with historical data ensures their predictive accuracy.

- Integration with Existing Testing Tools: Predictive systems require integration with automation testing tools. This integration allows real-time updates, helps identify areas of high risk, and prioritizes test cases accordingly. A seamless connection with testing tools ensures a smooth workflow and meaningful insights.

- Risk Assessment and Focused Testing: One of the main goals of predictive analytics is to assess risks in different parts of the software. This involves identifying modules or functionalities more likely to have defects. Teams can then focus their testing efforts on these areas, saving time and resources while improving coverage where it matters most.

- Ongoing Monitoring and Refinement: Predictive models must evolve to remain effective. Regular monitoring of their output and performance is crucial to ensure accuracy. Feedback from testing results can help refine these models, adapting them to new challenges or environments.

- Collaboration Across Teams: Predictive analytics benefits more than just the testing team. Development and operations teams can use these insights to align priorities and improve decision-making, fostering better teamwork and smoother problem-solving.

By incorporating predictive analytics into testing, organizations can reduce defects and improve testing efficiency. Focusing on critical areas helps deliver high-quality software faster, meeting deadlines and user expectations.
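To illustrate evaluating a model against historical data, the sketch below backtests a deliberately simple rule: flag a module as defect-prone when its code churn reaches a threshold. The threshold is chosen on older releases and then checked against the newest release using precision and recall. The `ModuleRecord` fields, the churn feature, and the sample data are hypothetical; a real team would substitute its own features and model.

```python
from dataclasses import dataclass


@dataclass
class ModuleRecord:
    release: int
    churn: int        # lines changed in this release (hypothetical feature)
    had_defect: bool  # whether a defect was later reported against the module


def precision_recall(predicted, actual):
    """Precision and recall for boolean predictions."""
    tp = sum(1 for p, a in zip(predicted, actual) if p and a)
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    fn = sum(1 for p, a in zip(predicted, actual) if a and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def predict(records, threshold):
    return [r.churn >= threshold for r in records]


def fit_threshold(records):
    """Pick the churn threshold that maximizes F1 on the given records."""
    actual = [r.had_defect for r in records]

    def f1(threshold):
        prec, rec = precision_recall(predict(records, threshold), actual)
        return 2 * prec * rec / (prec + rec) if prec + rec else 0.0

    return max(sorted({r.churn for r in records}), key=f1)


def backtest(records):
    """Train on all releases except the latest, then evaluate on the latest."""
    latest = max(r.release for r in records)
    train = [r for r in records if r.release < latest]
    test = [r for r in records if r.release == latest]
    threshold = fit_threshold(train)
    return threshold, precision_recall(predict(test, threshold), [r.had_defect for r in test])


history = [
    ModuleRecord(1, 10, False), ModuleRecord(1, 250, True),
    ModuleRecord(1, 40, False), ModuleRecord(1, 300, True),
    ModuleRecord(2, 120, True), ModuleRecord(2, 15, False),
    ModuleRecord(2, 90, False), ModuleRecord(2, 400, True),
    ModuleRecord(3, 200, True), ModuleRecord(3, 30, False),
    ModuleRecord(3, 130, False), ModuleRecord(3, 100, True),
]

threshold, (precision, recall) = backtest(history)
print(f"threshold={threshold}, precision={precision:.2f}, recall={recall:.2f}")
```

In this invented data, the rule fits the training releases perfectly but scores only 0.50 precision and recall on the held-out release, the kind of gap that ongoing monitoring and refinement are meant to surface.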

Types of Predictive Analytics Models

Predictive analytics models are designed to serve diverse purposes, with each type suited to specific data patterns, business goals, and testing scenarios. Below are some of the key types of predictive models used in software testing:

Classification Model

The classification model is a widely used tool in predictive analytics, designed to organize data into defined categories. It helps answer questions like “Is this feature prone to defects?” or “Which test cases should be prioritized?”

Key Techniques:

- Algorithms: Decision Trees, Random Forests, Naive Bayes, and Support Vector Machines (SVM).

- Applications: Useful for identifying high-risk test cases, predicting defects, and categorizing components based on quality or risk.

By analyzing input variables, such as test parameters, and linking them to outcomes, classification models provide actionable insights. This helps teams focus resources on the most critical areas, improving overall efficiency and quality.
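Naive Bayes is one of the algorithms listed above. As a hedged illustration, the pure-Python sketch below trains a Bernoulli Naive Bayes classifier on hypothetical per-component flags (`recently_changed`, `high_complexity`, `past_defects`) and labels a component as "risky" or "stable". The feature names and training data are invented for illustration; in practice a library such as scikit-learn would usually be used instead of a hand-written model.

```python
import math
from collections import Counter


def train_naive_bayes(samples, labels):
    """Fit a Bernoulli Naive Bayes model with Laplace (add-one) smoothing.

    samples: list of dicts mapping feature name -> bool
    labels:  list of class labels, one per sample
    """
    class_counts = Counter(labels)
    features = sorted({name for sample in samples for name in sample})
    priors = {cls: count / len(labels) for cls, count in class_counts.items()}
    likelihoods = {}
    for cls, count in class_counts.items():
        rows = [s for s, y in zip(samples, labels) if y == cls]
        # Smoothed estimate of P(feature is True | class)
        likelihoods[cls] = {
            name: (sum(1 for row in rows if row.get(name, False)) + 1) / (count + 2)
            for name in features
        }
    return priors, likelihoods


def classify(model, sample):
    """Return the class with the highest log-posterior for the sample."""
    priors, likelihoods = model
    best_cls, best_score = None, -math.inf
    for cls, prior in priors.items():
        score = math.log(prior)
        for name, p_true in likelihoods[cls].items():
            score += math.log(p_true if sample.get(name, False) else 1 - p_true)
        if score > best_score:
            best_cls, best_score = cls, score
    return best_cls


samples = [
    {"recently_changed": True, "high_complexity": True, "past_defects": True},
    {"recently_changed": True, "high_complexity": False, "past_defects": True},
    {"recently_changed": False, "high_complexity": True, "past_defects": True},
    {"recently_changed": False, "high_complexity": False, "past_defects": False},
    {"recently_changed": True, "high_complexity": False, "past_defects": False},
    {"recently_changed": False, "high_complexity": True, "past_defects": False},
]
labels = ["risky", "risky", "risky", "stable", "stable", "stable"]

model = train_naive_bayes(samples, labels)
print(classify(model, {"recently_changed": True, "high_complexity": True, "past_defects": True}))
```

Working in log-probabilities avoids numeric underflow when many features are multiplied together, and the add-one smoothing keeps an unseen feature value from forcing a class probability to zero.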

Clustering Model

Clustering models group data based on shared characteristics, making them ideal for uncovering patterns or relationships within datasets. Unlike classification models, clustering does not rely on predefined labels.

Key Techniques:

- Algorithms: K-Means, Hierarchical Clustering, and Density-Based Clustering.

- Applications: Segmenting defects by root causes, grouping similar test cases, and identifying patterns in user behavior for targeted testing.

By organizing data into meaningful clusters, these models enhance the testing process, making it more efficient and targeted.
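K-Means is the simplest of the algorithms listed. The pure-Python sketch below groups test cases by two hypothetical features, average duration in seconds and failure rate in percent. It seeds centroids with the first k points, a simplification of the k-means++ seeding that scikit-learn uses by default, and it skips feature scaling, which real data would usually need.

```python
import math


def kmeans(points, k, max_iterations=100):
    """Cluster 2-D points with Lloyd's k-means; returns (centroids, labels)."""
    # Simplified seeding: use the first k points as the initial centroids
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        new_centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # centroids stopped moving, so the algorithm has converged
        centroids = new_centroids
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels


# (average duration in seconds, failure rate in percent) per test case, hypothetical
cases = [(2, 1), (45, 30), (3, 2), (50, 35), (4, 0), (46, 29)]
centroids, labels = kmeans(cases, k=2)
print(centroids)
print(labels)
```

Here the two clusters separate fast, stable tests from slow, flaky ones; the second group is where a team might look first when investigating unreliable builds.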

Forecast Model

Forecasting models predict future trends or outcomes by analyzing historical data. These models are instrumental in planning and resource allocation within software testing.

Key Techniques:

- Time Series Analysis: Autoregressive Integrated Moving Average (ARIMA).

- Machine Learning Models: Random Forests and Support Vector Machines (SVM).

- Ensemble Methods: Bagging and Boosting.

- Applications: Defect prediction, estimating test effort, release planning, and resource management.

By leveraging forecasting models, organizations can proactively address challenges and optimize quality assurance processes.
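ARIMA and the ensemble methods above are usually run through a statistics or machine learning library. As a deliberately simpler stand-in that still shows the forecasting idea, the sketch below fits a least-squares linear trend to the number of defects found per sprint and projects it forward. The sprint counts are invented, and a straight line ignores the autocorrelation and seasonality that ARIMA-family models are designed to handle.

```python
def fit_linear_trend(values):
    """Fit values[t] ~ a + b*t by ordinary least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    # Sums of cross-deviations and squared deviations (the OLS slope is their ratio)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    b = sxy / sxx
    a = y_mean - b * t_mean
    return a, b


def forecast(values, steps_ahead=1):
    """Project the fitted trend steps_ahead periods past the last observation."""
    a, b = fit_linear_trend(values)
    return a + b * (len(values) - 1 + steps_ahead)


defects_per_sprint = [12, 15, 17, 20, 22]  # hypothetical history
print(round(forecast(defects_per_sprint), 1))
```

A projection like this can feed test-effort estimates for the next sprint, with the caveat that it only extrapolates the recent trend.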

Outlier Detection Model

Outlier models are used to detect anomalies—data points that differ significantly from the expected pattern. These anomalies often signal potential defects, system issues, or unusual behaviors that need closer examination.

Key Techniques:

- Statistical Methods: Z-Score, Modified Z-Score, and Boxplot.

- Machine Learning Algorithms: Isolation Forest and Local Outlier Factor (LOF).

- Applications: Detecting rare defects, improving data quality, and enhancing model accuracy.

By pinpointing anomalies early, these models help teams catch critical issues before they escalate, supporting robust software performance.
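The modified Z-score listed above is easy to compute with the standard library. It replaces the mean and standard deviation with the median and the median absolute deviation (MAD), so an extreme value does not inflate the spread estimate it is compared against. The sketch below flags test durations whose modified Z-score exceeds 3.5, the cutoff suggested by Iglewicz and Hoaglin; the duration data is hypothetical.

```python
import statistics


def modified_z_scores(values):
    """Modified Z-score: 0.6745 * (x - median) / MAD."""
    median = statistics.median(values)
    mad = statistics.median([abs(x - median) for x in values])
    if mad == 0:
        raise ValueError("MAD is zero, so the modified Z-score is undefined")
    return [0.6745 * (x - median) / mad for x in values]


def find_outliers(values, threshold=3.5):
    """Return values whose absolute modified Z-score exceeds the threshold."""
    return [x for x, z in zip(values, modified_z_scores(values)) if abs(z) > threshold]


durations_s = [12.1, 11.8, 12.4, 12.0, 11.9, 12.2, 30.5]  # hypothetical test run times
print(find_outliers(durations_s))
```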

Time Series Model

Time series models analyze sequential data collected over time to identify patterns and trends. They are particularly useful in predicting temporal events within software testing.

Key Techniques:

- Algorithms: Autoregressive Integrated Moving Average (ARIMA) and exponential smoothing.

- Applications: Predicting test execution times, resource utilization, and defect discovery rates.

- Benefits: Enhanced scheduling, resource optimization, and better planning for test cycles.

These models enable testers to anticipate future outcomes and align testing processes accordingly, improving efficiency and accuracy.
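As a small example of a time series technique, simple exponential smoothing produces a one-step-ahead forecast by weighting recent observations more heavily than older ones. The sketch below predicts the next test-suite execution time; the `alpha` value and the timing history are hypothetical. Simple smoothing assumes no trend, so a steadily rising series like this one will be under-forecast; Holt's method adds a trend term for that case.

```python
def smoothed_forecast(series, alpha=0.5):
    """Simple exponential smoothing; returns the forecast for the next period."""
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for value in series[1:]:
        # New level blends the latest observation with the previous level
        level = alpha * value + (1 - alpha) * level
    return level


suite_minutes = [40, 42, 41, 45, 47]  # hypothetical execution times per nightly run
print(smoothed_forecast(suite_minutes, alpha=0.5))
```

A larger `alpha` reacts faster to recent changes; a smaller one gives a steadier estimate that is less sensitive to a single slow run.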

Each predictive analytics model offers unique strengths, allowing teams to address specific challenges in software testing. By selecting and combining the right models, organizations can gain actionable insights, reduce risks, and achieve higher-quality outcomes in their testing efforts.

Use Cases and Examples of Predictive Analytics in Software Testing

Here are some of the use cases and examples of predictive analytics in software testing:

- Release Quality Prediction: In software development, ensuring the quality of each release is essential for maintaining user trust and protecting a company’s reputation. Predictive analytics, driven by AI, provides an innovative way to evaluate release quality. By analyzing historical data from previous releases, such as defect rates, issue severity, and customer feedback, AI can estimate the likelihood of problems in future releases.

- Test Case Prioritization: In an environment where resources are often constrained, prioritizing testing efforts becomes essential. Predictive analytics can play a crucial role in identifying which test cases are most likely to uncover defects or validate critical functionalities. By analyzing factors such as historical defect trends, recent code changes, and failure probabilities, AI models can rank test cases intelligently.

- Performance Bottleneck Detection: System performance under load is critical to an application’s efficiency. Performance testing analyzes the load generated by users and evaluates the system’s response time under various conditions. Predictive analytics extends this by using historical performance data to anticipate future demand, making it possible to predict how the application will perform under different circumstances before they occur.

For release quality prediction, a development team preparing the next iteration of their software might use AI tools to compare current release metrics with data from past projects. If the predictive model highlights a significant chance of critical defects based on recurring patterns, project managers can take proactive measures.

This might involve reallocating testers, extending the quality assurance phase, or, if the situation calls for it, delaying the launch to fix problems before they reach users. Such a proactive strategy raises the quality of the end product and avoids difficulties that would otherwise surface after release, giving users a smoother, more efficient experience.
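One simple way to compare current release metrics with past releases is nearest-neighbor regression: find the past releases whose metrics look most similar and average their outcomes. The sketch below applies k-nearest neighbors to min-max-scaled features; the metric names, the `PAST_RELEASES` data, and the choice of k are hypothetical, and this is only one of many models a team might use.

```python
import math

# Hypothetical history: (churn in KLOC, open bugs at code freeze, test pass rate %)
# paired with the number of critical defects reported after that release.
PAST_RELEASES = [
    ((5.0, 12, 97.0), 2),
    ((18.0, 40, 88.0), 9),
    ((7.0, 15, 96.0), 3),
    ((20.0, 35, 85.0), 11),
    ((6.0, 10, 98.0), 1),
]


def make_scaler(rows):
    """Return a function that min-max scales each feature to [0, 1]."""
    lows = [min(col) for col in zip(*rows)]
    highs = [max(col) for col in zip(*rows)]

    def scale(row):
        return tuple(
            (v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)
        )

    return scale


def predict_critical_defects(past, current, k=2):
    """Average the outcomes of the k past releases closest to `current`."""
    scale = make_scaler([metrics for metrics, _ in past] + [current])
    target = scale(current)
    nearest = sorted(past, key=lambda rec: math.dist(scale(rec[0]), target))[:k]
    return sum(outcome for _, outcome in nearest) / k


print(predict_critical_defects(PAST_RELEASES, (17.0, 38, 87.0)))
```

Scaling matters here: without it, the open-bug count (tens) would dominate the distance over the churn figure (single digits to twenties).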

For test case prioritization, a development team could use AI before a critical release to rank test cases by their likelihood of failure. The model might highlight specific tests associated with recent high-risk code changes or modules with a history of defects.

By focusing on these areas, the QA team can detect potential issues earlier in the testing process, enhancing both efficiency and defect detection. This data-driven prioritization helps deliver high-quality software within tight timelines while maximizing the impact of available testing resources.
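A transparent starting point for this kind of prioritization is a weighted risk score that combines a test's recent failure rate, whether it exercises recently changed code, and the defect history of the module it covers. The weights, the defect cap, and the `CaseStats` fields below are hypothetical assumptions rather than tuned values; a trained model could replace the hand-set weights once enough history exists.

```python
from dataclasses import dataclass


@dataclass
class CaseStats:
    name: str
    failure_rate: float         # share of recent runs that failed, 0.0 to 1.0
    touches_changed_code: bool  # exercises code changed since the last release
    module_defects: int         # historical defect count for the module under test


def risk_score(case, w_fail=0.5, w_change=0.3, w_defects=0.2, defect_cap=10):
    """Weighted risk score in [0, 1]; the weights are illustrative, not tuned."""
    defect_term = min(case.module_defects, defect_cap) / defect_cap
    change_term = 1.0 if case.touches_changed_code else 0.0
    return w_fail * case.failure_rate + w_change * change_term + w_defects * defect_term


def prioritize(cases):
    """Order test cases from highest to lowest risk score."""
    return sorted(cases, key=risk_score, reverse=True)


suite = [
    CaseStats("test_checkout_total", 0.20, True, 6),
    CaseStats("test_login", 0.05, False, 1),
    CaseStats("test_search_filters", 0.40, False, 3),
    CaseStats("test_profile_update", 0.00, True, 0),
]

for case in prioritize(suite):
    print(f"{case.name}: {risk_score(case):.3f}")
```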

For performance bottleneck detection, an e-commerce platform preparing for a seasonal sale event might use AI to analyze usage patterns and performance metrics from previous sales periods. The model could pinpoint specific application components likely to experience slowdowns or failures under heavy loads.

This insight allows QA teams to focus on optimizing those areas, preventing performance issues before they affect end users. Such a proactive approach helps keep the application responsive during critical periods, safeguarding customer satisfaction and business outcomes.

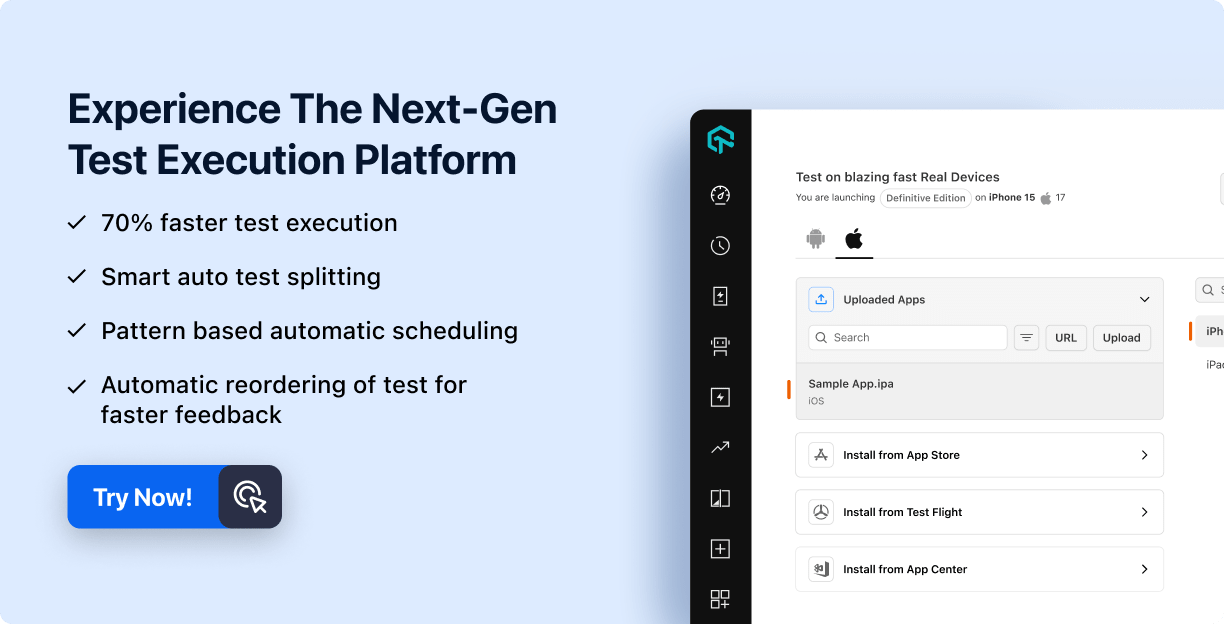

How Does LambdaTest Test Intelligence Help With Predictive Analytics?

The LambdaTest Test Intelligence platform gives QA teams AI-native capabilities to uncover, analyze, and resolve testing challenges through intelligent insights.

Key Features:

- Actionable Test Insights: With a system designed to learn and evolve, LambdaTest provides fine-tuned recommendations for every test execution, helping QA teams optimize efforts and streamline debugging.

- AI-Native Root Cause Analysis (RCA): Categorize errors automatically and access tailored recommendations for fast and effective issue resolution. Reduce the guesswork and spend more time improving software quality.

- Flaky Test Detection: Quickly identify inconsistent test results using AI algorithms, ensuring your test suite remains reliable. Customize detection settings to suit your test environment and gain actionable insights with flaky test analytics.

- Error Trends Forecasting: Monitor log trends with advanced dashboards to predict potential failures, enabling teams to preemptively address recurring issues.

With the rise of AI in testing, it’s crucial to stay competitive by upskilling or polishing your skill sets. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

Note: Check high-impact quality issues with AI-native Insights. Try LambdaTest Today!

Summing Up!

Predictive analytics in software testing shifts teams from reactive fixes to proactive strategies. By leveraging data, AI-native insights, and tools like LambdaTest AI-native Insights, organizations can optimize testing, enhance user satisfaction, and deliver high-quality software faster.

Embrace predictive analytics to streamline your software testing, minimize defects, and create user-focused products more efficiently.

Frequently Asked Questions (FAQs)

What is predictive analytics?

Predictive analytics is the process of using data to forecast future outcomes. The process uses data analysis, machine learning, artificial intelligence, and statistical models to find patterns that might predict future behavior.

How is predictive analytics different from machine learning?

While predictive analytics and machine learning are often thought to be the same, they are distinct yet complementary technologies. Predictive analytics encompasses a broader approach, combining machine learning, data mining, predictive modeling, and statistical methods to forecast potential outcomes and derive insights from large datasets.

How is predictive analytics beneficial for any business?

Predictive analytics offers valuable benefits across various industries by providing intelligent insights into future trends. For businesses, it can help identify which products or services are likely to resonate with customers and predict which features of an application may drive higher revenue. In the financial sector, this technology plays a crucial role in mitigating risks, enabling organizations to make informed decisions and optimize their strategies.
