A Hybrid Approach to Performance Testing [Testμ 2023]

LambdaTest

Posted On: August 23, 2023

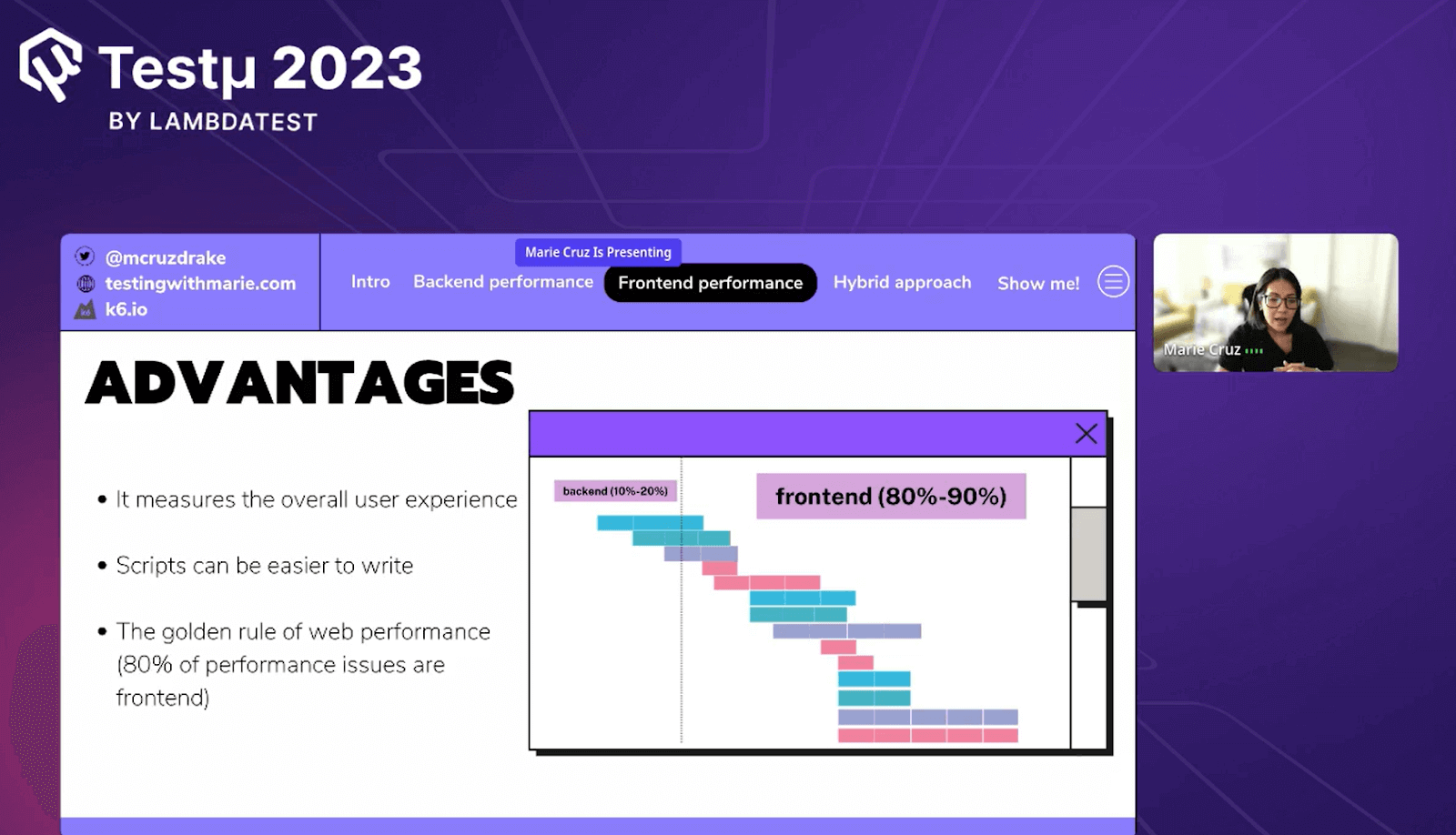

The fundamental principle of web performance emphasizes that, in most web pages, a minor portion, around 10-20%, of the time it takes for a user to receive a response is consumed by transmitting the HTML document from the web server to the browser. In contrast, a majority, approximately 80-90%, of the user’s response time is utilized within the front end of the website.

When it comes to performance testing, a common practice is to run load tests against our back-end servers. It is tempting to conclude that our application performs well once we have optimized our servers and databases.

However, the fact that our servers have responded to specific requests doesn't ensure that these responses are promptly visible to users on the web page. Many variables affect front-end performance, which is why it needs to be tested as well to ensure an optimal user experience.

In this insightful session of Testμ 2023, Marie Cruz, Developer Advocate at k6, talks about front-end and back-end performance testing and the role of the hybrid approach to performance testing in ensuring your website’s optimal performance.

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings at your convenience by visiting the LambdaTest YouTube Channel. If you are preparing for an interview, you can learn more through Performance Testing interview questions.

What is the Hybrid Approach?

Marie starts the session by explaining the hybrid approach in performance testing. The hybrid way of doing performance testing lets testers find performance problems in a software system right from the beginning.

They can make sure that the software applications meet the performance goals they’re supposed to. It’s also really good at lowering the chance of having performance problems when the software is actually being used by people. This happens because the testing creates situations that are like what happens in the real world but in a safe and controlled environment.

Back-End Performance: Uncovering the Foundation

Marie then goes on to explain the back-end performance.

When we talk about back-end performance testing, we’re targeting the core infrastructure of an application. This could include microservices, databases, and other hidden components. It’s not just about response times; back-end performance sheds light on scalability, availability, elasticity, reliability, and resiliency.

Marie Cruz touches upon the various factors that contribute to the back-end performance of a website or application.

Join Now: https://t.co/Mm1TtIWN3m pic.twitter.com/uMUf4SUqwX

— LambdaTest (@lambdatesting) August 22, 2023

Let’s dive deeper into a few of these attributes:

- Scalability: Scalability measures an application’s ability to adjust hardware or software resources to manage usage fluctuations. It ensures your system can handle increased or decreased loads.

- Elasticity: Elasticity reflects a system’s automatic scaling to accommodate user demands. It ensures your application can dynamically adapt to varying traffic.

- Resiliency: Resiliency involves a system’s capacity to remain functional despite failures. It’s about your application’s ability to react and recover from adverse events.

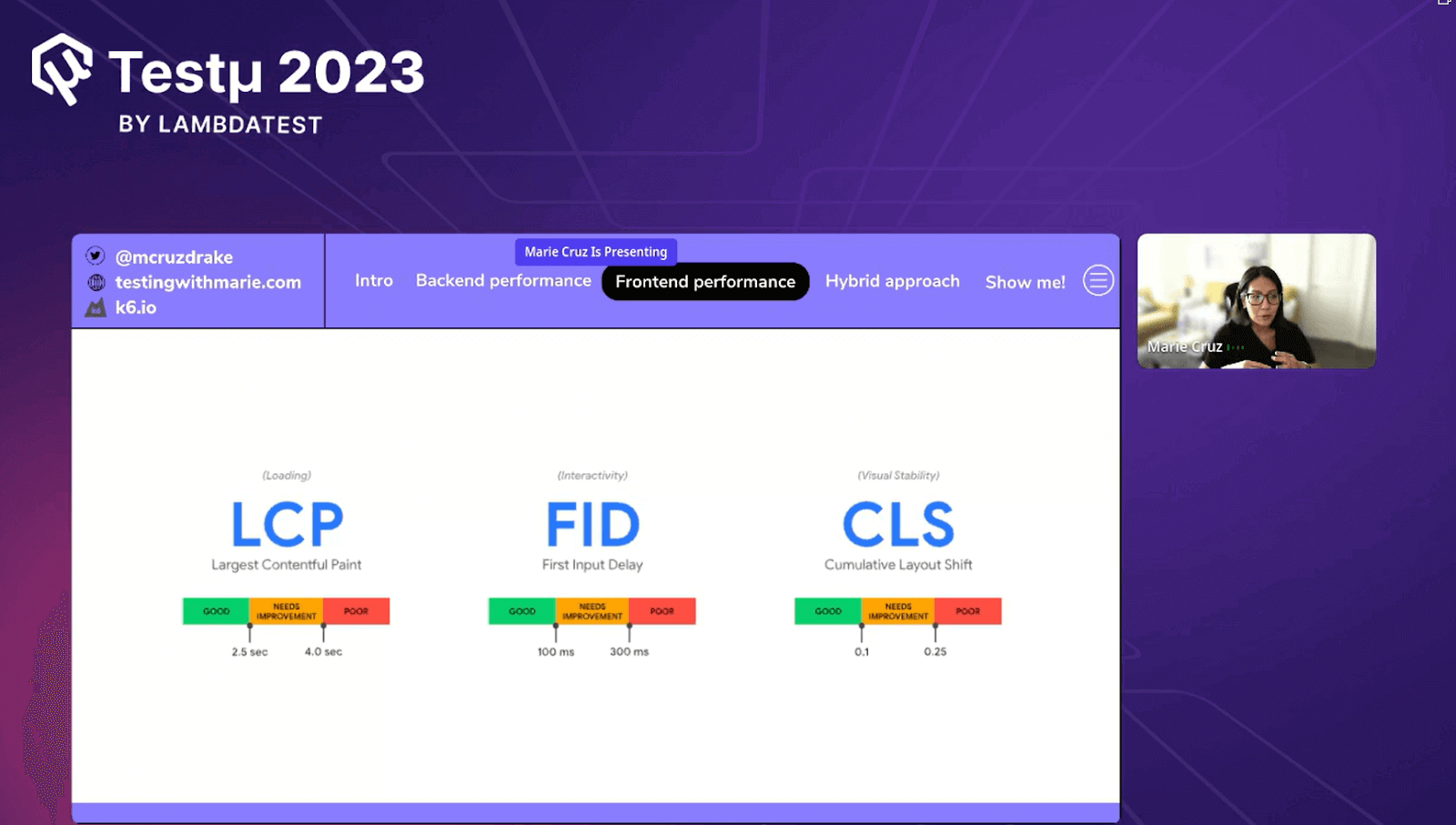

Front-End Performance: Bridging to User Experience

Marie then talks about front-end performance, which focuses on user experience at the browser level. It measures loading times, interactivity, and visual stability. Core Web Vitals such as Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS) define front-end performance metrics.

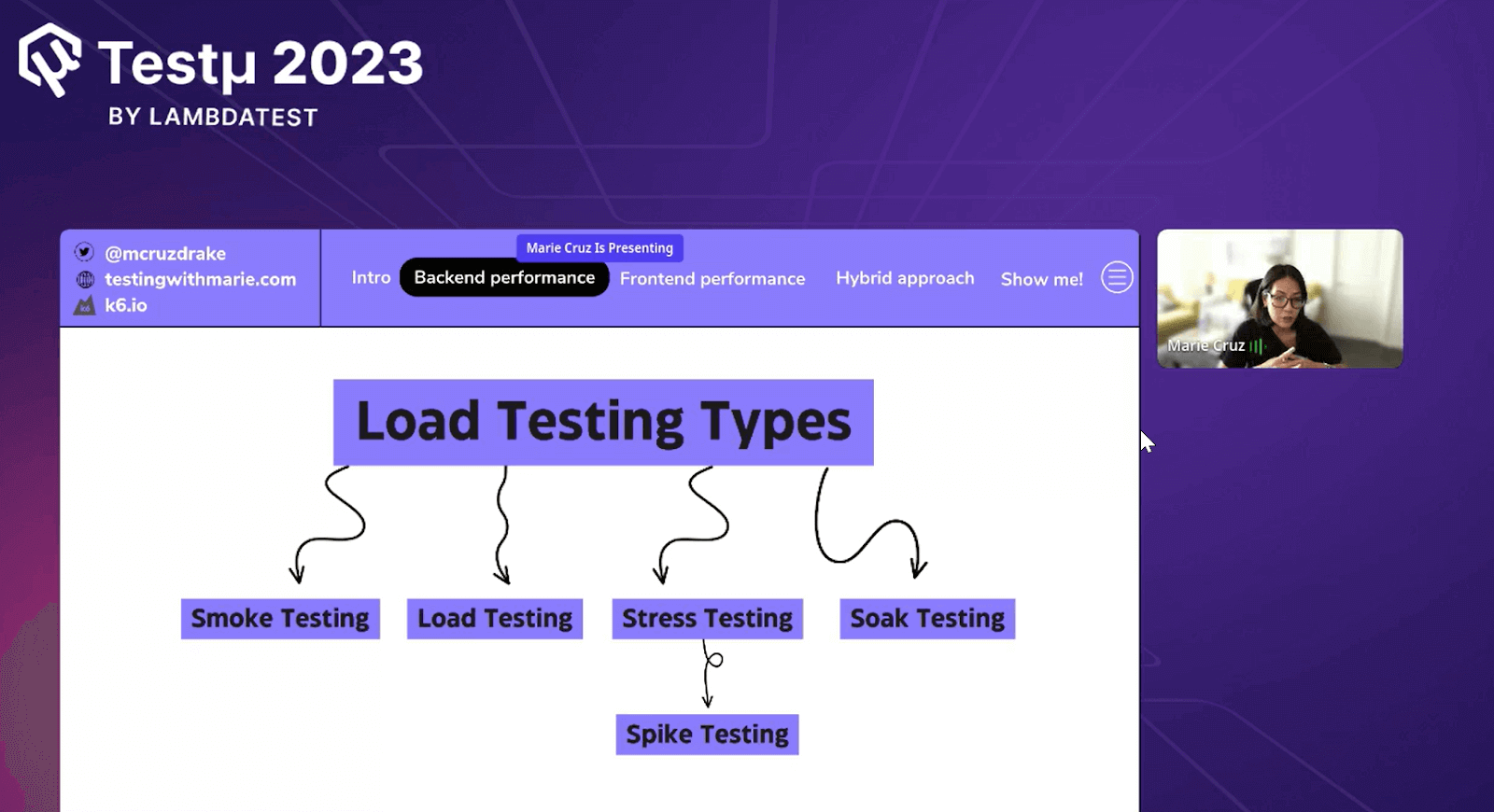
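To make the three metrics more concrete, here is a minimal sketch that rates a measurement against the "good" and "poor" boundaries Google publishes for Core Web Vitals. The function name `rateVital` and the sample values are assumptions made for illustration; they do not come from the session.

```javascript
// Boundaries published by Google for Core Web Vitals.
// LCP and FID are in milliseconds; CLS is a unitless score.
const THRESHOLDS = {
  LCP: { good: 2500, poor: 4000 },
  FID: { good: 100, poor: 300 },
  CLS: { good: 0.1, poor: 0.25 },
};

// Hypothetical helper: classify one measurement as
// 'good', 'needs improvement', or 'poor'.
function rateVital(name, value) {
  const limits = THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  if (value <= limits.good) return 'good';
  if (value <= limits.poor) return 'needs improvement';
  return 'poor';
}

// Made-up measurements from a single page load.
const sample = { LCP: 3100, FID: 80, CLS: 0.31 };
for (const [name, value] of Object.entries(sample)) {
  console.log(`${name}=${value} -> ${rateVital(name, value)}`);
}
```

In practice these values would come from a browser-level test or from real user monitoring rather than from a hard-coded object.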

Unveiling Load Testing Types

Marie then discusses some common misconceptions about performance vs. load testing. She then talks about types of testing that come under load testing.

- Smoke testing: Smoke testing gauges your system’s ability to handle minimal loads without issues. It’s useful for verifying script accuracy and basic functionality.

- Load testing: Load testing examines an application’s behavior under typical or expected loads. It helps identify performance degradation during load fluctuations.

- Stress testing: Stress testing evaluates a system’s performance at maximum expected loads. It’s essential for confirming your application’s ability to handle peak traffic.

- Spike testing: Spike testing simulates abrupt, intense traffic spikes to test system survivability. It’s crucial for events like Black Friday sales or high-demand situations.

- Soak testing: Soak testing extends testing periods to assess reliability over extended durations. It’s effective for uncovering memory leaks or intermittent issues.
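The test types above differ mainly in how many virtual users (VUs) they ramp to and for how long. The sketch below expresses each one as a list of `{ duration, target }` stages, the shape k6 uses for ramping load. Every number is an illustrative assumption rather than a recommendation from the talk, and `toSeconds` and `summarize` are hypothetical helpers added only to compare the profiles.

```javascript
// Hypothetical load profiles in the { duration, target } "stages" shape.
// 'target' is the number of virtual users to ramp to during 'duration'.
const profiles = {
  // Minimal load, mainly to verify the script itself works.
  smoke: [{ duration: '1m', target: 3 }],
  // Ramp up to a typical load, hold it, then ramp down.
  load: [
    { duration: '5m', target: 100 },
    { duration: '30m', target: 100 },
    { duration: '5m', target: 0 },
  ],
  // Same pattern, but at a higher, peak-level load.
  stress: [
    { duration: '10m', target: 200 },
    { duration: '30m', target: 200 },
    { duration: '5m', target: 0 },
  ],
  // Very fast ramp to an extreme load, then a quick drop.
  spike: [
    { duration: '2m', target: 2000 },
    { duration: '1m', target: 0 },
  ],
  // Typical load held for many hours to surface leaks.
  soak: [
    { duration: '5m', target: 100 },
    { duration: '8h', target: 100 },
    { duration: '5m', target: 0 },
  ],
};

// Convert a duration string such as '30m' or '8h' into seconds.
function toSeconds(duration) {
  const match = /^(\d+)(s|m|h)$/.exec(duration);
  if (!match) throw new Error(`Unsupported duration: ${duration}`);
  const unit = { s: 1, m: 60, h: 3600 }[match[2]];
  return Number(match[1]) * unit;
}

// Report the peak VU count and total run time of a profile.
function summarize(stages) {
  return {
    peakVus: Math.max(...stages.map((s) => s.target)),
    totalSeconds: stages.reduce((sum, s) => sum + toSeconds(s.duration), 0),
  };
}

for (const [name, stages] of Object.entries(profiles)) {
  const { peakVus, totalSeconds } = summarize(stages);
  console.log(`${name}: peak ${peakVus} VUs over ${totalSeconds / 60} min`);
}
```

In a k6 script, one of these arrays would typically be assigned to the `stages` option; keeping them side by side makes it easier to see that a soak test is a load test stretched in time, while a spike test is compressed and amplified.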

She then lists a few performance testing tools, such as Gatling, k6, JMeter, Artillery, and Locust. These tools simulate user traffic by sending requests, often over HTTP.

However, using different tools for front-end and back-end performance testing might create hassles related to test case creation, test maintenance to name a few. This is where a hybrid approach to ‘Performance Testing’ can bring-in significant benefits to the table🚀 k6 is one… pic.twitter.com/3t9WdEcgX9

— LambdaTest (@lambdatesting) August 22, 2023

Pros and Cons: Back-End and Front-End Performance Testing

Marie highlights the pros and cons of back-end and front-end testing.

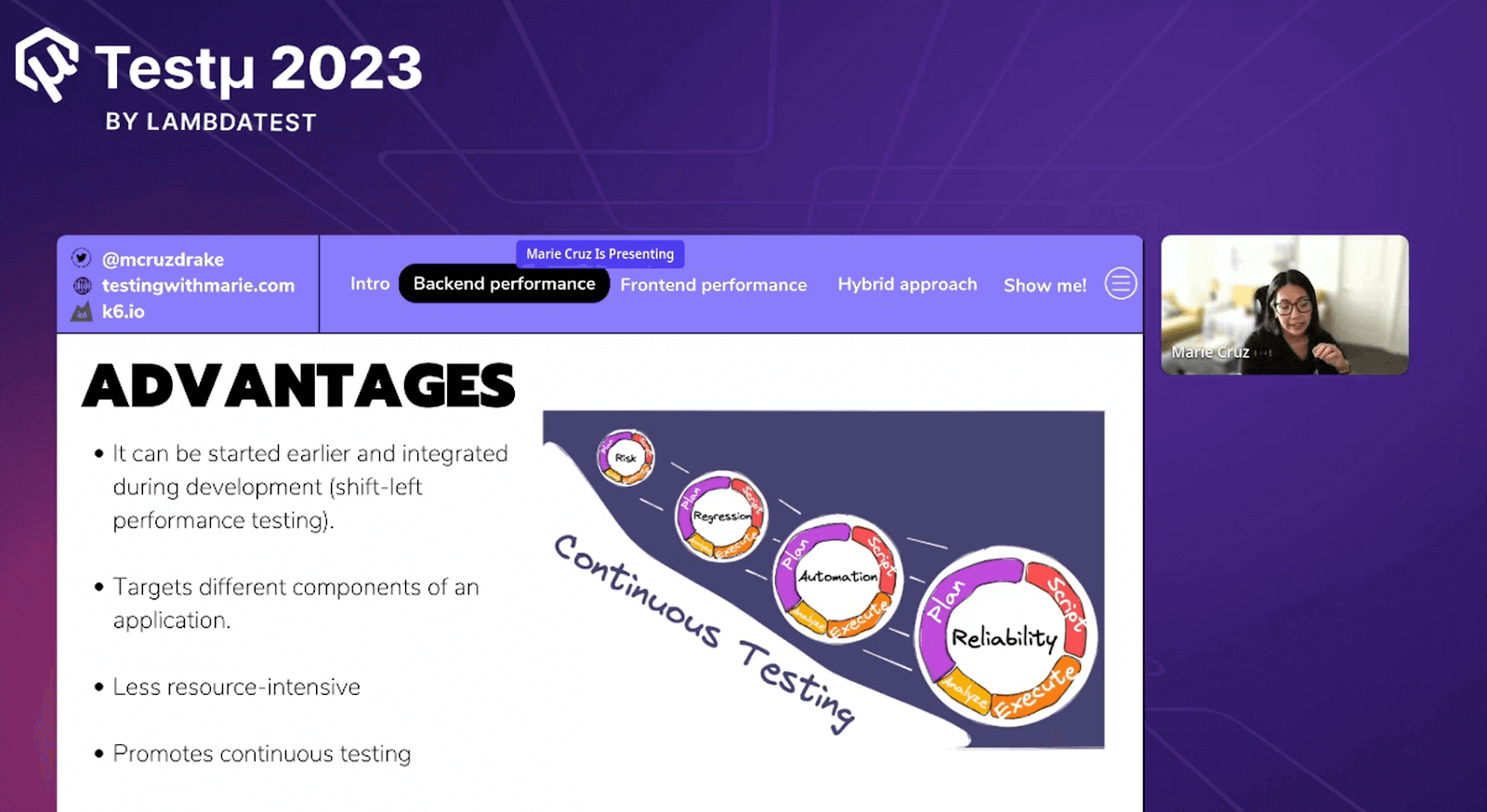

- Pros of Back-End Performance Testing: It offers early integration during development, scalability, and a less resource-intensive process. It promotes a continuous testing approach and tackles issues before they escalate.

- Cons of Back-End Performance Testing: Back-end testing may miss front-end issues, require maintenance as your application evolves, and lead to complex scripts for complex user flows.

- Pros of Front-End Performance Testing: Front-end testing directly assesses user experience, validates the “80% rule” of performance issues originating from the front end, and can be more accessible for test engineers.

- Cons of Front-End Performance Testing: Front-end testing doesn’t delve into back-end mechanics, lacks realistic traffic conditions, and may yield misleading results when scaling.

The Hybrid Approach: Front-End and Back-End

According to Marie, in order to harness the best of both worlds, consider the hybrid approach. This involves simulating most of the load at the protocol level while integrating browser virtual users to interact with the front end.

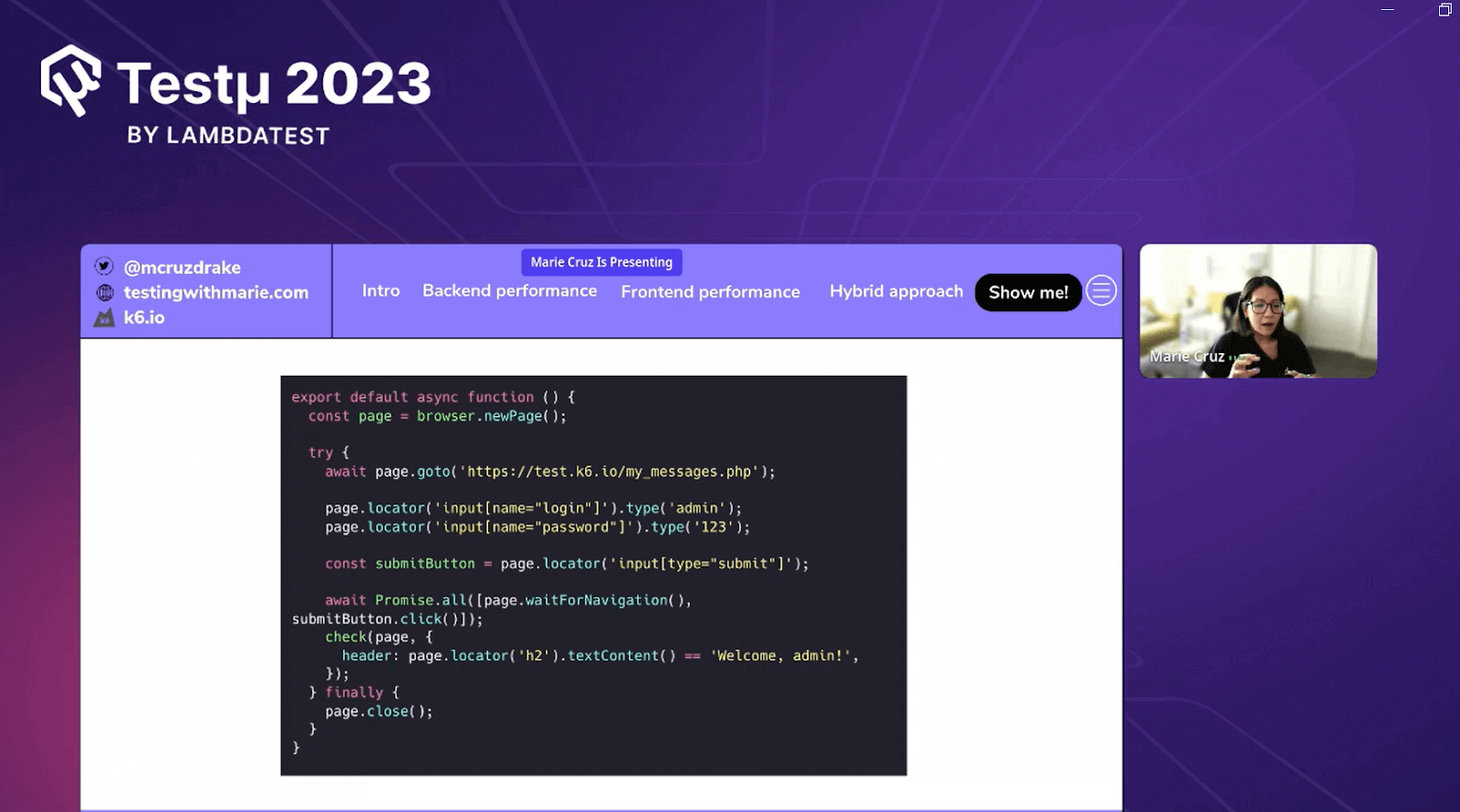

Performance testing tools like k6 aim to provide a seamless developer experience. k6 offers a browser module that blends browser and protocol tests, delivering valuable insights across the application.

Marie then demonstrates a practical example of hybrid performance testing with k6, showing how to run browser and protocol tests simultaneously and how to use scenarios to define distinct user interactions. Executing the hybrid performance test with k6 generates an aggregate view of browser and back-end metrics. These metrics give a comprehensive overview of application performance for further analysis and optimization.
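The demo script itself is not reproduced in this recap. As a rough sketch of the idea, the snippet below builds a configuration object in the shape of k6's `scenarios` and `thresholds` options: one scenario carries most of the load at the protocol level, and a second runs a small number of browser VUs. The helper `buildHybridOptions`, the scenario names, the `exec` function names, the VU counts, and the threshold values are all hypothetical, and the browser metric name is assumed to match the metric emitted by the k6 browser module.

```javascript
// Hypothetical helper: build a k6-style options object that mixes
// protocol-level and browser-level virtual users (VUs).
function buildHybridOptions({ protocolVus, browserVus, duration }) {
  return {
    scenarios: {
      // Most of the load: plain HTTP requests against the back end.
      backend: {
        executor: 'constant-vus',
        exec: 'protocolFlow', // assumed name of the protocol test function
        vus: protocolVus,
        duration,
      },
      // A few real browsers measuring what users actually see.
      frontend: {
        executor: 'constant-vus',
        exec: 'browserFlow', // assumed name of the browser test function
        vus: browserVus,
        duration,
        options: { browser: { type: 'chromium' } },
      },
    },
    thresholds: {
      // Illustrative pass/fail criteria for both layers.
      http_req_duration: ['p(95)<500'],
      browser_web_vital_lcp: ['p(95)<2500'],
    },
  };
}

const options = buildHybridOptions({
  protocolVus: 50,
  browserVus: 2,
  duration: '2m',
});

// Share of VUs that drive a real browser in this configuration.
const totalVus = options.scenarios.backend.vus + options.scenarios.frontend.vus;
const browserShare = options.scenarios.frontend.vus / totalVus;
console.log(`Browser VUs: ${(browserShare * 100).toFixed(1)}% of ${totalVus}`);
console.log(JSON.stringify(options.scenarios, null, 2));
```

In an actual k6 script, an object like this would be exported as `options`, next to exported `protocolFlow` and `browserFlow` functions that issue HTTP requests and drive a browser page respectively. Keeping the browser share small reflects the trade-off described above: browser VUs are far more resource-intensive than protocol VUs.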

Q&A Session!

- Could you delve into the intricacies of how this approach intelligently combines virtual users and real user data to provide a more comprehensive performance analysis?

- Can you please shed some light on using k6 for load testing of SQL or NoSQL databases?

- Is there any 1 to 1 migration possible for migrating existing JMeter test cases? If not, do you plan to incorporate the feature to onboard more users?

Marie: This approach involves a strategic combination of virtual users and real user data for a thorough performance analysis. When employing the k6 browser, the method entails scripting for controlled environments generating synthetic data. k6’s integration with Grafana Labs facilitates the utilization of complementary tools.

Striking a balance is crucial; relying solely on synthetic data is inadequate. Tools like the Chrome User Experience Report or Google Search Console are useful for quick overviews of real user data. Yet real user data has limitations: it is slower to reflect changes. This underscores the need for synthetic tools like k6 to gauge immediate impacts.

Marie: Utilizing k6 for load testing of databases involves extensions designed for this purpose. On k6’s extensions page, available within k6 Studio, one can explore various sample extensions tailored for load testing different databases.

These extensions empower users to simulate and assess the impact of heavy loads on SQL or NoSQL databases. The community-contributed insights, along with documented scenarios, assist in devising effective load tests and evaluating database performance.

Marie: The objective of minimizing the resource footprint for browser-level replay is indeed a priority. We focus on gradually enhancing the capabilities of the browser API within k6. The ultimate aim is to align closely with the Playwright API, striving for compatibility. This alignment empowers users to replicate user journey flows primarily at the browser level.

The team is committed to refining this process and ensuring it’s seamless. Our GitHub repository hosts an open issue tracking the APIs still in development. Rest assured, our team is diligently working towards achieving Playwright API compatibility, significantly reducing reliance on protocol-level scripting for automated processes.

If you have any further questions about the hybrid approach to performance testing, please reach out to the LambdaTest Community.
