Building and Testing AI-Agent Powered LLM Applications: A Live Demonstration [Spartans Summit 2025]

LambdaTest

Posted On: March 18, 2025

The Spartans Summit 2025 brought together AI and testing enthusiasts for an insightful session on AI agent-powered Large Language Model (LLM) applications.

In this session of the LambdaTest Spartans Summit 2025, Srinivasan Sekar and Sai Krishna, Directors of Engineering at LambdaTest, explore AI agent architectures, practical testing strategies, and real-world applications.

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings by visiting the LambdaTest YouTube Channel.

Here is a quick rundown of the entire session!

What Is Retrieval-Augmented Generation (RAG)?

Sai starts the session by explaining how Retrieval-Augmented Generation enhances LLMs by retrieving relevant documents before generating a response.

He illustrates this with an example: If an employee asks about a company’s remote work policy, an AI system using RAG would retrieve the exact policy document and generate a precise response rather than relying solely on pre-trained data.

Sai further elaborates on the evolution of RAG and how businesses are leveraging it to improve AI model accuracy and reduce hallucinations. He discusses how RAG is particularly useful in industries where information is constantly changing, such as finance and healthcare.

He breaks down the process into the following steps:

- User Query: The user submits a question to the AI system.

- Embedding Model: The system converts the query into numerical data (vector format) that helps in searching for similar content.

- Vector Database Search: The AI compares the user’s query with stored document chunks in a vector database to find the most relevant information.

- Retrieved Documents: The system fetches the most relevant chunks of information from the database.

- Prompt Construction: The AI combines the retrieved information with the user’s question to create a detailed prompt for further processing.

- Large Language Model Processing: The AI model, such as GPT-4, uses both its internal knowledge and the retrieved information to generate a response.

- User Response: The AI delivers a well-structured answer based on real-time retrieved data rather than relying solely on pre-trained knowledge.
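The retrieval half of these steps can be sketched in a few lines of Python. This is a minimal illustration, not the code from the session: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the `CHUNKS` list plays the role of a vector database, and the policy sentences are made-up sample data. The LLM call itself is left out; the sketch stops at prompt construction.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Rank stored chunks by similarity to the query and keep the best ones."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved chunks with the user's question into one prompt."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}"
    )


# Made-up sample data standing in for indexed document chunks.
CHUNKS = [
    "Remote work policy: employees may work remotely up to three days per week.",
    "Cafeteria hours: open from 8 am to 3 pm on weekdays.",
    "Remote work requests must be approved by a manager.",
]
```

Calling `build_prompt(question, retrieve(question, CHUNKS))` produces the text that would be sent to the language model in the final step.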

Limitations of RAG Models

Sai explains why RAG alone isn’t sufficient: “RAG allows LLMs to retrieve external knowledge, but it follows a fixed workflow. It simply fetches relevant documents and synthesizes responses. But what if we need more than just retrieval? What if we need reasoning, action, or interaction with multiple systems?”

RAG models have the following limitations:

- Restricted to available data: They can only retrieve from pre-indexed sources and cannot perform real-time actions.

- Lack of decision-making: They retrieve, but they do not plan or execute actions.

- Limited adaptability: If the queried data is missing or outdated, a RAG model has no way to find new sources dynamically.

Why Do You Need AI Agents?

To overcome these challenges with RAG models, Sai introduces AI agents, explaining that they add a layer of intelligence over basic retrieval models:

AI agents allow an AI system to think, reason, and take action. They decide which module to invoke, which data source to query, and even what steps to take next.

Sai highlights the difference between a compound system and an agentic system. A compound system retrieves and presents information quickly without reasoning, while an agentic system thinks, plans, and decides dynamically.

Sai Krishna & Srinivasan Sekar discuss balancing Compound vs. Agentic Systems in AI testing. Efficiency | UX | Adaptability | Resources – AI isn’t just automation, it’s smart optimization pic.twitter.com/yK1wRdwjnF

— LambdaTest (@lambdatesting) February 25, 2025

He further explains that AI agents function beyond traditional AI models in the following ways:

- Dynamic decision-making: Agents don’t just retrieve; they decide the next steps based on query complexity.

- External tool integration: Agents can trigger web searches, call APIs, interact with databases, or perform actions autonomously.

- Handling complex workflows: Instead of following predefined scripts, agents can autonomously break down complex queries into subtasks and execute them.

RAG vs. AI Agents: Key Differences

Sai illustrates the difference between RAG and AI agents using a real-world example:

Imagine you are using ChatGPT and ask it about the latest developments in Appium in 2025. If it’s a RAG system, it will search for relevant indexed documents and return what it finds. But if the information is missing or outdated, it cannot help.

Now, with an AI agent, it recognizes the data gap, dynamically triggers a Google search, extracts the latest updates from Appium’s GitHub repository, and integrates it with existing knowledge before responding.

This agentic approach allows AI systems to:

- Retrieve new information instead of relying only on pre-existing data.

- Adapt and take real-time actions instead of following static workflows.

- Handle multi-step problem-solving, making AI more autonomous and less dependent on human intervention.
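The difference can be made concrete with a small sketch. It assumes a deliberately crude relevance score (keyword overlap) and a stubbed web search; both are hypothetical placeholders, and the 0.5 cut-off is an arbitrary illustrative value. The point is only the control flow: the agent checks whether the index can answer the query and, if not, decides to call another tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    source: str  # "index" when answered from indexed documents, "web" otherwise


def keyword_overlap(query: str, doc: str) -> float:
    """Fraction of query words that also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(doc.lower().split())
    return len(query_words & doc_words) / len(query_words) if query_words else 0.0


def agent_answer(
    query: str,
    index: list[str],
    web_search: Callable[[str], str],
    min_score: float = 0.5,
) -> Answer:
    """Answer from the index when it looks relevant; otherwise fall back to search."""
    best = max(index, key=lambda doc: keyword_overlap(query, doc), default="")
    if best and keyword_overlap(query, best) >= min_score:
        return Answer(best, "index")
    # Data gap detected: the agent chooses a different tool instead of giving up.
    return Answer(web_search(query), "web")


def fake_web_search(query: str) -> str:
    """Stub standing in for a real search tool."""
    return f"[stubbed web result for: {query}]"
```

A plain RAG pipeline corresponds to the first branch only; the fallback branch is what the agentic layer adds.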

Sai also addresses an attendee’s question about how to test AI-powered LLM applications. He begins by highlighting the challenges testers face with LLM-based systems: unlike traditional software, LLMs produce probabilistic outputs that can vary with each run.

This makes conventional assertion-based testing insufficient. Instead, testers need to focus on evaluating the accuracy, contextual relevance, and bias in the responses.

Sai emphasizes that building these applications requires a different mindset, one that embraces human-in-the-loop testing, robust benchmark datasets, and even adversarial testing to identify where the model might falter. The goal is to ensure that the system not only functions correctly but also maintains high quality and reliability in real-world scenarios.
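One way to picture the shift away from exact-match assertions is a similarity check against a reference answer. The sketch below uses word-level matching from Python's standard `difflib` module as a rough lexical proxy; real evaluations typically rely on semantic similarity or LLM-based graders, and the 0.8 threshold and sample sentences are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def similarity(expected: str, actual: str) -> float:
    """Word-level similarity ratio between 0.0 and 1.0 (case-insensitive)."""
    expected_words = expected.lower().split()
    actual_words = actual.lower().split()
    return SequenceMatcher(None, expected_words, actual_words).ratio()


def is_acceptable(expected: str, actual: str, threshold: float = 0.8) -> bool:
    """Accept an answer that is close enough to the reference, not only identical."""
    return similarity(expected, actual) >= threshold
```

An assertion such as `assert is_acceptable(reference, model_output)` tolerates run-to-run wording changes that `assert model_output == reference` would reject.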

The attendee also asked how KaneAI, a GenAI-native test agent by LambdaTest, differs from typical AI agents. Sai explains that while most agents perform multiple tasks by delegating responsibilities, KaneAI operates as a fully self-contained agent.

Typically, an AI agent is given a task, breaks it down into sub-tasks, and might even hand off parts of the process to another specialized agent or tool. For example, if you build a weather agent, it might first fetch weather reports from external APIs and then pass that information to a dedicated weather analyzer agent for further processing.

In contrast, KaneAI doesn’t delegate responsibilities to other agents. Instead, it integrates multiple tools within its own architecture. When a task is assigned, KaneAI processes the input and directly routes it to an internal tool designed to handle that particular function. This integrated design reduces the communication overhead typically seen in multi-agent systems and streamlines the overall process.
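The two designs can be contrasted in a generic sketch. Nothing here reflects KaneAI's actual implementation: the agent functions, the tool names (`navigate`, `assert_text`), and the hardcoded temperature are all hypothetical stubs that only show the structural difference between handing work to another agent and routing to an internal tool.

```python
from typing import Callable


# Multi-agent style: one agent hands its output to another specialized agent.
def weather_fetcher_agent(city: str) -> dict:
    # Stubbed value; a real agent would call an external weather API here.
    return {"city": city, "temp_c": 31}


def weather_analyzer_agent(report: dict) -> str:
    return "hot" if report["temp_c"] >= 30 else "mild"


def multi_agent_pipeline(city: str) -> str:
    return weather_analyzer_agent(weather_fetcher_agent(city))


# Self-contained style: a single agent routes each task to one of its own tools.
class SingleAgent:
    def __init__(self) -> None:
        self.tools: dict[str, Callable[[str], str]] = {
            "navigate": lambda url: f"navigated to {url}",
            "assert_text": lambda text: f"checked page for {text!r}",
        }

    def run(self, tool_name: str, payload: str) -> str:
        if tool_name not in self.tools:
            raise ValueError(f"no internal tool named {tool_name!r}")
        return self.tools[tool_name](payload)
```

In the second style there is no message passing between agents, which is where the reduced communication overhead comes from.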

With the rise of AI in testing, it’s crucial to stay competitive by upskilling and sharpening your skill set. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

Demo: AI-Powered Chat With PDFs Using RAG and Agents

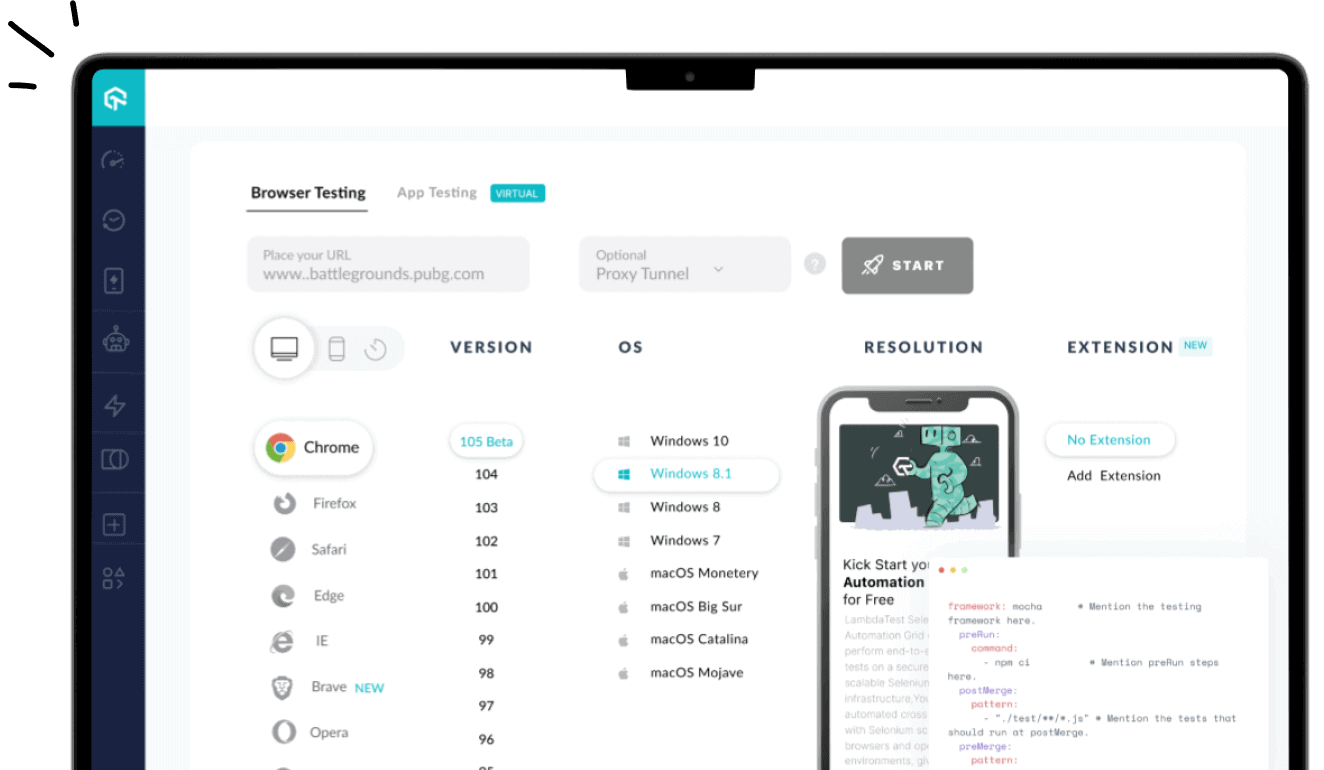

Srinivasan then demonstrates a simple application that enables users to chat with a PDF document. The demo showcases how the application utilizes Retrieval-Augmented Generation techniques to fetch relevant information from a given document and enhance responses using external sources when needed.

The application allows users to interact with a PDF document by querying it for information. The demo uses a sample document, app.pdf, which contains details about Appium’s architecture from its early versions (Appium 1.0) to its current state (Appium 2.0 and later).

When a user asks a question such as “What is Appium’s architecture?”, the system retrieves relevant content from the PDF. To achieve this, the demo uses OpenAI’s GPT-4 for reasoning along with OpenAI’s text-embedding-3-small embedding model. The RAG model works by indexing additional documents, embedding them, and storing them for efficient retrieval.

The document is read from the data directory, chunked, and stored in a vector store using LlamaIndex. This allows efficient retrieval based on semantic similarity. The system processes queries by searching for relevant document chunks in the vector store and uses similarity search to fetch relevant information.
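The chunking step can be illustrated without any library. The demo relies on LlamaIndex for this; the function below is only a simplified character-based sketch of the same idea, with overlapping windows so that a sentence cut at a chunk boundary still appears intact in a neighbouring chunk. The default sizes are arbitrary assumptions.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: list[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
        start += step
    return chunks
```

Each chunk would then be embedded and stored alongside its vector so that a similarity search can return the original text.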

If the document does not contain updated or relevant information, a Google search agent is triggered. The system utilizes google-this, a Node.js module, for searches and Surfer as a fallback to refine searches. The search is specifically scoped to the Appium GitHub organization to retrieve the latest updates.
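A primary search with a fallback, scoped to one site, might be wired up roughly as follows. The demo's search tools are Node.js modules; this Python sketch does not call them and instead takes the two search functions as parameters. The use of a `site:` prefix for scoping and the `github.com/appium` value are assumptions about how such a restriction could be expressed, not details confirmed in the session.

```python
from typing import Callable

SearchFn = Callable[[str], list[str]]


def scoped_query(question: str, site: str = "github.com/appium") -> str:
    """Restrict a search query to one site using the `site:` operator."""
    return f"site:{site} {question}"


def search_with_fallback(question: str, primary: SearchFn, fallback: SearchFn) -> list[str]:
    """Try the primary search tool; use the fallback if it fails or finds nothing."""
    query = scoped_query(question)
    try:
        results = primary(query)
    except Exception:
        # Treat any failure of the primary tool as "no results".
        results = []
    return results or fallback(query)
```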

Once data is retrieved from both the PDF and Google search, GPT-4 evaluates the relevancy and combines both sources to generate an accurate response. The final response includes citations, indicating whether the information was sourced from the PDF or an external source.
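A simple way to keep track of where each piece of information came from is to carry a source label through the pipeline. In the sketch below the relevancy scores are supplied as plain numbers; in the demo that judgment is made by GPT-4, so the `score` field, the 0.5 cut-off, and the citation format are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    text: str
    source: str   # e.g. "pdf" or "web"
    score: float  # relevancy between 0 and 1, assumed to be computed upstream


def build_cited_context(passages: list[Passage], min_score: float = 0.5) -> str:
    """Keep relevant passages, most relevant first, each tagged with its source."""
    kept = sorted(
        (p for p in passages if p.score >= min_score),
        key=lambda p: p.score,
        reverse=True,
    )
    return "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(kept, start=1)
    )
```

The numbered, source-tagged lines can be placed in the prompt so the model can cite `[1]`, `[2]`, and so on in its answer.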

A test case is run with the query, “What are the latest developments in Appium as of 2025?” Since the PDF is outdated, the system leverages the Google agent to fetch recent updates from Appium’s GitHub.

The system evaluates each response for faithfulness, correctness, and relevancy, and applies a 70% threshold to decide whether the result is reliable.
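A threshold gate of this kind is straightforward to express in code. The sketch assumes that scores are normalized to the range 0 to 1 and that the 70% threshold applies to each metric separately; the session does not spell out either detail, and the function names are hypothetical.

```python
REQUIRED_METRICS = ("faithfulness", "correctness", "relevancy")


def failing_metrics(scores: dict[str, float], threshold: float = 0.70) -> list[str]:
    """Return the required metrics whose score is missing or below the threshold."""
    return [m for m in REQUIRED_METRICS if scores.get(m, 0.0) < threshold]


def passes(scores: dict[str, float], threshold: float = 0.70) -> bool:
    """True only when every required metric meets the threshold."""
    return not failing_metrics(scores, threshold)
```

Reporting which metrics failed, rather than a single pass or fail flag, makes it easier to tell a hallucination (low faithfulness) from an off-topic answer (low relevancy).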

Srinivasan highlights observability as a crucial factor for maintaining system performance. Monitoring includes analyzing query patterns, token consumption, source reliability, error rates, and response accuracy.
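A minimal in-process collector shows the kind of counters involved. The class and field names are hypothetical, and a production setup would more likely export these values to a dedicated monitoring tool than keep them in memory.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class QueryLog:
    total: int = 0
    errors: int = 0
    tokens: int = 0
    sources: Counter = field(default_factory=Counter)

    def record(self, *, tokens: int, source: str, ok: bool = True) -> None:
        """Record one handled query: token usage, answering source, and outcome."""
        self.total += 1
        self.tokens += tokens
        self.sources[source] += 1
        if not ok:
            self.errors += 1

    @property
    def error_rate(self) -> float:
        """Share of recorded queries that ended in an error."""
        return self.errors / self.total if self.total else 0.0
```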

He then introduces agent-based AI as a more advanced approach in which workflows are determined dynamically rather than hardcoded.

He takes the example of Browser Use, which acts as an agent to automate web interactions. Potential applications of agents in testing include automating repetitive QA tasks, integrating with Jira to generate test cases, and using agents to analyze logs and errors from CI/CD pipelines.

Q&A Session

Here are some questions asked by the attendees, followed by the speakers’ answers in the same order:

- How do you ensure the reliability and accuracy of AI agent responses across different scenarios?

- How is a vector database designed and what are the components involved in it?

- Can we create agents that do all the repetitive tasks and handle the workload so that, as a QA, I only need to review the final reports?

- What are the best practices for testing applications to handle bias and ethical concerns?

Sai: Always ensure you have ground-truth (golden) data as a reference. We’ve shown only a few metrics, but there are many others to consider. You don’t need to implement all of them. Discuss with your team and identify the most important ones for your use case.

For example, if faithfulness is critical, you’ll want to prevent hallucinations. Similarly, correctness and similarity checks can help: if an answer has 80% similarity to the expected response, that might be acceptable.

Always take a holistic evaluation approach rather than relying entirely on an LLM. Different models may generate varying outputs, and switching between them could introduce inconsistencies.

Even when generating test data, ensure human intervention for validation before feeding it into the models. This helps capture and measure the right evaluation metrics effectively.

Srinivasan: Our sample query utilizes an in-memory vector database. Many vector databases are available, but one I’ve worked with extensively is Pinecone.

It offers excellent guidance on database design. I recommend exploring Pinecone’s resources for insights into building an efficient vector database.

Srinivasan: One area that often lacks validation is report analysis. In CI, we run numerous tasks frequently, and someone has to manually check failures and investigate what went wrong, especially in Appium server logs.

A useful solution could be a reporting agent that analyzes Appium logs, identifies patterns, and, if needed, performs a Google search to interpret specific errors. This would significantly reduce manual effort in diagnosing Appium-related issues.
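The pattern-identification part of such an agent can be approximated with plain text processing. The sketch below groups error lines that differ only in numbers such as session IDs or timeouts; the log format in the usage example is invented for illustration and is not real server output.

```python
import re
from collections import Counter


def normalize(message: str) -> str:
    """Replace numbers and hex IDs so that similar errors group together."""
    return re.sub(r"\b(0x[0-9a-f]+|\d+)\b", "<n>", message.strip(), flags=re.IGNORECASE)


def top_error_patterns(log_lines: list[str], limit: int = 3) -> list[tuple[str, int]]:
    """Count normalized ERROR messages and return the most frequent ones."""
    counts: Counter = Counter()
    for line in log_lines:
        match = re.search(r"\bERROR\b\s*(.*)", line)
        if match:
            counts[normalize(match.group(1))] += 1
    return counts.most_common(limit)


# Invented sample lines; the format is an assumption made for this sketch.
SAMPLE_LOG = [
    "2025-02-25 10:00:01 ERROR Session 4821 not created: timeout after 30000 ms",
    "2025-02-25 10:05:09 ERROR Session 4830 not created: timeout after 30000 ms",
    "2025-02-25 10:06:00 INFO Session 4831 created",
    "2025-02-25 10:07:12 ERROR Element not found: id=login",
]
```

The most frequent pattern is a natural candidate to pass to a search tool or an LLM for interpretation.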

Sai: Another possibility is an agent integrated with Jira that can extract relevant details and draft test cases. While it may not be entirely accurate, it could handle around 50% of the work, giving testers a solid starting point.

You can then review, refine, and adjust the scenarios as needed. This is a great example of how these tools can save time: rather than replacing jobs, they enhance efficiency.

Srinivasan: Observability is key. Based on our consulting experience, one of the best practices is closely monitoring system behavior, particularly regarding ethical and bias concerns.

As testers, we might unintentionally introduce bias, which is problematic. We often assume everything is fine before deployment, but real users may interact with the system in unexpected ways.

Understanding how users engage with your application is becoming more critical than ever. In agentic applications, interpreting user instructions correctly while delivering accurate responses is essential.

However, there’s always a risk of altering the intended meaning. Tracking a wide range of instructions and monitoring real-world usage helps build confidence, though perfection isn’t achievable. Instead, the goal is to ensure fairness and reliability across numerous interactions.
