
AI in Performance Testing: Ultimate Guide 2025

Tahneet Kanwal

Posted On: July 23, 2025


When running performance tests, you might find it challenging to validate performance metrics such as response time, throughput, and resource utilization. These evaluations can be complex and time-consuming, and they often involve a considerable amount of manual work.

One way to overcome this challenge is to use AI in performance testing, which can automatically analyze these metrics. Intelligent algorithms simulate realistic traffic patterns, predict how the software will behave under specific conditions, and identify performance bottlenecks. This enables quicker and more robust performance testing.

Overview

Performance testing ensures software performs well under varying load conditions, but it’s often manual, slow, and complex. AI brings intelligence and automation to streamline and improve the accuracy of performance testing.

Role of AI in Performance Testing

- Smart Resource Allocation: AI optimizes system resource usage in real time.

- Deep Root Cause Detection: Identifies the exact component causing performance lags.

- Scalability Insights: Predicts app performance as user load increases.

- Forecasting with Predictive Analysis: Detects potential slowdowns before they happen.

- Live Anomaly Spotting: Catches performance issues as they occur during testing.

- Automation of Repetitive Tasks: Speeds up test cycles by auto-generating tests and reports.

Top AI Tools for Performance Testing

- LambdaTest KaneAI: A GenAI-native software testing agent that helps you create, debug, and evolve tests using plain English, increasing QA efficiency.

- StormForge: Optimizes Kubernetes apps by simulating traffic and recommending configurations.

- Telerik Test Studio: Auto-updates UI test scripts and speeds up test execution using AI.

Current AI Trends in Performance Testing

- AI-Generated Test Scripts: Automatically writes and maintains tests as code evolves.

- Real-World Load Simulation: Predicts software performance using historical user data.

- Instant Issue Detection: Monitors live metrics and alerts teams to unusual patterns.

- Self-Healing Automation: Fixes broken test scripts without manual intervention.

- CI/CD Integration: Embeds AI performance testing in every code commit for continuous feedback.

Limitations of Traditional Performance Testing

- Static Resource Allocation: Can’t dynamically adjust to real-time load changes.

- Rigid Test Cases: Manual scripts often miss evolving user behaviors.

- Weak Traffic Simulation: Fails to mirror realistic usage patterns at scale.

- Scalability Barriers: Testing at high volume is costly and complex.

- Missed User Scenarios: Doesn’t cover unpredictable, real-world workflows.

What Is AI in Performance Testing?

AI in performance testing applies artificial intelligence techniques to make the evaluation of software performance more efficient and intelligent. It automates the analysis of large volumes of test data, identifies traffic patterns, and provides real-time suggestions that help predict how a software application behaves under varying load conditions. If you're looking for the broader picture, here's a complete guide to AI testing.

This allows you to quickly spot performance bottlenecks and fix them without doing everything manually. Using AI, you can also automate writing test cases and test scripts, further speeding up performance testing.
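Much of that analysis starts with reducing raw test results to the metrics mentioned earlier. The following is a minimal sketch in Python. The `Sample` record format, the nearest-rank percentile method, and the threshold values are illustrative assumptions, not the behavior of any particular tool. The sketch condenses a run into p95 response time, throughput, and error rate, then flags any metric that exceeds its limit. AI-assisted tools can then layer pattern recognition on top of summaries like these.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One request from a load test run (hypothetical record format)."""
    timestamp_s: float  # completion time, in seconds since the run started
    latency_ms: float   # observed response time
    ok: bool            # False if the request failed


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples):
    """Reduce raw samples to p95 latency, throughput, and error rate."""
    latencies = [s.latency_ms for s in samples]
    times = [s.timestamp_s for s in samples]
    duration = max(times) - min(times)
    return {
        "p95_ms": percentile(latencies, 95),
        # Approximate requests per second over the observed window;
        # undefined (NaN) for a zero-length run.
        "throughput_rps": len(samples) / duration if duration > 0 else float("nan"),
        "error_rate": sum(not s.ok for s in samples) / len(samples),
    }


def breaches(summary, limits):
    """Return the names of metrics whose value exceeds its upper limit."""
    return [name for name, limit in limits.items() if summary[name] > limit]


if __name__ == "__main__":
    run = [Sample(float(t), 100.0 + t, t != 3) for t in range(10)]
    stats = summarize(run)
    print(stats)
    # Hypothetical limits: p95 at most 105 ms, error rate at most 20%.
    print(breaches(stats, {"p95_ms": 105.0, "error_rate": 0.2}))  # ['p95_ms']
```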

Why Leverage AI in Performance Testing?

Artificial intelligence brings significant benefits to performance testing, addressing the challenges of traditional testing methods.

Here is how leveraging AI in performance testing can enhance your entire test process:

- Optimal Resource Allocation: AI adjusts system resources to match current demand, so they are used efficiently and overloads are avoided during peak periods.

- Root Cause Analysis: By analyzing latency data from distributed systems, AI can detect latency and drill down to the specific component (e.g., network, database, or server) that is causing it.

- Scalability Prediction: AI can help simulate and predict how a software application will perform as the number of users grows. This gives you insight into scalability without running performance tests at extreme load levels.

- Predictive Analysis: AI can analyze large amounts of historical data to predict how a software application will behave under different loads. Based on past performance and user behavior, it can flag potential issues that may slow the application down.

- Real-time Anomaly Detection: AI can detect anomalies in real time during load testing. AI algorithms analyze performance metrics, user interactions, and other important data during test execution, allowing early identification of issues such as slow response times and high resource utilization.

- Task Automation: AI-powered tools can automate repetitive tasks such as test generation and test reporting. This lets you run performance tests faster and focus on other aspects of performance.

Predictive analysis in particular helps you plan for capacity and scalability ahead of time. Rather than waiting for issues to surface in real-world scenarios, AI can detect them early, helping ensure the software can handle expected future loads.
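The scalability prediction and predictive analysis described above can be illustrated with a deliberately simple model. The sketch below is an assumption, not how any specific tool works. It fits a least-squares line to p95 latency from past runs at different user counts, then extrapolates the load at which latency would reach a service-level target (`sla_ms`, a hypothetical parameter). Real systems usually degrade non-linearly as they approach saturation. Treat a linear estimate as an optimistic first approximation that richer models, and an actual test at that load, should confirm.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("user counts must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_max_users(users, p95_ms, sla_ms):
    """Extrapolate the user count at which p95 latency would reach the SLA.

    Returns None when latency does not grow with load in the data,
    since a linear trend then gives no meaningful limit.
    """
    slope, intercept = fit_line(users, p95_ms)
    if slope <= 0:
        return None
    return (sla_ms - intercept) / slope


if __name__ == "__main__":
    # Hypothetical results from four earlier load test runs.
    users = [100, 200, 300, 400]
    p95 = [120, 140, 160, 180]
    print(estimate_max_users(users, p95, sla_ms=300))  # about 1000 users
```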

Top AI Tools for Performance Testing

QA teams may require AI testing tools to evaluate the performance of software applications in various ways. However, choosing the right tool depends on your project’s particular needs and objectives.

Here are some of the top AI tools for performance testing:

LambdaTest KaneAI

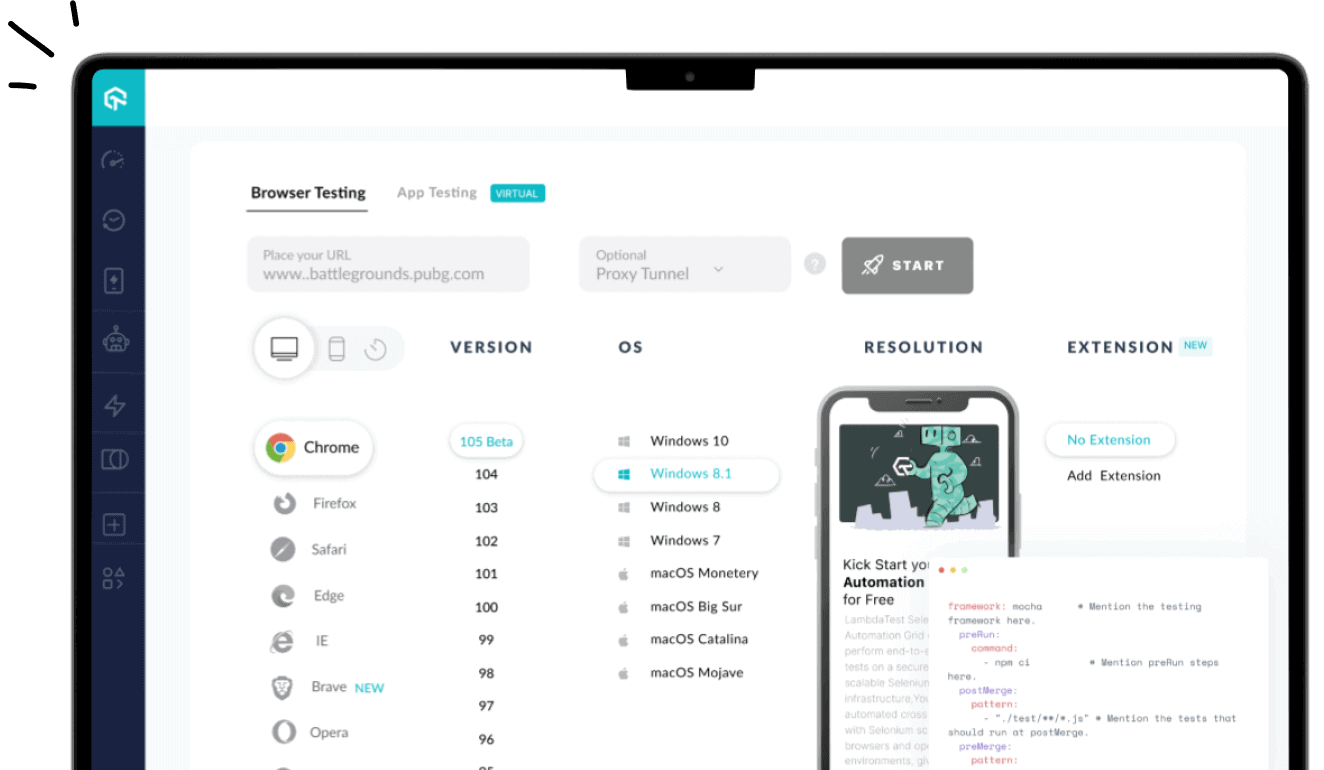

LambdaTest KaneAI is an AI-native automation testing agent designed to support fast-moving AI QA teams. It lets you create, debug, and enhance tests using natural language, making test automation quicker and easier without needing deep technical expertise.

Features:

- Intelligent Test Generation: Automates the creation and evolution of test cases through NLP-driven instructions.

- Smart Test Planning: Converts high-level objectives into detailed, automated test plans.

- Multi-Language Code Export: Generates tests compatible with various programming languages and frameworks.

- Show-Me Mode: Simplifies debugging by converting user actions into natural language instructions for improved reliability.

- API Testing Support: Easily include backend tests to improve overall coverage.

- Wide Device Coverage: Run your tests across 3000+ browsers, operating systems, and devices.

With the rise of AI in testing, it's crucial to stay competitive by upskilling or polishing your skill sets. The KaneAI Certification demonstrates your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

Learn more about AI testing and how it helps reduce manual effort, accelerate releases, and improve test accuracy.

StormForge

StormForge is an AI-driven performance testing tool for optimizing and automating Kubernetes applications. It offers tools for testing application performance, analyzing costs, and optimizing resource usage, helping organizations improve the efficiency and reliability of their containerized applications on Kubernetes.

Features:

- Analyzes data to predict performance issues and recommend proactive optimizations.

- Optimizes resource allocation, reducing cloud costs while maintaining high software performance.

- Simulates real-world traffic conditions, identifying bottlenecks and performance improvement areas.

Telerik Test Studio

Telerik Test Studio is an automated testing tool designed for desktop, web and mobile applications. It supports functional, load, performance, and API testing to ensure software quality. Both technical and non-technical users can use Telerik Test Studio to run and maintain automated tests.

Features:

- Automates UI validation using AI-driven visual checks.

- Integrates with various test management tools and uses AI to speed up test case design, management, and execution.

- Uses AI to automatically detect and fix issues in test scripts when software elements change.

Current AI Trends in Performance Testing

The future of AI in performance testing will focus on improving productivity. According to the Future of Quality Assurance Report, 60.60% of organizations think that manual intervention will still be important in the testing process. However, AI will help make tasks faster and easier, working alongside humans to get better results.

Let’s look at how AI will impact performance testing:

- AI-Generated Test Scripts: AI helps automate the process of writing test scripts. Generating tests with AI improves test coverage and reduces the need for manually writing test scripts. As software evolves, AI can even adjust the test scripts accordingly, ensuring that they remain updated without requiring manual intervention.

- Predictive Analytics: Predictive analytics will make load testing more realistic by replicating real-world user behavior. Using historical data, AI will estimate software performance under various load conditions and identify potential issues.

- Instant Issue Detection: AI enables continuous, real-time monitoring of software performance. By analyzing real-time data, AI can instantly detect anomalies or patterns that indicate performance issues.

- Self-Healing Automation: AI helps maintain your test scripts by automatically updating and fixing them, which further speeds up performance testing. As software applications change, AI will detect broken test scripts and adjust them without human involvement. This keeps performance testing running smoothly with minimal manual effort, even as applications are updated.

- CI/CD Integration: As AI integrates into DevOps and CI/CD pipelines, performance testing will become a continuous and automated process throughout the development lifecycle. AI will run performance tests automatically with each code change, ensuring continuous monitoring and improvement.

It will allow teams to address issues before they impact end users, reducing downtime and improving user experience. AI will also suggest optimizations, providing actionable insights for performance improvement. With real-time analysis, teams can be more proactive in maintaining software health.
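To make the real-time monitoring behind Instant Issue Detection more concrete, here is a minimal sketch of one classic statistical technique, the rolling z-score. It flags a metric value that deviates sharply from its recent history. This is an illustrative assumption, not the algorithm any particular AI tool uses. The window size and the 3-standard-deviation threshold are arbitrary defaults you would tune.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags a metric value as anomalous when it lies more than `threshold`
    sample standard deviations from the mean of the last `window` values."""

    def __init__(self, window=30, threshold=3.0):
        self._history = deque(maxlen=window)  # oldest values drop off automatically
        self._threshold = threshold

    def observe(self, value):
        """Score one new value against recent history, then add it to the window."""
        is_anomaly = False
        if len(self._history) >= 2:  # stdev needs at least two points
            history = list(self._history)
            mean = statistics.mean(history)
            std = statistics.stdev(history)
            # A perfectly flat window has std 0; skip scoring rather than divide by zero.
            if std > 0 and abs(value - mean) / std > self._threshold:
                is_anomaly = True
        self._history.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingZScoreDetector(window=5, threshold=3.0)
    # Hypothetical p95 latencies (ms) streamed from a running load test.
    for latency in [100, 102, 98, 101, 99, 250]:
        if detector.observe(latency):
            print(f"anomaly: {latency} ms")  # prints "anomaly: 250 ms"
```

This sketch appends every value to the window, anomalies included. That lets the baseline adapt to genuine level shifts (for example, after a deployment), at the cost of briefly inflating the spread after a spike. Excluding flagged values makes the opposite trade-off.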

Limitations of Traditional Performance Testing

Before AI was introduced, traditional performance testing faced many challenges and limitations. No matter how experienced the tester was, teams had to handle several common challenges without the help of AI.

Some of these challenges are as follows:

- Static Resource Allocation: Traditional performance testing may not account for dynamic resource allocation, resulting in inefficient resource usage. It often fails to adjust to varying load conditions, leading to potential overloads or underutilization.

- Rigid Test Cases: Conventional methods often rely on test cases that testers write manually based on assumed user actions and traffic. These may not capture real-time variations in user behavior, leading to inaccurate response time analysis.

- Weak Traffic Simulation: Simulating realistic traffic loads can be challenging in traditional testing environments. As a result, test scenarios may not accurately reflect how the software behaves under high-traffic conditions.

- Scalability Barriers: As software applications grew in size and complexity, performance testing needed to scale accordingly. What started as tests with dozens or hundreds of users might later need to simulate thousands or millions of users.

- Missed User Scenarios: Traditional methods struggle to capture the full range of real user interactions, including varied patterns, dynamic inputs, and unexpected workflows. As a result, performance tests do not accurately reflect actual usage, leaving critical issues undetected until they occur in real-world conditions.

The testing tools capable of handling such a scale were often expensive and difficult to manage. Failing to properly predict and handle traffic spikes or heavy user loads could lead to costly downtime.


Best Practices for Using AI in Performance Testing

Below are some best practices for effectively using AI in performance testing:

- Customize to Fit: Every software project is different, so adjust AI testing parameters to match your project's performance requirements. Customized tests keep pace with project goals and with changes in the software or user behavior. Using AI to tune them helps keep them relevant and effective over time.

- Use Diverse Test Data: High-quality test data is key to accurate performance testing. To catch issues early, it should cover a range of scenarios so you can evaluate metrics such as response time, throughput, and resource utilization under different conditions. A variety of test data helps simulate real-world conditions and ensures better test coverage.

- Blend AI and Human Input: AI is good for automating repetitive tasks in performance testing, but human testers are still needed. They bring creativity and insights that AI can’t. Working together, AI can handle repetitive work while humans focus on solving complex problems and improving the testing process.

- Keep AI Updated: AI gets better with regular updates. To keep it useful, you need to retrain models with new data and create feedback loops. It helps AI stay up-to-date with software changes and find new performance issues faster.
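The Use Diverse Test Data and Keep AI Updated practices can start with something as simple as deriving the scenario mix from real traffic. The sketch below assumes sessions are available as ordered lists of page or endpoint names (a hypothetical log format). It weights each distinct journey by how often it occurs, then samples journeys for virtual users in those proportions. Re-running it on fresh logs before each test cycle is one lightweight way to keep the workload aligned with current user behavior.

```python
import random
from collections import Counter


def journey_weights(sessions):
    """Map each distinct user journey (a tuple of steps) to its share of all sessions."""
    counts = Counter(tuple(steps) for steps in sessions)
    total = sum(counts.values())
    return {journey: n / total for journey, n in counts.items()}


def plan_virtual_users(weights, n_users, seed=None):
    """Assign each simulated user a journey, sampled in proportion to observed traffic."""
    rng = random.Random(seed)  # seeded for reproducible test plans
    journeys = list(weights)
    return rng.choices(journeys, weights=[weights[j] for j in journeys], k=n_users)


if __name__ == "__main__":
    # Hypothetical sessions extracted from recent production logs.
    sessions = [
        ["home", "search", "product"],
        ["home", "search", "product"],
        ["home", "search", "product"],
        ["home", "cart", "checkout"],
    ]
    weights = journey_weights(sessions)
    print(weights)  # {('home', 'search', 'product'): 0.75, ('home', 'cart', 'checkout'): 0.25}
    plan = plan_virtual_users(weights, n_users=20, seed=42)
    print(Counter(plan))
```

Purely proportional sampling can under-represent rare but critical journeys. You may want to enforce a minimum share for scenarios that matter even when they are infrequent.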

Conclusion

AI is changing the way performance testing is done, making it faster and more efficient. It can automate tasks, predict issues before they happen, and help teams fix issues quickly. AI is also useful for accurately detecting issues in real-time, adapting to the software changes, and enhancing test coverage.

As AI becomes more deeply embedded in performance testing, it will do more than perform individual tasks: it will simplify the overall process, making testing more efficient while requiring less human input. The role of AI in performance testing is promising, and it will help teams deliver quality software more efficiently.


