Generative AI in Testing: Benefits, Use Cases and Tools

Zikra Mohammadi

Posted On: October 25, 2024

139587 Views

17 Min Read

Generative AI is a type of artificial intelligence that creates new outputs based on patterns it has learned from data. When it comes to quality assurance, Generative AI can automate various aspects of the QA process, such as test case and data generation, test suite optimization, and more.

Using Generative AI in testing, developers and testers can spot patterns and find or predict issues that can affect software quality. By catching defects early, QA teams can take action to avoid future issues and enhance software quality.

This blog will look at the role of Generative AI in testing.

TABLE OF CONTENTS

- What Is Generative AI in Testing?

- Benefits of Using Generative AI in Testing

- Use Cases of Generative AI in Software Testing

- How to Use Generative AI in Testing?

- Generative AI Tools for Software Testing

- Impact of Generative AI on QA Job Roles

- Future Trends in Generative AI for Software Testing

- Shortcomings of Generative AI in Testing

- Frequently Asked Questions (FAQs)

What Is Generative AI in Testing?

Generative AI models are trained on large datasets to discover patterns and relationships within them. They then apply what they have learned to create new, realistic content that resembles human-produced work. In the context of software testing, these models can be used to produce test cases and test data, and even to forecast potential issues using predictive models.

There are different types of Generative AI models:

- Generative Adversarial Networks (GANs): They create realistic test scenarios by simulating real-world conditions. This helps expand test coverage and find edge cases that traditional testing approaches may not handle as effectively.

- Transformers: They deal efficiently with massive datasets and can be used to generate test cases. Transformers also excel at natural language processing tasks, making them ideal for tasks such as creating user scenarios and test scripts.

- Variational Autoencoders (VAEs): They could be helpful in generating varied synthetic datasets or visual data for testing UI elements.

- Recurrent Neural Networks (RNNs): They generate sequential test data, making them ideal for testing software applications with time-series or sequence-based inputs. They can simulate user interactions over time, providing insights into how an application works when used continuously.
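To make the idea of sequential test data more concrete, here is a minimal sketch of simulating user interactions over time. It does not use an RNN: a simple Markov chain with hand-written probabilities stands in for a trained sequence model. The states, the probabilities, and the `generate_session` helper are all hypothetical and serve only as an illustration.

```python
import random

# Hypothetical transition table for a small shopping app: each state maps to
# the actions a user might take next, with illustrative probabilities.
TRANSITIONS = {
    "login": [("browse", 0.8), ("logout", 0.2)],
    "browse": [("add_to_cart", 0.5), ("browse", 0.3), ("logout", 0.2)],
    "add_to_cart": [("checkout", 0.6), ("browse", 0.4)],
    "checkout": [("logout", 1.0)],
}


def generate_session(rng, max_steps=20):
    """Return one simulated user session as an ordered list of actions."""
    state = "login"
    session = [state]
    # Stop when the user logs out or the session reaches the step limit.
    while state != "logout" and len(session) < max_steps:
        actions, weights = zip(*TRANSITIONS[state])
        state = rng.choices(actions, weights=weights, k=1)[0]
        session.append(state)
    return session


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed keeps the generated sessions reproducible
    for _ in range(3):
        print(" -> ".join(generate_session(rng)))
```

Each generated session can be replayed against the application as an ordered sequence of steps. A learned model would replace the fixed table with probabilities derived from real usage data.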

Benefits of Using Generative AI in Testing

Generative AI in testing has various benefits, including increased productivity, accuracy, and overall software quality. Here are several significant benefits:

- Improved automation and speed: Generative AI improves the testing process by automating the creation of test scripts, resulting in shorter development cycles and faster time-to-market for software releases.

- Enhanced test coverage: Generative AI provides broader test coverage by creating several scenarios, including edge cases. It lowers the risk of undetected issues, leading to more reliable and robust software.

- Reduced human error: Generative AI reduces human error by automating activities that can be complex and repetitive. When combined with human insights, it leads to more accurate and consistent test results, hence enabling higher-quality software.

- Higher cost efficiency: Generative AI can improve cost efficiency by reducing manual testing efforts in large-scale projects.

- Improved usability: Combined with human judgement, Generative AI can identify usability issues by analyzing end-user interactions, helping teams build more intuitive, user-friendly software applications.

- Improved localization: Generative AI can help create test cases across various languages and regions to make the software application accessible and usable to a global audience.

- Reduced test maintenance effort: Generative AI updates test cases automatically as the software application evolves or its codebase is updated, minimizing manual maintenance.

- Improved performance testing: Generative AI can create various load conditions and user behaviors. This can enhance performance testing when performed with tools like JMeter.

Use Cases of Generative AI in Software Testing

Let’s see the use cases of Generative AI in software testing:

- Automated test case generation: Generative AI can analyze existing data, code, and user interactions to generate diverse and thorough test cases. This method involves evaluating the software application’s functioning and creating test scenarios for diverse use cases, including edge cases. To learn more, check out this blog on AI-based test case generation.

- Test data generation: Generative AI generates synthetic test data, which is crucial for the effective execution of testing in an environment where gathering real data is hard. You can check out this blog to learn more about how to use Generative AI for efficient test data generation.

- Predictive bug detection: Machine learning models, including predictive analytics, can identify error-prone areas by analyzing historical defect data. Generative AI supports this by generating test scenarios that focus on these areas.

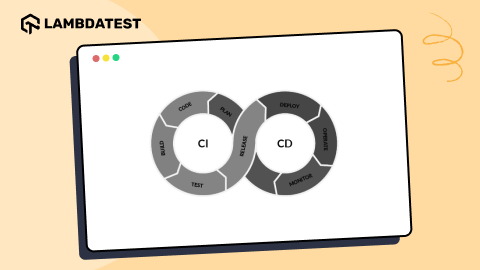

- Continuous testing: Generative AI can be combined with CI/CD tools to support continuous testing, updating existing test cases and generating new ones as the software evolves.

- Code reviews: Generative AI can help enhance code review processes by automatically scanning code for security vulnerabilities, compliance issues, and coding-standard violations. It can help detect security risks in the codebase, such as SQL injection points, cross-site scripting vulnerabilities, and other known security flaws.

- Automated documentation: Generative AI can be used to automate the preparation of test documentation, such as test plans, test cases, and test reports. Such automation helps keep the documentation consistent, up to date, and complete. It also frees up time for testers to spend on actual testing activities instead of administrative ones.
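The predictive bug detection use case above can be illustrated with a small sketch. It ranks modules by how often they appear in historical defect data, then splits a test-generation budget in proportion. The defect log format, the module names, and both helper functions are hypothetical. The allocation step only decides where a generative model would be asked for more scenarios and does not call any model itself.

```python
from collections import Counter


def rank_modules_by_defects(defect_log):
    """Count historical defects per module, most error-prone first."""
    counts = Counter(entry["module"] for entry in defect_log)
    return counts.most_common()


def allocate_test_budget(ranking, total_tests):
    """Split a test-generation budget in proportion to past defect counts."""
    total_defects = sum(count for _, count in ranking)
    if total_defects == 0:
        return {}
    # Every module with at least one past defect gets at least one new test.
    return {
        module: max(1, round(total_tests * count / total_defects))
        for module, count in ranking
    }


if __name__ == "__main__":
    # Illustrative defect history; real data would come from an issue tracker.
    defect_log = [
        {"id": 1, "module": "checkout"},
        {"id": 2, "module": "login"},
        {"id": 3, "module": "checkout"},
        {"id": 4, "module": "search"},
        {"id": 5, "module": "checkout"},
    ]
    ranking = rank_modules_by_defects(defect_log)
    print(ranking)
    print(allocate_test_budget(ranking, total_tests=20))
```

Because of rounding, the allocated counts may not add up exactly to the requested total. A production setup would likely also weight defects by severity and recency.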

How to Use Generative AI in Testing?

Generative AI requires a well-planned implementation strategy to realize its full potential. Here are essential considerations for successful implementation:

- Define objectives: Begin by clearly defining the specific objectives you want to achieve by using Generative AI tools in your testing process. Decide whether your goal is increasing test coverage, reducing manual testing, enhancing issue detection, or a combination of these.

- Choose the right tool: Select the Generative AI tool that best suits your needs. Each AI tool has specific strengths, so evaluate each tool’s capabilities and how effectively it works with your current tech stack.

- Analyze test infrastructure requirements: Analyze your current test infrastructure to ensure that it can handle the AI tool’s requirements. Some AI tools may require cloud-based settings or hardware upgrades. If your organization lacks the required resources, you may need to invest in infrastructure changes or migrate to cloud solutions that are capable of handling the AI tool’s computational demands.

- Train your team: Provide comprehensive training for your team to efficiently use Generative AI tools. Training should include the principles of Generative AI, including how to interact with the tools, the testing process, and how to understand AI-driven findings. Your team should also be trained on how to debug AI-generated tests and deal with scenarios that may still require human intervention.

- Monitor and evaluate progress: Set up a continuous monitoring procedure to track the effectiveness of Generative AI in your testing cycle. Review the performance of AI-driven tests regularly, keeping an eye on key metrics like bug detection rates and test execution duration, and resolve any issues or shortcomings as soon as possible. Doing so helps ensure that the AI tools deliver the expected results.
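As a rough illustration of the monitoring step, the sketch below computes a few summary metrics from a list of test run records. The `RunRecord` structure and the metric definitions are assumptions made for this example. In particular, "bug detection rate" is defined here as confirmed defects per run, and your team may define it differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RunRecord:
    """One execution of an AI-generated test (hypothetical record format)."""
    name: str
    duration_seconds: float
    failed: bool
    confirmed_bug: bool  # True if the failure was triaged as a real defect


def summarize(runs):
    """Compute simple health metrics for a batch of AI-driven test runs."""
    failures = [r for r in runs if r.failed]
    confirmed = [r for r in failures if r.confirmed_bug]
    return {
        "total_runs": len(runs),
        "mean_duration_seconds": mean(r.duration_seconds for r in runs) if runs else 0.0,
        # Share of runs that exposed a confirmed defect.
        "bug_detection_rate": len(confirmed) / len(runs) if runs else 0.0,
        # Share of failures that turned out not to be real defects.
        "false_positive_rate": (
            (len(failures) - len(confirmed)) / len(failures) if failures else 0.0
        ),
    }


if __name__ == "__main__":
    runs = [
        RunRecord("login_rejects_bad_password", 2.0, True, True),
        RunRecord("cart_total_rounding", 4.0, True, False),
        RunRecord("search_empty_query", 6.0, False, False),
        RunRecord("checkout_happy_path", 8.0, False, False),
    ]
    print(summarize(runs))
```

Tracking these numbers from one release to the next makes it easier to notice when AI-generated tests become slower or noisier.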

Generative AI Tools for Software Testing

Generative AI is transforming software testing by automating different processes and increasing accuracy and efficiency. Several tools have emerged that use Generative AI to transform the way tests are conducted.

The following are some popular ones:

KaneAI

KaneAI by LambdaTest is a GenAI native QA Agent-as-a-Service platform for high-speed quality engineering teams. It leverages natural language processing to develop, automate, and manage tests, allowing users to create and adapt tests effortlessly through simple conversational input.

It supports multi-language code export, intelligent test planning, and AI-powered debugging, offering a comprehensive solution for modern testing needs.

Key Features:

- Effortless test creation: Generates and refines tests through natural language, simplifying the test creation process.

- Intelligent test planner: Creates and automates test steps based on high-level objectives, ensuring they align with your project goals.

- Multi-language code export: Converts automated tests into various programming languages with automation testing frameworks like Selenium and Playwright, offering flexibility across different environments.

- 2-way test editing: Edits tests in either natural language or code, with changes automatically synchronized.

- Smart show-me mode: Converts your actions into natural language instructions.

Functionize

Functionize is a testing tool that uses artificial intelligence and machine learning methods to automate testing. It examines and learns from user activity to create tests that replicate those activities. The tool also has self-healing technology, which detects and fixes errors automatically.

Key Features:

- Intelligent test creation: Automates the process of producing test cases by understanding application activity.

- Self-healing tests: Automatically modifies test scripts when the application changes, ensuring that tests continue to work even after upgrades.

- AI visual testing: Offers full-page screenshot visual comparison using computer vision techniques.

Testim

Testim is an automated testing platform that lets you quickly create stable, AI-powered tests and provides tools to help you scale quality. It uses machine learning to build and manage automated tests.

Its adaptability to UI changes ensures that automated tests are resilient even in dynamic environments. Testim’s distinguishing feature is the use of smart locators, which automatically react to UI changes, decreasing the need for manual intervention.

Key Features:

- Smart locators: Identifies and adjusts to changes in UI elements, ensuring that small interface changes do not break tests.

- Self-healing tests: Monitors the user interface and changes test scripts as needed, reducing the requirement for manual updates.

- AI-driven test authoring: Expedites test creation using AI to create custom test steps.

mabl

mabl is a modern, low-code, intelligent test automation platform that improves software quality through web, mobile, API, accessibility, and performance testing. It uses machine learning and artificial intelligence to deliver comprehensive testing solutions that simplify test preparation, execution, and maintenance.

Key Features:

- Auto-healing: Uses artificial intelligence to detect and adapt to changes in the application’s user interface. When the UI changes, its auto-healing capability updates the test scripts, guaranteeing that tests are still valid without manual intervention.

- AI visual testing: Compares screenshots of the software application across test runs. It detects visual flaws and shows any modifications that may impact the user experience.

- Performance monitoring: Uses AI to examine the software application’s performance under a variety of scenarios, identifying bottlenecks and faults.

ACCELQ

ACCELQ is an AI-powered cloud platform that automates and manages testing across enterprise software applications with codeless automation. It supports automation for web, mobile, API, and desktop systems and uses AI to deliver reliable, long-term automation for consistent test execution.

Key Features:

- Adaptive relevance engine: Accelerates test scenario creation by automatically suggesting the next steps in the flow.

- AI-powered root cause analysis: Categorizes errors and instantly recommends fixes.

- Smart locators: Creates self-healing locators that adapt to UI changes in evolving software applications.

These are some of the popular tools for Generative AI testing. To explore more tools, refer to this blog on AI testing tools.

Impact of Generative AI on QA Job Roles

The rise of Generative AI in the QA industry is set to change job roles and work dynamics, bringing a significant shift in the responsibilities of QA experts. While artificial intelligence will handle repetitive and time-consuming tasks, human involvement in the testing process will require a change in skill sets and knowledge.

- Roles of manual testers: Rather than a complete reduction in demand for manual testers, their roles will evolve. Manual testers will focus more on exploratory and user-experience testing, areas where human creativity and critical thinking are essential.

- Emphasis on AI supervision and analysis: Human testers will manage AI-driven workflows by validating test results, ensuring ethical use of AI, identifying AI limitations, and making sure AI-driven outcomes align with real-world expectations.

- Increased demand for AI-experienced QA professionals: The rise of AI in QA, not just Generative AI, will increase the demand for QA professionals with broader AI expertise, covering both generative models and predictive analytics to create more robust, intelligent testing frameworks.

- Shift toward strategic and analytical roles: The broader adoption of AI (not just Generative AI) will shift QA roles towards more strategic, technical, and analytical functions, requiring proficiency in AI, coding, and advanced test automation methodologies.

- Scalability and flexibility: AI testing technologies are scalable and flexible, allowing QA teams to easily manage larger and more complex projects. QA experts must adapt to these scalable solutions, managing and optimizing testing procedures to meet increasing demands and different project requirements.

Beyond evaluating AI-generated results, QA professionals will need to optimize AI models, handle failures or false positives, and refine AI-driven test strategies to ensure comprehensive software quality.

Future Trends in Generative AI for Software Testing

According to the Future of Quality Assurance Survey Report, 29.9% of experts believe AI can enhance QA productivity, while 20.6% expect it to make testing more efficient. Furthermore, 25.6% believe AI can effectively bridge the gap between manual and automated testing.

These figures point to a future in which Generative AI will not only increase productivity and efficiency but also help to smoothly integrate manual and automated testing.

Let’s look at some future trends in using Generative AI for software testing.

- Integration with DevOps and CI/CD pipelines: Generative AI will be seamlessly integrated into DevOps practices and Continuous Integration/Continuous Delivery pipelines. This integration will automate test processes and accelerate the continuous delivery of quality software. By embedding AI into CI/CD pipelines, organizations can ensure that automated tests run with every code change, accelerating development cycles without compromising robust quality assurance.

- Advanced anomaly detection using predictive analytics: Generative AI, using predictive analytics, will become much more effective at finding anomalies in software behavior well before they become large-scale issues. By analyzing past data and the real-time performance of the software, AI can forecast possible issues and enable early mitigation measures, improving software reliability and security.

- Natural Language Processing (NLP) for test case creation: Natural Language Processing will allow Generative AI to comprehend requirements written in plain language and automatically create test cases. This trend will speed up the testing process, minimize human error, and enable non-technical stakeholders to make more effective contributions to test case creation.

- Dynamic test environment configuration: Generative AI will dynamically configure test environments based on the specific requirements of the software being tested. By optimizing resource use and modifying configurations based on testing requirements, this trend will improve test execution efficiency and scalability.

- Enhanced reporting and analytics: AI will improve testing process reporting and analytics, providing more in-depth insights into software performance and optimization opportunities. This increased visibility will allow teams to make more educated decisions and continuously improve their testing strategies.
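The NLP-driven test case creation trend described in this section can be sketched as a three-step flow: build a prompt from a plain-language requirement, send it to a model, and parse the structured reply. No specific model or vendor API is assumed here. `fake_model` is a hypothetical stand-in that returns a canned reply, and the prompt wording and JSON keys are illustrative choices.

```python
import json

# Illustrative prompt; the wording and the requested keys are assumptions.
PROMPT_TEMPLATE = (
    "You are a QA engineer. Write test cases for the requirement below.\n"
    "Respond with a JSON list of objects with keys: title, steps, expected.\n\n"
    "Requirement: {requirement}"
)


def build_prompt(requirement):
    """Turn a plain-language requirement into a model prompt."""
    return PROMPT_TEMPLATE.format(requirement=requirement.strip())


def fake_model(prompt):
    """Hypothetical stand-in for a real model call; returns a canned JSON reply."""
    return json.dumps([
        {
            "title": "Reset link is emailed to a registered user",
            "steps": ["Open the reset page", "Submit a registered email address"],
            "expected": "A reset email is sent to that address",
        }
    ])


def parse_test_cases(raw_reply):
    """Keep only well-formed test cases from the model's JSON reply."""
    cases = json.loads(raw_reply)
    required = {"title", "steps", "expected"}
    return [c for c in cases if isinstance(c, dict) and required.issubset(c)]


if __name__ == "__main__":
    prompt = build_prompt("Users can reset their password via email.")
    for case in parse_test_cases(fake_model(prompt)):
        print(case["title"], "->", case["expected"])
```

In practice, `fake_model` would be replaced by a call to whichever model your team uses, and a tester would still review the parsed cases before adding them to the suite.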

Shortcomings of Generative AI in Testing

As organizations seek to adopt Generative AI in testing, it is critical to understand the possible shortcomings that may occur during the implementation phase, as well as how to efficiently manage them.

The following are some of the shortcomings related to introducing Generative AI into software testing workflows:

- Creation of irrelevant or useless tests: One of the primary problems with Generative AI in software testing is its limited knowledge of the context and complexity of individual software applications. It can result in the development of irrelevant or absurd tests that do not reflect the actual needs of the application under test. Constant refinement and validation of these AI-generated tests are required to ensure they provide value.

- High computational demands: Generative AI models, particularly complicated ones such as Generative Adversarial Networks or large Transformer-based models, need substantial computational resources for both training and execution. This is a challenge, particularly for smaller organizations that may lack access to the required infrastructure. Balancing AI’s potential with available resources is critical for successful implementation.

- Adapting to new workflows: The integration of Generative AI into QA processes often requires adjustments to standard workflows. AI-based tools may necessitate training for existing QA teams, and there may be resistance to implementing these new methods. Overcoming this obstacle requires a clear understanding of the benefits of AI integration, as well as extensive training and support to ease the transition.

- Dependence on quality data: Generative AI is dependent on high-quality, diverse, and representative training data to perform appropriately. Poor or biased datasets lead to inaccurate or ineffective tests. Ensuring that high-quality data is available and well-managed is key to having AI produce accurate and relevant results.

- Challenges in understanding AI-generated tests: While Generative AI can automate the creation of tests, evaluating them can be difficult, particularly when they fail. QA teams may require additional tools or training to properly comprehend AI-generated tests and fix errors, ensuring that the AI’s output provides useful insights.

- Ethical considerations: As Generative AI continues to transform QA testing, it raises significant ethical concerns in areas such as business and privacy. While AI can provide significant benefits, it is critical to address ethical considerations to ensure fair and responsible use.
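One way to picture the validation of AI-generated tests is a simple gate that screens candidates before they reach the suite. The sketch below assumes API-style test cases represented as dictionaries. The field names, the rejection rules, and the `validate_generated_tests` helper are hypothetical, and a real gate would apply checks specific to the application under test.

```python
def validate_generated_tests(candidates, known_endpoints):
    """Split AI-generated test cases into accepted and rejected lists.

    A case is rejected if it targets an endpoint the application does not
    expose, lacks an expected status, or duplicates an accepted case.
    """
    accepted, rejected = [], []
    seen = set()
    for case in candidates:
        # Key used to detect duplicates among accepted cases.
        key = (case.get("endpoint"), case.get("method"), str(case.get("payload")))
        if case.get("endpoint") not in known_endpoints:
            rejected.append((case, "unknown endpoint"))
        elif not case.get("expected_status"):
            rejected.append((case, "missing expected result"))
        elif key in seen:
            rejected.append((case, "duplicate"))
        else:
            seen.add(key)
            accepted.append(case)
    return accepted, rejected


if __name__ == "__main__":
    # Illustrative candidates, as a model might return them.
    candidates = [
        {"endpoint": "/login", "method": "POST", "payload": {"user": "a"}, "expected_status": 200},
        {"endpoint": "/login", "method": "POST", "payload": {"user": "a"}, "expected_status": 200},
        {"endpoint": "/teleport", "method": "GET", "payload": None, "expected_status": 200},
        {"endpoint": "/cart", "method": "GET", "payload": None},
    ]
    accepted, rejected = validate_generated_tests(candidates, {"/login", "/cart"})
    print(len(accepted), "accepted")
    for case, reason in rejected:
        print("rejected:", case["endpoint"], "-", reason)
```

Rejected cases and their reasons can be handed to a tester for review, which keeps a human in the loop instead of silently discarding the model's output.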

You can also refer to this blog to learn how to improve QA testing with Generative AI.

Conclusion

Generative AI in testing can greatly improve efficiency and software quality. Automating complex procedures and enabling more advanced testing methodologies allows teams to focus on developing more effective test cases and delivering robust software applications more quickly. Though Generative AI has some shortcomings, strategically incorporating Generative AI into QA processes can result in more efficient, accurate testing.

Frequently Asked Questions (FAQs)

What is Generative AI for testing code?

Generative AI for testing code automates the creation of test cases and test data by analyzing patterns in existing code. It can also identify potential bugs and optimize code quality.

What is GenAI in STLC?

GenAI in the Software Testing Life Cycle (STLC) enhances automation by generating test scripts, predicting defects, and speeding up test execution. It streamlines the testing process, reducing manual intervention.

How to use Generative AI in performance testing?

Generative AI in performance testing can simulate various user behaviors and load conditions, helping teams assess how applications perform under different scenarios. It leads to deeper insights into system performance and optimization.

How can Generative AI be used in software testing?

Generative AI in testing automates tasks like test case generation, bug prediction, and test data creation. It increases testing efficiency, accuracy, and coverage by reducing human effort.
