Unleashing the Potential of AI in Testing – Future of Quality Assurance

Ilam Padmanabhan

Posted On: October 26, 2023


Nearly every company in today’s business landscape relies heavily on software (and hardware), whether it sells burgers or manufactures race cars. The transition to all companies becoming ‘primarily tech companies’ is almost complete. And the most popular tech word today? No prize for guessing: it is AI.

Hackers use AI to break the security layers. E-commerce stores use AI to increase customer purchases. If you ask ChatGPT, it’ll give you another million ways AI can be used. So, regardless of where an organization sits on the spectrum of ‘I love AI’ to ‘I hate AI’, they cannot avoid dealing with it anymore.

While AI has quietly made its way into the software engineering world, it hasn’t permeated the testing discipline as much as it has the other disciplines within software engineering.

Appropriate use of AI can not only accelerate the quality assurance process but also improve the overall quality culture within the organization.

Note: I have used the term AI to include all related technologies – AI, Machine Learning, NLP, and advanced automated systems. These technologies collectively represent the evolving landscape of engineering and not just one tech.

Predictive Analysis in Testing

Imagine a critical moment in a software development cycle: your organization is on the verge of releasing the next major update. The entire team has worked tirelessly to reach this point, and morale is high. However, there’s one individual who seems less jubilant – an experienced tester. Testers are often seen as the bearers of pessimism, but in this case, the concern seems distinct.

The QA lead takes notice and asks, “We’ve identified potential issues and risks and received the green light from the leadership. What’s bothering you?”

This scenario may sound familiar to many of us who have been in the tester’s seat. When prompted, the typical response might be something like, “I can’t put my finger on it, but I have a nagging feeling that this specific module or component isn’t as robust as our test results suggest. We never manage to run 100% of all possible tests, and there’s always something that emerges in production. We’ve encountered problems with it in the last few releases, but…”

What might seem like a “gut feeling” isn’t merely a subjective hunch. It’s based on a wealth of data and the processing power we’ve accumulated over time. Our brains excel at crunching this data, far better than we can explain how they do it.

If we could think of ourselves (testers) as advanced robots, what we’re essentially conveying to our manager is that we’ve analyzed vast volumes of data, and the prevailing trends indicate a potential issue in this specific module. By combining our experience (data) with the capacity to forecast the future (computation using available data), we can estimate the likelihood of a failure with a certain degree of confidence.

But as humans, we lack the computing power (and the reliable memory) needed to predict the future with certainty and to pinpoint the exact location and nature of potential issues.

Let’s play AI

What if, metaphorically speaking, we were to be struck by lightning and suddenly granted AI superpowers?

Why did that “gut feeling” arise in the first place? In our past experiences, we’ve observed that:

- Test environments are never entirely stable.

- Test environment configurations don’t perfectly match production.

- Test data doesn’t sync up precisely with production data.

- New synthetic data doesn’t fully represent all potential production scenarios.

- There’s never sufficient time to run 100% of test combinations.

- Issues have surfaced in production that we never even considered testing for.

And the list goes on.

What if we could convert this experiential knowledge into data comprehensible by an AI system? We’d feed it:

- Code and configurations down to the smallest detail

- Historical test cases and results

- Version changes and code increments

- Issues encountered during deployment and rollout

- A comprehensive business use case matrix

- All possible test data combinations

- Past defects

- Records of past environmental disturbances

- And everything else we have in our repository

You can see where I am going with this! If you know everything about the past, the future is something you can predict with high confidence!

AI systems can do the same, and more!
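To make the idea of turning history into a forecast a little more concrete, here is a minimal Python sketch. It is not how any particular AI tool works; it simply estimates, per module, the likelihood of a production defect from past releases. The `ModuleHistory` record, its fields, and the sample numbers are all hypothetical, and Laplace (add-one) smoothing is my own choice for handling modules with little history.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    """Hypothetical per-module record built from past releases."""
    name: str
    releases: int              # releases in which the module changed
    releases_with_defect: int  # of those, how many leaked a production defect


def failure_likelihood(h: ModuleHistory) -> float:
    """Estimate the chance of a defect in the next release.

    Uses Laplace (add-one) smoothing so that modules with very little
    history are not scored as exactly 0.0 or 1.0.
    """
    return (h.releases_with_defect + 1) / (h.releases + 2)


def rank_by_risk(history: list[ModuleHistory]) -> list[tuple[str, float]]:
    """Return (module, likelihood) pairs, riskiest first."""
    scored = [(h.name, failure_likelihood(h)) for h in history]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up sample data, for illustration only.
history = [
    ModuleHistory("payments", releases=10, releases_with_defect=4),
    ModuleHistory("search", releases=10, releases_with_defect=1),
    ModuleHistory("profile", releases=2, releases_with_defect=0),
]

for name, score in rank_by_risk(history):
    print(f"{name}: {score:.2f}")
```

A real system would draw on far more signals than a defect count, but even this toy version turns the tester's "nagging feeling" about a module into a number that can be discussed.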

Now let’s look at other areas in testing where AI can play a significant role.

How AI can Enhance Testing – The Future State

A quick note before you immerse yourself in how AI will change testing beyond recognition: AI’s current maturity, and the ability to integrate it with other systems, is still nascent. I describe ‘the potential’ here, not the current performance of AI in testing.

Improve Test Case Writing and Prioritization

AI has the potential to move us away from the currently popular ‘risk-based testing’ approach, in which testers must pick and choose what to test because time and resources are limited. I expect that, with intelligent use of AI, the primary role of testers will be to verify that their AI engines have done the job correctly. Testers would complement the AI and cover the gaps, rather than create everything from scratch.

By integrating the existing testing tool and the backlog management tool into an AI engine, we could also potentially remove the concept of test planning (or at least the bulk of it).

Automation/ Non-Functional Testing

We already know AI can write code, so we won’t spend much time here. Future test experts will certainly need ‘AI skills’, much as crane operators emerged when machines replaced manual lifting. AI will not do the testing and sign off on your release, but with it you can lift far more testing work than ever before.

Note for managers: If you are a manager, don’t fear AI. Your teams are already using it anyway to write and correct their code (probably on their personal devices, if your organization doesn’t allow it). Embrace the future and help your teams get skilled. Imagine all the time your people can save by not having to type all that code by hand!

Test Data Management

Test data management is one of the most under-rated testing disciplines. In my experience, the best testing teams have this in common – they are extremely efficient at creating and managing test data. While most data management teams use fancy tools to create/maintain data, the level of efficiency possible with AI is truly mind-boggling.

Imagine your AI engine reading all your test cases and preparing the data before you even need to ask. AI can already do this, if you let it and give it the data it needs!

Reporting & Communication

Everyone wants to know what is happening, what is wrong, and when things will be fixed and retested. The trend of ‘more meetings’ continues, and testers are no exception. Getting an AI engine to present dashboards and metrics can make the entire organization more efficient, freeing up time to talk about ‘how to improve’ rather than ‘what is wrong’.

AI can also present reports in a highly visual, easy-to-understand format, making it simpler for stakeholders to comprehend the testing status at a glance. This could lead to more effective communication and collaboration between the testing team, project stakeholders, and customers.

Leveraging AI to Enhance CI/CD Pipelines

With the drive towards DevOps culture, we cannot talk about testing without talking about CI/CD. Let’s explore some of the potential areas where AI can make a significant impact in improving CI/CD processes and methods. (Remember, I’m talking about potential, not current performance.)

Automated Testing

AI can automatically generate and execute test cases based on simple inputs. Furthermore, it can update the existing code base in response to code changes. Machine learning algorithms can identify which tests are critical and prioritize them (based on simple user input), optimizing test execution time.

AI testing agents such as KaneAI take this a step further, bringing advanced AI capabilities to your testing process.

KaneAI is a GenAI-native QA Agent-as-a-Service platform, built specifically for high-speed quality engineering teams. It helps create, manage, and debug tests using natural language commands, streamlining the automation process while enhancing test data management to make testing faster and more precise.
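Setting any specific product aside, the test prioritization idea from this section can be illustrated with a small Python sketch. It is a simple heuristic rather than a machine learning model: it ranks tests by historical failure rate and boosts those that cover files touched by the current change. The dictionary keys, the sample data, and the `CHANGE_BOOST` weight are all assumptions made for illustration.

```python
CHANGE_BOOST = 1.0  # assumed weight: covering changed code outranks history


def prioritize(tests, changed_files):
    """Order tests so those most likely to fail on this change run first.

    tests: list of dicts with hypothetical keys
        "name", "runs", "failures", "covers" (a set of file paths).
    changed_files: set of file paths touched by the change under test.
    """
    def score(test):
        failure_rate = test["failures"] / test["runs"] if test["runs"] else 0.0
        touches_change = bool(test["covers"] & changed_files)
        return failure_rate + (CHANGE_BOOST if touches_change else 0.0)

    return sorted(tests, key=score, reverse=True)


# Made-up sample data, for illustration only.
tests = [
    {"name": "test_login", "runs": 100, "failures": 2, "covers": {"auth.py"}},
    {"name": "test_checkout", "runs": 100, "failures": 10, "covers": {"cart.py", "pay.py"}},
    {"name": "test_search", "runs": 100, "failures": 30, "covers": {"search.py"}},
]

ordered = prioritize(tests, changed_files={"pay.py"})
print([t["name"] for t in ordered])
# prints ['test_checkout', 'test_search', 'test_login']
```

A learned model would replace the hand-picked score with one trained on past runs, but the pipeline around it (score, sort, run the top of the list first) would look much the same.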

Code Quality and Static Analysis

AI-powered static code analyzers can detect code smells, security vulnerabilities, and maintainability issues at an early stage of development. AI can also suggest code improvements, such as refactoring suggestions, based on best practices and coding standards.

Anomaly Detection & Predictive Analysis

AI models are adept at identifying unusual patterns or behaviors in CI/CD pipelines, such as performance regressions or unexpected errors. They can trigger alerts and even halt deployments if necessary. Additionally, AI can analyze historical pipeline data to predict potential bottlenecks or issues, allowing teams to proactively address them.
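As a rough illustration of anomaly detection on pipeline data, the Python sketch below flags a build whose duration sits far outside the historical spread, using a plain z-score. This is a basic statistical check standing in for the richer models described above; the `threshold` of 3 standard deviations and the sample durations are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # history is constant; any change is unusual
    return abs(latest - mu) / sigma > threshold


# Made-up build durations in seconds, for illustration only.
build_seconds = [310, 295, 305, 300, 298, 302, 307, 299]

print(is_anomalous(build_seconds, 304))  # prints False
print(is_anomalous(build_seconds, 480))  # prints True
```

The same check could be wired to raise an alert or fail a pipeline stage, which is the "halt deployments if necessary" behaviour in its simplest form.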

Optimizing Build and Deployment Times

AI algorithms have the capacity to identify opportunities for parallelizing or optimizing build and deployment tasks. This results in a reduction in overall pipeline execution time, far surpassing the efficiency achievable by humans alone.
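Finding what can run in parallel starts with the dependency graph of the pipeline. The sketch below uses Python's standard `graphlib.TopologicalSorter` to group tasks into stages whose members have no unmet dependencies. The task names and the `pipeline` mapping are hypothetical, and this is classic graph analysis rather than AI; an AI-driven optimizer would presumably build on the same kind of graph.

```python
from graphlib import TopologicalSorter


def parallel_stages(dependencies):
    """Group tasks into stages that can each run concurrently.

    dependencies maps a task name to the set of tasks that must finish
    before it can start.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything runnable right now
        stages.append(ready)
        sorter.done(*ready)  # mark the whole stage as finished
    return stages


# Hypothetical pipeline, for illustration only.
pipeline = {
    "compile": set(),
    "lint": set(),
    "unit_tests": {"compile"},
    "package": {"compile"},
    "integration_tests": {"package"},
    "deploy": {"unit_tests", "integration_tests", "lint"},
}

print(parallel_stages(pipeline))
# prints [['compile', 'lint'], ['package', 'unit_tests'], ['integration_tests'], ['deploy']]
```

Here six sequential steps collapse into four stages, which is the kind of saving the paragraph above refers to.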

Infrastructure Scaling

AI can monitor resource utilization during CI/CD pipeline runs and automatically adjust infrastructure capacity to meet demand, effectively optimizing costs and performance. While some human intervention may be involved in the current process, AI takes this to a new level.
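The simplest form of automatic capacity adjustment is a proportional rule: size the pool so that utilization moves toward a target. The Python sketch below shows that rule for a pool of CI runners. The function name, the 60% target, and the pool limits are assumptions, and a predictive system would add forecasting on top of a rule like this.

```python
def desired_runners(current, utilization_pct, target_pct=60,
                    min_runners=1, max_runners=20):
    """Return the runner count that would bring utilization near target.

    current: number of runners in the pool right now.
    utilization_pct: observed average utilization, as a whole percentage.
    """
    if current <= 0:
        return min_runners
    # Integer ceiling division: round up so we never under-provision.
    wanted = (current * utilization_pct + target_pct - 1) // target_pct
    return max(min_runners, min(max_runners, wanted))


print(desired_runners(4, 90))    # prints 6  (scale up)
print(desired_runners(4, 30))    # prints 2  (scale down)
print(desired_runners(10, 150))  # prints 20 (capped at max_runners)
```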

Automatic Rollback

In the event of unusual behavior or errors detected post-deployment, AI can automatically initiate rollback procedures, reducing the manual effort required to handle such situations.
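A rollback trigger can be sketched as a comparison between the error rate before and after a deployment. The Python example below is a hand-written rule, not a trained model, and every threshold in it (`max_ratio`, `min_requests`, `RATE_FLOOR`) is an assumption that a real team would tune to its own traffic.

```python
RATE_FLOOR = 0.001  # assumed floor so a near-zero baseline is not oversensitive


def should_roll_back(baseline_errors, baseline_requests,
                     current_errors, current_requests,
                     max_ratio=2.0, min_requests=500):
    """Return True if the new release's error rate warrants a rollback."""
    if current_requests < min_requests:
        return False  # too little traffic to judge the release yet
    baseline_rate = (baseline_errors / baseline_requests
                     if baseline_requests else 0.0)
    current_rate = current_errors / current_requests
    # Roll back when errors exceed max_ratio times the (floored) baseline.
    return current_rate > max(baseline_rate, RATE_FLOOR) * max_ratio


# Baseline: 50 errors in 10,000 requests (0.5%).
print(should_roll_back(50, 10_000, 30, 1_000))  # prints True  (3.0% errors)
print(should_roll_back(50, 10_000, 6, 1_000))   # prints False (0.6% errors)
print(should_roll_back(50, 10_000, 3, 100))     # prints False (too few requests)
```

The function only makes the decision; invoking the actual rollback would depend on the deployment tooling in use.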

Test Environment Management

AI can support and manage test environments by provisioning, scaling, and tearing down environments as needed. This reduces resource waste and cuts costs while ensuring that test environments align with requirements.

Release Planning and Risk Assessment

AI can assist in assessing the risk associated with a release by analyzing factors such as code changes, test coverage, and historical data. This empowers teams to make informed decisions about deployment and helps identify potential issues before they escalate.
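As a toy version of such a risk assessment, the Python sketch below combines the three factors named above (code changes, test coverage, and historical data) into a single score. The weights, the 2,000-line churn cap, and the decision bands are invented for illustration; a real model would learn them from past releases.

```python
def release_risk(lines_changed, coverage, past_failure_rate):
    """Combine three signals into a 0..1 risk score using assumed weights.

    coverage and past_failure_rate are fractions between 0 and 1.
    """
    churn = min(lines_changed / 2000, 1.0)  # assumption: 2000+ lines is max churn
    uncovered = 1.0 - coverage
    score = 0.4 * churn + 0.3 * uncovered + 0.3 * past_failure_rate
    return round(score, 3)


def recommendation(score):
    """Map a risk score to a suggested action (the bands are assumptions)."""
    if score >= 0.6:
        return "hold"
    if score >= 0.3:
        return "release with extra checks"
    return "release"


small_change = release_risk(lines_changed=500, coverage=0.8, past_failure_rate=0.1)
big_change = release_risk(lines_changed=3000, coverage=0.4, past_failure_rate=0.5)
print(small_change, recommendation(small_change))
print(big_change, recommendation(big_change))
```

The value of a score like this lies less in the number itself than in giving the team a shared, repeatable basis for the go or no-go conversation.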

Natural Language Processing (NLP)

NLP-powered chatbots or virtual assistants can provide real-time status updates, answer questions, and offer guidance to developers and teams throughout the CI/CD process, improving communication and collaboration.

Security Scanning

AI can run automated security scans during the CI/CD pipeline, identifying vulnerabilities and compliance issues in code and dependencies. Given that attackers are already leveraging AI, it’s only natural that AI fortifies virtual security fences.

Automatic Documentation Generation

AI can automatically generate and update documentation, release notes, and change logs based on code changes and commit messages, reducing manual effort and ensuring documentation accuracy.
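Even without AI, a first draft of release notes can be derived from commit messages. The Python sketch below assumes commit subjects follow the Conventional Commits style (for example `fix(cart): handle empty basket`), which is my assumption rather than anything the pipeline guarantees. An AI model could then rewrite the grouped entries into friendlier prose.

```python
import re
from collections import defaultdict

# Assumption: subjects look like "type(scope): summary" or "type: summary".
PATTERN = re.compile(r"^(?P<type>\w+)(?:\((?P<scope>[^)]+)\))?:\s*(?P<summary>.+)$")
SECTIONS = {"feat": "Features", "fix": "Bug Fixes"}
ORDER = ("Features", "Bug Fixes", "Other Changes")


def release_notes(version, commit_subjects):
    """Group commit subjects into plain-text release notes."""
    grouped = defaultdict(list)
    for subject in commit_subjects:
        match = PATTERN.match(subject)
        if match is None:
            grouped["Other Changes"].append(subject)
            continue
        section = SECTIONS.get(match["type"], "Other Changes")
        summary = match["summary"]
        if match["scope"]:
            summary = f"{match['scope']}: {summary}"
        grouped[section].append(summary)

    lines = [f"Release {version}"]
    for section in ORDER:
        if grouped[section]:
            lines += ["", section]
            lines += [f"- {entry}" for entry in grouped[section]]
    return "\n".join(lines)


# Made-up commit subjects, for illustration only.
print(release_notes("2.4.0", [
    "feat(search): add price filters",
    "fix: handle empty cart",
    "Merge branch 'main'",
]))
```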

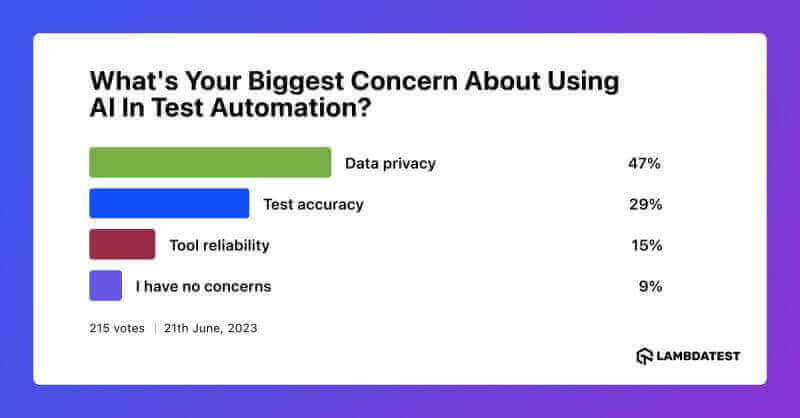

AI is Great, but isn’t Easy

In our journey through AI’s role in testing and quality assurance, it’s vital to consider the views of industry professionals. We conducted a social media poll asking, ‘What’s your biggest concern about using AI in test automation?’ The insights from this poll provide a crucial perspective on the real-world challenges and opportunities of AI in testing.

AI in testing is great, but getting it to work effectively takes a bit of work. We’ll cover this in more detail in a follow-up post, but here’s a short version of some factors to consider before implementing AI-powered tools in your testing process and CI/CD pipeline.

Data Quality and Quantity

The quality of an AI solution depends directly on the data you feed it. Where organizations lack enough high-quality data, AI performance can be suboptimal (or downright dangerous). This limitation can be addressed through data collection and cleaning processes, as well as by utilizing synthetic data generation techniques.

If your organization wants to move in the direction of AI, the first stop is to achieve high data quality. If you haven’t crossed the ‘data hurdle’ successfully, you’ll not go further in your AI implementation journey.
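Crossing the ‘data hurdle’ usually begins with measuring how clean the existing data is. The Python sketch below audits a list of test-result records for missing fields, invalid values, and duplicates. The field names, the allowed result values, and the sample records are all hypothetical.

```python
REQUIRED_FIELDS = ("test_id", "run_id", "module", "result")
VALID_RESULTS = ("pass", "fail", "skip")


def audit(records):
    """Count common data-quality problems in test-result records."""
    issues = {"missing_field": 0, "invalid_result": 0, "duplicate": 0}
    seen = set()
    for record in records:
        # A record with any empty required field is counted once and skipped.
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            issues["missing_field"] += 1
            continue
        if record["result"] not in VALID_RESULTS:
            issues["invalid_result"] += 1
        key = (record["test_id"], record["run_id"])
        if key in seen:
            issues["duplicate"] += 1
        seen.add(key)
    return issues


# Made-up records, for illustration only.
records = [
    {"test_id": "T1", "run_id": 1, "module": "cart", "result": "pass"},
    {"test_id": "T1", "run_id": 1, "module": "cart", "result": "pass"},
    {"test_id": "T2", "run_id": 1, "module": "", "result": "fail"},
    {"test_id": "T3", "run_id": 1, "module": "search", "result": "PASSED"},
]

print(audit(records))
# prints {'missing_field': 1, 'invalid_result': 1, 'duplicate': 1}
```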

Readiness for Change

Introducing AI is not just a technical shift; it’s also a cultural change. It affects how employees work and think. Organizations should have strategies for helping their staff adapt to the new way of doing things.

Without the right change management and support, organizations risk leaving people behind. Even in this age of AI, people are still the core of any organization. AI may eventually change that, but not in the immediate future.

Model Interpretability

Understanding why AI makes certain decisions can be tricky, making it hard to fix problems and explain results.

Organizations can enhance model interpretability by creating AI models that are clear and easy to understand. They can also use interpretability techniques like LIME or SHAP to shed light on the AI’s decision-making process.

Training and Maintenance Costs

The resource-intensive nature of AI model development and maintenance requires careful budgeting and resource allocation. Organizations should evaluate the long-term costs and consider adopting cost-efficient AI development practices.

Dependency on Third-Party Services

Relying on third-party AI services and APIs exposes organizations to potential service disruptions and changes. To mitigate these risks, organizations can explore strategies like in-house AI model development or diversification of third-party service providers.

Wrapping Up

We’ve seen a glimpse of the utopian state that AI could bring to the world of testing and software engineering in general. As AI triggers sweeping changes in how software is created and used, the testing discipline cannot afford to take a ‘wait and watch’ approach.

However, integrating AI systems is not a straightforward process. Organizations must grapple with challenges related to data organization, technical complexities, resistance to change and the need to mitigate the inherent risks associated with AI adoption.
